Run Local RAG using Langchain-Chatchat on Intel CPU and GPU

chatchat-space/Langchain-Chatchat is a knowledge base QA application built on a RAG pipeline. With the port to ipex-llm, users can now easily run local RAG pipelines using Langchain-Chatchat with LLMs and embedding models on Intel CPU and GPU (e.g., a local PC with an iGPU, or a discrete GPU such as Arc, Flex and Max).

See the demos of running LLaMA2-7B (English) and ChatGLM-3-6B (Chinese) on an Intel Core Ultra laptop below.

| English | 简体中文 |
|---|---|
| (demo video) | (demo video) |
| You could also click English or 简体中文 to watch the demo videos. | |

Note

You can change the UI language in the left-side menu. We currently support English and 简体中文 (see video demos above).

Table of Contents

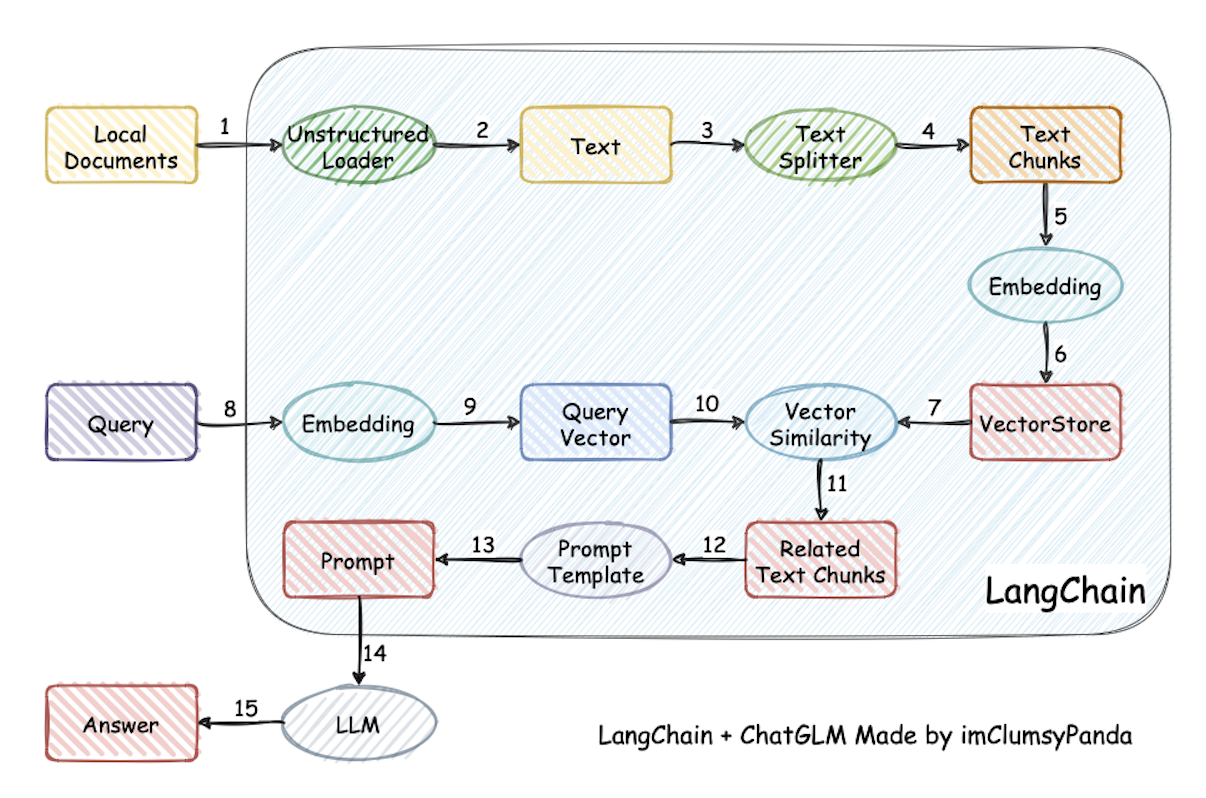

Langchain-Chatchat Architecture

See the Langchain-Chatchat architecture below (source).

Quickstart

Install and Run

Follow the guide that corresponds to your specific system and device from the links provided below:

- For systems with Intel Core Ultra integrated GPU: Windows Guide | Linux Guide

- For systems with Intel Arc A-Series GPU: Windows Guide | Linux Guide

- For systems with Intel Data Center Max Series GPU: Linux Guide

- For systems with Xeon-Series CPU: Linux Guide

How to use RAG

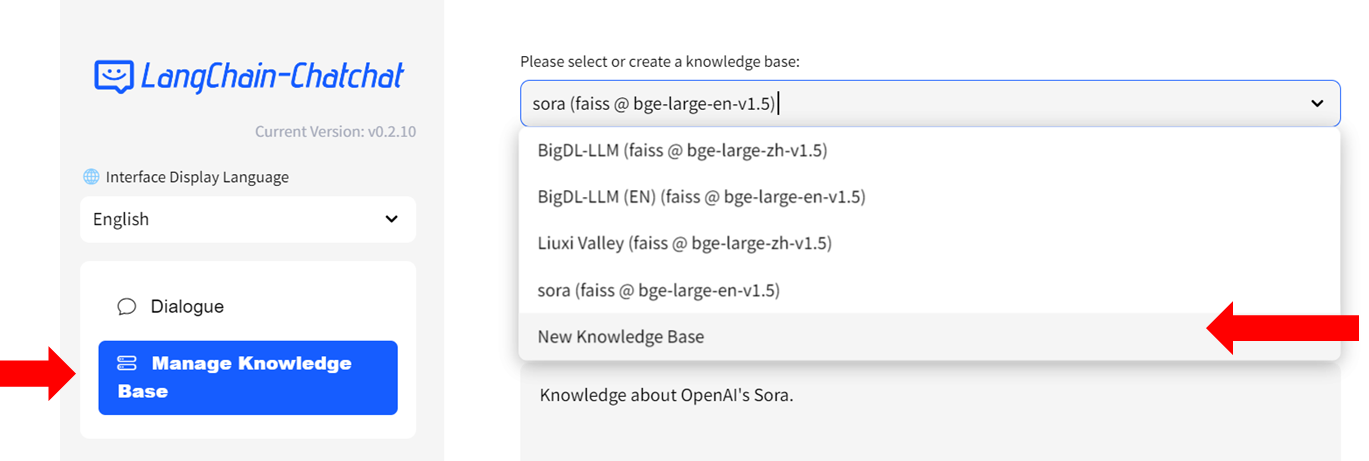

Step 1: Create Knowledge Base

- Select Manage Knowledge Base from the menu on the left, then choose New Knowledge Base from the dropdown menu on the right side.

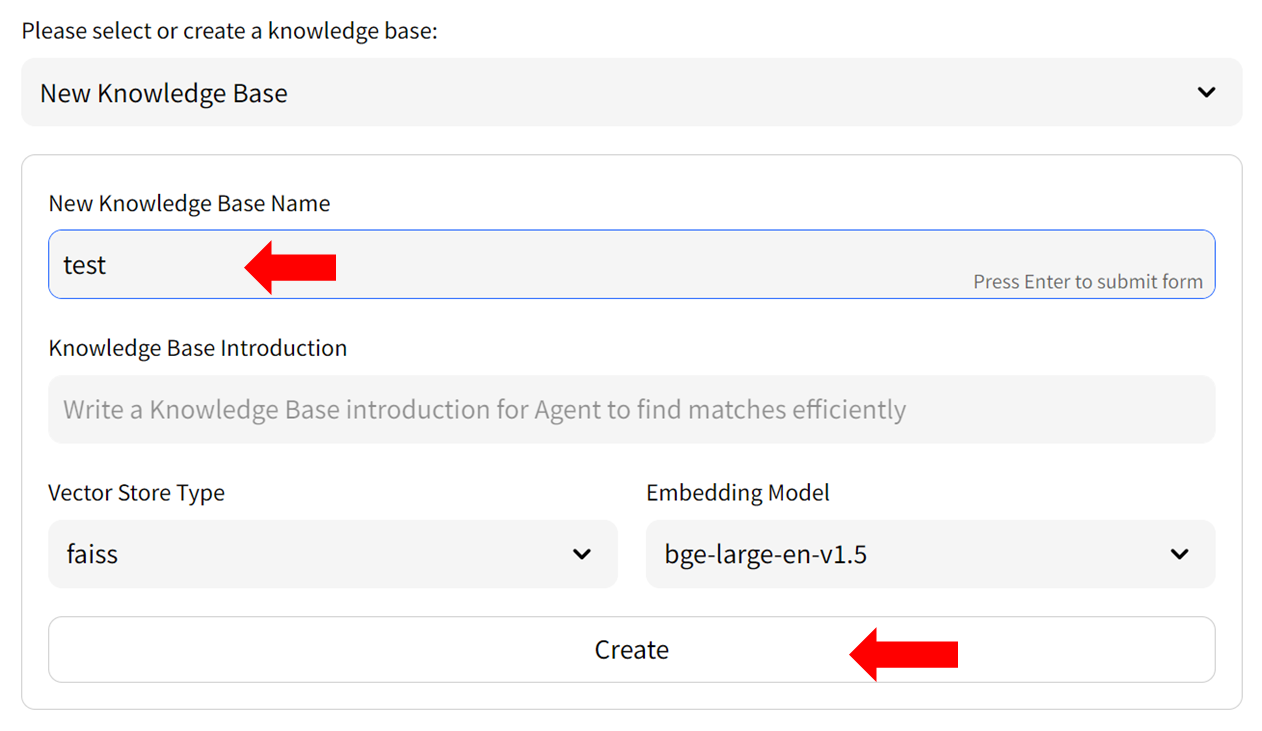

- Fill in the name of your new knowledge base (example: "test") and press the Create button. Adjust any other settings as needed.

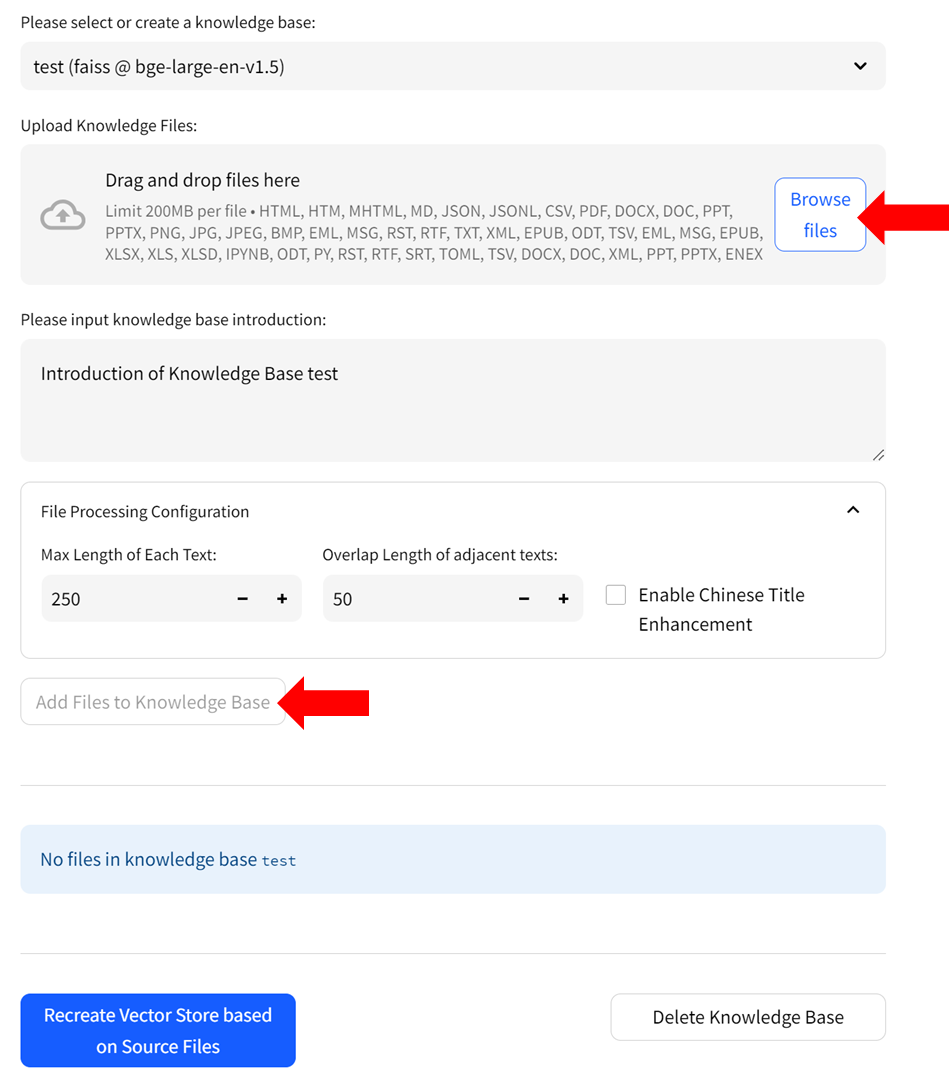

- Upload knowledge files from your computer and allow some time for the upload to complete. Once finished, click the Add files to Knowledge Base button to build the vector store. Note: this process may take several minutes.

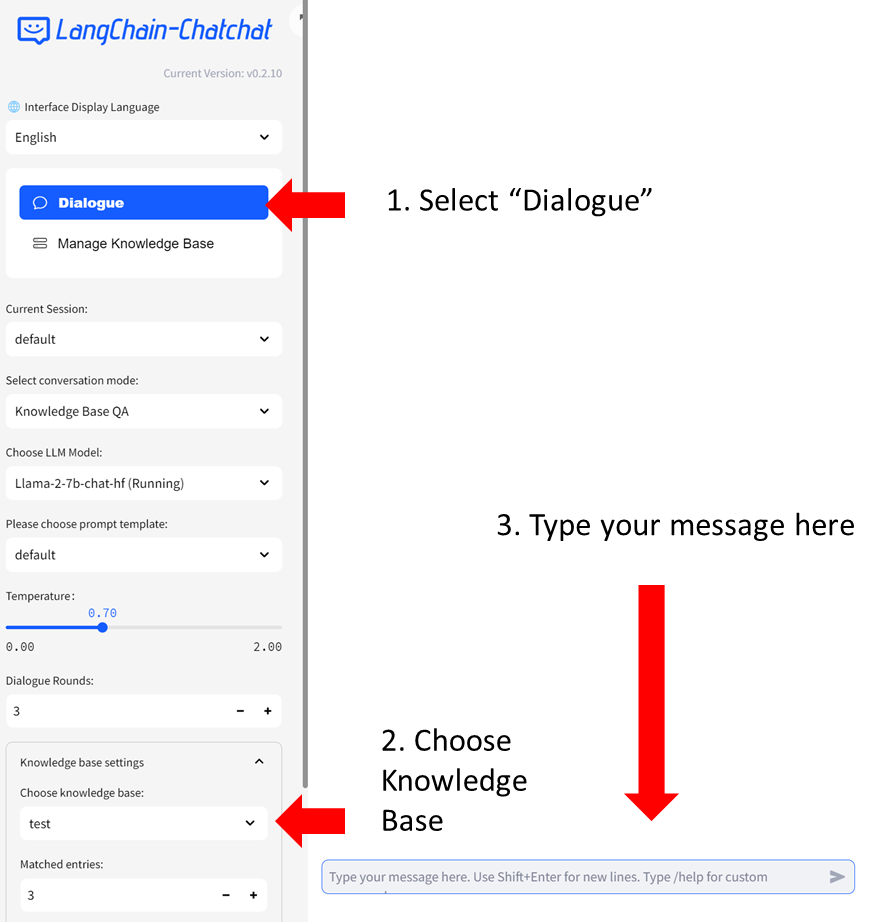
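For readers curious about what "building the vector store" involves, the idea can be sketched in a few lines of Python. This is a conceptual toy under stated assumptions, not Langchain-Chatchat's implementation: the names `chunk_text`, `embed`, and `ToyVectorStore` are hypothetical, and the bag-of-words "embedding" stands in for a real embedding model.

```python
import math
import re
from collections import Counter


def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into overlapping windows of words."""
    if chunk_size <= overlap:
        raise ValueError("chunk_size must be larger than overlap")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, stop, step)]


def embed(text):
    """Toy embedding: lowercase word counts (a real setup uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Keeps (chunk, vector) pairs in memory and ranks them against a query."""

    def __init__(self):
        self.entries = []

    def add_document(self, text, chunk_size=40, overlap=10):
        for chunk in chunk_text(text, chunk_size, overlap):
            self.entries.append((chunk, embed(chunk)))

    def search(self, query, top_k=2):
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


store = ToyVectorStore()
store.add_document("Arc GPUs are discrete graphics cards from Intel.")
store.add_document("A knowledge base stores uploaded files as searchable chunks.")
best_match = store.search("What is a knowledge base?", top_k=1)
```

Each uploaded file is split into chunks, each chunk is turned into a vector, and a question is later answered by retrieving the closest chunks. Embedding every chunk with a real model is the step that makes the build take several minutes on large files.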

Step 2: Chat with RAG

You can now click Dialogue in the left-side menu to return to the chat UI. Then, in the Knowledge base settings menu, choose the Knowledge Base you just created, e.g., "test". Now you can start chatting.

For more information about how to use Langchain-Chatchat, refer to the official Quickstart guide in English or Chinese, or to the Wiki.

Troubleshooting & Tips

1. Version Compatibility

Ensure that you have installed ipex-llm>=2.1.0b20240327. To upgrade ipex-llm, run:

pip install --pre --upgrade ipex-llm[xpu] -f https://developer.intel.com/ipex-whl-stable-xpu
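To confirm that the installed version meets this minimum, a small check along the following lines may help. It is a minimal sketch: `version_key` and `meets_minimum` are illustrative helpers, not part of ipex-llm, and the parser only handles version strings shaped like 2.1.0 or 2.1.0b20240327.

```python
import re
from importlib import metadata

MIN_VERSION = "2.1.0b20240327"  # minimum version stated in this guide

# Pre-release kinds in ascending order; a final release sorts after all of them.
_PRE_ORDER = {"a": 0, "b": 1, "rc": 2}


def version_key(version):
    """Return a sortable key for strings like '2.1.0' or '2.1.0b20240327'."""
    match = re.fullmatch(r"(\d+(?:\.\d+)*)(?:(a|b|rc)(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unsupported version string: {version!r}")
    release = [int(part) for part in match.group(1).split(".")]
    while release and release[-1] == 0:  # treat 2.1 and 2.1.0 as equal
        release.pop()
    if match.group(2) is None:
        pre = (3, 0)  # final release
    else:
        pre = (_PRE_ORDER[match.group(2)], int(match.group(3)))
    return tuple(release), pre


def meets_minimum(installed, minimum=MIN_VERSION):
    """True if the installed version is at least the minimum."""
    return version_key(installed) >= version_key(minimum)


if __name__ == "__main__":
    try:
        found = metadata.version("ipex-llm")
    except metadata.PackageNotFoundError:
        print("ipex-llm is not installed in this environment")
    else:
        try:
            status = "OK" if meets_minimum(found) else "too old, please upgrade"
        except ValueError:
            status = "version format not handled by this sketch"
        print(f"ipex-llm {found}: {status}")
```

Run it inside the same Python environment that launches Langchain-Chatchat, since the result reflects whichever environment the script runs in.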

2. Prompt Templates

In the left-side menu, you can choose a prompt template. There are several pre-defined templates: those ending with '_cn' are Chinese templates, and those ending with '_en' are English templates. You can also define your own prompt templates in configs/prompt_config.py. Remember to restart the service for these changes to take effect.
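The '_cn' / '_en' naming convention can be illustrated with a short sketch. Everything here is an assumption for illustration: the `PROMPT_TEMPLATES` table, the template names, the `{context}` / `{question}` placeholder syntax, and both helper functions are hypothetical, and the actual layout of configs/prompt_config.py may differ, so check that file before adding your own entries.

```python
# Hypothetical template table; the real configs/prompt_config.py may be organized differently.
PROMPT_TEMPLATES = {
    "default_en": (
        "Answer the question using only the context below.\n\n"
        "Context:\n{context}\n\nQuestion: {question}"
    ),
    "concise_en": (
        "Reply in one sentence, based only on this context.\n\n"
        "Context:\n{context}\n\nQuestion: {question}"
    ),
    "default_cn": "请仅根据已知信息回答问题。\n\n已知信息:\n{context}\n\n问题:{question}",
}


def templates_for_language(templates, language):
    """List template names carrying the given language suffix, e.g. 'en' or 'cn'."""
    suffix = f"_{language}"
    return sorted(name for name in templates if name.endswith(suffix))


def render(template_name, context, question, templates=PROMPT_TEMPLATES):
    """Fill a template with the retrieved context and the user's question."""
    return templates[template_name].format(context=context, question=question)


english_names = templates_for_language(PROMPT_TEMPLATES, "en")
prompt = render(
    "default_en",
    context="RAG retrieves relevant chunks before generating an answer.",
    question="What is RAG?",
)
```

Keeping the language suffix on any template you add makes it easy to tell at a glance which UI language it is meant for.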