

BigDL-LLM Portable Zip For Windows: User Guide

Introduction

This portable zip includes everything you need to run an LLM with BigDL-LLM optimizations (except the models). Refer to the How to use section to get started.

A 13B model running on an 11th Gen Intel Core PC (real-time screen capture)

Verified Models

- ChatGLM2-6b

- Baichuan-13B-Chat

- Baichuan2-7B-Chat

- internlm-chat-7b-8k

- Llama-2-7b-chat-hf

How to use

- Download the zip from the link here.

- (Optional) You can also build the zip yourself: run `setup.bat` and it will generate the zip file.

- Unzip `bigdl-llm.zip`.

- Download the model to your computer. Make sure the model folder contains a file named `config.json`; otherwise the script won't work.

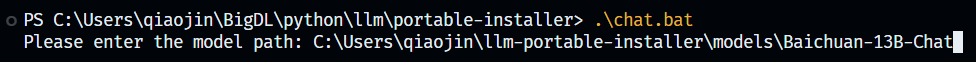

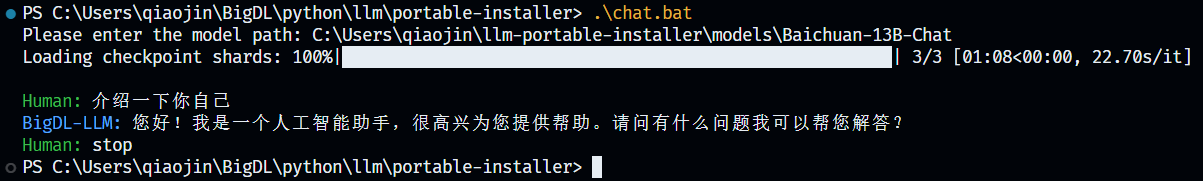
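Before launching the chat script, you can confirm that a model folder meets the `config.json` requirement. The following is a minimal Python sketch, not part of the portable zip: `check_model_dir` is a hypothetical helper. It assumes a Hugging Face Transformers-style folder with `config.json` at its top level, and it only checks that the file exists, not that the model itself is valid.

```python
from pathlib import Path


def check_model_dir(model_dir: str) -> bool:
    """Return True if model_dir is an existing folder containing config.json.

    Hypothetical helper for illustration only; it is not shipped in the zip.
    """
    folder = Path(model_dir)
    # Assumption: the model folder follows the Hugging Face Transformers
    # layout, with config.json directly inside it (not in a subfolder).
    return folder.is_dir() and (folder / "config.json").is_file()
```

For example, `check_model_dir(r"C:\models\Llama-2-7b-chat-hf")` returns `False` if that folder is missing or has no `config.json` at its top level.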

- Go into the unzipped folder and double-click `chat.bat`. Enter the path of the model (e.g. `path\to\model`; note that there is no slash at the end of the path). Press Enter and wait until the model finishes loading. Then enjoy chatting with the model!

- To stop chatting, enter `stop` and the model will stop running.
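The path and `stop` conventions above can be illustrated with a small Python sketch. Both functions are hypothetical and are not taken from the zip's own scripts: `normalize_model_path` removes surrounding whitespace and any trailing slash or backslash from a typed path, and `chat_loop` answers each prompt with a caller-supplied `reply` function (a stand-in for the model's generation step) until the user enters `stop`.

```python
from typing import Callable, Iterable, List


def normalize_model_path(raw: str) -> str:
    """Hypothetical helper: drop surrounding whitespace and trailing slashes."""
    # The guide asks for a path with no slash at the end, e.g. path\to\model.
    return raw.strip().rstrip("\\/")


def chat_loop(inputs: Iterable[str], reply: Callable[[str], str]) -> List[str]:
    """Hypothetical loop: answer each prompt until the user types "stop".

    `reply` stands in for the model's text generation call.
    """
    answers: List[str] = []
    for prompt in inputs:
        if prompt.strip() == "stop":
            # Entering "stop" ends the session; later input is ignored.
            break
        answers.append(reply(prompt))
    return answers
```

In an interactive console, `inputs` could be `iter(input, None)`, which keeps calling `input()` until the loop breaks on `stop`.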