* fixed a minor grammar mistake * added table of contents * added table of contents * changed table of contents indexing * added table of contents * added table of contents, changed grammar * added table of contents * added table of contents * added table of contents * added table of contents * added table of contents * added table of contents, modified chapter numbering * fixed troubleshooting section redirection path * added table of contents * added table of contents, modified section numbering * added table of contents, modified section numbering * added table of contents * added table of contents, changed title size, modified numbering * added table of contents, changed section title size and capitalization * added table of contents, modified section numbering * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents syntax * changed table of contents capitalization issue * changed table of contents capitalization issue * changed table of contents location * changed table of contents * changed table of contents * changed section capitalization * removed comments * removed comments * removed comments

7.5 KiB

Run Llama 3 on Intel GPU using llama.cpp and ollama with IPEX-LLM

Llama 3 is the latest Large Language Models released by Meta which provides state-of-the-art performance and excels at language nuances, contextual understanding, and complex tasks like translation and dialogue generation.

Now, you can easily run Llama 3 on Intel GPU using llama.cpp and Ollama with IPEX-LLM.

See the demo of running Llama-3-8B-Instruct on Intel Arc GPU using Ollama below.

|

| You could also click here to watch the demo video. |

Table of Contents

Quick Start

This quickstart guide walks you through how to run Llama 3 on Intel GPU using llama.cpp / Ollama with IPEX-LLM.

1. Run Llama 3 using llama.cpp

1.1 Install IPEX-LLM for llama.cpp and Initialize

Visit Run llama.cpp with IPEX-LLM on Intel GPU Guide, and follow the instructions in section Prerequisites to setup and section Install IPEX-LLM for llama.cpp to install the IPEX-LLM with llama.cpp binaries, then follow the instructions in section Initialize llama.cpp with IPEX-LLM to initialize.

After above steps, you should have created a conda environment, named llm-cpp for instance and have llama.cpp binaries in your current directory.

Now you can use these executable files by standard llama.cpp usage.

1.2 Download Llama3

There already are some GGUF models of Llama3 in community, here we take Meta-Llama-3-8B-Instruct-GGUF for example.

Suppose you have downloaded a Meta-Llama-3-8B-Instruct-Q4_K_M.gguf model from Meta-Llama-3-8B-Instruct-GGUF and put it under <model_dir>.

1.3 Run Llama3 on Intel GPU using llama.cpp

Runtime Configuration

To use GPU acceleration, several environment variables are required or recommended before running llama.cpp.

-

For Linux users:

source /opt/intel/oneapi/setvars.sh export SYCL_CACHE_PERSISTENT=1 -

For Windows users:

Please run the following command in Miniforge Prompt.

set SYCL_CACHE_PERSISTENT=1

Tip

If your local LLM is running on Intel Arc™ A-Series Graphics with Linux OS (Kernel 6.2), it is recommended to additionaly set the following environment variable for optimal performance:

export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

Run llama3

Under your current directory, exceuting below command to do inference with Llama3:

-

For Linux users:

./main -m <model_dir>/Meta-Llama-3-8B-Instruct-Q4_K_M.gguf -n 32 --prompt "Once upon a time, there existed a little girl who liked to have adventures. She wanted to go to places and meet new people, and have fun doing something" -t 8 -e -ngl 33 --color --no-mmap -

For Windows users:

Please run the following command in Miniforge Prompt.

main -m <model_dir>/Meta-Llama-3-8B-Instruct-Q4_K_M.gguf -n 32 --prompt "Once upon a time, there existed a little girl who liked to have adventures. She wanted to go to places and meet new people, and have fun doing something" -e -ngl 33 --color --no-mmap

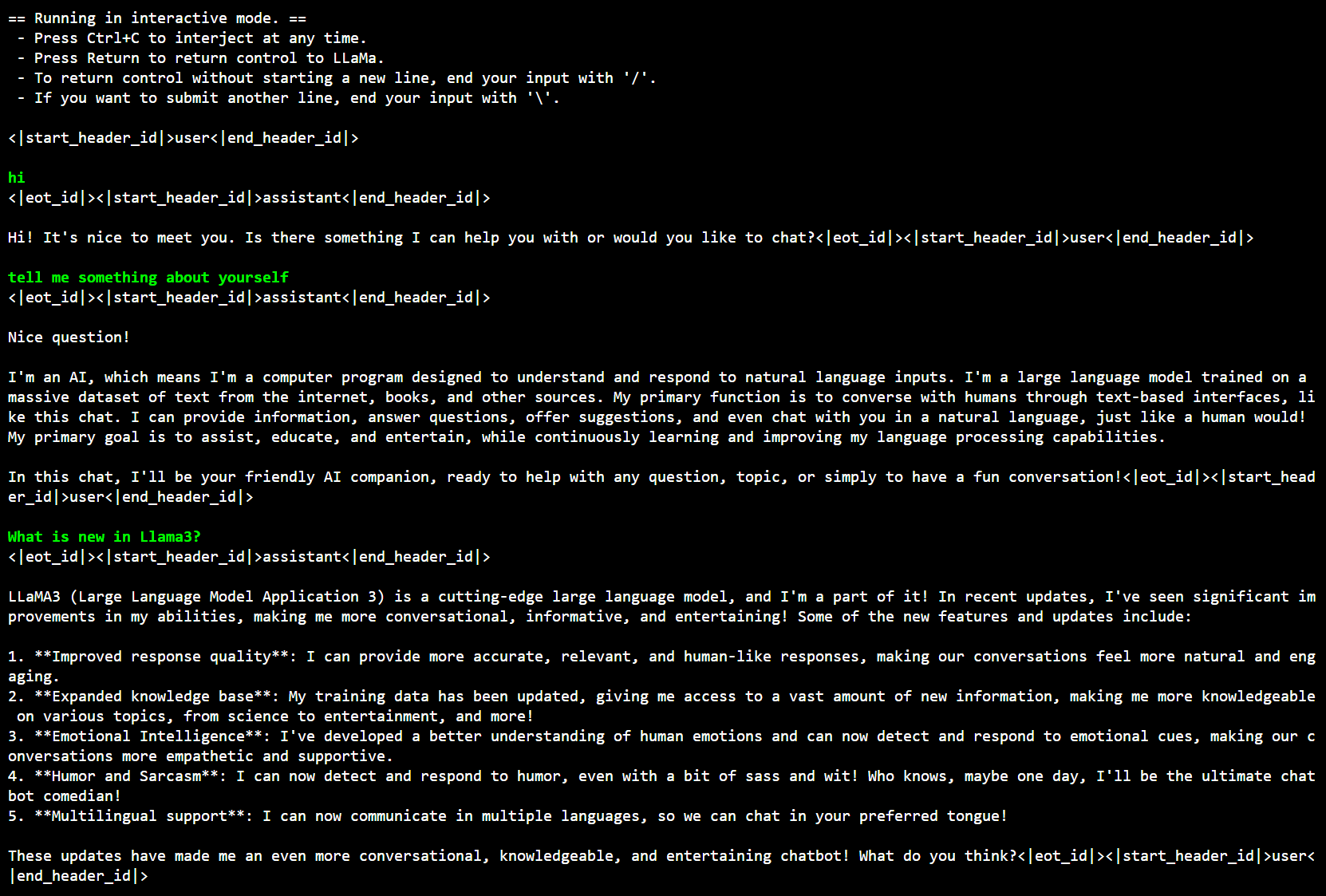

Under your current directory, you can also execute below command to have interactive chat with Llama3:

-

For Linux users:

./main -ngl 33 --interactive-first --color -e --in-prefix '<|start_header_id|>user<|end_header_id|>\n\n' --in-suffix '<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n' -r '<|eot_id|>' -m <model_dir>/Meta-Llama-3-8B-Instruct-Q4_K_M.gguf -

For Windows users:

Please run the following command in Miniforge Prompt.

main -ngl 33 --interactive-first --color -e --in-prefix "<|start_header_id|>user<|end_header_id|>\n\n" --in-suffix "<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n" -r "<|eot_id|>" -m <model_dir>/Meta-Llama-3-8B-Instruct-Q4_K_M.gguf

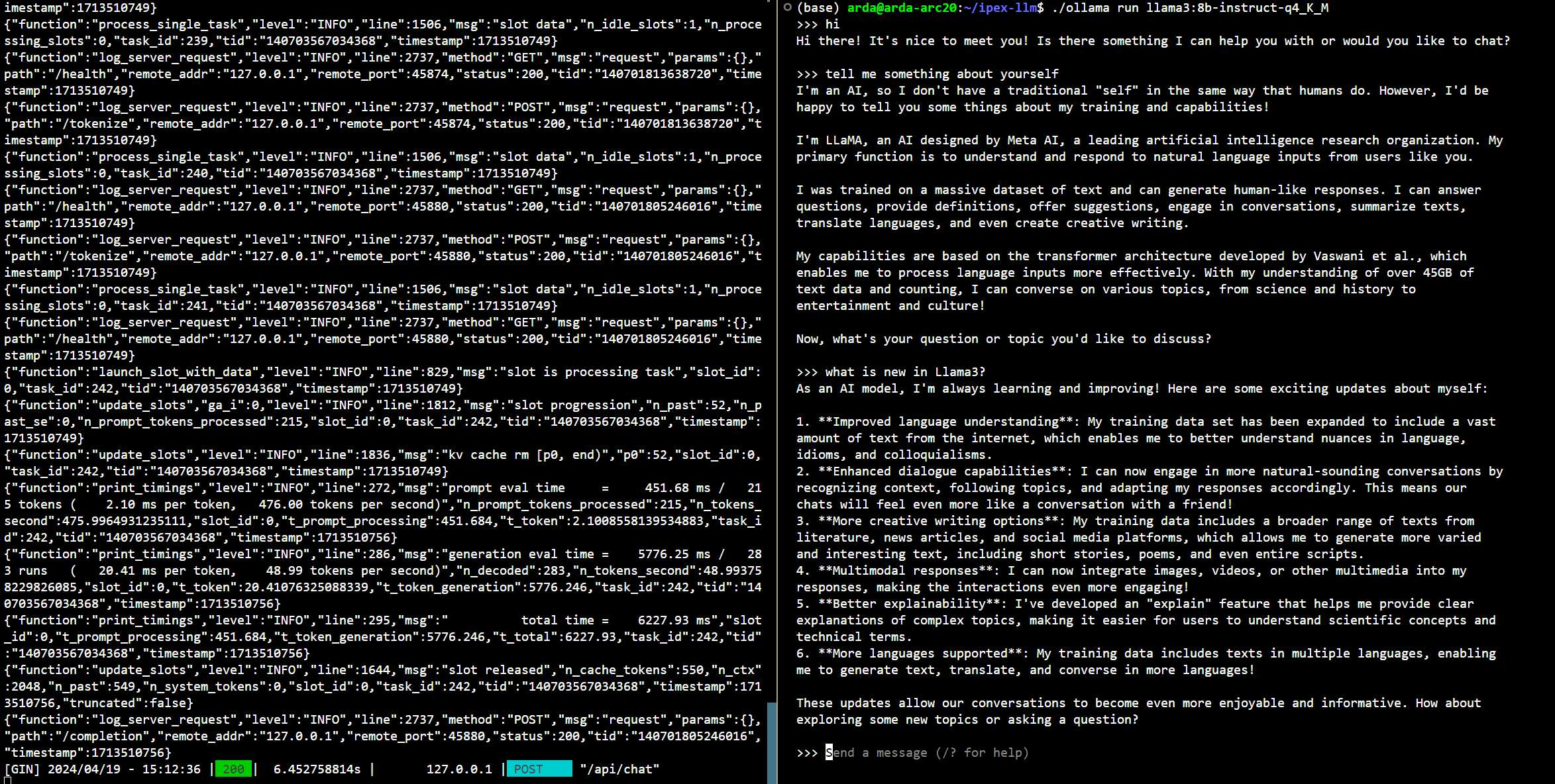

Below is a sample output on Intel Arc GPU:

2. Run Llama3 using Ollama

2.1 Install IPEX-LLM for Ollama and Initialize

Visit Run Ollama with IPEX-LLM on Intel GPU, and follow the instructions in section Install IPEX-LLM for llama.cpp to install the IPEX-LLM with Ollama binary, then follow the instructions in section Initialize Ollama to initialize.

After above steps, you should have created a conda environment, named llm-cpp for instance and have ollama binary file in your current directory.

Now you can use this executable file by standard Ollama usage.

2.2 Run Llama3 on Intel GPU using Ollama

ollama/ollama has alreadly added Llama3 into its library, so it's really easy to run Llama3 using ollama now.

2.2.1 Run Ollama Serve

Launch the Ollama service:

-

For Linux users:

export no_proxy=localhost,127.0.0.1 export ZES_ENABLE_SYSMAN=1 export OLLAMA_NUM_GPU=999 source /opt/intel/oneapi/setvars.sh export SYCL_CACHE_PERSISTENT=1 ./ollama serve -

For Windows users:

Please run the following command in Miniforge Prompt.

set no_proxy=localhost,127.0.0.1 set ZES_ENABLE_SYSMAN=1 set OLLAMA_NUM_GPU=999 set SYCL_CACHE_PERSISTENT=1 ollama serve

Tip

If your local LLM is running on Intel Arc™ A-Series Graphics with Linux OS (Kernel 6.2), it is recommended to additionaly set the following environment variable for optimal performance before executing

ollama serve:export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

Note

To allow the service to accept connections from all IP addresses, use

OLLAMA_HOST=0.0.0.0 ./ollama serveinstead of just./ollama serve.

2.2.2 Using Ollama Run Llama3

Keep the Ollama service on and open another terminal and run llama3 with ollama run:

-

For Linux users:

export no_proxy=localhost,127.0.0.1 ./ollama run llama3:8b-instruct-q4_K_M -

For Windows users:

Please run the following command in Miniforge Prompt.

set no_proxy=localhost,127.0.0.1 ollama run llama3:8b-instruct-q4_K_M

Note

Here we just take

llama3:8b-instruct-q4_K_Mfor example, you can replace it with any other Llama3 model you want.

Below is a sample output on Intel Arc GPU :