From f239bc329b322593d01ec5003d84d07c59a3c470 Mon Sep 17 00:00:00 2001

From: =?UTF-8?q?Cheen=20Hau=2C=20=E4=BF=8A=E8=B1=AA?= <33478814+chtanch@users.noreply.github.com>

Date: Wed, 27 Mar 2024 17:58:57 +0800

Subject: [PATCH] Specify oneAPI minor version in documentation (#10561)

---

docs/readthedocs/source/doc/LLM/Overview/install_gpu.md | 2 +-

docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md | 2 +-

.../source/doc/LLM/Quickstart/install_windows_gpu.md | 2 +-

python/llm/example/GPU/vLLM-Serving/README.md | 2 +-

4 files changed, 4 insertions(+), 4 deletions(-)

diff --git a/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md b/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

index 1f1de081..b46f39c2 100644

--- a/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

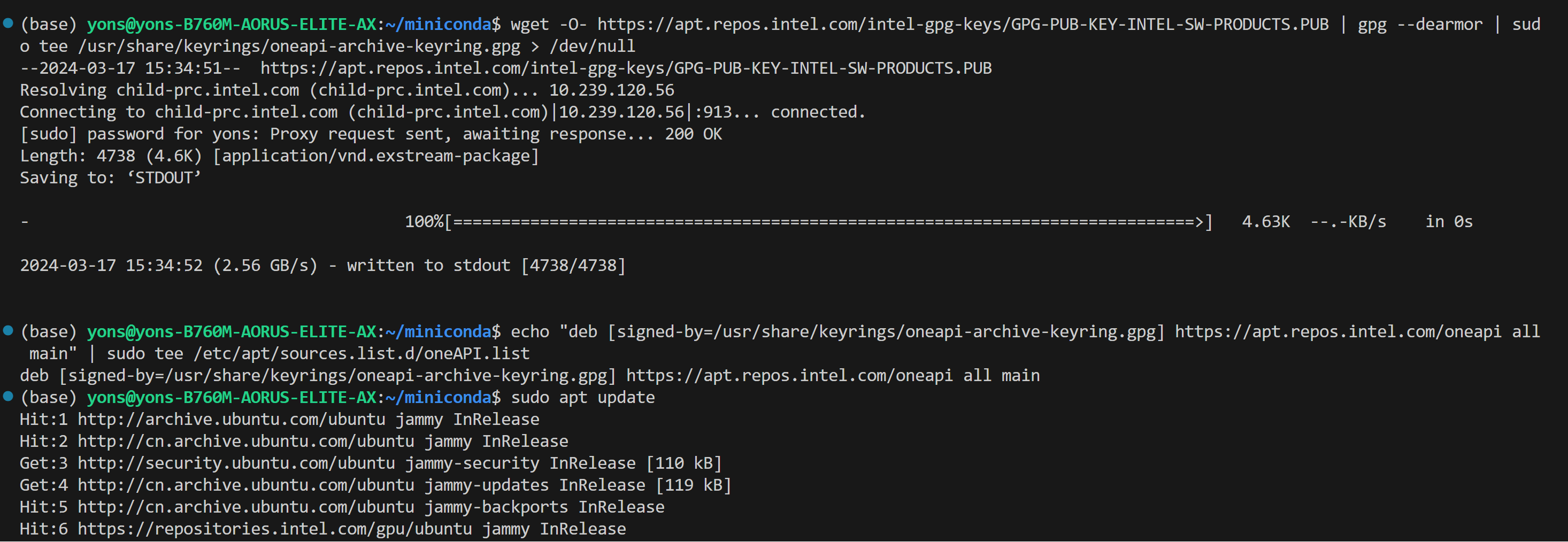

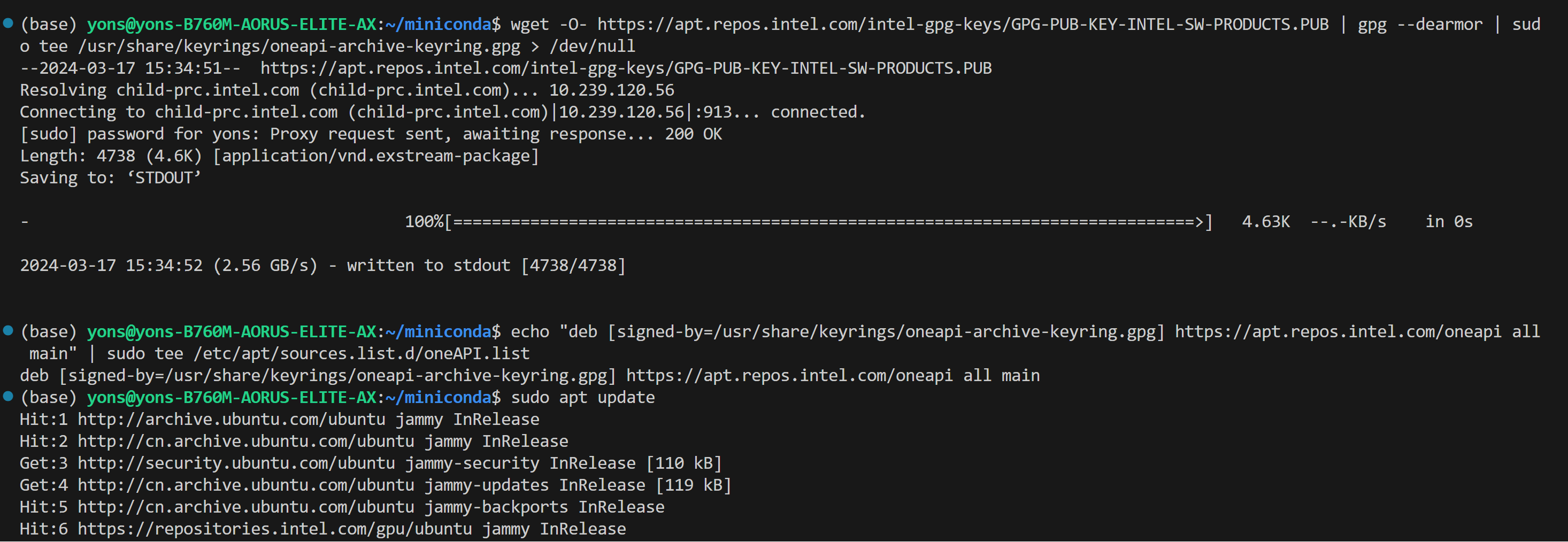

@@ -239,7 +239,7 @@ IPEX-LLM GPU support on Linux has been verified on:

.. code-block:: bash

- sudo apt install -y intel-basekit

+ sudo apt install -y intel-basekit=2024.0.1-43

.. note::

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

index 357e35d8..fb508bd1 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

@@ -65,7 +65,7 @@ IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and sup

sudo apt update

- sudo apt install intel-basekit

+ sudo apt install intel-basekit=2024.0.1-43

```

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index eceb34e6..523d5aba 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -39,7 +39,7 @@ Download and install the latest GPU driver from the [official Intel download pag

pip install dpcpp-cpp-rt==2024.0.2 mkl-dpcpp==2024.0.0 onednn==2024.0.0

``` -->

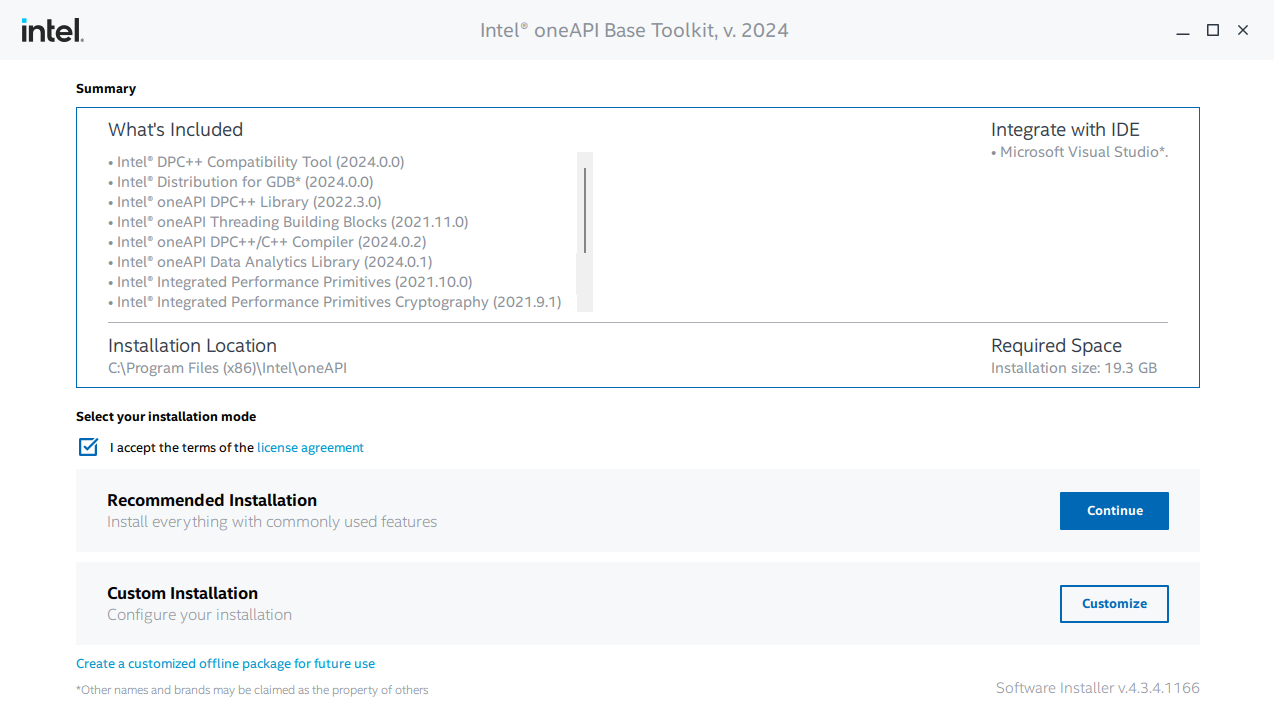

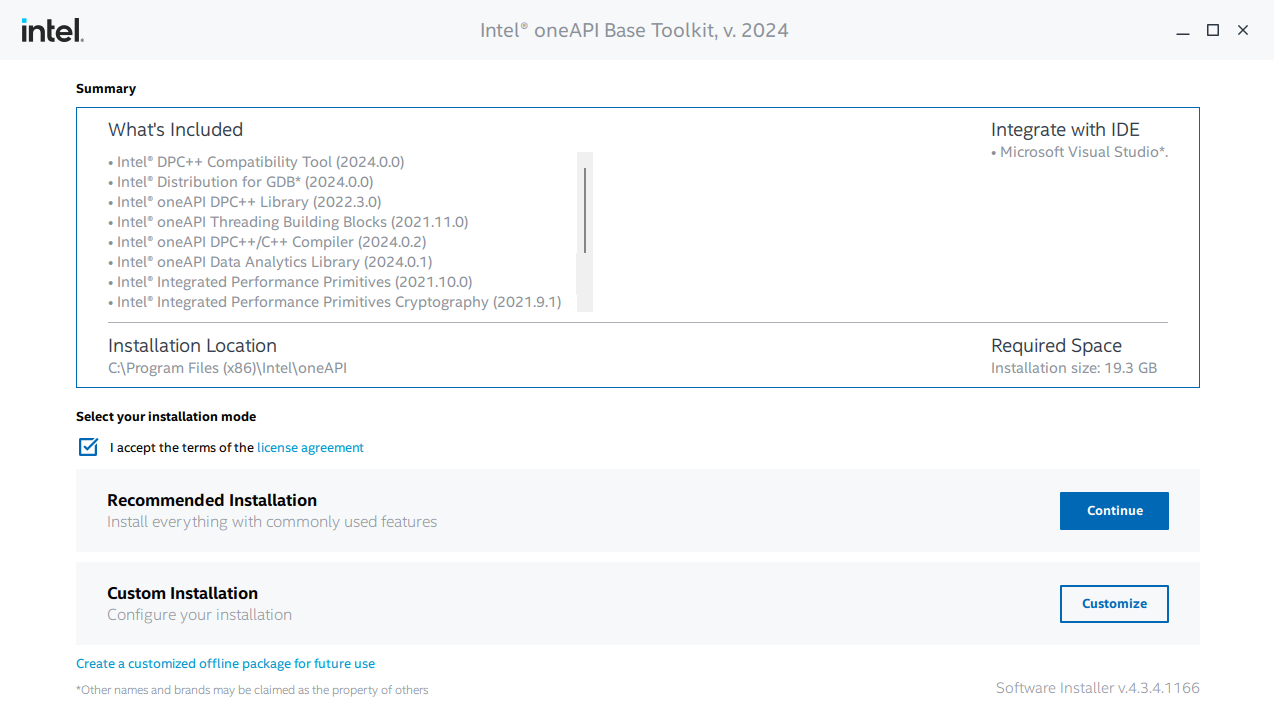

-Download and install the [**Intel oneAPI Base Toolkit**](https://www.intel.com/content/www/us/en/developer/tools/oneapi/base-toolkit-download.html?operatingsystem=window&distributions=offline). During installation, you can continue with the default installation settings.

+Download and install the [**Intel oneAPI Base Toolkit 2024.0**](https://www.intel.com/content/www/us/en/developer/tools/oneapi/base-toolkit-download.html?operatingsystem=window&distributions=offline). During installation, you can continue with the default installation settings.

diff --git a/python/llm/example/GPU/vLLM-Serving/README.md b/python/llm/example/GPU/vLLM-Serving/README.md

index 301d884d..43ff04fc 100644

--- a/python/llm/example/GPU/vLLM-Serving/README.md

+++ b/python/llm/example/GPU/vLLM-Serving/README.md

@@ -10,7 +10,7 @@ In this example, we will run Llama2-7b model using Arc A770 and provide `OpenAI-

### 0. Environment

-To use Intel GPUs for deep-learning tasks, you should install the XPU driver and the oneAPI Base Toolkit. Please check the requirements at [here](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/GPU#requirements).

+To use Intel GPUs for deep-learning tasks, you should install the XPU driver and the oneAPI Base Toolkit 2024.0. Please check the requirements at [here](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/GPU#requirements).

After install the toolkit, run the following commands in your environment before starting vLLM GPU:

```bash
