diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/bigdl_llm_migration.md b/docs/readthedocs/source/doc/LLM/Quickstart/bigdl_llm_migration.md

index 4f32cc87..afc79586 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/bigdl_llm_migration.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/bigdl_llm_migration.md

@@ -1,11 +1,36 @@

# `bigdl-llm` Migration Guide

+

+This guide helps you migrate your `bigdl-llm` application to use `ipex-llm`.

+

## Upgrade `bigdl-llm` package to `ipex-llm`

-First uninstall `bigdl-llm` and install `ipex-llm`.

+

+```eval_rst

+.. note::

+ This step assumes you have already installed `bigdl-llm`.

+```

+You need to uninstall `bigdl-llm` and install `ipex-llm`. With your `bigdl-llm` conda environment activated, execute the following commands according to your device type and location:

+

+### For CPU

+

```bash

pip uninstall -y bigdl-llm

pip install --pre --upgrade ipex-llm[all] # for cpu

-pip install --pre --upgrade ipex-llm[xpu] -f https://developer.intel.com/ipex-whl-stable-xpu # for xpu

```

+

+### For GPU

+```eval_rst

+.. tabs::

+ .. tab:: US

+ .. code-block:: cmd

+ pip uninstall -y bigdl-llm

+ pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

+ .. tab:: CN

+ .. code-block:: cmd

+ pip uninstall -y bigdl-llm

+ pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

+```
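The CPU and GPU (US/CN) commands above differ only in the package extra and the index URL. The sketch below collects them in one place. `migration_commands` and `XPU_INDEX` are hypothetical names used only here; they are not part of `ipex-llm`. The extras and URLs are copied from the commands in this guide.

```python
# Hypothetical helper (not part of ipex-llm): maps device/region to the
# pip commands listed in this migration guide.
XPU_INDEX = {
    "us": "https://pytorch-extension.intel.com/release-whl/stable/xpu/us/",
    "cn": "https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/",
}


def migration_commands(device: str, region: str = "us") -> list:
    """Return the pip commands that replace bigdl-llm with ipex-llm."""
    commands = ["pip uninstall -y bigdl-llm"]
    if device == "cpu":
        commands.append("pip install --pre --upgrade ipex-llm[all]")
    elif device == "gpu":
        if region not in XPU_INDEX:
            raise ValueError(f"unknown region: {region!r}")
        commands.append(
            "pip install --pre --upgrade ipex-llm[xpu] "
            f"--extra-index-url {XPU_INDEX[region]}"
        )
    else:
        raise ValueError(f"unknown device: {device!r}")
    return commands


for line in migration_commands("gpu", "cn"):
    print(line)
```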

+

+

## Migrate `bigdl-llm` code to `ipex-llm`

There are two options to migrate `bigdl-llm` code to `ipex-llm`.

@@ -13,8 +38,8 @@ There are two options to migrate `bigdl-llm` code to `ipex-llm`.

To upgrade `bigdl-llm` code to `ipex-llm`, simply replace all `bigdl.llm` with `ipex_llm`:

```python

-#from bigdl.llm.transformers import AutoModelForCausalLM

-from ipex_llm.transformers import AutoModelForCausalLM

+# from bigdl.llm.transformers import AutoModelForCausalLM  # Original line

+from ipex_llm.transformers import AutoModelForCausalLM  # Updated line

model = AutoModelForCausalLM.from_pretrained(model_path,

load_in_4bit=True,

trust_remote_code=True)
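The "replace all `bigdl.llm` with `ipex_llm`" step can be scripted. This is a minimal sketch that assumes you want to rewrite source text in bulk. `migrate_source` is a hypothetical helper. Because the replacement is purely textual, it also changes matching text inside comments and strings. The pip package name `bigdl-llm` (with a hyphen) is intentionally left untouched.

```python
import re

# Match "bigdl.llm" only as a whole dotted name, e.g. in
# "from bigdl.llm.transformers import ..." or "import bigdl.llm".
_BIGDL_LLM = re.compile(r"\bbigdl\.llm\b")


def migrate_source(source: str) -> str:
    """Replace every `bigdl.llm` module reference with `ipex_llm`."""
    return _BIGDL_LLM.sub("ipex_llm", source)


old = "from bigdl.llm.transformers import AutoModelForCausalLM\n"
print(migrate_source(old), end="")
# prints: from ipex_llm.transformers import AutoModelForCausalLM
```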

@@ -24,8 +49,7 @@ model = AutoModelForCausalLM.from_pretrained(model_path,

To run in the compatible mode, simply add `import ipex_llm` at the beginning of the existing `bigdl-llm` code:

```python

-# need to add the below line before "import bigdl.llm"

-import ipex_llm

+import ipex_llm # Add this line before any bigdl.llm imports

from bigdl.llm.transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(model_path,

load_in_4bit=True,
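In compatible mode, `import ipex_llm` has to run before any `bigdl.llm` import. The following sketch uses Python's standard `ast` module to check that ordering in an existing file. `ipex_llm_imported_first` is a hypothetical helper. It only inspects top-level `import` / `from ... import` statements, so imports inside functions and forms such as `from bigdl import llm` are not detected.

```python
import ast


def ipex_llm_imported_first(source: str) -> bool:
    """Return True if `import ipex_llm` precedes every bigdl.llm import."""
    seen_ipex_llm = False
    for node in ast.parse(source).body:  # top-level statements only
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            modules = [node.module or ""]  # relative imports have no module
        else:
            continue
        for module in modules:
            if isinstance(node, ast.Import) and module == "ipex_llm":
                seen_ipex_llm = True
            elif module == "bigdl.llm" or module.startswith("bigdl.llm."):
                if not seen_ipex_llm:
                    return False
    return True


code = "import ipex_llm\nfrom bigdl.llm.transformers import AutoModelForCausalLM\n"
print(ipex_llm_imported_first(code))
```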

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

index a800f127..357e35d8 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

@@ -4,10 +4,11 @@ This guide demonstrates how to install IPEX-LLM on Linux with Intel GPUs. It app

IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and supports PyTorch 2.0 and PyTorch 2.1 on Linux. This page demonstrates IPEX-LLM with PyTorch 2.1. Check the [Installation](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#linux) page for more details.

+## Install Prerequisites

-## Install Intel GPU Driver

+### Install GPU Driver

-### For Linux kernel 6.2

+#### For Linux kernel 6.2

* Install arc driver

```bash

@@ -24,15 +25,12 @@ IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and sup

```bash

sudo apt-get update

-

sudo apt-get -y install \

gawk \

dkms \

linux-headers-$(uname -r) \

libc6-dev

-

sudo apt install intel-i915-dkms intel-fw-gpu

-

sudo apt-get install -y gawk libc6-dev udev \

intel-opencl-icd intel-level-zero-gpu level-zero \

intel-media-va-driver-non-free libmfx1 libmfxgen1 libvpl2 \

@@ -59,24 +57,7 @@ IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and sup

```

-## Setup Python Environment

-

-Install the Miniconda as follows if you don't have conda installed on your machine:

- ```bash

- wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh

-

- bash Miniconda3-latest-Linux-x86_64.sh

-

- source ~/.bashrc

-

- # Verify the installation

- conda --version

- # rm Miniconda3-latest-Linux-x86_64.sh # if you don't need this file any longer

- ```


-

-## Install oneAPI

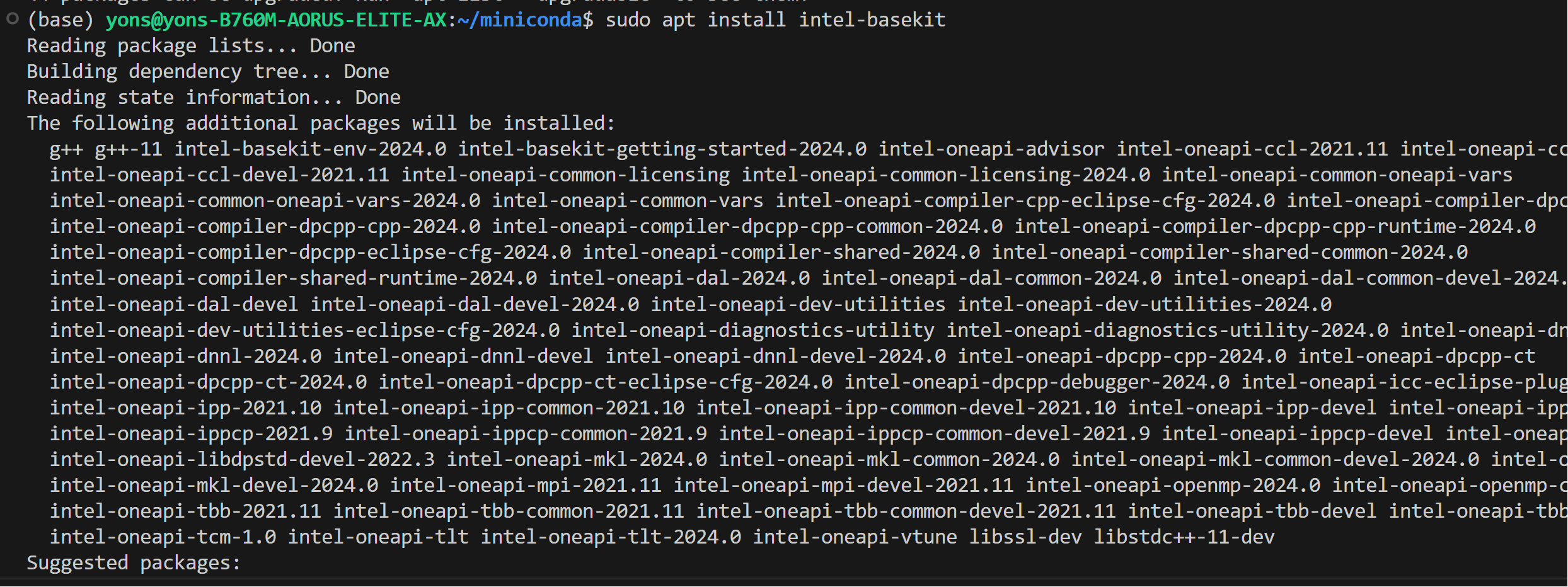

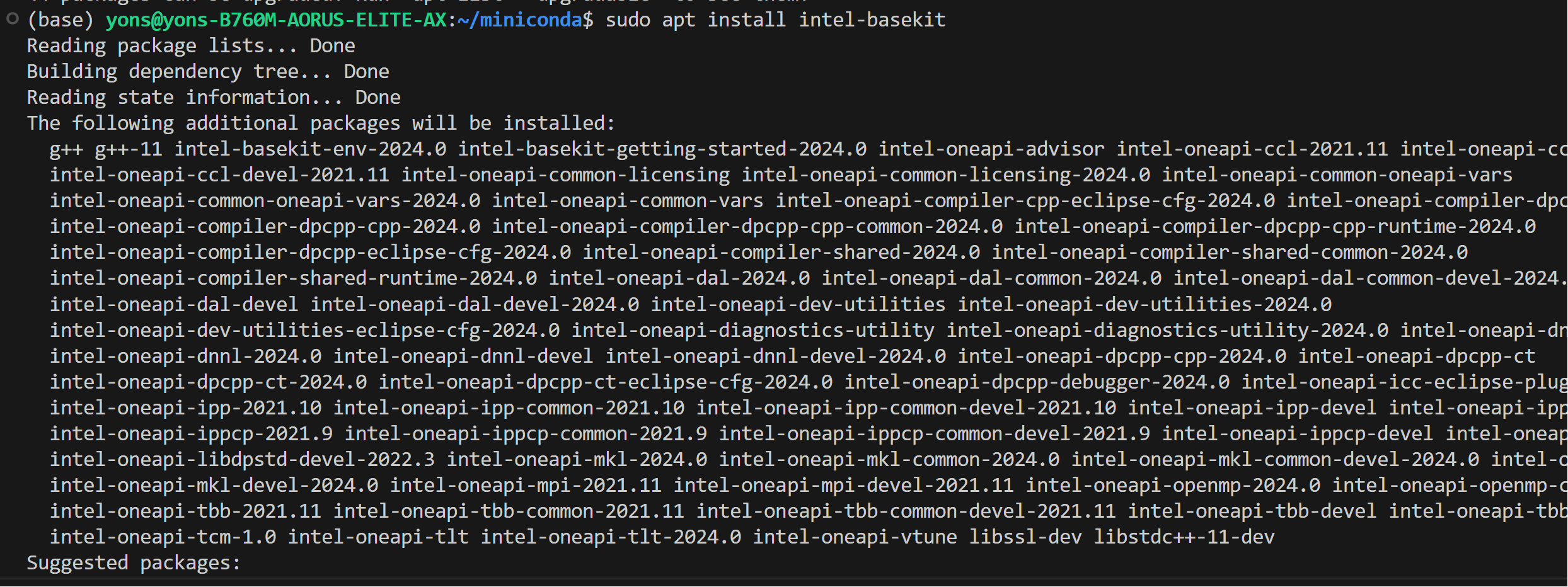

+### Install oneAPI

```

wget -O- https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS.PUB | gpg --dearmor | sudo tee /usr/share/keyrings/oneapi-archive-keyring.gpg > /dev/null


@@ -90,23 +71,36 @@ Install the Miniconda as follows if you don't have conda installed on your machi

+### Setup Python Environment

+

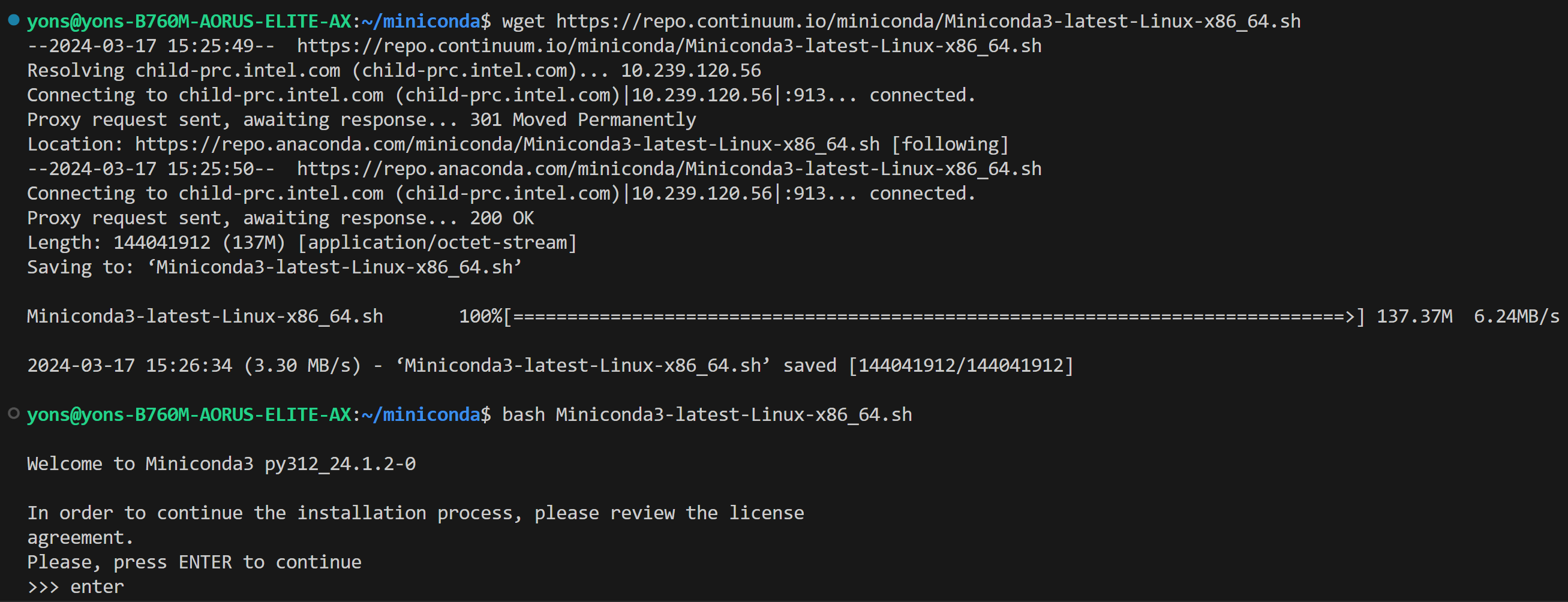

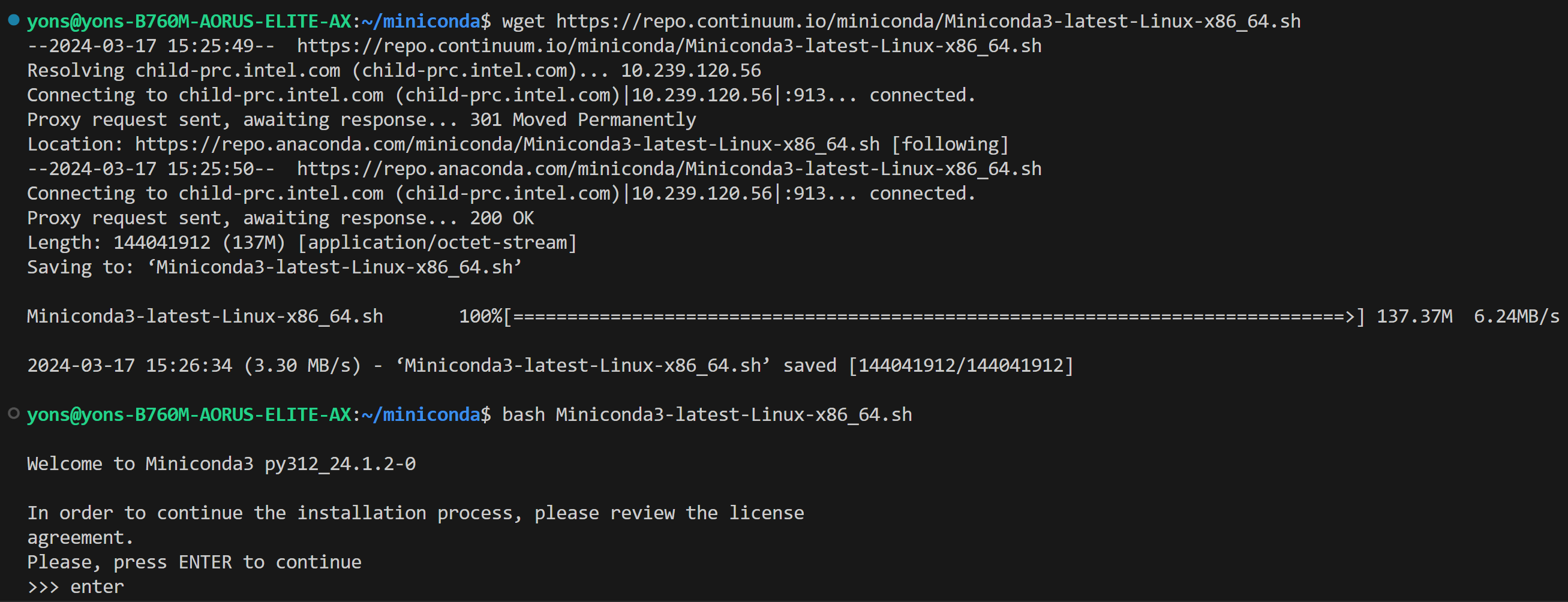

+Download and install Miniconda as follows if you don't have conda installed on your machine:

+ ```bash

+ wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh

+ bash Miniconda3-latest-Linux-x86_64.sh

+ source ~/.bashrc

+ ```

+

+You can run `conda --version` to verify your conda installation.

+

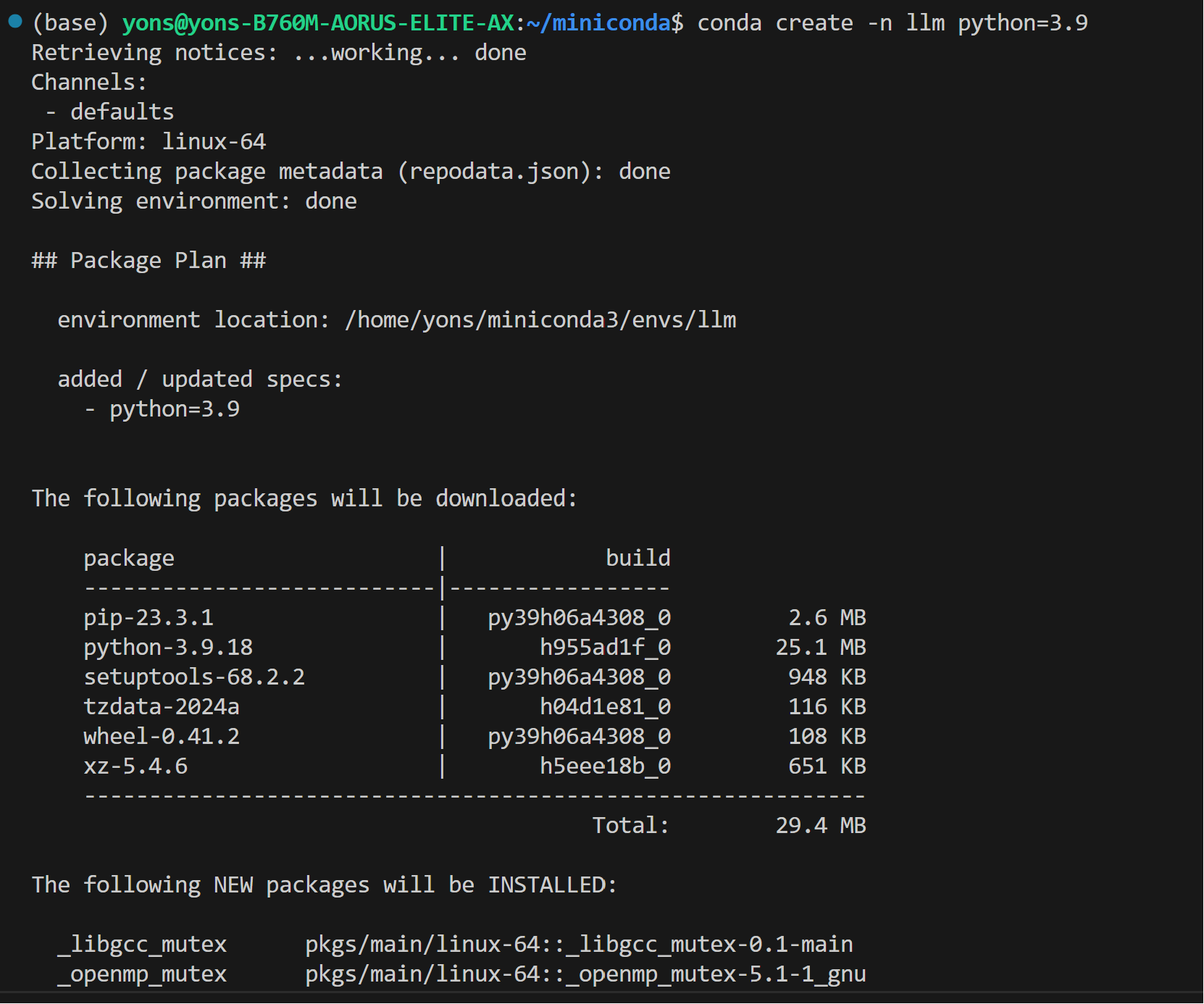

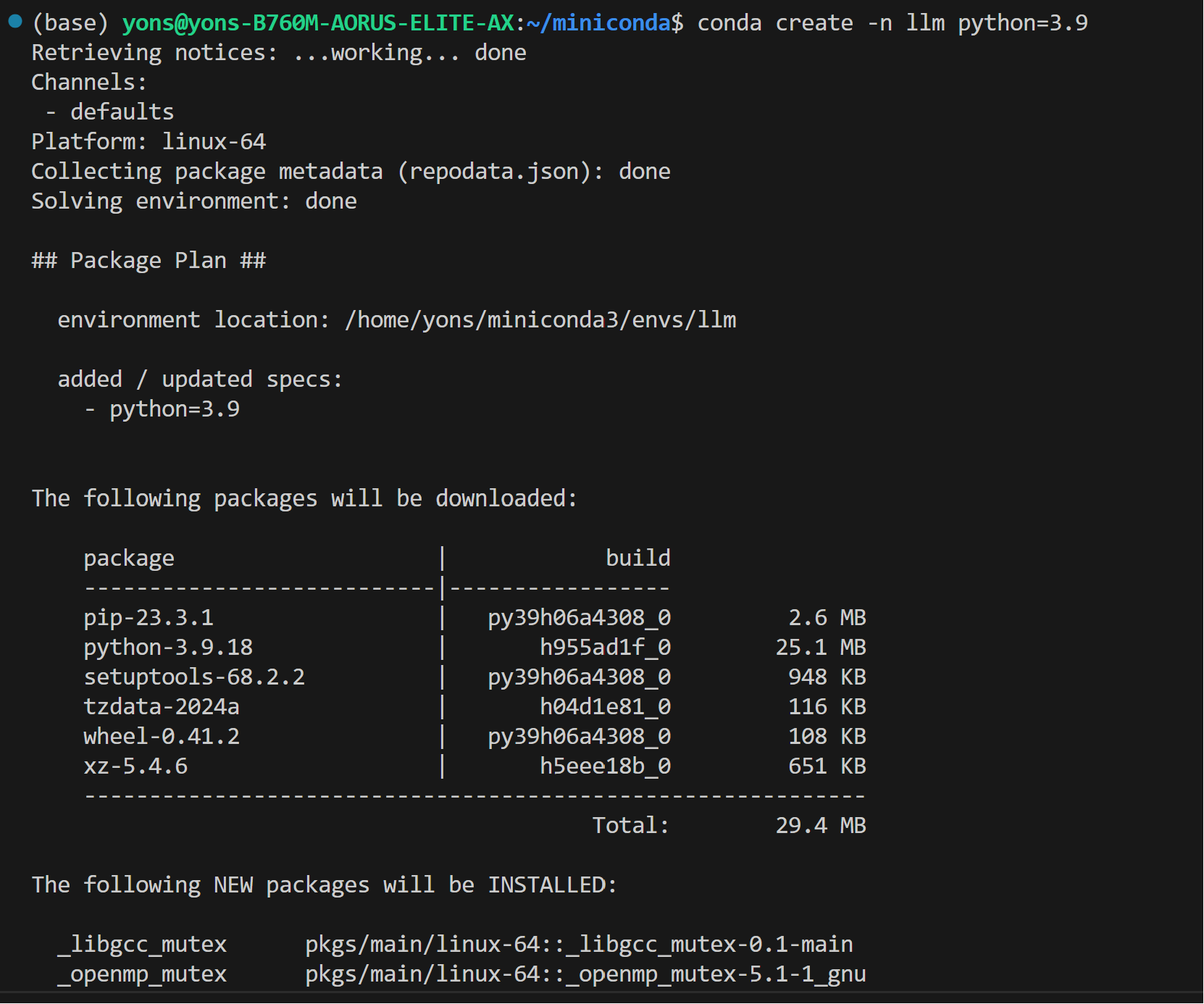

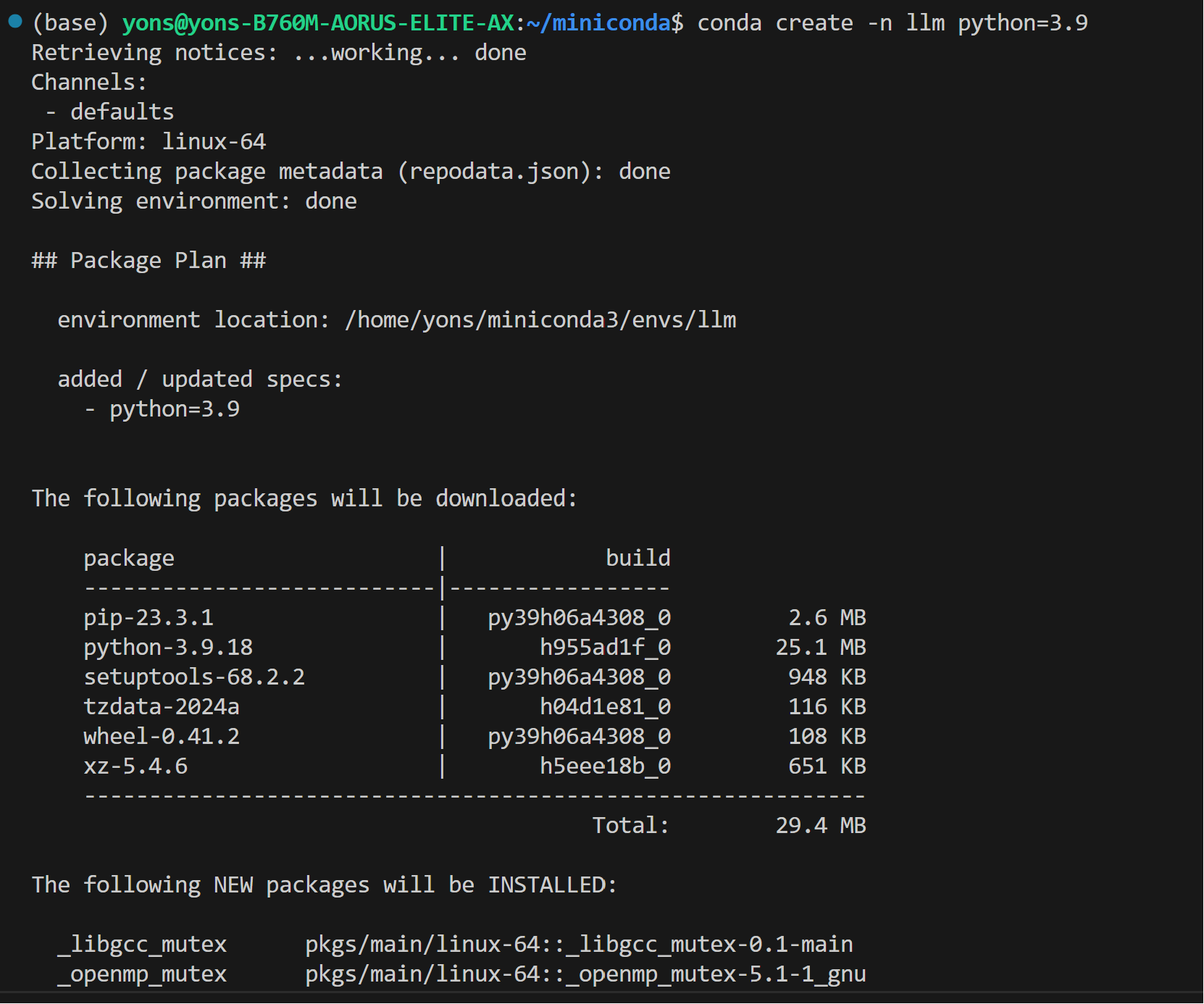

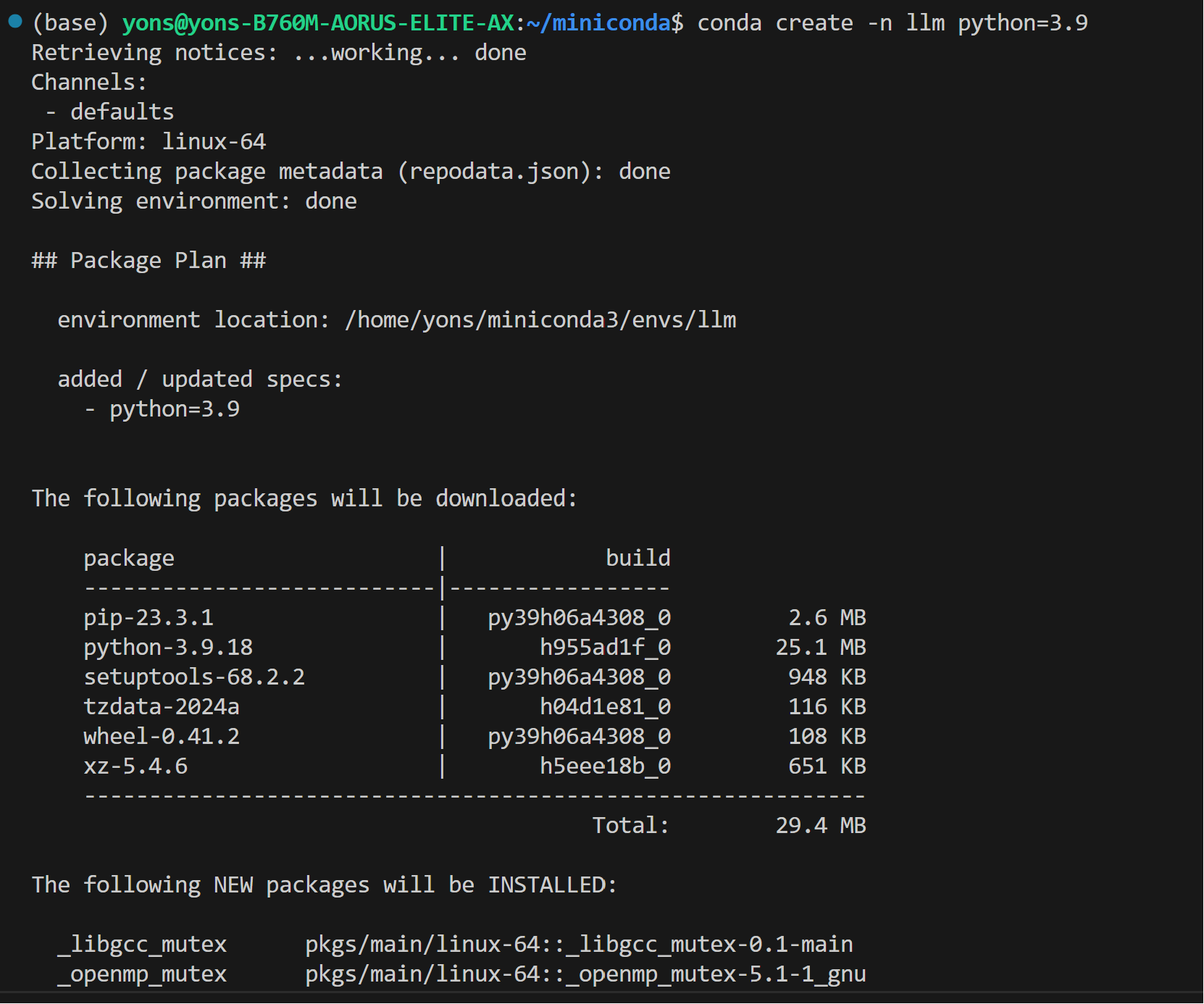

+After installation, create a new Python environment `llm`:

+```bash

+conda create -n llm python=3.9 libuv

+```

+Activate the newly created environment `llm`:

+```bash

+conda activate llm

+```
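Before installing `ipex-llm`, you may want to confirm that the intended environment is active. This is a minimal sketch that relies on `CONDA_DEFAULT_ENV`, which `conda activate` sets to the active environment's name. `check_llm_env` is a hypothetical helper. The expected name `llm` and Python 3.9 come from the commands above. The environment and version are parameters so the check can be tried without activating conda.

```python
import os
import sys


def check_llm_env(environ=None, version=None,
                  expected_env="llm", expected_python=(3, 9)):
    """Return a list of problems with the active environment (empty if OK)."""
    environ = os.environ if environ is None else environ
    version = tuple(sys.version_info[:2]) if version is None else tuple(version)
    problems = []
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda env is {active!r}, expected {expected_env!r}")
    if version != expected_python:
        problems.append(
            f"Python {version[0]}.{version[1]} found, expected "
            f"{expected_python[0]}.{expected_python[1]}"
        )
    return problems


if __name__ == "__main__":
    for problem in check_llm_env():
        print("warning:", problem)
```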

+

## Install `ipex-llm`

* With the `llm` environment active, use `pip` to install `ipex-llm` for GPU:

```

- conda create -n llm python=3.9

- conda activate llm

-

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://developer.intel.com/ipex-whl-stable-xpu

```


-* You can verify if ipex-llm is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

+## Verify Installation

+* You can verify that `ipex-llm` is successfully installed by importing a few classes from the library. For example, run the following commands in the terminal:

```bash

source /opt/intel/oneapi/setvars.sh

@@ -115,38 +109,44 @@ Install the Miniconda as follows if you don't have conda installed on your machi

> from ipex_llm.transformers import AutoModel, AutoModelForCausalLM

```
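As a lighter pre-check, you can ask Python whether it can locate the package without running its code. This sketch uses the standard `importlib.util.find_spec`. `is_installed` is a hypothetical helper. The check is weaker than the import test above because it does not load the GPU runtime, so it cannot detect a missing oneAPI environment. For a dotted name, `find_spec` imports the parent package first.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if `module_name` can be located on the import path."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ModuleNotFoundError:
        # Raised when the parent package of a dotted name is missing.
        return False


if __name__ == "__main__":
    print("ipex_llm:", "found" if is_installed("ipex_llm") else "missing")
```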


## Runtime Configurations

To use GPU acceleration on Linux, several environment variables are required or recommended before running a GPU example.

-* For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

- ```bash

- # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

+```eval_rst

+.. tabs::

+ .. tab:: Intel Arc™ A-Series and Intel Data Center GPU Flex

- # Recommended Environment Variables for optimal performance

- export USE_XETLA=OFF

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- ```

+ For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

+ .. code-block:: bash

-* For Intel Data Center GPU Max Series, we recommend:

- ```bash

- # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

+ # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

+ # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

+ source /opt/intel/oneapi/setvars.sh

- # Recommended Environment Variables for optimal performance

- export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- export ENABLE_SDP_FUSION=1

- ```

- Please note that `libtcmalloc.so` can be installed by ```conda install -c conda-forge -y gperftools=2.10```.

+ # Recommended Environment Variables for optimal performance

+ export USE_XETLA=OFF

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+ .. tab:: Intel Data Center GPU Max

+

+ For Intel Data Center GPU Max Series, we recommend:

+

+ .. code-block:: bash

+

+ # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

+ # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

+ source /opt/intel/oneapi/setvars.sh

+

+ # Recommended Environment Variables for optimal performance

+ export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+ export ENABLE_SDP_FUSION=1

+

+ Please note that ``libtcmalloc.so`` can be installed with ``conda install -c conda-forge -y gperftools=2.10``.

+

+```
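If you launch workloads from Python, the recommended variables from the tabs above can be applied to a child process's environment. This is a sketch under stated assumptions. `RECOMMENDED_ENV`, `runtime_env`, and the keys `"arc_flex"` and `"max"` are hypothetical names. `setvars.sh` still has to be sourced in the shell beforehand, because Python cannot apply a shell script to its own environment.

```python
import os

# Hypothetical device keys; values copied from the tabs above.
RECOMMENDED_ENV = {
    "arc_flex": {  # Intel Arc A-Series / Data Center GPU Flex
        "USE_XETLA": "OFF",
        "SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS": "1",
    },
    "max": {  # Intel Data Center GPU Max
        "SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS": "1",
        "ENABLE_SDP_FUSION": "1",
    },
}


def runtime_env(device, base=None):
    """Return a copy of `base` (default: os.environ) with the recommended
    variables for `device` applied, for use with a child process."""
    env = dict(os.environ if base is None else base)
    env.update(RECOMMENDED_ENV[device])
    if device == "max":
        # Mirrors: export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so
        # Assumes an activated conda env (CONDA_PREFIX set); an empty
        # existing LD_PRELOAD is skipped to avoid a leading ":".
        tcmalloc = f"{env['CONDA_PREFIX']}/lib/libtcmalloc.so"
        existing = env.get("LD_PRELOAD", "")
        env["LD_PRELOAD"] = f"{existing}:{tcmalloc}" if existing else tcmalloc
    return env
```

For example, `subprocess.run([sys.executable, "demo.py"], env=runtime_env("arc_flex"), check=True)` would start the demo with these variables. Building an environment for a new process, instead of changing `os.environ` in place, matters for `LD_PRELOAD`: the dynamic loader reads it only when a process starts.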

## A Quick Example

@@ -156,16 +156,7 @@ Now let's play with a real LLM. We'll be using the [phi-1.5](https://huggingface

```bash

conda activate llm

```

-* Step 2: If you're running on iGPU, set some environment variables by running below commands:

- > For more details about runtime configurations, refer to [this guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#runtime-configuration):

- ```bash

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

-

- # Recommended Environment Variables for optimal performance

- export USE_XETLA=OFF

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- ```

+* Step 2: Follow the [Runtime Configurations](#runtime-configurations) section above to prepare your runtime environment.

* Step 3: Create a new file named `demo.py` and insert the code snippet below.

```python

# Copy/Paste the contents to a new file demo.py

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index 7997d740..eceb34e6 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -93,18 +93,18 @@ Choose either US or CN website for `extra-index-url`:

```

## Verify Installation

-You can verify if `ipex-llm` is successfully installed by simply running a few lines of code:

+You can verify that `ipex-llm` is successfully installed by following the steps below.

-* Step 1: Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

- ```cmd

- conda activate llm

- ```

-* Step 2: Configure oneAPI variables by running the following command:

- ```cmd

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

- ```

-* Step 3:

- Please also set the following environment variable according to your device:

+### Step 1: Runtime Configurations

+ * Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

+ ```cmd

+ conda activate llm

+ ```

+ * Configure oneAPI variables by running the following command:

+ ```cmd

+ call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ ```

+* Set the following environment variables according to your device:

```eval_rst

.. tabs::

@@ -125,9 +125,12 @@ You can verify if `ipex-llm` is successfully installed by simply running a few l

For other Intel dGPU Series, please refer to `this guide `_ for more details regarding runtime configuration.

```
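The per-device variables for Windows can be kept in one table and turned into `set` commands for the Anaconda Prompt. This sketch assumes the tab values match the earlier revision of this page, shown in the removed lines further down. For Intel iGPU those are `SYCL_CACHE_PERSISTENT=1` and `BIGDL_LLM_XMX_DISABLED=1`; Intel Arc™ A770 needs no extra variables. `WINDOWS_RUNTIME_ENV`, `cmd_set_lines`, and the keys `"igpu"` and `"arc_a770"` are hypothetical names.

```python
# Values taken from the earlier revision's iGPU / Arc A770 tabs;
# the dictionary keys are hypothetical labels for this sketch.
WINDOWS_RUNTIME_ENV = {
    "igpu": {"SYCL_CACHE_PERSISTENT": "1", "BIGDL_LLM_XMX_DISABLED": "1"},
    "arc_a770": {},  # no further environment variables needed
}


def cmd_set_lines(device: str) -> list:
    """Return the `set NAME=VALUE` lines to run in the Anaconda Prompt."""
    return [f"set {name}={value}"
            for name, value in WINDOWS_RUNTIME_ENV[device].items()]


print("\n".join(cmd_set_lines("igpu")))
```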

-* Step 4: Launch the Python interactive shell by typing `python` in the Anaconda prompt window and then press Enter.

-* Step 5: Copy following code to Anaconda prompt **line by line** and press Enter **after copying each line**.

+### Step 2: Run Python Code

+

+* Launch the Python interactive shell by typing `python` in the Anaconda Prompt window and pressing Enter.

+

+* Copy the following code into the Anaconda Prompt **line by line**, pressing Enter **after each line**.

```python

import torch

from ipex_llm.transformers import AutoModel,AutoModelForCausalLM

@@ -156,41 +159,12 @@ To monitor your GPU's performance and status (e.g. memory consumption, utilizati

Now let's play with a real LLM. We'll be using the [Qwen-1.8B-Chat](https://huggingface.co/Qwen/Qwen-1_8B-Chat) model, a 1.8-billion-parameter LLM, for this demonstration. Follow the steps below to set up and run the model, and observe how it responds to the prompt "What is AI?".

-* Step 1: Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

- ```cmd

- conda activate llm

- ```

-* Step 2: Configure oneAPI variables by running the following command:

- ```cmd

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

- ```

-* Step 3:

- Please also set the following environment variable according to your device:

-

- ```eval_rst

- .. tabs::

- .. tab:: Intel iGPU

-

- .. code-block:: cmd

-

- set SYCL_CACHE_PERSISTENT=1

- set BIGDL_LLM_XMX_DISABLED=1

-

- .. tab:: Intel Arc™ A770

-

- There is no need to set further environment variables.

- ```

-

- ```eval_rst

- .. seealso::

-

- For other Intel dGPU Series, please refer to `this guide `_ for more details regarding runtime configuration.

- ```

-* Step 4: Install additional package required for Qwen-1.8B-Chat to conduct:

+* Step 1: Follow the [Runtime Configurations](#step-1-runtime-configurations) section above to prepare your runtime environment.

+* Step 2: Install the additional packages required by Qwen-1.8B-Chat:

```cmd

pip install tiktoken transformers_stream_generator einops

```

-* Step 5: Create code file. IPEX-LLM supports loading model from Hugging Face or ModelScope. Please choose according to your requirements.

+* Step 3: Create a code file. IPEX-LLM supports loading models from Hugging Face or ModelScope; choose the source that fits your requirements.

```eval_rst

.. tabs::

.. tab:: Hugging Face

@@ -322,7 +296,7 @@ Now let's play with a real LLM. We'll be using the [Qwen-1.8B-Chat](https://hugg

This will allow the memory-intensive embedding layer to utilize the CPU instead of GPU.

```

-* Step 5. Run `demo.py` within the activated Python environment using the following command:

+* Step 4: Run `demo.py` within the activated Python environment using the following command:

```cmd

python demo.py

```

-

-

## Runtime Configurations

To use GPU acceleration on Linux, several environment variables are required or recommended before running a GPU example.

-* For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

- ```bash

- # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

+```eval_rst

+.. tabs::

+ .. tab:: Intel Arc™ A-Series and Intel Data Center GPU Flex

- # Recommended Environment Variables for optimal performance

- export USE_XETLA=OFF

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- ```

+ For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

+ .. code-block:: bash

-* For Intel Data Center GPU Max Series, we recommend:

- ```bash

- # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

+ # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

+ # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

+ source /opt/intel/oneapi/setvars.sh

- # Recommended Environment Variables for optimal performance

- export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- export ENABLE_SDP_FUSION=1

- ```

- Please note that `libtcmalloc.so` can be installed by ```conda install -c conda-forge -y gperftools=2.10```.

+ # Recommended Environment Variables for optimal performance

+ export USE_XETLA=OFF

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+ .. tab:: Intel Data Center GPU Max

+

+ For Intel Data Center GPU Max Series, we recommend:

+

+ .. code-block:: bash

+

+ # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

+ # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

+ source /opt/intel/oneapi/setvars.sh

+

+ # Recommended Environment Variables for optimal performance

+ export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+ export ENABLE_SDP_FUSION=1

+

+ Please note that ``libtcmalloc.so`` can be installed by ``conda install -c conda-forge -y gperftools=2.10``

+

+```

## A Quick Example

@@ -156,16 +156,7 @@ Now let's play with a real LLM. We'll be using the [phi-1.5](https://huggingface

```bash

conda activate llm

```

-* Step 2: If you're running on iGPU, set some environment variables by running below commands:

- > For more details about runtime configurations, refer to [this guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#runtime-configuration):

- ```bash

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

-

- # Recommended Environment Variables for optimal performance

- export USE_XETLA=OFF

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- ```

+* Step 2: Follow [Runtime Configurations Section](#runtime-configurations) above to prepare your runtime environment.

* Step 3: Create a new file named `demo.py` and insert the code snippet below.

```python

# Copy/Paste the contents to a new file demo.py

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index 7997d740..eceb34e6 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -93,18 +93,18 @@ Choose either US or CN website for `extra-index-url`:

```

## Verify Installation

-You can verify if `ipex-llm` is successfully installed by simply running a few lines of code:

+You can verify if `ipex-llm` is successfully installed following below steps.

-* Step 1: Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

- ```cmd

- conda activate llm

- ```

-* Step 2: Configure oneAPI variables by running the following command:

- ```cmd

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

- ```

-* Step 3:

- Please also set the following environment variable according to your device:

+### Step 1: Runtime Configurations

+ * Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

+ ```cmd

+ conda activate llm

+ ```

+ * Configure oneAPI variables by running the following command:

+ ```cmd

+ call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ ```

+* Set the following environment variables according to your device:

```eval_rst

.. tabs::

@@ -125,9 +125,12 @@ You can verify if `ipex-llm` is successfully installed by simply running a few l

For other Intel dGPU Series, please refer to `this guide `_ for more details regarding runtime configuration.

```

-* Step 4: Launch the Python interactive shell by typing `python` in the Anaconda prompt window and then press Enter.

-* Step 5: Copy following code to Anaconda prompt **line by line** and press Enter **after copying each line**.

+### Step 2: Run Python Code

+

+* Launch the Python interactive shell by typing `python` in the Anaconda prompt window and then press Enter.

+

+* Copy following code to Anaconda prompt **line by line** and press Enter **after copying each line**.

```python

import torch

from ipex_llm.transformers import AutoModel,AutoModelForCausalLM

@@ -156,41 +159,12 @@ To monitor your GPU's performance and status (e.g. memory consumption, utilizati

Now let's play with a real LLM. We'll be using the [Qwen-1.8B-Chat](https://huggingface.co/Qwen/Qwen-1_8B-Chat) model, a 1.8 billion parameter LLM for this demonstration. Follow the steps below to setup and run the model, and observe how it responds to a prompt "What is AI?".

-* Step 1: Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

- ```cmd

- conda activate llm

- ```

-* Step 2: Configure oneAPI variables by running the following command:

- ```cmd

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

- ```

-* Step 3:

- Please also set the following environment variable according to your device:

-

- ```eval_rst

- .. tabs::

- .. tab:: Intel iGPU

-

- .. code-block:: cmd

-

- set SYCL_CACHE_PERSISTENT=1

- set BIGDL_LLM_XMX_DISABLED=1

-

- .. tab:: Intel Arc™ A770

-

- There is no need to set further environment variables.

- ```

-

- ```eval_rst

- .. seealso::

-

- For other Intel dGPU Series, please refer to `this guide `_ for more details regarding runtime configuration.

- ```

-* Step 4: Install additional package required for Qwen-1.8B-Chat to conduct:

+* Step 1: Follow [Runtime Configurations Section](#step-1-runtime-configurations) above to prepare your runtime environment.

+* Step 2: Install additional package required for Qwen-1.8B-Chat to conduct:

```cmd

pip install tiktoken transformers_stream_generator einops

```

-* Step 5: Create code file. IPEX-LLM supports loading model from Hugging Face or ModelScope. Please choose according to your requirements.

+* Step 3: Create code file. IPEX-LLM supports loading model from Hugging Face or ModelScope. Please choose according to your requirements.

```eval_rst

.. tabs::

.. tab:: Hugging Face

@@ -322,7 +296,7 @@ Now let's play with a real LLM. We'll be using the [Qwen-1.8B-Chat](https://hugg

This will allow the memory-intensive embedding layer to utilize the CPU instead of GPU.

```

-* Step 5. Run `demo.py` within the activated Python environment using the following command:

+* Step 4. Run `demo.py` within the activated Python environment using the following command:

```cmd

python demo.py

```

-

-

-## Install oneAPI

+### Install oneAPI

```

wget -O- https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS.PUB | gpg --dearmor | sudo tee /usr/share/keyrings/oneapi-archive-keyring.gpg > /dev/null

@@ -90,23 +71,36 @@ Install the Miniconda as follows if you don't have conda installed on your machi

>

-

-

-## Install oneAPI

+### Install oneAPI

```

wget -O- https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS.PUB | gpg --dearmor | sudo tee /usr/share/keyrings/oneapi-archive-keyring.gpg > /dev/null

@@ -90,23 +71,36 @@ Install the Miniconda as follows if you don't have conda installed on your machi

>  +### Setup Python Environment

+

+Download and install the Miniconda as follows if you don't have conda installed on your machine:

+ ```bash

+ wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh

+ bash Miniconda3-latest-Linux-x86_64.sh

+ source ~/.bashrc

+ ```

+

+You can use `conda --version` to verify you conda installation.

+

+After installation, create a new python environment `llm`:

+```cmd

+conda create -n llm python=3.9 libuv

+```

+Activate the newly created environment `llm`:

+```cmd

+conda activate llm

+```

+

## Install `ipex-llm`

* With the `llm` environment active, use `pip` to install `ipex-llm` for GPU:

```

- conda create -n llm python=3.9

- conda activate llm

-

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://developer.intel.com/ipex-whl-stable-xpu

```

- >

+### Setup Python Environment

+

+Download and install the Miniconda as follows if you don't have conda installed on your machine:

+ ```bash

+ wget https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh

+ bash Miniconda3-latest-Linux-x86_64.sh

+ source ~/.bashrc

+ ```

+

+You can use `conda --version` to verify you conda installation.

+

+After installation, create a new python environment `llm`:

+```cmd

+conda create -n llm python=3.9 libuv

+```

+Activate the newly created environment `llm`:

+```cmd

+conda activate llm

+```

+

## Install `ipex-llm`

* With the `llm` environment active, use `pip` to install `ipex-llm` for GPU:

```

- conda create -n llm python=3.9

- conda activate llm

-

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://developer.intel.com/ipex-whl-stable-xpu

```

- >  -

- >

-

- >  -

-

-* You can verify if ipex-llm is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

+## Verify Installation

+* You can verify if `ipex-llm` is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

```bash

source /opt/intel/oneapi/setvars.sh

@@ -115,38 +109,44 @@ Install the Miniconda as follows if you don't have conda installed on your machi

> from ipex_llm.transformers import AutoModel, AutoModelForCausalLM

```

- >

-

-

-* You can verify if ipex-llm is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

+## Verify Installation

+* You can verify if `ipex-llm` is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

```bash

source /opt/intel/oneapi/setvars.sh

@@ -115,38 +109,44 @@ Install the Miniconda as follows if you don't have conda installed on your machi

> from ipex_llm.transformers import AutoModel, AutoModelForCausalLM

```

- >  -

-

## Runtime Configurations

To use GPU acceleration on Linux, several environment variables are required or recommended before running a GPU example.

-* For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

- ```bash

- # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

+```eval_rst

+.. tabs::

+ .. tab:: Intel Arc™ A-Series and Intel Data Center GPU Flex

- # Recommended Environment Variables for optimal performance

- export USE_XETLA=OFF

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- ```

+ For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

+ .. code-block:: bash

-* For Intel Data Center GPU Max Series, we recommend:

- ```bash

- # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

+ # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

+ # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

+ source /opt/intel/oneapi/setvars.sh

- # Recommended Environment Variables for optimal performance

- export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- export ENABLE_SDP_FUSION=1

- ```

- Please note that `libtcmalloc.so` can be installed by ```conda install -c conda-forge -y gperftools=2.10```.

+ # Recommended Environment Variables for optimal performance

+ export USE_XETLA=OFF

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+ .. tab:: Intel Data Center GPU Max

+

+ For Intel Data Center GPU Max Series, we recommend:

+

+ .. code-block:: bash

+

+ # Configure oneAPI environment variables. Required step for APT or offline installed oneAPI.

+ # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

+ source /opt/intel/oneapi/setvars.sh

+

+ # Recommended Environment Variables for optimal performance

+ export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so

+ export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

+ export ENABLE_SDP_FUSION=1

+

+ Please note that ``libtcmalloc.so`` can be installed by ``conda install -c conda-forge -y gperftools=2.10``

+

+```

## A Quick Example

@@ -156,16 +156,7 @@ Now let's play with a real LLM. We'll be using the [phi-1.5](https://huggingface

```bash

conda activate llm

```

-* Step 2: If you're running on iGPU, set some environment variables by running below commands:

- > For more details about runtime configurations, refer to [this guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#runtime-configuration):

- ```bash

- # Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

- source /opt/intel/oneapi/setvars.sh

-

- # Recommended Environment Variables for optimal performance

- export USE_XETLA=OFF

- export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- ```

+* Step 2: Follow [Runtime Configurations Section](#runtime-configurations) above to prepare your runtime environment.

* Step 3: Create a new file named `demo.py` and insert the code snippet below.

```python

# Copy/Paste the contents to a new file demo.py

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index 7997d740..eceb34e6 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -93,18 +93,18 @@ Choose either US or CN website for `extra-index-url`:

```
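Before working through the verification steps below, you can run a lightweight pre-check. It asks Python which versions of the relevant packages are installed in the active `llm` environment, without importing `torch` or touching the GPU. This is a minimal sketch based on the standard library's `importlib.metadata` (Python 3.8+). `installed_version` is a hypothetical helper name. `bigdl-llm` is listed only because the `bigdl-llm` migration guide asks you to uninstall it before installing `ipex-llm`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent.

    Hypothetical helper for illustration; it only reads package metadata and
    does not import the package itself.
    """
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Run this from the Anaconda Prompt with the `llm` environment activated.
    for dist in ("ipex-llm", "bigdl-llm"):
        found = installed_version(dist)
        print(f"{dist}: {found if found else 'not installed'}")
```

This check only confirms that the package metadata is present. It does not replace the GPU verification steps that follow.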

## Verify Installation

-You can verify if `ipex-llm` is successfully installed by simply running a few lines of code:

+You can verify that `ipex-llm` is successfully installed by following the steps below.

-* Step 1: Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

- ```cmd

- conda activate llm

- ```

-* Step 2: Configure oneAPI variables by running the following command:

- ```cmd

- call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

- ```

-* Step 3:

- Please also set the following environment variable according to your device:

+### Step 1: Runtime Configurations

+ * Open the **Anaconda Prompt** and activate the Python environment `llm` you previously created:

+ ```cmd

+ conda activate llm

+ ```

+ * Configure oneAPI variables by running the following command:

+ ```cmd

+ call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+ ```

+ * Set the following environment variables according to your device:

```eval_rst

.. tabs::

@@ -125,9 +125,12 @@ You can verify if `ipex-llm` is successfully installed by simply running a few l

For other Intel dGPU Series, please refer to `this guide
