diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

index 1d465f3e..b43b8cbd 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

@@ -39,17 +39,30 @@ Follow the guide that corresponds to your specific system and device from the li

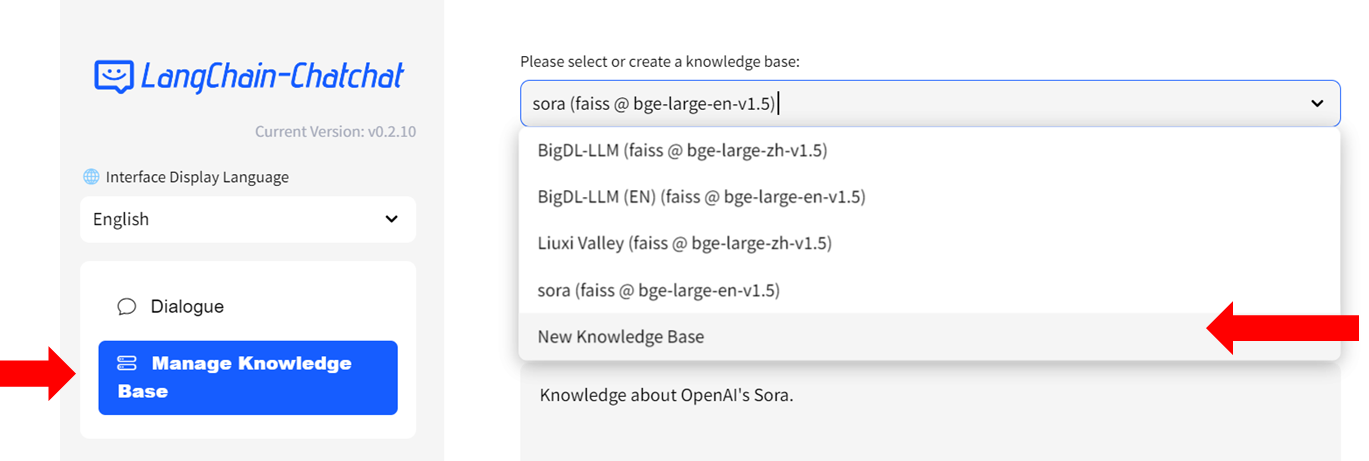

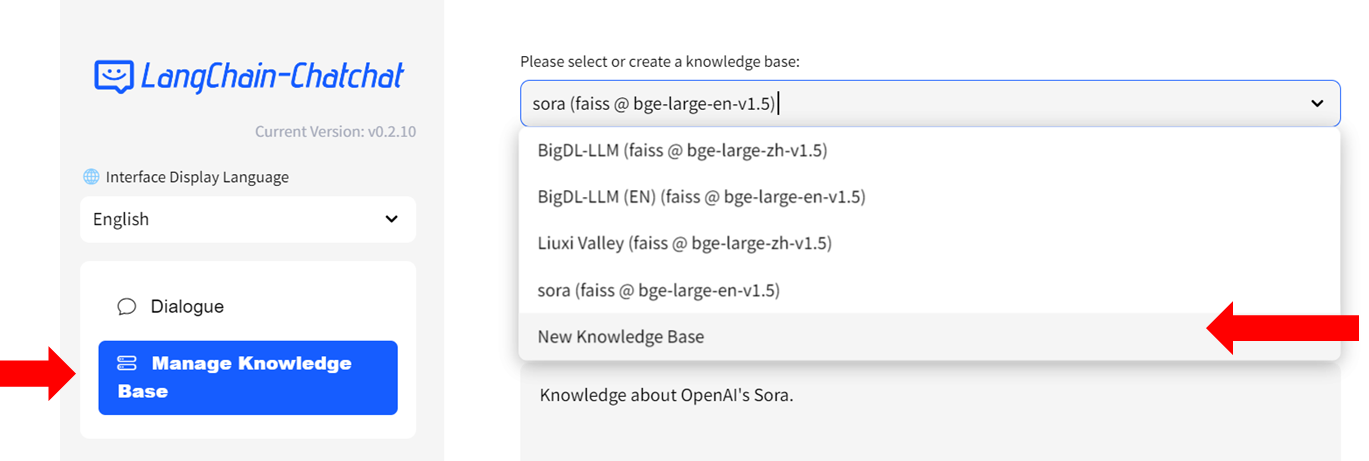

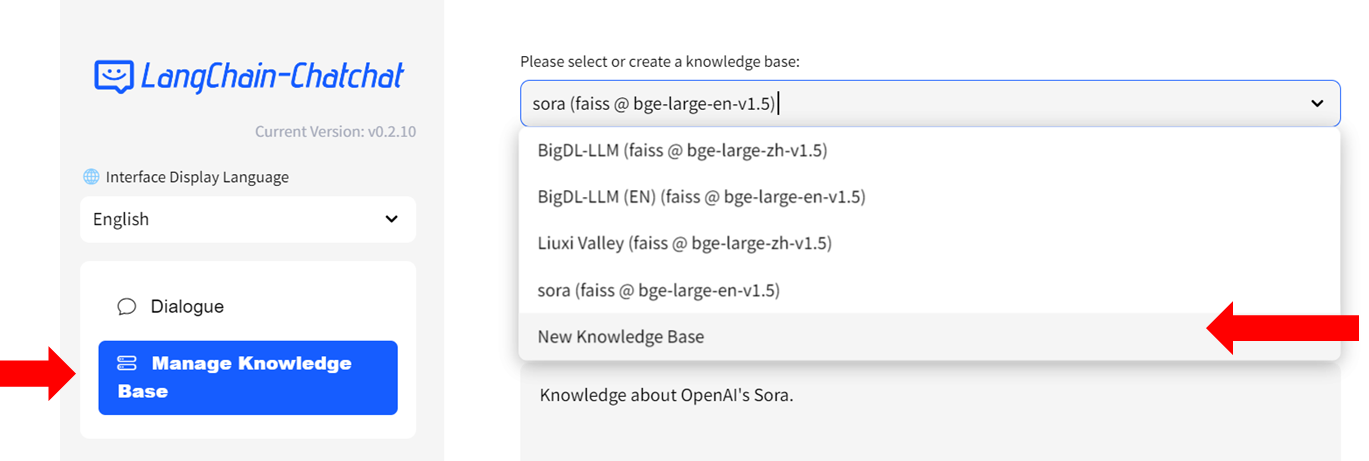

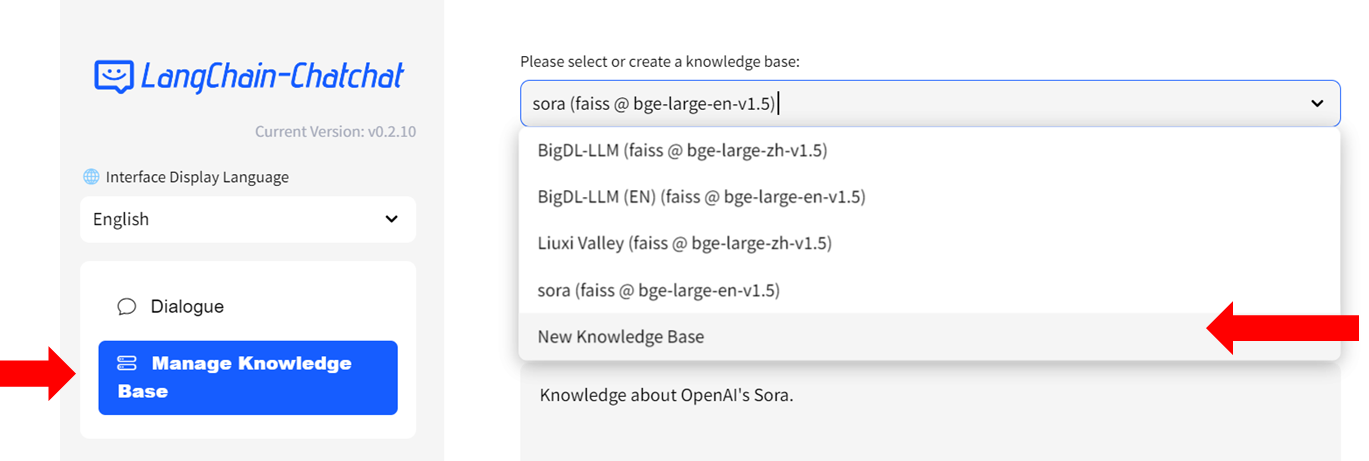

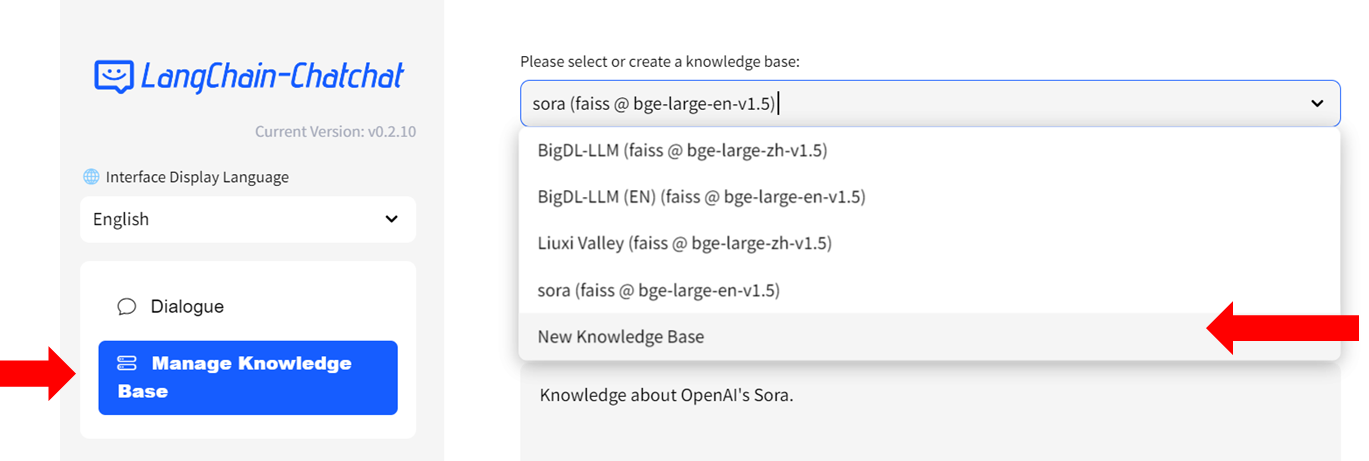

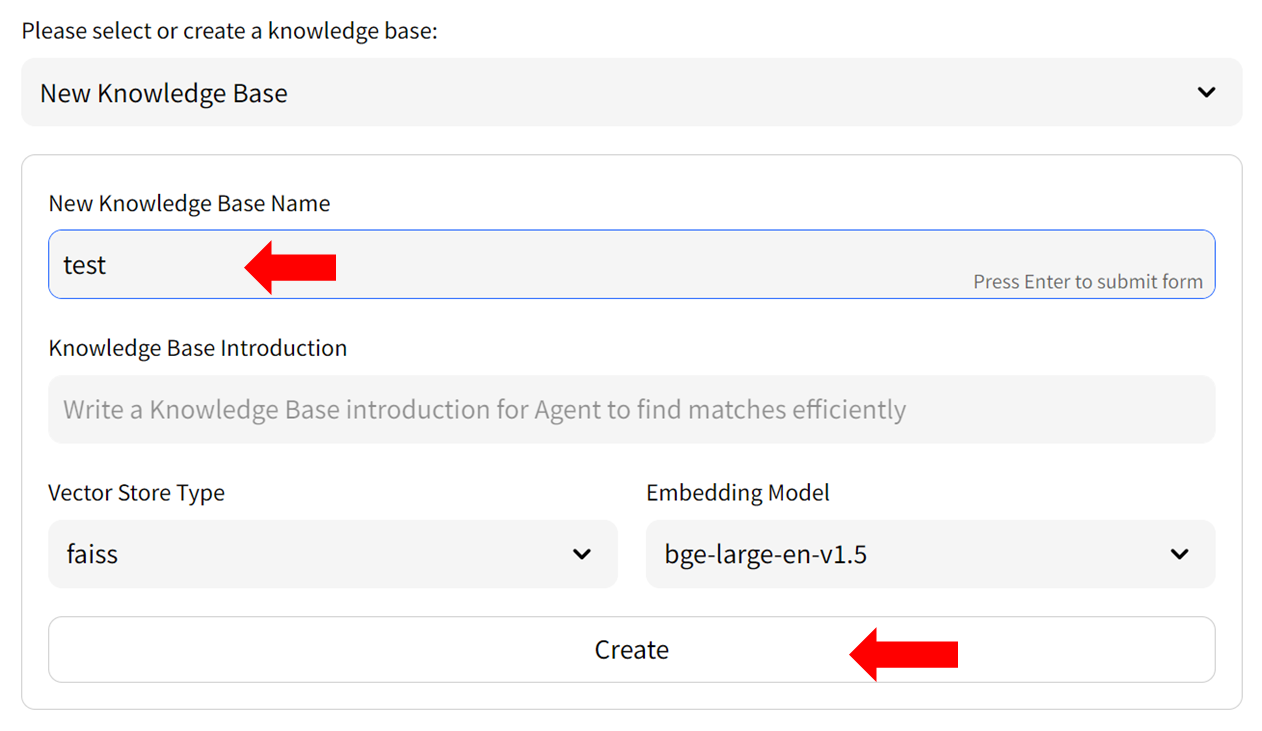

#### Step 1: Create Knowledge Base

- Select `Manage Knowledge Base` from the menu on the left, then choose `New Knowledge Base` from the dropdown menu on the right side.

-

+

+

+  +

+

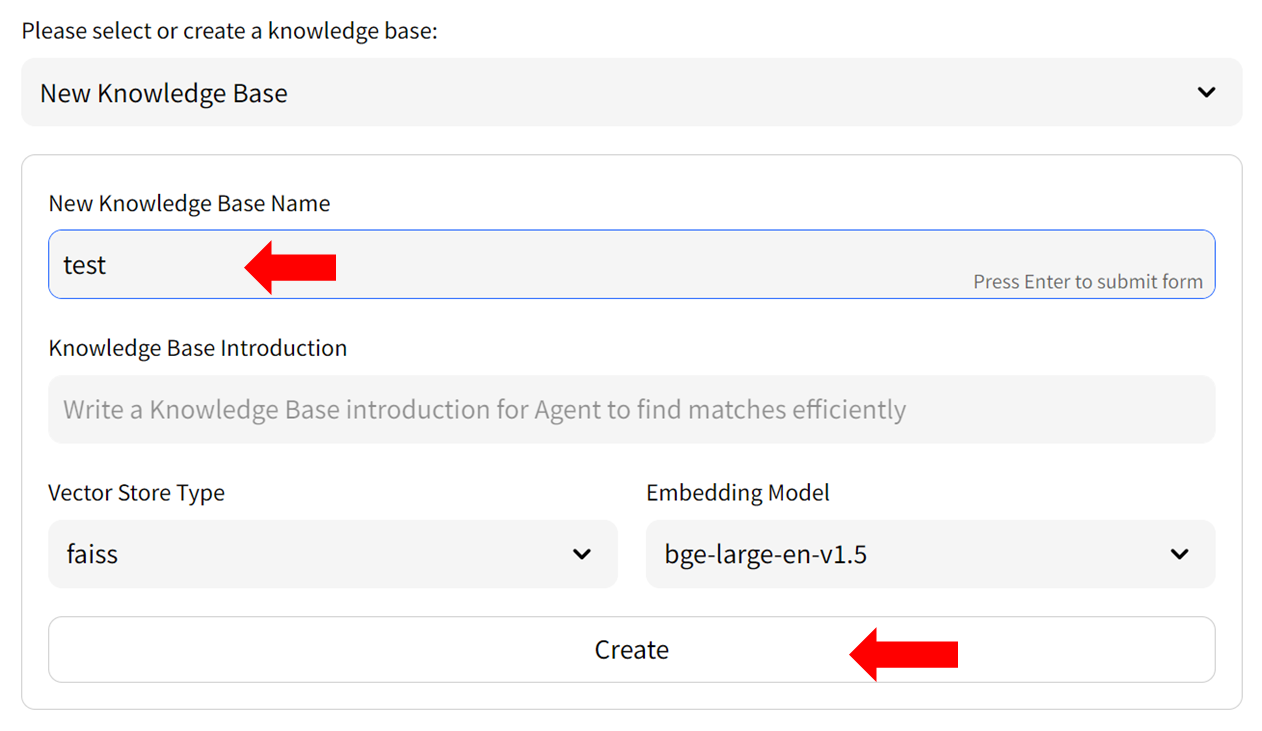

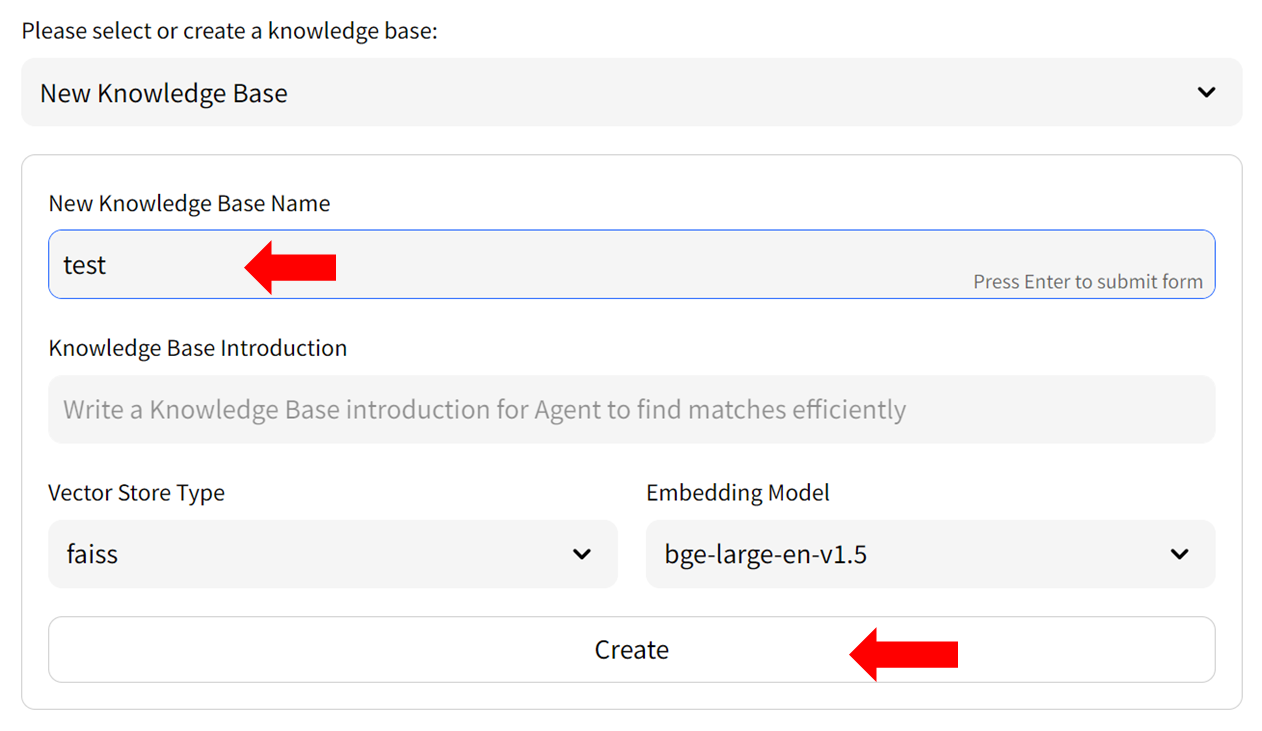

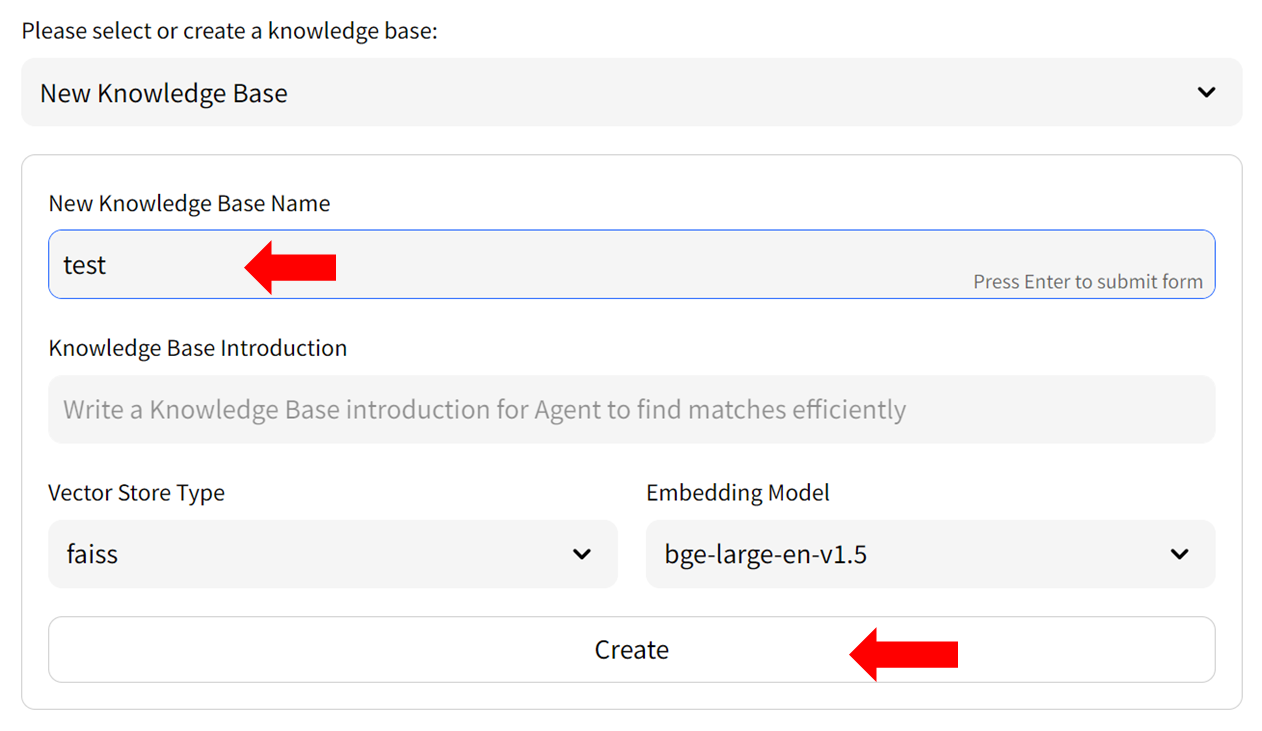

- Fill in the name of your new knowledge base (example: "test") and press the `Create` button. Adjust any other settings as needed.

-

+

+

+  +

+

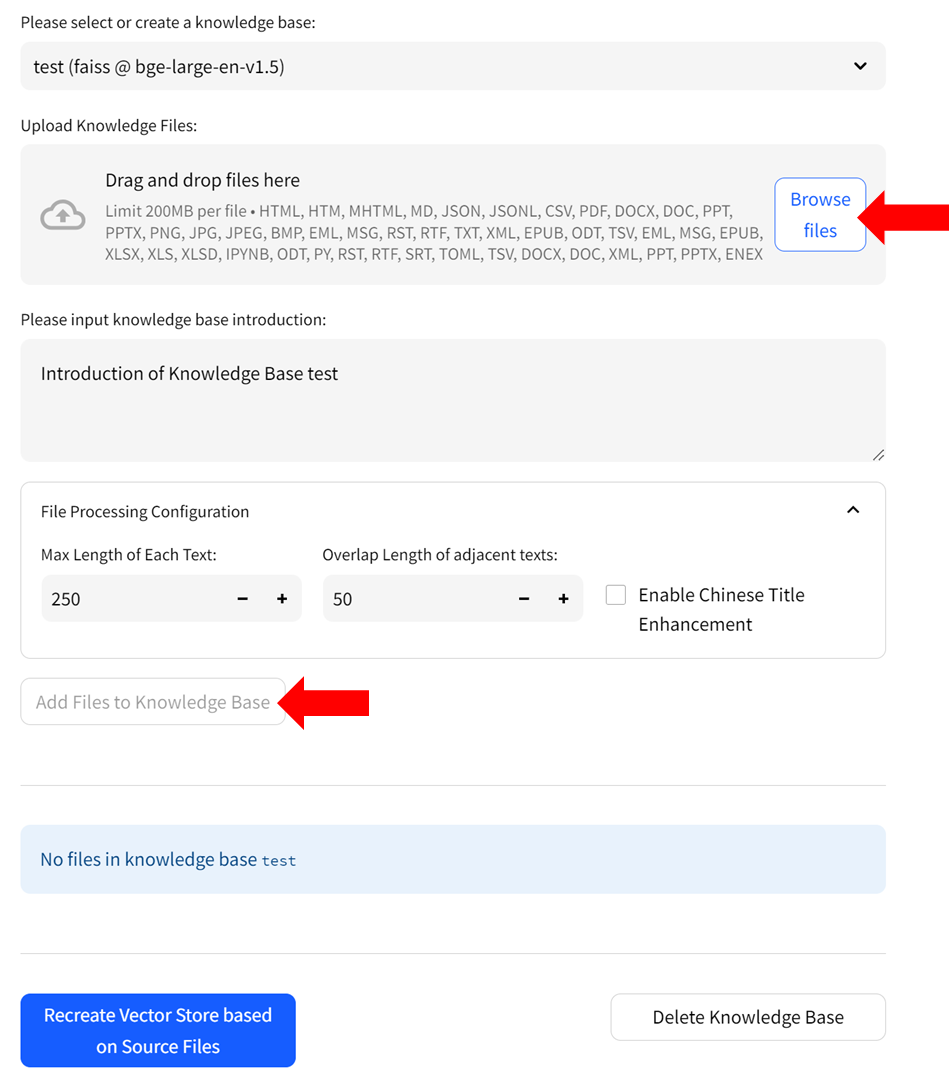

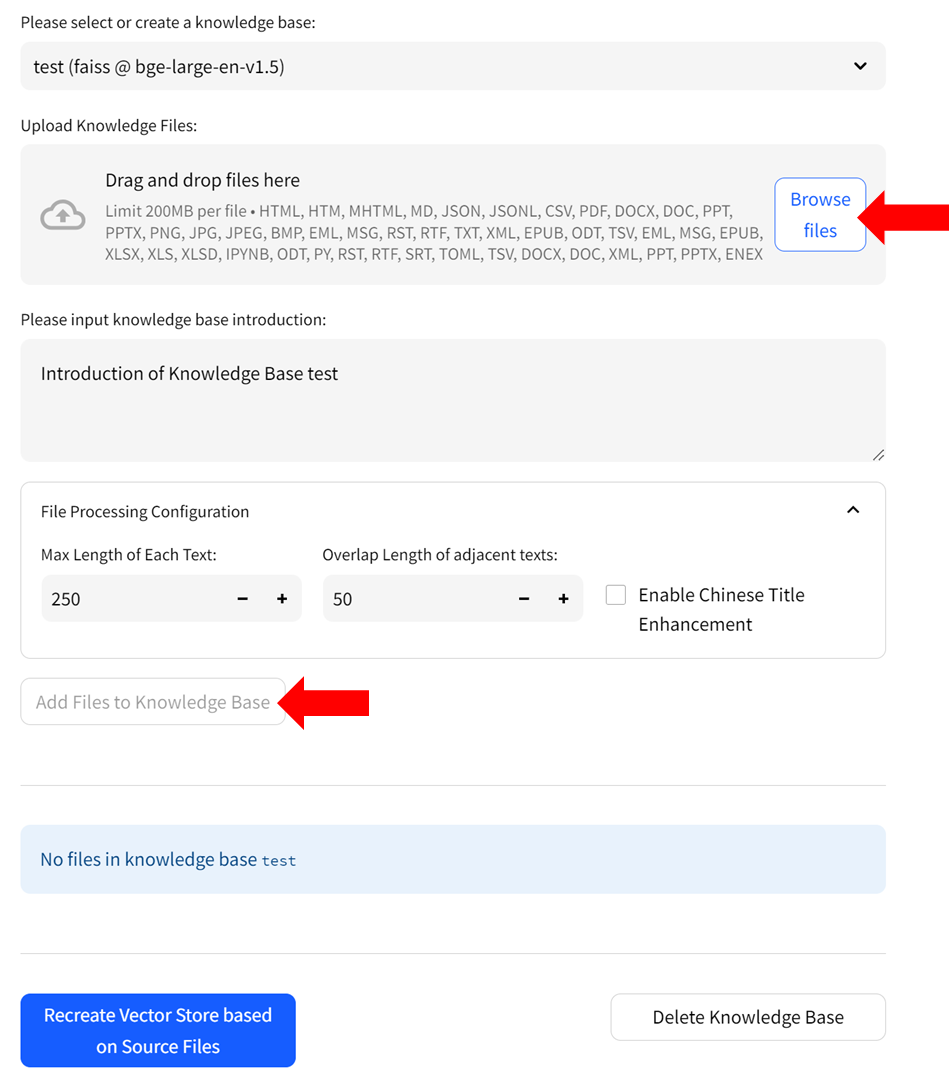

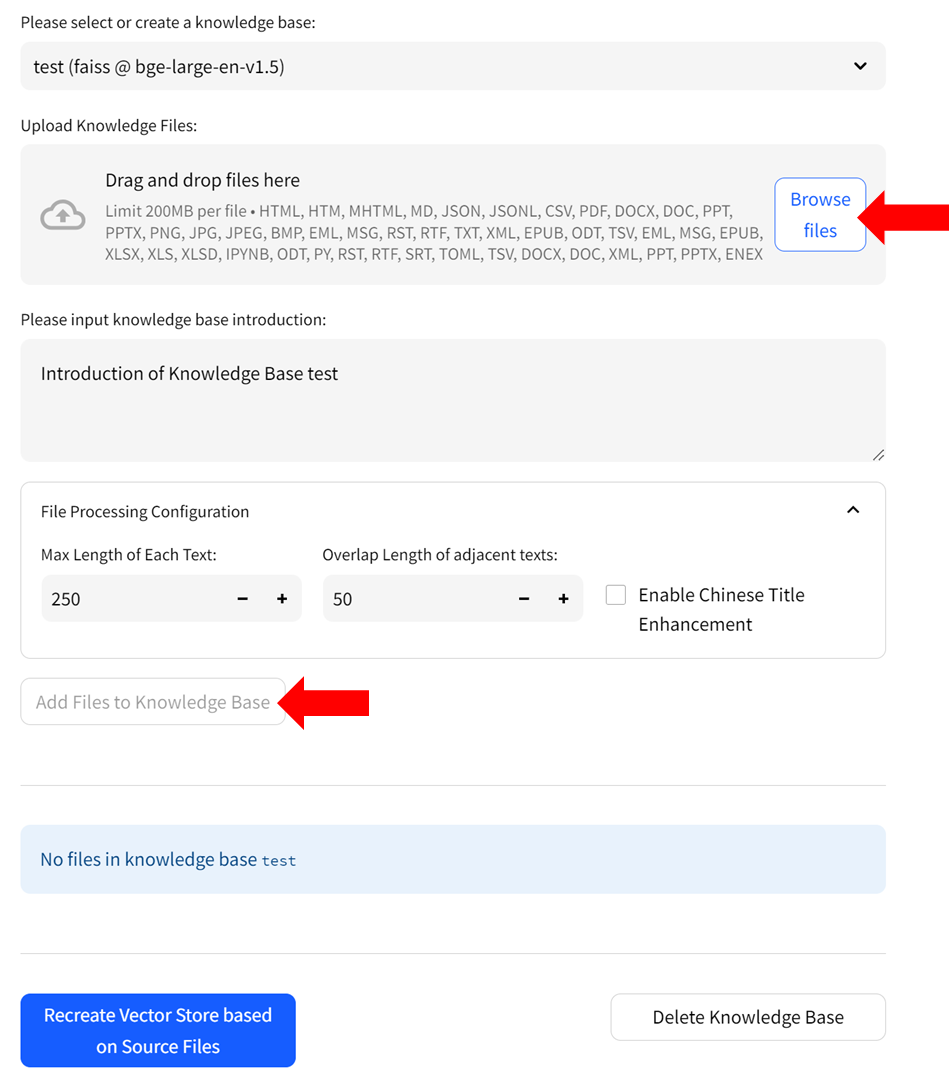

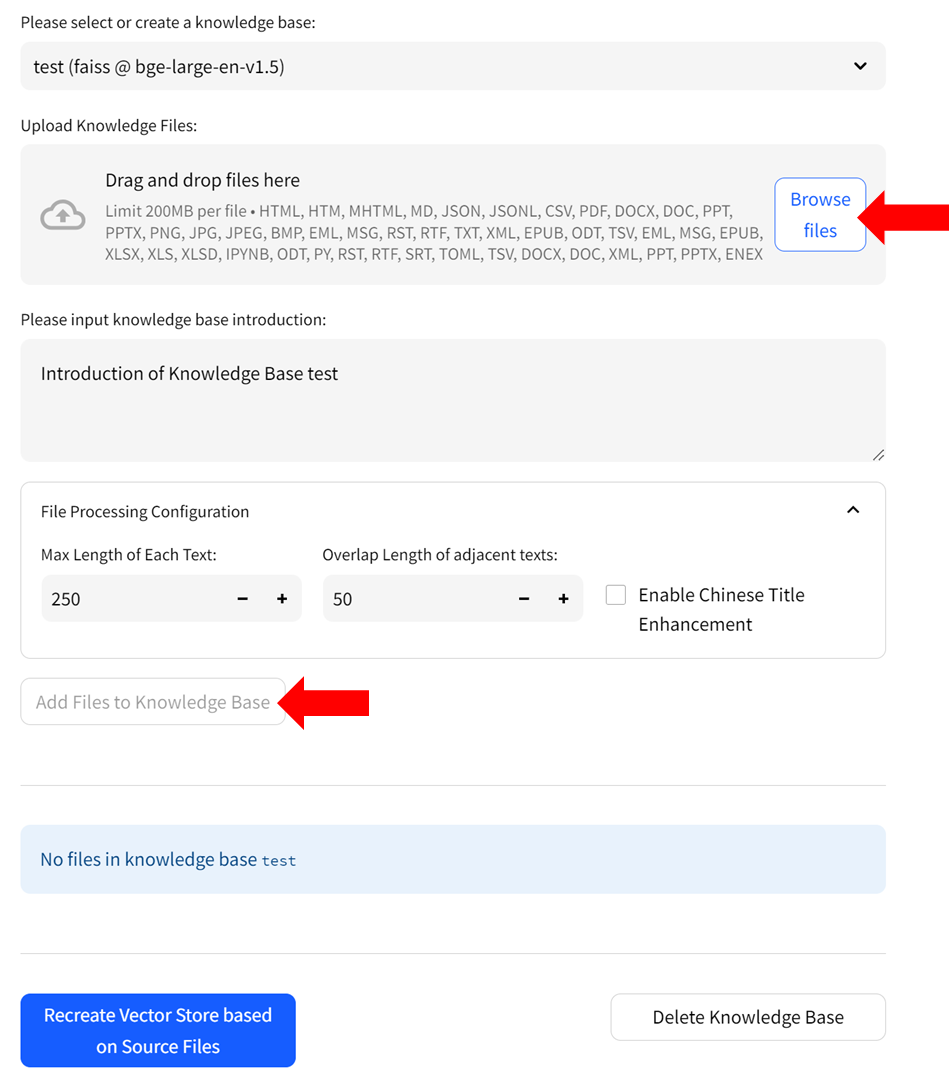

- Upload knowledge files from your computer and wait for the upload to complete. Once it finishes, click the `Add files to Knowledge Base` button to build the vector store. Note: this process may take several minutes.

-

+

+

+  +

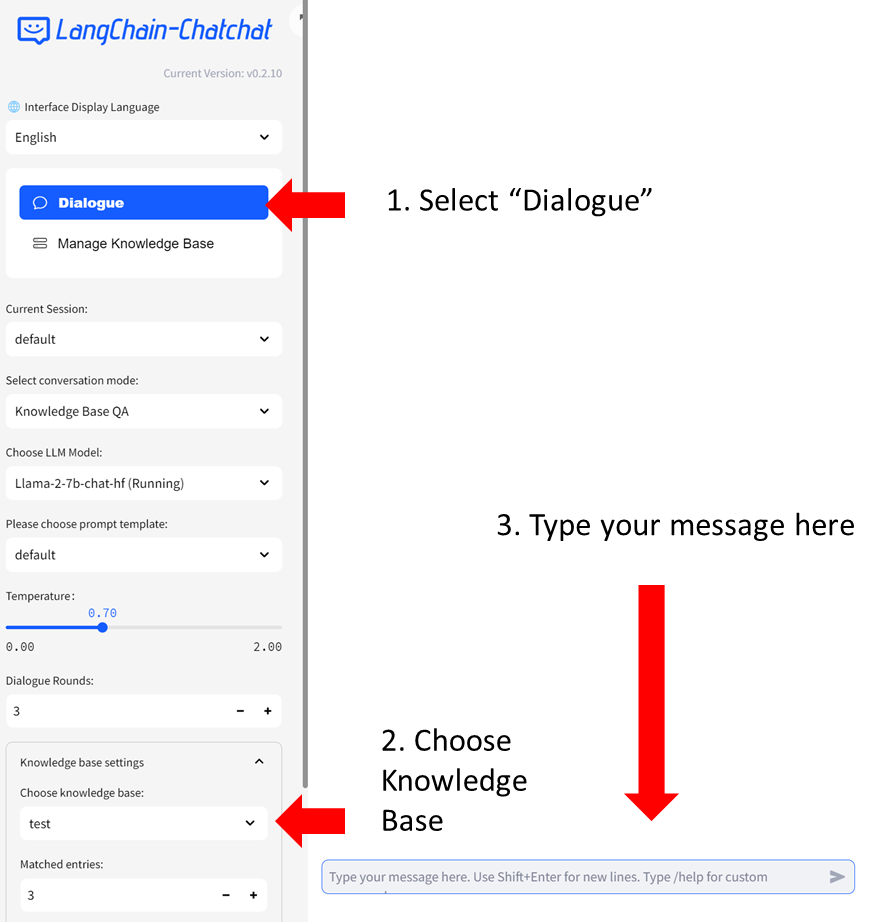

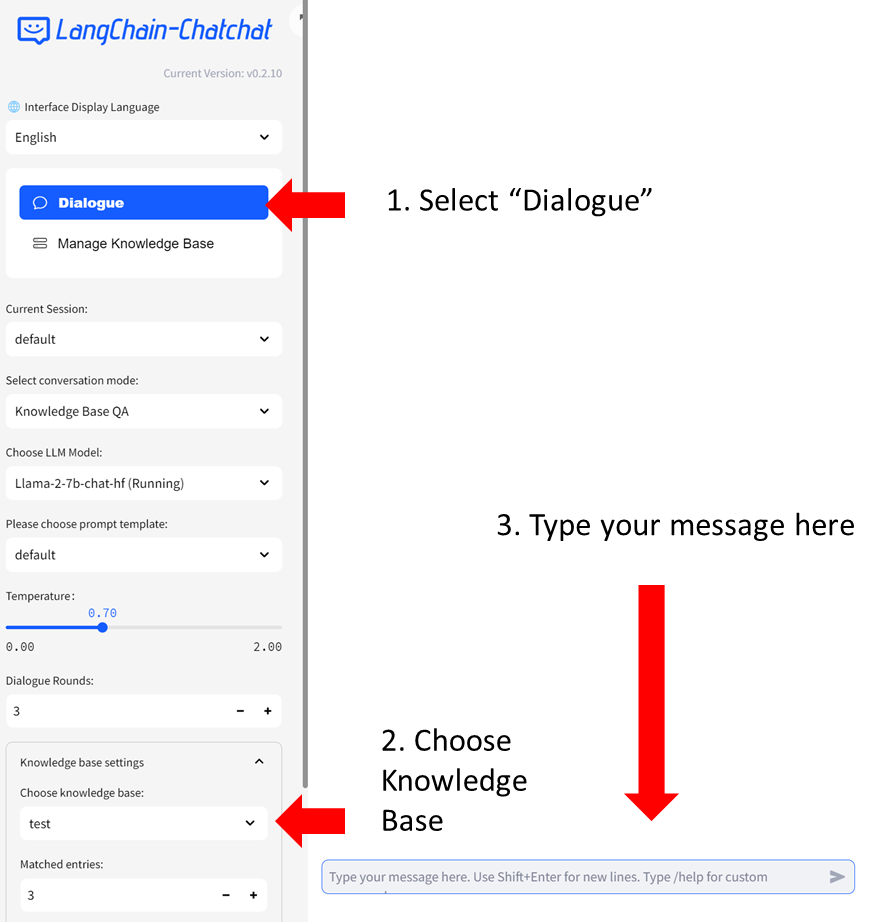

#### Step 2: Chat with RAG

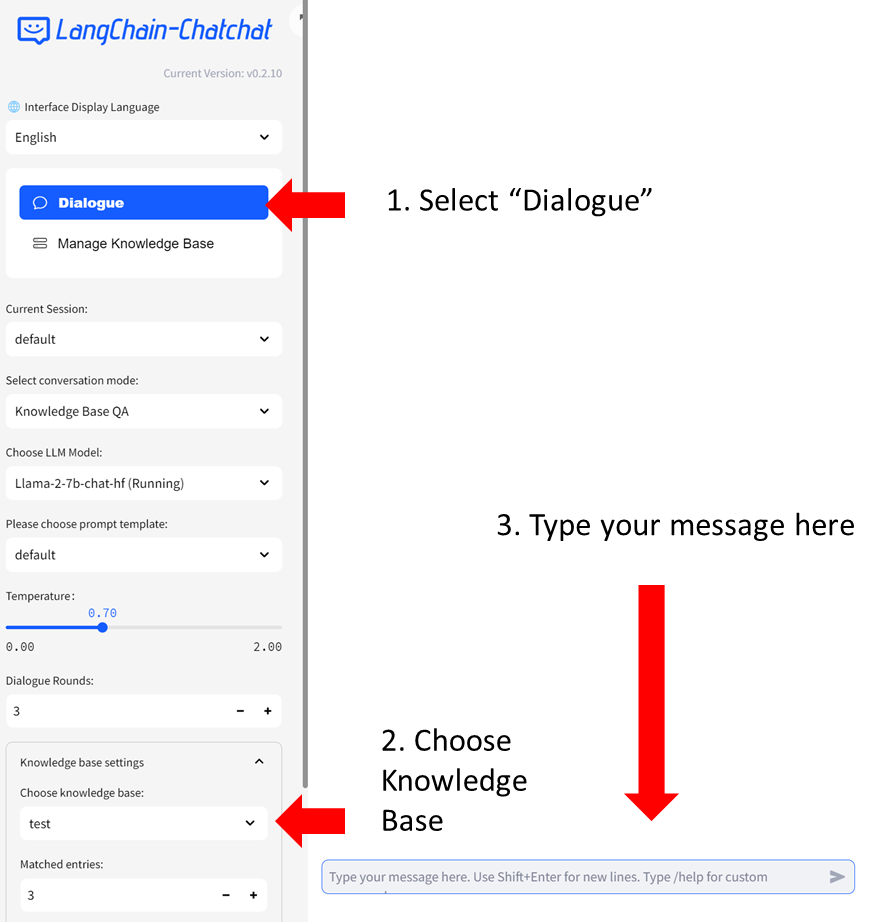

You can now click `Dialogue` on the left-side menu to return to the chat UI. Then, in the `Knowledge base settings` menu, choose the knowledge base you just created, e.g., "test". Now you can start chatting.

-

+

+  +

+

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md

index 51952f82..a71b82ea 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/dify_quickstart.md

@@ -125,32 +125,30 @@ Open your browser and access the Dify UI at `http://localhost:3000`.

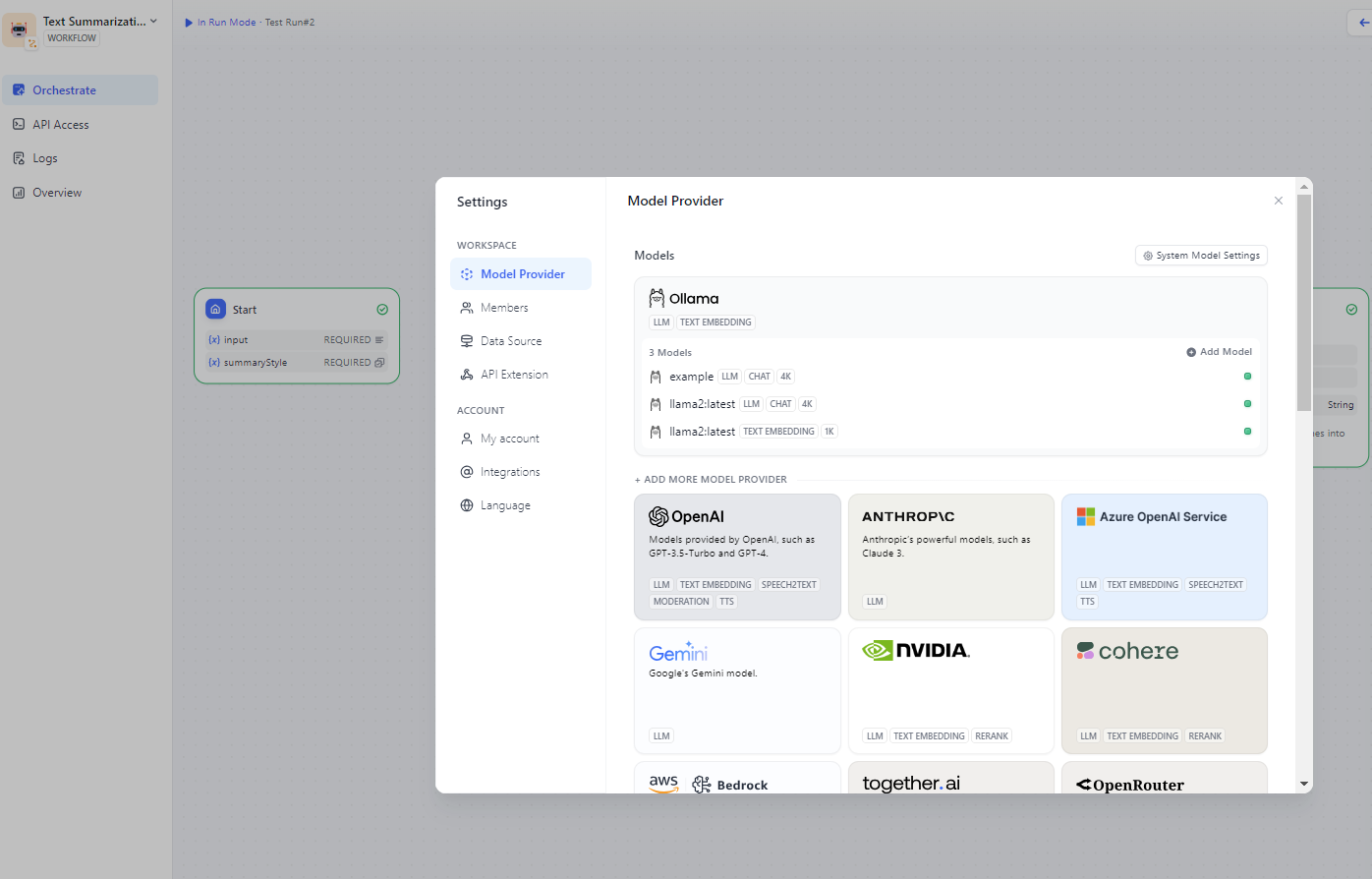

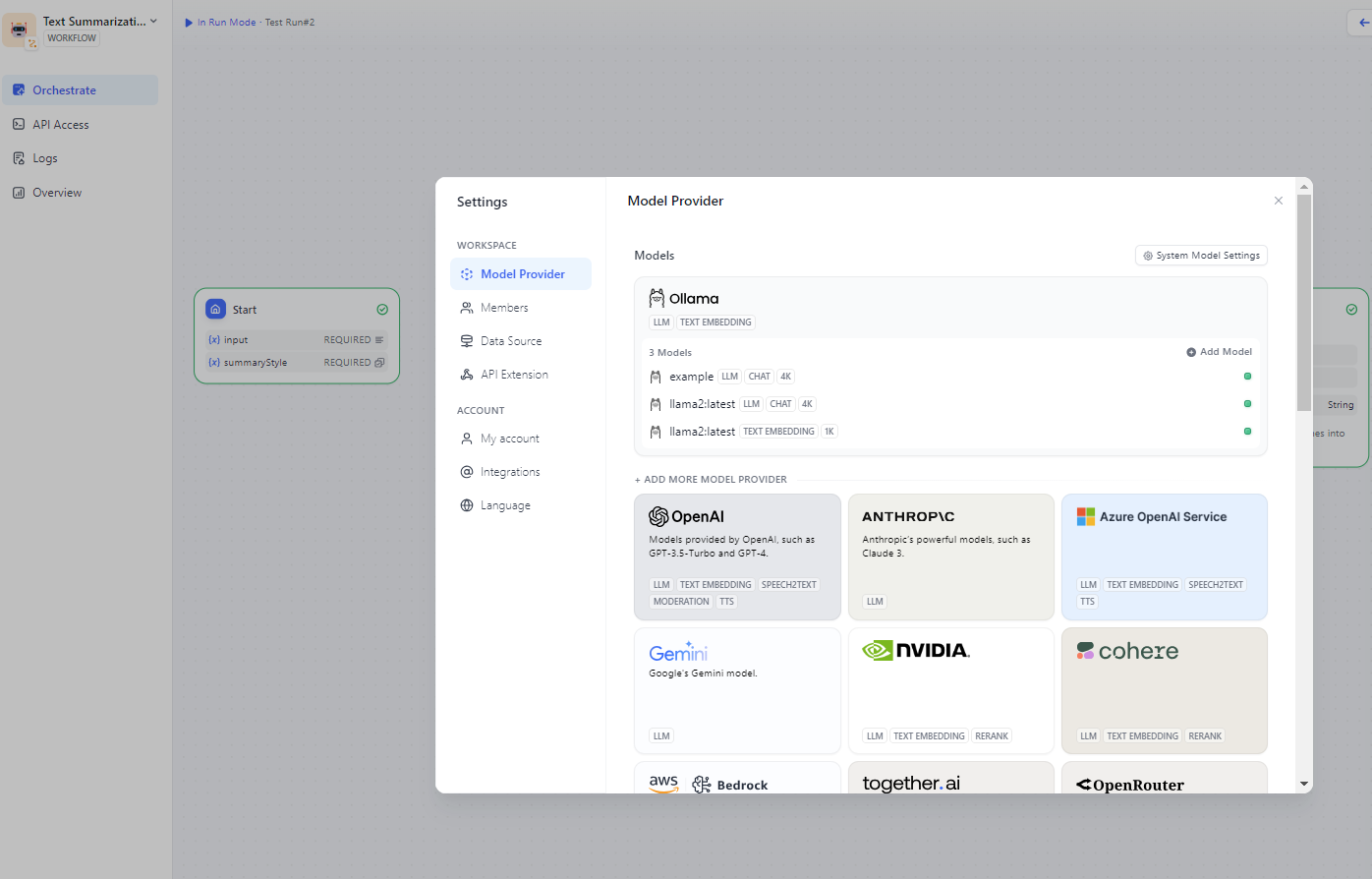

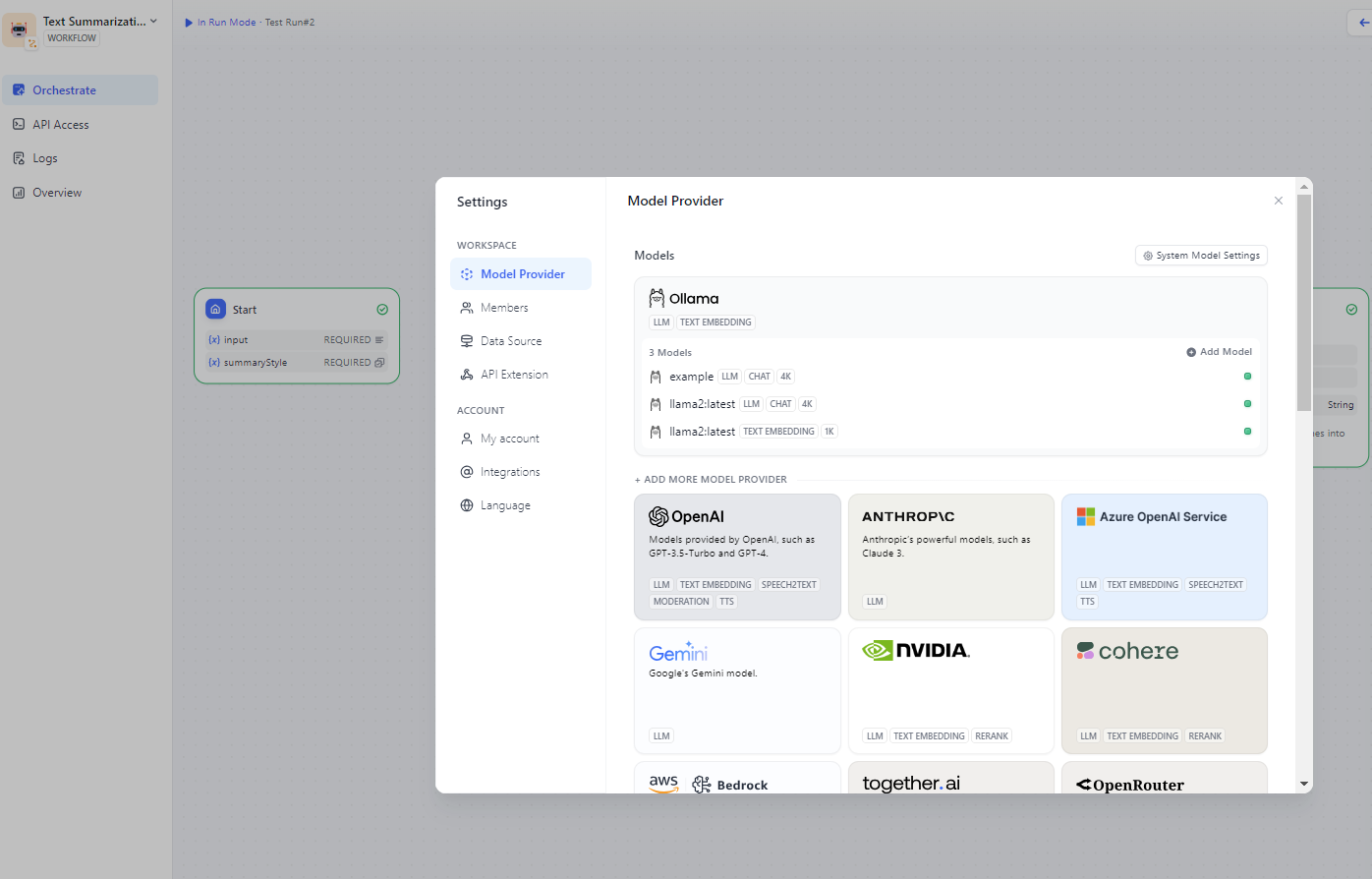

Configure the Ollama URL in `Settings > Model Providers > Ollama`. For detailed instructions on how to do this, see the [Ollama Guide in the Dify Documentation](https://docs.dify.ai/tutorials/model-configuration/ollama).
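Before entering the URL in Dify, it can help to confirm that the Ollama server is reachable and to see which models it reports. The following is a minimal sketch, not part of the original guide. It assumes Ollama is listening on its default local address `http://localhost:11434` (adjust this to your setup) and uses Ollama's `/api/tags` endpoint, which lists locally available models. The helper names `model_names` and `list_ollama_models` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it to match your deployment.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: str) -> list[str]:
    """Extract model names from the JSON body returned by Ollama's /api/tags."""
    data = json.loads(tags_payload)
    return [model["name"] for model in data.get("models", [])]


def list_ollama_models(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama server which models are available locally.

    Raises an OSError subclass (e.g. urllib.error.URLError) if the server
    cannot be reached.
    """
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return model_names(response.read().decode("utf-8"))
```

Calling `list_ollama_models()` on the machine that runs Ollama should return the names of the models you have pulled. If it raises a connection error, the URL you plan to give Dify is probably not reachable either.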

-

+

Once Ollama is successfully connected, you will see a list of Ollama models similar to the following:

+

+  +

+

-

-

-

-

-

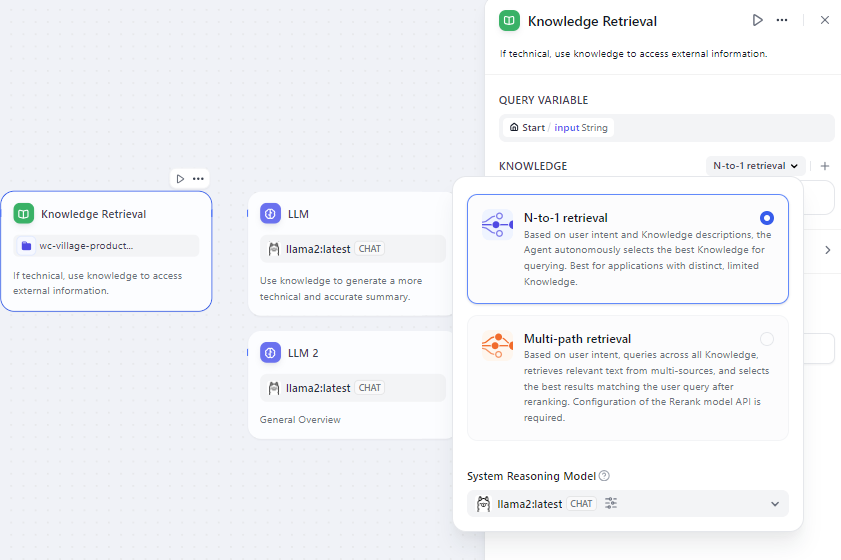

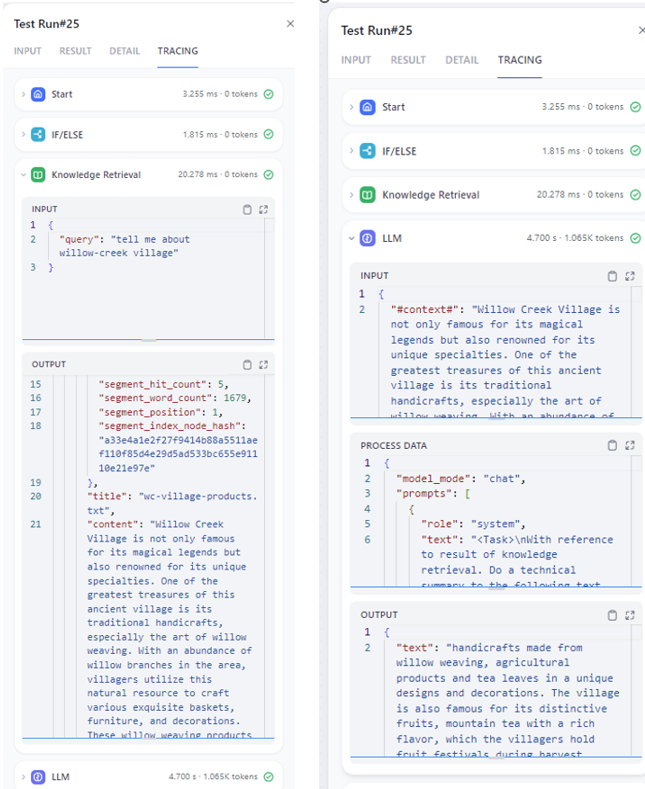

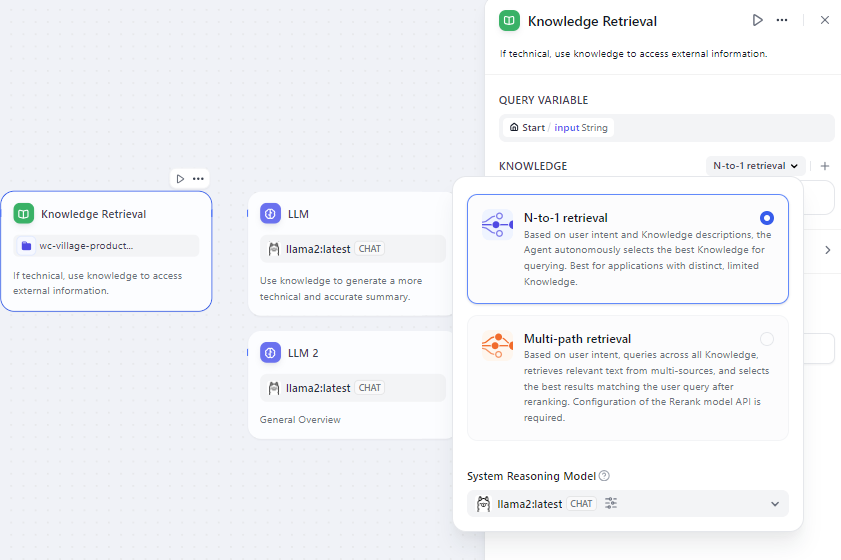

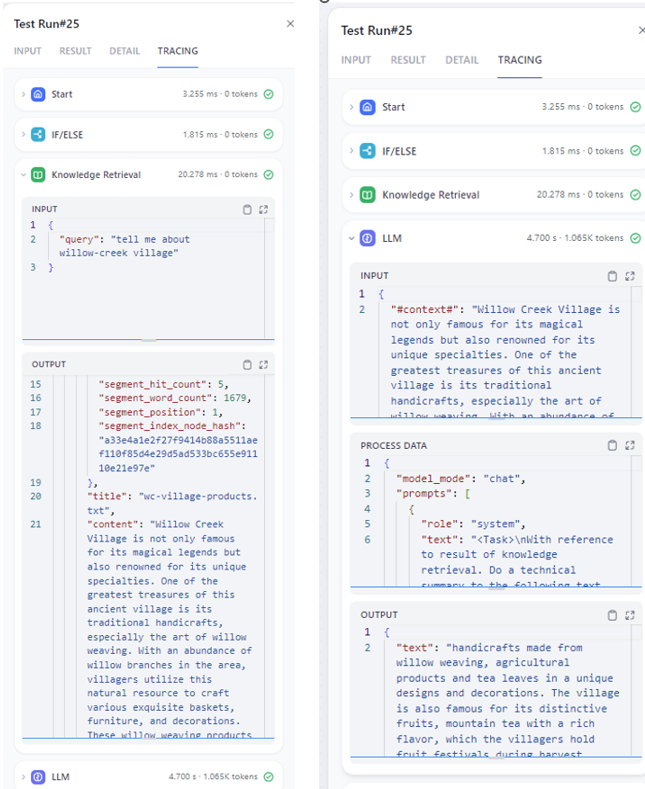

+  +

+

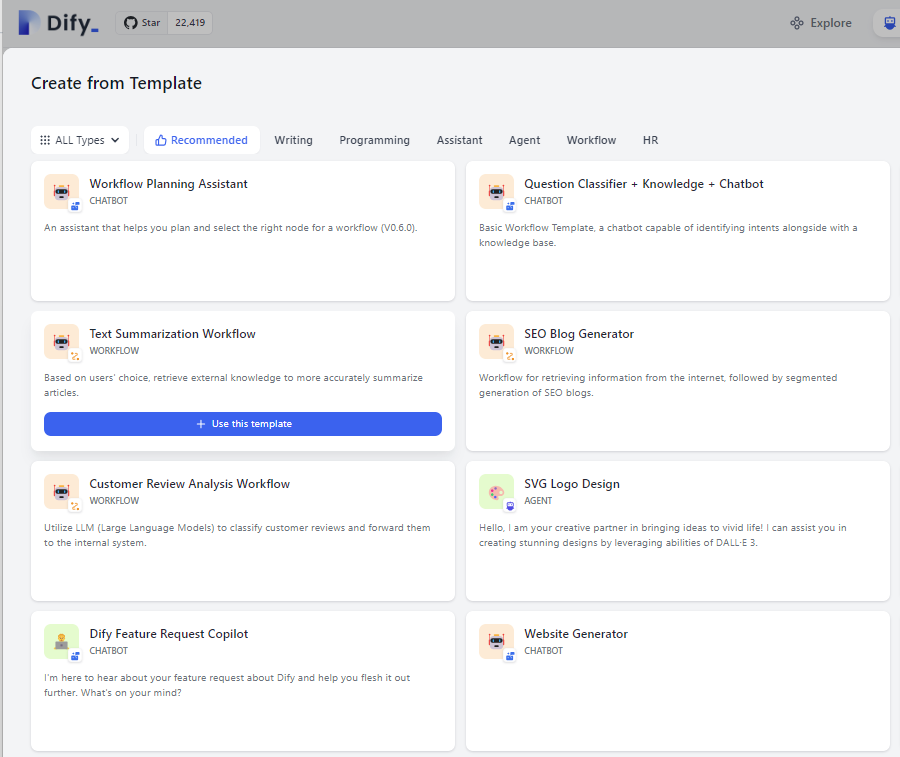

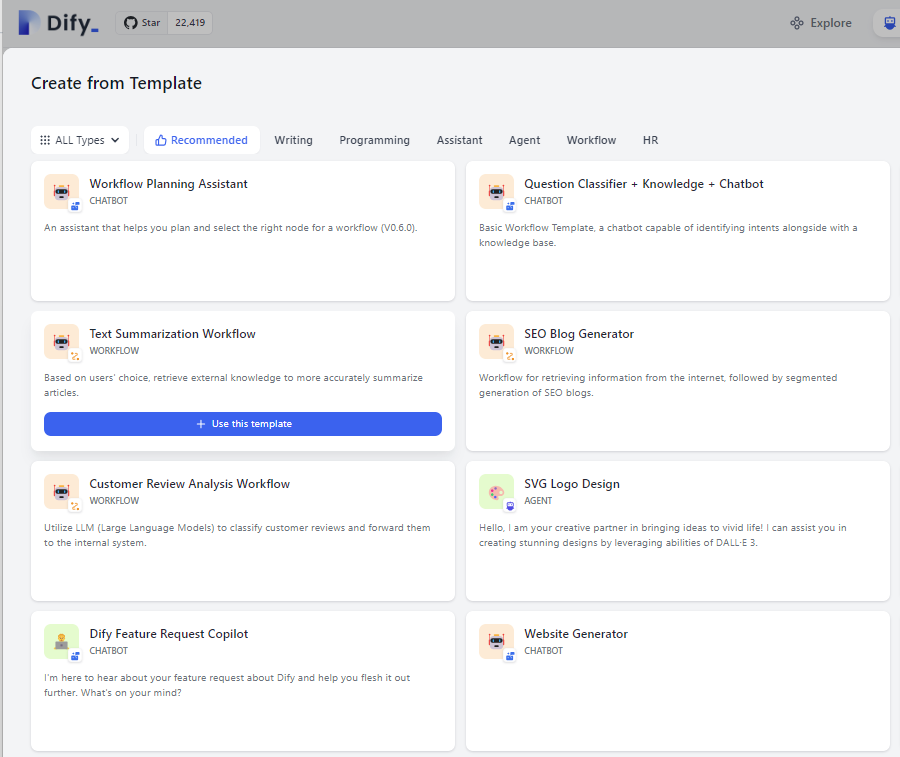

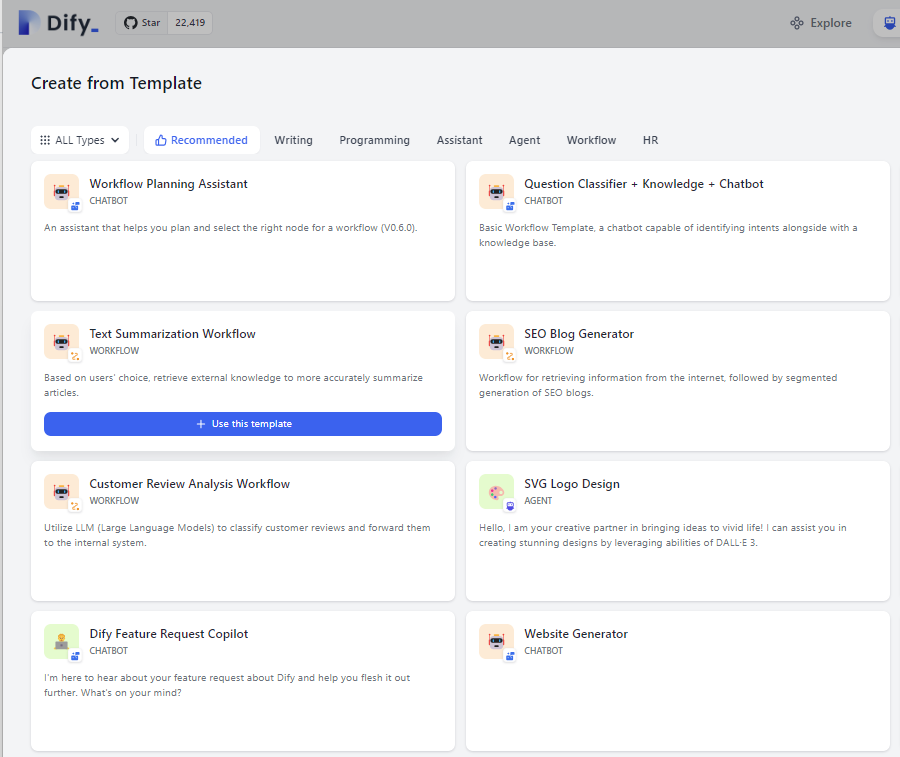

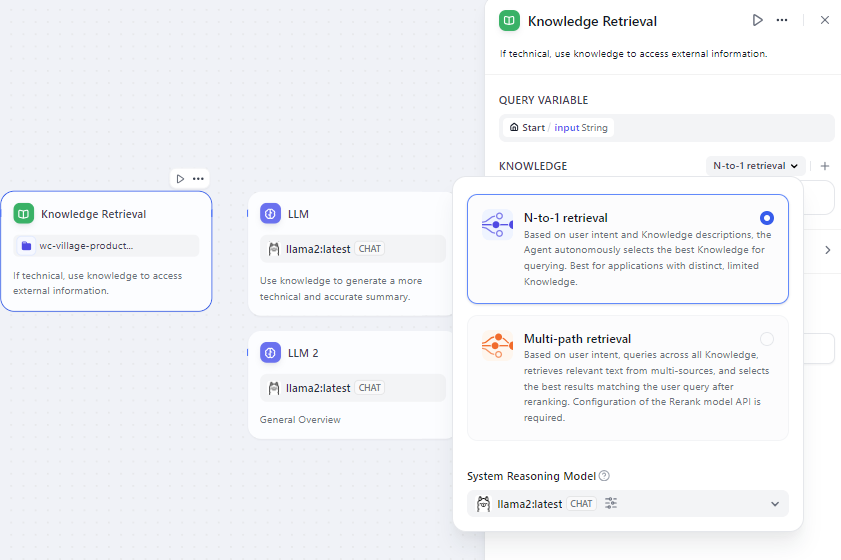

-2. Add a knowledge base and specify the LLM or embedding model to use.

-

-

-

-

-

+  +

+

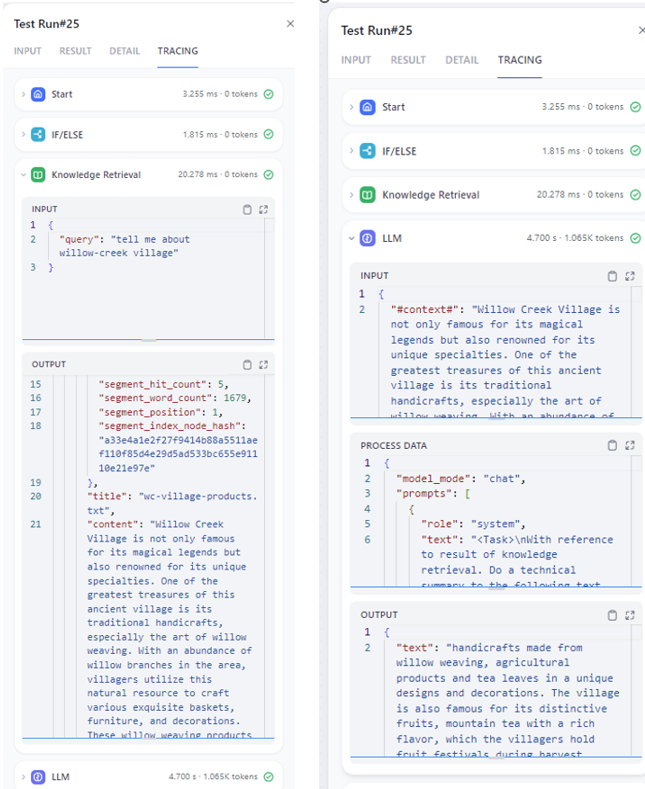

+- Enter your input in the workflow and execute it. You'll find retrieval results and generated answers on the right.

+

+ +

+

+

+


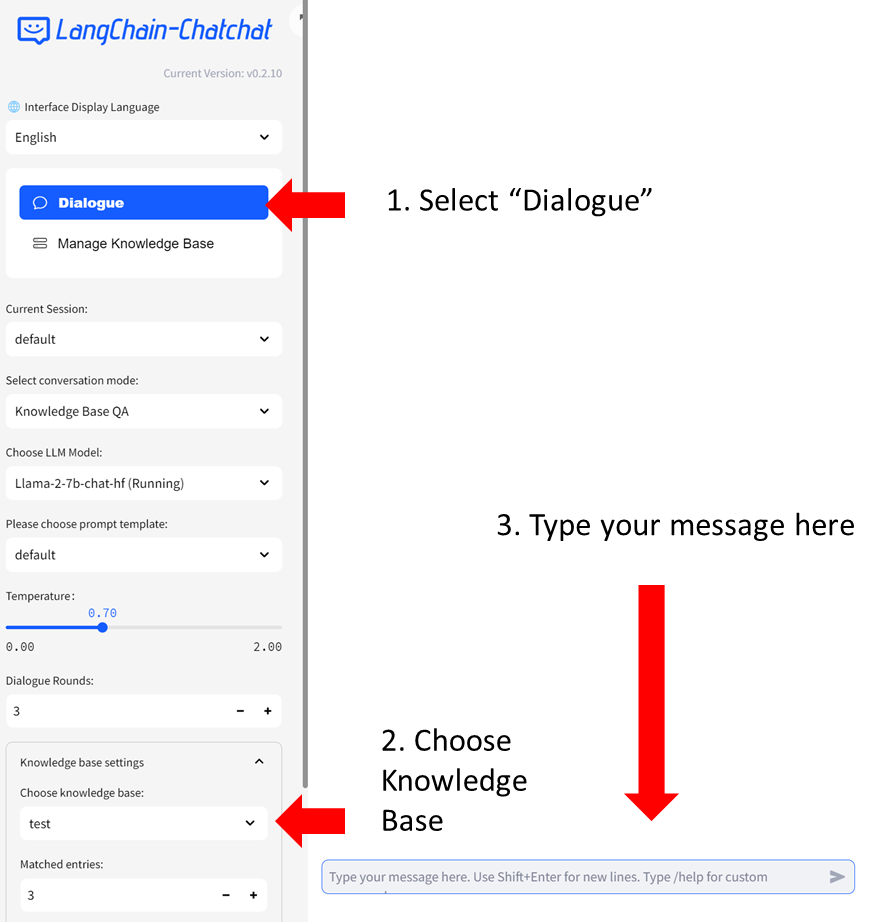

+

-

- -

- -

- -

-