Merge remote-tracking branch 'upstream/main'

This commit is contained in:

commit

a42c25436e

36 changed files with 320 additions and 501 deletions

14

.github/workflows/manually_build.yml

vendored

|

|

@ -30,7 +30,7 @@ on:

|

|||

- bigdl-ppml-trusted-realtime-ml-scala-graphene

|

||||

- bigdl-ppml-trusted-realtime-ml-scala-occlum

|

||||

- bigdl-ppml-trusted-bigdl-llm-tdx

|

||||

- bigdl-ppml-trusted-fastchat-tdx

|

||||

- bigdl-ppml-trusted-bigdl-llm-serving-tdx

|

||||

- bigdl-ppml-kmsutil

|

||||

- bigdl-ppml-pccs

|

||||

- bigdl-ppml-trusted-python-toolkit-base

|

||||

|

|

@ -839,21 +839,21 @@ jobs:

|

|||

docker push 10.239.45.10/arda/${image}:${TAG}

|

||||

docker rmi -f ${image}:${TAG} 10.239.45.10/arda/${image}:${TAG}

|

||||

|

||||

bigdl-ppml-trusted-fastchat-tdx:

|

||||

if: ${{ github.event.inputs.artifact == 'bigdl-ppml-trusted-fastchat-tdx' || github.event.inputs.artifact == 'all' }}

|

||||

bigdl-ppml-trusted-bigdl-llm-serving-tdx:

|

||||

if: ${{ github.event.inputs.artifact == 'bigdl-ppml-trusted-bigdl-llm-serving-tdx' || github.event.inputs.artifact == 'all' }}

|

||||

runs-on: [self-hosted, Shire]

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

- name: docker login

|

||||

run: |

|

||||

docker login -u ${DOCKERHUB_USERNAME} -p ${DOCKERHUB_PASSWORD}

|

||||

- name: bigdl-ppml-trusted-fastchat-tdx

|

||||

- name: bigdl-ppml-trusted-bigdl-llm-serving-tdx

|

||||

run: |

|

||||

echo "##############################################################"

|

||||

echo "############## bigdl-ppml-trusted-fastchat-tdx ###############"

|

||||

echo "############## bigdl-ppml-trusted-bigdl-llm-serving-tdx ###############"

|

||||

echo "##############################################################"

|

||||

export image=intelanalytics/bigdl-ppml-trusted-fastchat-tdx

|

||||

cd ppml/tdx/docker/trusted-bigdl-llm-fschat/

|

||||

export image=intelanalytics/bigdl-ppml-trusted-bigdl-llm-serving-tdx

|

||||

cd ppml/tdx/docker/trusted-bigdl-llm-serving-tdx/

|

||||

sudo docker build \

|

||||

--no-cache=true \

|

||||

--build-arg http_proxy=${HTTP_PROXY} \

|

||||

|

|

|

|||

|

|

@ -109,3 +109,4 @@ Example responce:

|

|||

```json

|

||||

{"quote_list":{"bigdl-lora-finetuning-job-worker-0":"BAACAIEAAAAAAA...","bigdl-lora-finetuning-job-worker-1":"BAACAIEAAAAAAA...","launcher":"BAACAIEAAAAAA..."}}

|

||||

```
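A client reading this response may want to separate usable quotes from failed ones. The following is a minimal sketch, not part of the documented API: the helper name `split_quotes` is hypothetical, and it assumes that a failed entry carries a string beginning with `quote generation failed` in place of a base64 quote.

```python
import json

# Assumed failure marker; adjust it if the service reports errors differently.
FAILURE_PREFIX = "quote generation failed"


def split_quotes(response_text):
    """Split the `quote_list` of a response into (ok, failed) dicts keyed by pod name."""
    quote_list = json.loads(response_text)["quote_list"]
    ok, failed = {}, {}
    for pod_name, value in quote_list.items():
        target = failed if value.startswith(FAILURE_PREFIX) else ok
        target[pod_name] = value
    return ok, failed
```

Entries in `ok` would still need to be base64-decoded and verified by an attestation service before they are trusted.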

|

||||

|

||||

|

|

|

|||

57

docker/llm/finetune/lora/cpu/README.md

Normal file

|

|

@ -0,0 +1,57 @@

|

|||

## Run BF16-Optimized Lora Finetuning on Kubernetes with OneCCL

|

||||

|

||||

[Alpaca Lora](https://github.com/tloen/alpaca-lora/tree/main) uses [low-rank adaptation](https://arxiv.org/pdf/2106.09685.pdf) to speed up the finetuning of the base model [Llama2-7b](https://huggingface.co/meta-llama/Llama-2-7b), and tries to reproduce the standard Alpaca, a general finetuned LLM. It is built on top of Hugging Face transformers with a PyTorch backend, which natively requires expensive GPU resources and takes significant time.

|

||||

|

||||

By contrast, BigDL here provides a CPU optimization that accelerates the Lora finetuning of Llama2-7b through mixed-precision and distributed training. Specifically, [Intel OneCCL](https://www.intel.com/content/www/us/en/developer/tools/oneapi/oneccl.html), an available Hugging Face backend, speeds up PyTorch computation with the BF16 datatype on CPUs, while [Intel MPI](https://www.intel.com/content/www/us/en/developer/tools/oneapi/mpi-library.html) enables parallel processing on Kubernetes.

|

||||

|

||||

The architecture is illustrated in the following:

|

||||

|

||||

|

||||

|

||||

As above, BigDL implements its MPI training with the [Kubeflow MPI operator](https://github.com/kubeflow/mpi-operator/tree/master), which encapsulates the deployment as an MPIJob CRD and helps users build an MPI worker cluster on Kubernetes, handling tasks such as public key distribution, SSH connection, and log collection.

|

||||

|

||||

Now, let's deploy a Lora finetuning job to create an LLM from Llama2-7b.

|

||||

|

||||

**Note: Please make sure you already have an available Kubernetes infrastructure and NFS shared storage, and have installed the [Helm CLI](https://helm.sh/docs/helm/helm_install/) for Kubernetes job submission.**

|

||||

|

||||

### 1. Install Kubeflow MPI Operator

|

||||

|

||||

Follow [here](https://github.com/kubeflow/mpi-operator/tree/master#installation) to install a Kubeflow MPI operator in your Kubernetes cluster, which will listen for and receive the MPIJob request submitted in the following steps.

|

||||

|

||||

### 2. Download Image, Base Model and Finetuning Data

|

||||

|

||||

Follow [here](https://github.com/intel-analytics/BigDL/tree/main/docker/llm/finetune/lora/docker#prepare-bigdl-image-for-lora-finetuning) to prepare BigDL Lora Finetuning image in your cluster.

|

||||

|

||||

As finetuning is from a base model, first download [Llama2-7b model from the public download site of Hugging Face](https://huggingface.co/meta-llama/Llama-2-7b). Then, download [cleaned alpaca data](https://raw.githubusercontent.com/tloen/alpaca-lora/main/alpaca_data_cleaned_archive.json), which contains all kinds of general knowledge and has already been cleaned. Next, move the downloaded files to a shared directory on your NFS server.

|

||||

|

||||

### 3. Deploy through Helm Chart

|

||||

|

||||

You can edit and experiment with different parameters in `./kubernetes/values.yaml` to improve finetuning performance and accuracy. For example, you can adjust `trainerNum` and `cpuPerPod` according to the number of nodes and CPU cores in your cluster to make full use of these resources, and different values of `microBatchSize` result in different training speed and loss (note that `microBatchSize`×`trainerNum` should not exceed 128, as that is the batch size).
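The batch size constraint above can be checked before submitting the job. The sketch below is only an illustration: the function name `check_batch_settings` is hypothetical, and the limit of 128 is taken from the sentence above rather than read from the training script.

```python
def check_batch_settings(trainer_num, micro_batch_size, batch_size=128):
    """Return trainerNum * microBatchSize, or raise if it exceeds the batch size."""
    samples_per_step = trainer_num * micro_batch_size
    if samples_per_step > batch_size:
        raise ValueError(
            f"microBatchSize x trainerNum = {samples_per_step} exceeds batch size {batch_size}"
        )
    return samples_per_step
```

With the values used in this chart (`trainerNum: 8`, `microBatchSize: 8`), the product is 64, which stays within the limit.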

|

||||

|

||||

**Note: `dataSubPath` and `modelSubPath` need to have the same names as files under the NFS directory in step 2.**
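A quick way to catch a mismatch between these sub-paths and the NFS directory is to check them on a machine where the share is mounted. This is a sketch under assumptions: `missing_sub_paths` is a hypothetical helper, and the mount point you pass in is whatever local path your NFS share uses.

```python
from pathlib import Path


def missing_sub_paths(nfs_root, *sub_paths):
    """Return the sub-paths that do not exist under the mounted NFS directory."""
    root = Path(nfs_root)
    return [sub for sub in sub_paths if not (root / sub).exists()]
```

For example, calling it with the `dataSubPath` and `modelSubPath` values from `values.yaml` should return an empty list before you run `helm install`.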

|

||||

|

||||

After preparing parameters in `./kubernetes/values.yaml`, submit the job as below:

|

||||

|

||||

```bash

|

||||

cd ./kubernetes

|

||||

helm install bigdl-lora-finetuning .

|

||||

```

|

||||

|

||||

### 4. Check Deployment

|

||||

```bash

|

||||

kubectl get all -n bigdl-lora-finetuning # you will see launcher and worker pods running

|

||||

```

|

||||

|

||||

### 5. Check Finetuning Process

|

||||

|

||||

After deploying successfully, you can find the launcher pod, enter it, and check the logs collected from all workers.

|

||||

|

||||

```bash

|

||||

kubectl get all -n bigdl-lora-finetuning # you will see a launcher pod

|

||||

kubectl exec -it <launcher_pod_name> -n bigdl-lora-finetuning -- bash # enter launcher pod

|

||||

cat launcher.log # display logs collected from other workers

|

||||

```

|

||||

|

||||

From the log, you can see whether the finetuning process has been invoked successfully in all MPI worker pods. A progress bar with finetuning speed and estimated time will be shown after some data preprocessing steps (this may take quite a while).

|

||||

|

||||

The fine-tuned model is written by worker 0 (which holds rank 0), so you can find the model output inside that pod and save it to the host with command-line tools like `kubectl cp` or `scp`.
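Copying the output out of worker 0 can be scripted. The sketch below only builds the `kubectl cp` argument list. The helper name `kubectl_cp_command` is hypothetical, the pod name follows the `<job-name>-worker-0` pattern used by this chart, and the remote path is a placeholder, since the output directory inside the pod is not specified here.

```python
def kubectl_cp_command(pod, remote_path, local_path, namespace="bigdl-lora-finetuning"):
    """Build the argv for `kubectl cp <namespace>/<pod>:<remote_path> <local_path>`."""
    return ["kubectl", "cp", f"{namespace}/{pod}:{remote_path}", local_path]


# Example with a placeholder output path; replace it with the real one in your pod.
command = kubectl_cp_command(
    "bigdl-lora-finetuning-job-worker-0", "/path/to/model/output", "./finetuned-model"
)
```

The resulting list can be passed to `subprocess.run(command, check=True)` on a machine with cluster access.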

|

||||

52

docker/llm/finetune/lora/cpu/docker/Dockerfile

Normal file

|

|

@ -0,0 +1,52 @@

|

|||

ARG http_proxy

|

||||

ARG https_proxy

|

||||

|

||||

FROM mpioperator/intel as builder

|

||||

|

||||

ARG http_proxy

|

||||

ARG https_proxy

|

||||

ENV PIP_NO_CACHE_DIR=false

|

||||

ADD ./requirements.txt /ppml/requirements.txt

|

||||

|

||||

RUN mkdir /ppml/data && mkdir /ppml/model && \

|

||||

# install pytorch 2.0.1

|

||||

apt-get update && \

|

||||

apt-get install -y python3-pip python3.9-dev python3-wheel git software-properties-common && \

|

||||

pip3 install --upgrade pip && \

|

||||

pip install torch==2.0.1 && \

|

||||

# install ipex and oneccl

|

||||

pip install intel_extension_for_pytorch==2.0.100 && \

|

||||

pip install oneccl_bind_pt -f https://developer.intel.com/ipex-whl-stable && \

|

||||

# install transformers etc.

|

||||

cd /ppml && \

|

||||

git clone https://github.com/huggingface/transformers.git && \

|

||||

cd transformers && \

|

||||

git reset --hard 057e1d74733f52817dc05b673a340b4e3ebea08c && \

|

||||

pip install . && \

|

||||

pip install -r /ppml/requirements.txt && \

|

||||

# install python

|

||||

add-apt-repository ppa:deadsnakes/ppa -y && \

|

||||

apt-get install -y python3.9 && \

|

||||

rm /usr/bin/python3 && \

|

||||

ln -s /usr/bin/python3.9 /usr/bin/python3 && \

|

||||

ln -s /usr/bin/python3 /usr/bin/python && \

|

||||

pip install --no-cache requests argparse cryptography==3.3.2 urllib3 && \

|

||||

pip install --upgrade requests && \

|

||||

pip install setuptools==58.4.0 && \

|

||||

# Install OpenSSH for MPI to communicate between containers

|

||||

apt-get install -y --no-install-recommends openssh-client openssh-server && \

|

||||

mkdir -p /var/run/sshd && \

|

||||

# Allow OpenSSH to talk to containers without asking for confirmation

|

||||

# by disabling StrictHostKeyChecking.

|

||||

# mpi-operator mounts the .ssh folder from a Secret. For that to work, we need

|

||||

# to disable UserKnownHostsFile to avoid write permissions.

|

||||

# Disabling StrictModes avoids directory and files read permission checks.

|

||||

sed -i 's/[ #]\(.*StrictHostKeyChecking \).*/ \1no/g' /etc/ssh/ssh_config && \

|

||||

echo " UserKnownHostsFile /dev/null" >> /etc/ssh/ssh_config && \

|

||||

sed -i 's/#\(StrictModes \).*/\1no/g' /etc/ssh/sshd_config

|

||||

|

||||

ADD ./bigdl-lora-finetuing-entrypoint.sh /ppml/bigdl-lora-finetuing-entrypoint.sh

|

||||

ADD ./lora_finetune.py /ppml/lora_finetune.py

|

||||

|

||||

RUN chown -R mpiuser /ppml

|

||||

USER mpiuser

|

||||

|

|

@ -3,7 +3,7 @@

|

|||

You can download the image directly from Docker Hub:

|

||||

|

||||

```bash

|

||||

docker pull intelanalytics/bigdl-lora-finetuning:2.4.0-SNAPSHOT

|

||||

docker pull intelanalytics/bigdl-llm-finetune-cpu:2.4.0-SNAPSHOT

|

||||

```

|

||||

|

||||

Or build the image from source:

|

||||

|

|

@ -13,8 +13,8 @@ export HTTP_PROXY=your_http_proxy

|

|||

export HTTPS_PROXY=your_https_proxy

|

||||

|

||||

docker build \

|

||||

--build-arg HTTP_PROXY=${HTTP_PROXY} \

|

||||

--build-arg HTTPS_PROXY=${HTTPS_PROXY} \

|

||||

-t intelanalytics/bigdl-lora-finetuning:2.4.0-SNAPSHOT \

|

||||

--build-arg http_proxy=${HTTP_PROXY} \

|

||||

--build-arg https_proxy=${HTTPS_PROXY} \

|

||||

-t intelanalytics/bigdl-llm-finetune-cpu:2.4.0-SNAPSHOT \

|

||||

-f ./Dockerfile .

|

||||

```

|

||||

|

|

@ -1,4 +1,3 @@

|

|||

{{- if eq .Values.TEEMode "native" }}

|

||||

apiVersion: kubeflow.org/v2beta1

|

||||

kind: MPIJob

|

||||

metadata:

|

||||

|

|

@ -90,4 +89,3 @@ spec:

|

|||

- name: nfs-storage

|

||||

persistentVolumeClaim:

|

||||

claimName: nfs-pvc

|

||||

{{- end }}

|

||||

|

|

@ -1,15 +1,9 @@

|

|||

imageName: intelanalytics/bigdl-lora-finetuning:2.4.0-SNAPSHOT

|

||||

imageName: intelanalytics/bigdl-llm-finetune-cpu:2.4.0-SNAPSHOT

|

||||

trainerNum: 8

|

||||

microBatchSize: 8

|

||||

TEEMode: tdx # tdx or native

|

||||

nfsServerIp: your_nfs_server_ip

|

||||

nfsPath: a_nfs_shared_folder_path_on_the_server

|

||||

dataSubPath: alpaca_data_cleaned_archive.json # a subpath of the data file under nfs directory

|

||||

modelSubPath: llama-7b-hf # a subpath of the model file (dir) under nfs directory

|

||||

ompNumThreads: 14

|

||||

cpuPerPod: 42

|

||||

attestionApiServicePort: 9870

|

||||

|

||||

enableTLS: false # true or false

|

||||

base64ServerCrt: "your_base64_format_server_crt"

|

||||

base64ServerKey: "your_base64_format_server_key"

|

||||

|

|

@ -1,83 +0,0 @@

|

|||

ARG HTTP_PROXY

|

||||

ARG HTTPS_PROXY

|

||||

|

||||

FROM mpioperator/intel as builder

|

||||

|

||||

ARG HTTP_PROXY

|

||||

ARG HTTPS_PROXY

|

||||

ADD ./requirements.txt /ppml/requirements.txt

|

||||

|

||||

RUN mkdir /ppml/data && mkdir /ppml/model && mkdir /ppml/output && \

|

||||

# install pytorch 2.0.1

|

||||

export http_proxy=$HTTP_PROXY && \

|

||||

export https_proxy=$HTTPS_PROXY && \

|

||||

apt-get update && \

|

||||

# Basic dependencies and DCAP

|

||||

apt-get update && \

|

||||

apt install -y build-essential apt-utils wget git sudo vim && \

|

||||

mkdir -p /opt/intel/ && \

|

||||

cd /opt/intel && \

|

||||

wget https://download.01.org/intel-sgx/sgx-dcap/1.16/linux/distro/ubuntu20.04-server/sgx_linux_x64_sdk_2.19.100.3.bin && \

|

||||

chmod a+x ./sgx_linux_x64_sdk_2.19.100.3.bin && \

|

||||

printf "no\n/opt/intel\n"|./sgx_linux_x64_sdk_2.19.100.3.bin && \

|

||||

. /opt/intel/sgxsdk/environment && \

|

||||

cd /opt/intel && \

|

||||

wget https://download.01.org/intel-sgx/sgx-dcap/1.16/linux/distro/ubuntu20.04-server/sgx_debian_local_repo.tgz && \

|

||||

tar xzf sgx_debian_local_repo.tgz && \

|

||||

echo 'deb [trusted=yes arch=amd64] file:///opt/intel/sgx_debian_local_repo focal main' | tee /etc/apt/sources.list.d/intel-sgx.list && \

|

||||

wget -qO - https://download.01.org/intel-sgx/sgx_repo/ubuntu/intel-sgx-deb.key | apt-key add - && \

|

||||

env DEBIAN_FRONTEND=noninteractive apt-get update && apt install -y libsgx-enclave-common-dev libsgx-qe3-logic libsgx-pce-logic libsgx-ae-qe3 libsgx-ae-qve libsgx-urts libsgx-dcap-ql libsgx-dcap-default-qpl libsgx-dcap-quote-verify-dev libsgx-dcap-ql-dev libsgx-dcap-default-qpl-dev libsgx-ra-network libsgx-ra-uefi libtdx-attest libtdx-attest-dev && \

|

||||

apt-get install -y python3-pip python3.9-dev python3-wheel && \

|

||||

pip3 install --upgrade pip && \

|

||||

pip install torch==2.0.1 && \

|

||||

# install ipex and oneccl

|

||||

pip install intel_extension_for_pytorch==2.0.100 && \

|

||||

pip install oneccl_bind_pt -f https://developer.intel.com/ipex-whl-stable && \

|

||||

# install transformers etc.

|

||||

cd /ppml && \

|

||||

apt-get update && \

|

||||

apt-get install -y git && \

|

||||

git clone https://github.com/huggingface/transformers.git && \

|

||||

cd transformers && \

|

||||

git reset --hard 057e1d74733f52817dc05b673a340b4e3ebea08c && \

|

||||

pip install . && \

|

||||

pip install -r /ppml/requirements.txt && \

|

||||

# install python

|

||||

env DEBIAN_FRONTEND=noninteractive apt-get update && \

|

||||

apt install software-properties-common -y && \

|

||||

add-apt-repository ppa:deadsnakes/ppa -y && \

|

||||

apt-get install -y python3.9 && \

|

||||

rm /usr/bin/python3 && \

|

||||

ln -s /usr/bin/python3.9 /usr/bin/python3 && \

|

||||

ln -s /usr/bin/python3 /usr/bin/python && \

|

||||

apt-get install -y python3-pip python3.9-dev python3-wheel && \

|

||||

pip install --upgrade pip && \

|

||||

pip install --no-cache requests argparse cryptography==3.3.2 urllib3 && \

|

||||

pip install --upgrade requests && \

|

||||

pip install setuptools==58.4.0 && \

|

||||

# Install OpenSSH for MPI to communicate between containers

|

||||

apt-get install -y --no-install-recommends openssh-client openssh-server && \

|

||||

mkdir -p /var/run/sshd && \

|

||||

# Allow OpenSSH to talk to containers without asking for confirmation

|

||||

# by disabling StrictHostKeyChecking.

|

||||

# mpi-operator mounts the .ssh folder from a Secret. For that to work, we need

|

||||

# to disable UserKnownHostsFile to avoid write permissions.

|

||||

# Disabling StrictModes avoids directory and files read permission checks.

|

||||

sed -i 's/[ #]\(.*StrictHostKeyChecking \).*/ \1no/g' /etc/ssh/ssh_config && \

|

||||

echo " UserKnownHostsFile /dev/null" >> /etc/ssh/ssh_config && \

|

||||

sed -i 's/#\(StrictModes \).*/\1no/g' /etc/ssh/sshd_config && \

|

||||

echo 'port=4050' | tee /etc/tdx-attest.conf && \

|

||||

pip install flask && \

|

||||

echo "mpiuser ALL = NOPASSWD:SETENV: /opt/intel/oneapi/mpi/2021.9.0/bin/mpirun\nmpiuser ALL = NOPASSWD:SETENV: /usr/bin/python" > /etc/sudoers.d/mpivisudo && \

|

||||

chmod 440 /etc/sudoers.d/mpivisudo

|

||||

|

||||

ADD ./bigdl_aa.py /ppml/bigdl_aa.py

|

||||

ADD ./quote_generator.py /ppml/quote_generator.py

|

||||

ADD ./worker_quote_generate.py /ppml/worker_quote_generate.py

|

||||

ADD ./get_worker_quote.sh /ppml/get_worker_quote.sh

|

||||

|

||||

ADD ./bigdl-lora-finetuing-entrypoint.sh /ppml/bigdl-lora-finetuing-entrypoint.sh

|

||||

ADD ./lora_finetune.py /ppml/lora_finetune.py

|

||||

|

||||

RUN chown -R mpiuser /ppml

|

||||

USER mpiuser

|

||||

|

|

@ -1,58 +0,0 @@

|

|||

import quote_generator

|

||||

from flask import Flask, request

|

||||

from configparser import ConfigParser

|

||||

import ssl, os

|

||||

import base64

|

||||

import requests

|

||||

import subprocess

|

||||

|

||||

app = Flask(__name__)

|

||||

|

||||

@app.route('/gen_quote', methods=['POST'])

|

||||

def gen_quote():

|

||||

data = request.get_json()

|

||||

user_report_data = data.get('user_report_data')

|

||||

try:

|

||||

quote_b = quote_generator.generate_tdx_quote(user_report_data)

|

||||

quote = base64.b64encode(quote_b).decode('utf-8')

|

||||

return {'quote': quote}

|

||||

except Exception as e:

|

||||

return {'quote': "quote generation failed: %s" % (e)}

|

||||

|

||||

@app.route('/attest', methods=['POST'])

|

||||

def get_cluster_quote_list():

|

||||

data = request.get_json()

|

||||

user_report_data = data.get('user_report_data')

|

||||

quote_list = []

|

||||

|

||||

try:

|

||||

quote_b = quote_generator.generate_tdx_quote(user_report_data)

|

||||

quote = base64.b64encode(quote_b).decode("utf-8")

|

||||

quote_list.append(("launcher", quote))

|

||||

except Exception as e:

|

||||

quote_list.append(("launcher", "quote generation failed: %s" % (e)))

|

||||

|

||||

command = "sudo -u mpiuser -E bash /ppml/get_worker_quote.sh %s" % (user_report_data)

|

||||

output = subprocess.check_output(command, shell=True)

|

||||

|

||||

with open("/ppml/output/quote.log", "r") as quote_file:

|

||||

for line in quote_file:

|

||||

line = line.strip()

|

||||

if line:

|

||||

parts = line.split(":")

|

||||

if len(parts) == 2:

|

||||

quote_list.append((parts[0].strip(), parts[1].strip()))

|

||||

return {"quote_list": dict(quote_list)}

|

||||

|

||||

if __name__ == '__main__':

|

||||

print("BigDL-AA: Agent Started.")

|

||||

port = int(os.environ.get('ATTESTATION_API_SERVICE_PORT'))

|

||||

enable_tls = os.environ.get('ENABLE_TLS')

|

||||

if enable_tls == 'true':

|

||||

context = ssl.SSLContext(ssl.PROTOCOL_TLS)

|

||||

context.load_cert_chain(certfile='/ppml/keys/server.crt', keyfile='/ppml/keys/server.key')

|

||||

# https_key_store_token = os.environ.get('HTTPS_KEY_STORE_TOKEN')

|

||||

# context.load_cert_chain(certfile='/ppml/keys/server.crt', keyfile='/ppml/keys/server.key', password=https_key_store_token)

|

||||

app.run(host='0.0.0.0', port=port, ssl_context=context)

|

||||

else:

|

||||

app.run(host='0.0.0.0', port=port)

|

||||

|

|

@ -1,17 +0,0 @@

|

|||

#!/bin/bash

|

||||

set -x

|

||||

source /opt/intel/oneapi/setvars.sh

|

||||

export CCL_WORKER_COUNT=$WORLD_SIZE

|

||||

export CCL_WORKER_AFFINITY=auto

|

||||

export SAVE_PATH="/ppml/output"

|

||||

|

||||

mpirun \

|

||||

-n $WORLD_SIZE \

|

||||

-ppn 1 \

|

||||

-f /home/mpiuser/hostfile \

|

||||

-iface eth0 \

|

||||

-genv OMP_NUM_THREADS=$OMP_NUM_THREADS \

|

||||

-genv KMP_AFFINITY="granularity=fine,none" \

|

||||

-genv KMP_BLOCKTIME=1 \

|

||||

-genv TF_ENABLE_ONEDNN_OPTS=1 \

|

||||

sudo -E python /ppml/worker_quote_generate.py --user_report_data $1 > $SAVE_PATH/quote.log 2>&1

|

||||

|

|

@ -1,88 +0,0 @@

|

|||

#

|

||||

# Copyright 2016 The BigDL Authors.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

import ctypes

|

||||

import base64

|

||||

import os

|

||||

|

||||

def generate_tdx_quote(user_report_data):

|

||||

# Define the uuid data structure

|

||||

TDX_UUID_SIZE = 16

|

||||

class TdxUuid(ctypes.Structure):

|

||||

_fields_ = [("d", ctypes.c_uint8 * TDX_UUID_SIZE)]

|

||||

|

||||

# Define the report data structure

|

||||

TDX_REPORT_DATA_SIZE = 64

|

||||

class TdxReportData(ctypes.Structure):

|

||||

_fields_ = [("d", ctypes.c_uint8 * TDX_REPORT_DATA_SIZE)]

|

||||

|

||||

# Define the report structure

|

||||

TDX_REPORT_SIZE = 1024

|

||||

class TdxReport(ctypes.Structure):

|

||||

_fields_ = [("d", ctypes.c_uint8 * TDX_REPORT_SIZE)]

|

||||

|

||||

# Load the library

|

||||

tdx_attest = ctypes.cdll.LoadLibrary("/usr/lib/x86_64-linux-gnu/libtdx_attest.so.1")

|

||||

|

||||

# Set the argument and return types for the function

|

||||

tdx_attest.tdx_att_get_report.argtypes = [ctypes.POINTER(TdxReportData), ctypes.POINTER(TdxReport)]

|

||||

tdx_attest.tdx_att_get_report.restype = ctypes.c_uint16

|

||||

|

||||

tdx_attest.tdx_att_get_quote.argtypes = [ctypes.POINTER(TdxReportData), ctypes.POINTER(TdxUuid), ctypes.c_uint32, ctypes.POINTER(TdxUuid), ctypes.POINTER(ctypes.POINTER(ctypes.c_uint8)), ctypes.POINTER(ctypes.c_uint32), ctypes.c_uint32]

|

||||

tdx_attest.tdx_att_get_quote.restype = ctypes.c_uint16

|

||||

|

||||

|

||||

# Call the function and check the return code

|

||||

byte_array_data = bytearray(user_report_data.ljust(64)[:64], "utf-8").replace(b' ', b'\x00')

|

||||

report_data = TdxReportData()

|

||||

report_data.d = (ctypes.c_uint8 * 64).from_buffer(byte_array_data)

|

||||

report = TdxReport()

|

||||

result = tdx_attest.tdx_att_get_report(ctypes.byref(report_data), ctypes.byref(report))

|

||||

if result != 0:

|

||||

print("Error: " + hex(result))

|

||||

|

||||

att_key_id_list = None

|

||||

list_size = 0

|

||||

att_key_id = TdxUuid()

|

||||

p_quote = ctypes.POINTER(ctypes.c_uint8)()

|

||||

quote_size = ctypes.c_uint32()

|

||||

flags = 0

|

||||

|

||||

result = tdx_attest.tdx_att_get_quote(ctypes.byref(report_data), att_key_id_list, list_size, ctypes.byref(att_key_id), ctypes.byref(p_quote), ctypes.byref(quote_size), flags)

|

||||

|

||||

if result != 0:

|

||||

print("Error: " + hex(result))

|

||||

else:

|

||||

quote = ctypes.string_at(p_quote, quote_size.value)

|

||||

return quote

|

||||

|

||||

def generate_gramine_quote(user_report_data):

|

||||

USER_REPORT_PATH = "/dev/attestation/user_report_data"

|

||||

QUOTE_PATH = "/dev/attestation/quote"

|

||||

if not os.path.isfile(USER_REPORT_PATH):

|

||||

print(f"File {USER_REPORT_PATH} not found.")

|

||||

return ""

|

||||

if not os.path.isfile(QUOTE_PATH):

|

||||

print(f"File {QUOTE_PATH} not found.")

|

||||

return ""

|

||||

with open(USER_REPORT_PATH, 'w') as out:

|

||||

out.write(user_report_data)

|

||||

with open(QUOTE_PATH, "rb") as f:

|

||||

quote = f.read()

|

||||

return quote

|

||||

|

||||

if __name__ == "__main__":

|

||||

print(generate_tdx_quote("ppml"))

|

||||

|

|

@ -1,20 +0,0 @@

|

|||

import quote_generator

|

||||

import argparse

|

||||

import ssl, os

|

||||

import base64

|

||||

import requests

|

||||

|

||||

parser = argparse.ArgumentParser()

|

||||

parser.add_argument("--user_report_data", type=str, default="ppml")

|

||||

|

||||

args = parser.parse_args()

|

||||

|

||||

host = os.environ.get('HYDRA_BSTRAP_LOCALHOST').split('.')[0]

|

||||

user_report_data = args.user_report_data

|

||||

try:

|

||||

quote_b = quote_generator.generate_tdx_quote(user_report_data)

|

||||

quote = base64.b64encode(quote_b).decode('utf-8')

|

||||

except Exception as e:

|

||||

quote = "quote generation failed: %s" % (e)

|

||||

|

||||

print("%s: %s"%(host, quote))

|

||||

|

|

@ -1,162 +0,0 @@

|

|||

{{- if eq .Values.TEEMode "tdx" }}

|

||||

apiVersion: kubeflow.org/v2beta1

|

||||

kind: MPIJob

|

||||

metadata:

|

||||

name: bigdl-lora-finetuning-job

|

||||

namespace: bigdl-lora-finetuning

|

||||

spec:

|

||||

slotsPerWorker: 1

|

||||

runPolicy:

|

||||

cleanPodPolicy: Running

|

||||

sshAuthMountPath: /home/mpiuser/.ssh

|

||||

mpiImplementation: Intel

|

||||

mpiReplicaSpecs:

|

||||

Launcher:

|

||||

replicas: 1

|

||||

template:

|

||||

spec:

|

||||

volumes:

|

||||

- name: nfs-storage

|

||||

persistentVolumeClaim:

|

||||

claimName: nfs-pvc

|

||||

- name: dev

|

||||

hostPath:

|

||||

path: /dev

|

||||

{{- if eq .Values.enableTLS true }}

|

||||

- name: ssl-keys

|

||||

secret:

|

||||

secretName: ssl-keys

|

||||

{{- end }}

|

||||

runtimeClassName: kata-qemu-tdx

|

||||

containers:

|

||||

- image: {{ .Values.imageName }}

|

||||

name: bigdl-ppml-finetuning-launcher

|

||||

securityContext:

|

||||

runAsUser: 0

|

||||

privileged: true

|

||||

command: ["/bin/sh", "-c"]

|

||||

args:

|

||||

- |

|

||||

nohup python /ppml/bigdl_aa.py > /ppml/bigdl_aa.log 2>&1 &

|

||||

sudo -E -u mpiuser bash /ppml/bigdl-lora-finetuing-entrypoint.sh

|

||||

env:

|

||||

- name: WORKER_ROLE

|

||||

value: "launcher"

|

||||

- name: WORLD_SIZE

|

||||

value: "{{ .Values.trainerNum }}"

|

||||

- name: MICRO_BATCH_SIZE

|

||||

value: "{{ .Values.microBatchSize }}"

|

||||

- name: MASTER_PORT

|

||||

value: "42679"

|

||||

- name: MASTER_ADDR

|

||||

value: "bigdl-lora-finetuning-job-worker-0.bigdl-lora-finetuning-job-worker"

|

||||

- name: DATA_SUB_PATH

|

||||

value: "{{ .Values.dataSubPath }}"

|

||||

- name: OMP_NUM_THREADS

|

||||

value: "{{ .Values.ompNumThreads }}"

|

||||

- name: LOCAL_POD_NAME

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: HF_DATASETS_CACHE

|

||||

value: "/ppml/output/cache"

|

||||

- name: ATTESTATION_API_SERVICE_PORT

|

||||

value: "{{ .Values.attestionApiServicePort }}"

|

||||

- name: ENABLE_TLS

|

||||

value: "{{ .Values.enableTLS }}"

|

||||

volumeMounts:

|

||||

- name: nfs-storage

|

||||

subPath: {{ .Values.modelSubPath }}

|

||||

mountPath: /ppml/model

|

||||

- name: nfs-storage

|

||||

subPath: {{ .Values.dataSubPath }}

|

||||

mountPath: "/ppml/data/{{ .Values.dataSubPath }}"

|

||||

- name: dev

|

||||

mountPath: /dev

|

||||

{{- if eq .Values.enableTLS true }}

|

||||

- name: ssl-keys

|

||||

mountPath: /ppml/keys

|

||||

{{- end }}

|

||||

Worker:

|

||||

replicas: {{ .Values.trainerNum }}

|

||||

template:

|

||||

spec:

|

||||

runtimeClassName: kata-qemu-tdx

|

||||

containers:

|

||||

- image: {{ .Values.imageName }}

|

||||

name: bigdl-ppml-finetuning-worker

|

||||

securityContext:

|

||||

runAsUser: 0

|

||||

privileged: true

|

||||

command: ["/bin/sh", "-c"]

|

||||

args:

|

||||

- |

|

||||

chown nobody /home/mpiuser/.ssh/id_rsa &

|

||||

sudo -E -u mpiuser bash /ppml/bigdl-lora-finetuing-entrypoint.sh

|

||||

env:

|

||||

- name: WORKER_ROLE

|

||||

value: "trainer"

|

||||

- name: WORLD_SIZE

|

||||

value: "{{ .Values.trainerNum }}"

|

||||

- name: MICRO_BATCH_SIZE

|

||||

value: "{{ .Values.microBatchSize }}"

|

||||

- name: MASTER_PORT

|

||||

value: "42679"

|

||||

- name: MASTER_ADDR

|

||||

value: "bigdl-lora-finetuning-job-worker-0.bigdl-lora-finetuning-job-worker"

|

||||

- name: LOCAL_POD_NAME

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

volumeMounts:

|

||||

- name: nfs-storage

|

||||

subPath: {{ .Values.modelSubPath }}

|

||||

mountPath: /ppml/model

|

||||

- name: nfs-storage

|

||||

subPath: {{ .Values.dataSubPath }}

|

||||

mountPath: "/ppml/data/{{ .Values.dataSubPath }}"

|

||||

- name: dev

|

||||

mountPath: /dev

|

||||

resources:

|

||||

requests:

|

||||

cpu: {{ .Values.cpuPerPod }}

|

||||

limits:

|

||||

cpu: {{ .Values.cpuPerPod }}

|

||||

volumes:

|

||||

- name: nfs-storage

|

||||

persistentVolumeClaim:

|

||||

claimName: nfs-pvc

|

||||

- name: dev

|

||||

hostPath:

|

||||

path: /dev

|

||||

---

|

||||

apiVersion: v1

|

||||

kind: Service

|

||||

metadata:

|

||||

name: bigdl-lora-finetuning-launcher-attestation-api-service

|

||||

namespace: bigdl-lora-finetuning

|

||||

spec:

|

||||

selector:

|

||||

job-name: bigdl-lora-finetuning-job-launcher

|

||||

training.kubeflow.org/job-name: bigdl-lora-finetuning-job

|

||||

training.kubeflow.org/job-role: launcher

|

||||

ports:

|

||||

- name: launcher-attestation-api-service-port

|

||||

protocol: TCP

|

||||

port: {{ .Values.attestionApiServicePort }}

|

||||

targetPort: {{ .Values.attestionApiServicePort }}

|

||||

type: ClusterIP

|

||||

---

|

||||

{{- if eq .Values.enableTLS true }}

|

||||

apiVersion: v1

|

||||

kind: Secret

|

||||

metadata:

|

||||

name: ssl-keys

|

||||

namespace: bigdl-lora-finetuning

|

||||

type: Opaque

|

||||

data:

|

||||

server.crt: {{ .Values.base64ServerCrt }}

|

||||

server.key: {{ .Values.base64ServerKey }}

|

||||

{{- end }}

|

||||

|

||||

{{- end }}

|

||||

38

docker/llm/finetune/qlora/xpu/docker/Dockerfile

Normal file

|

|

@ -0,0 +1,38 @@

|

|||

FROM intel/oneapi-basekit:2023.2.1-devel-ubuntu22.04

|

||||

ARG http_proxy

|

||||

ARG https_proxy

|

||||

ENV TZ=Asia/Shanghai

|

||||

ARG PIP_NO_CACHE_DIR=false

|

||||

ENV TRANSFORMERS_COMMIT_ID=95fe0f5

|

||||

|

||||

# retrieve oneapi repo public key

|

||||

RUN curl -fsSL https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS-2023.PUB | gpg --dearmor | tee /usr/share/keyrings/intel-oneapi-archive-keyring.gpg && \

|

||||

echo "deb [signed-by=/usr/share/keyrings/intel-oneapi-archive-keyring.gpg] https://apt.repos.intel.com/oneapi all main " > /etc/apt/sources.list.d/oneAPI.list && \

|

||||

# retrieve intel gpu driver repo public key

|

||||

wget -qO - https://repositories.intel.com/graphics/intel-graphics.key | gpg --dearmor --output /usr/share/keyrings/intel-graphics.gpg && \

|

||||

echo 'deb [arch=amd64,i386 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/graphics/ubuntu jammy arc' | tee /etc/apt/sources.list.d/intel.gpu.jammy.list && \

|

||||

rm /etc/apt/sources.list.d/intel-graphics.list && \

|

||||

# update dependencies

|

||||

apt-get update && \

|

||||

# install basic dependencies

|

||||

apt-get install -y curl wget git gnupg gpg-agent software-properties-common libunwind8-dev vim less && \

|

||||

# install Intel GPU driver

|

||||

apt-get install -y intel-opencl-icd intel-level-zero-gpu level-zero level-zero-dev && \

|

||||

# install python 3.9

|

||||

ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone && \

|

||||

env DEBIAN_FRONTEND=noninteractive apt-get update && \

|

||||

add-apt-repository ppa:deadsnakes/ppa -y && \

|

||||

apt-get install -y python3.9 && \

|

||||

rm /usr/bin/python3 && \

|

||||

ln -s /usr/bin/python3.9 /usr/bin/python3 && \

|

||||

ln -s /usr/bin/python3 /usr/bin/python && \

|

||||

apt-get install -y python3-pip python3.9-dev python3-wheel python3.9-distutils && \

|

||||

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && \

|

||||

# install XPU bigdl-llm

|

||||

pip install --pre --upgrade bigdl-llm[xpu] -f https://developer.intel.com/ipex-whl-stable-xpu && \

|

||||

# install huggingface dependencies

|

||||

pip install git+https://github.com/huggingface/transformers.git@${TRANSFORMERS_COMMIT_ID} && \

|

||||

pip install peft==0.5.0 datasets && \

|

||||

wget https://raw.githubusercontent.com/intel-analytics/BigDL/main/python/llm/example/gpu/qlora_finetuning/qlora_finetuning.py

|

||||

|

||||

ADD ./start-qlora-finetuning-on-xpu.sh /start-qlora-finetuning-on-xpu.sh

|

||||

89

docker/llm/finetune/qlora/xpu/docker/README.md

Normal file

|

|

@ -0,0 +1,89 @@

|

|||

## Fine-tune LLM with BigDL LLM Container

|

||||

|

||||

The following shows how to fine-tune an LLM with quantization (QLoRA built on BigDL-LLM 4-bit optimizations) in a Docker environment accelerated by Intel XPU.

|

||||

|

||||

### 1. Prepare Docker Image

|

||||

|

||||

You can pull the image directly from Docker Hub:

|

||||

|

||||

```bash

|

||||

docker pull intelanalytics/bigdl-llm-finetune-qlora-xpu:2.4.0-SNAPSHOT

|

||||

```

|

||||

|

||||

Or build the image from source:

|

||||

|

||||

```bash

|

||||

export HTTP_PROXY=your_http_proxy

|

||||

export HTTPS_PROXY=your_https_proxy

|

||||

|

||||

docker build \

|

||||

--build-arg http_proxy=${HTTP_PROXY} \

|

||||

--build-arg https_proxy=${HTTPS_PROXY} \

|

||||

-t intelanalytics/bigdl-llm-finetune-qlora-xpu:2.4.0-SNAPSHOT \

|

||||

-f ./Dockerfile .

|

||||

```

|

||||

|

||||

### 2. Prepare Base Model, Data and Container

|

||||

|

||||

Here, we fine-tune [Llama2-7b](https://huggingface.co/meta-llama/Llama-2-7b) with the [English Quotes](https://huggingface.co/datasets/Abirate/english_quotes) dataset. Download both, then start a Docker container with the files mounted as shown below:

|

||||

|

||||

```bash

|

||||

export BASE_MODE_PATH=<your_downloaded_base_model_path>

|

||||

export DATA_PATH=<your_downloaded_data_path>

|

||||

|

||||

docker run -itd \

|

||||

--net=host \

|

||||

--device=/dev/dri \

|

||||

--memory="32G" \

|

||||

--name=bigdl-llm-fintune-qlora-xpu \

|

||||

-v $BASE_MODE_PATH:/model \

|

||||

-v $DATA_PATH:/data/english_quotes \

|

||||

--shm-size="16g" \

|

||||

intelanalytics/bigdl-llm-finetune-qlora-xpu:2.4.0-SNAPSHOT

|

||||

```

|

||||

|

||||

Downloading the base model and data and mounting them into the container demonstrates a standard fine-tuning process. For a quick start you can skip this step, in which case the fine-tuning code downloads the needed files automatically:

|

||||

|

||||

```bash

|

||||

docker run -itd \

|

||||

--net=host \

|

||||

--device=/dev/dri \

|

||||

--memory="32G" \

|

||||

--name=bigdl-llm-fintune-qlora-xpu \

|

||||

--shm-size="16g" \

|

||||

intelanalytics/bigdl-llm-finetune-qlora-xpu:2.4.0-SNAPSHOT

|

||||

```

|

||||

|

||||

However, we recommend handling them manually, because the automatic download can be blocked by network restrictions, Hugging Face authentication and similar issues, depending on your environment. The manual method also lets you fine-tune with a different base model and dataset.

|

||||

|

||||

### 3. Start Fine-Tuning

|

||||

|

||||

Enter the running container:

|

||||

|

||||

```bash

|

||||

docker exec -it bigdl-llm-fintune-qlora-xpu bash

|

||||

```

|

||||

|

||||

Then, start QLoRA fine-tuning:

|

||||

|

||||

```bash

|

||||

bash start-qlora-finetuning-on-xpu.sh

|

||||

```

|

||||

|

||||

After a few minutes, you should see results like:

|

||||

|

||||

```bash

|

||||

{'loss': 2.256, 'learning_rate': 0.0002, 'epoch': 0.03}

|

||||

{'loss': 1.8869, 'learning_rate': 0.00017777777777777779, 'epoch': 0.06}

|

||||

{'loss': 1.5334, 'learning_rate': 0.00015555555555555556, 'epoch': 0.1}

|

||||

{'loss': 1.4975, 'learning_rate': 0.00013333333333333334, 'epoch': 0.13}

|

||||

{'loss': 1.3245, 'learning_rate': 0.00011111111111111112, 'epoch': 0.16}

|

||||

{'loss': 1.2622, 'learning_rate': 8.888888888888889e-05, 'epoch': 0.19}

|

||||

{'loss': 1.3944, 'learning_rate': 6.666666666666667e-05, 'epoch': 0.22}

|

||||

{'loss': 1.2481, 'learning_rate': 4.4444444444444447e-05, 'epoch': 0.26}

|

||||

{'loss': 1.3442, 'learning_rate': 2.2222222222222223e-05, 'epoch': 0.29}

|

||||

{'loss': 1.3256, 'learning_rate': 0.0, 'epoch': 0.32}

|

||||

{'train_runtime': 204.4633, 'train_samples_per_second': 3.913, 'train_steps_per_second': 0.978, 'train_loss': 1.5072882556915284, 'epoch': 0.32}

|

||||

100%|██████████████████████████████████████████████████████████████████████████████████████| 200/200 [03:24<00:00, 1.02s/it]

|

||||

TrainOutput(global_step=200, training_loss=1.5072882556915284, metrics={'train_runtime': 204.4633, 'train_samples_per_second': 3.913, 'train_steps_per_second': 0.978, 'train_loss': 1.5072882556915284, 'epoch': 0.32})

|

||||

```
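The logged learning rates and throughput figures above are internally consistent, and a short check makes that visible. The sketch below assumes a linear schedule with a 20-step warmup, a peak rate of `2e-4`, 200 total steps, and one log line every 20 steps. These values are inferred from the log itself, not read from `qlora_finetuning.py`, and `linear_schedule_lr` is a hypothetical helper name.

```python
def linear_schedule_lr(step, base_lr=2e-4, warmup_steps=20, total_steps=200):
    """Linear warmup to base_lr, then linear decay to zero (assumed schedule)."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    return base_lr * (total_steps - step) / (total_steps - warmup_steps)


# Values copied from the sample log above.
TRAIN_RUNTIME_S = 204.4633
TOTAL_STEPS = 200
SAMPLES_PER_SECOND = 3.913


def steps_per_second():
    """Recompute train_steps_per_second from runtime and step count."""
    return round(TOTAL_STEPS / TRAIN_RUNTIME_S, 3)


def samples_per_step():
    """Approximate number of samples consumed per optimizer step."""
    return round(SAMPLES_PER_SECOND * TRAIN_RUNTIME_S / TOTAL_STEPS)


if __name__ == "__main__":
    # One line per assumed logging step; compare with 'learning_rate' in the log.
    for step in range(20, TOTAL_STEPS + 1, 20):
        print(step, linear_schedule_lr(step))
    print("steps/s:", steps_per_second())
    print("samples per step:", samples_per_step())
```

Under these assumptions the schedule reproduces the ten logged `learning_rate` values, and the throughput numbers imply roughly four samples per step.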

|

||||

|

|

@ -0,0 +1,18 @@

|

|||

#!/bin/bash

|

||||

set -x

|

||||

export USE_XETLA=OFF

|

||||

export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

|

||||

source /opt/intel/oneapi/setvars.sh

|

||||

|

||||

if [ -d "./model" ];

|

||||

then

|

||||

MODEL_PARAM="--repo-id-or-model-path ./model" # otherwise, default to download from HF repo

|

||||

fi

|

||||

|

||||

if [ -d "./data/english_quotes" ];

|

||||

then

|

||||

DATA_PARAM="--dataset ./data/english_quotes" # otherwise, default to download from HF dataset

|

||||

fi

|

||||

|

||||

python qlora_finetuning.py $MODEL_PARAM $DATA_PARAM
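The script's fallback behaviour can be restated as a small function: each optional flag is passed only when the corresponding mounted directory exists, and otherwise the fine-tuning script is left to its Hugging Face defaults. The Python sketch below mirrors that logic for illustration only. `build_finetune_args` and its `isdir` parameter are hypothetical names; the flag names and paths are taken from the script above.

```python
import os


def build_finetune_args(isdir=os.path.isdir):
    """Build the command line the start script would run.

    `isdir` is injectable so the logic can be exercised without a real
    filesystem layout.
    """
    args = ["python", "qlora_finetuning.py"]
    if isdir("./model"):
        # A mounted model directory overrides the default HF repo download.
        args += ["--repo-id-or-model-path", "./model"]
    if isdir("./data/english_quotes"):
        # A mounted dataset directory overrides the default HF dataset download.
        args += ["--dataset", "./data/english_quotes"]
    return args


if __name__ == "__main__":
    print(" ".join(build_finetune_args()))
```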

|

||||

|

||||

|

|

@ -15,6 +15,8 @@ After downloading the model, please change name from `vicuna-7b-v1.5` to `vicuna

|

|||

|

||||

You can download the model from [here](https://huggingface.co/lmsys/vicuna-7b-v1.5).

|

||||

|

||||

For ChatGLM models, users do not need to add `bigdl` into model path. We have already used the `BigDL-LLM` backend for this model.

|

||||

|

||||

### Kubernetes config

|

||||

|

||||

We recommend setting up your Kubernetes cluster before deployment. Most importantly, set `cpu-management-policy` to `static` by following this [tutorial](https://kubernetes.io/docs/tasks/administer-cluster/cpu-management-policies/). It is also helpful to set the `topology management policy` to `single-numa-node`.

|

||||

|

|

|

|||

|

|

@ -5,7 +5,7 @@ Before running, make sure to have [bigdl-llm](../../../README.md) and [bigdl-nan

|

|||

|

||||

## Dependencies

|

||||

```bash

|

||||

pip install omageconfig

|

||||

pip install omegaconf

|

||||

pip install pandas

|

||||

```

|

||||

|

||||

|

|

|

|||

2

python/llm/portable-executable/.gitignore

vendored

|

|

@ -1,2 +0,0 @@

|

|||

python-embed

|

||||

portable-executable.zip

|

||||

|

|

@ -1,33 +0,0 @@

|

|||

# BigDL-LLM Portable Executable For Windows: User Guide

|

||||

|

||||

This portable executable includes everything you need to run LLM (except models). Please refer to How to use section to get started.

|

||||

|

||||

## 13B model running on an Intel 11-Gen Core PC (real-time screen capture)

|

||||

|

||||

<p align="left">

|

||||

<img src=https://llm-assets.readthedocs.io/en/latest/_images/one-click-installer-screen-capture.gif width='80%' />

|

||||

|

||||

</p>

|

||||

|

||||

## Verified Models

|

||||

|

||||

- ChatGLM2-6b

|

||||

- Baichuan-13B-Chat

|

||||

- Baichuan2-7B-Chat

|

||||

- internlm-chat-7b-8k

|

||||

- Llama-2-7b-chat-hf

|

||||

|

||||

## How to use

|

||||

|

||||

1. Download the model to your computer. Please ensure there is a file named `config.json` in the model folder, otherwise the script won't work.

|

||||

|

||||

|

||||

|

||||

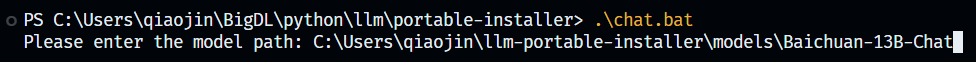

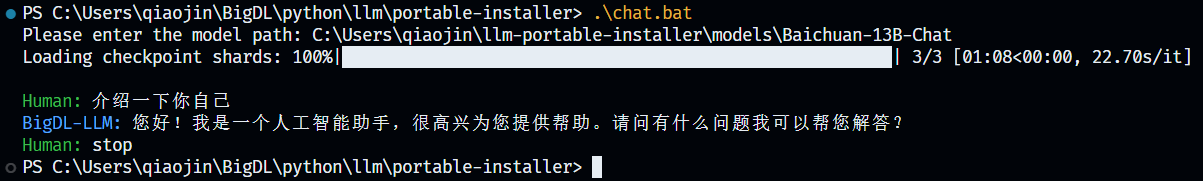

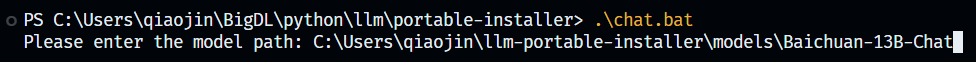

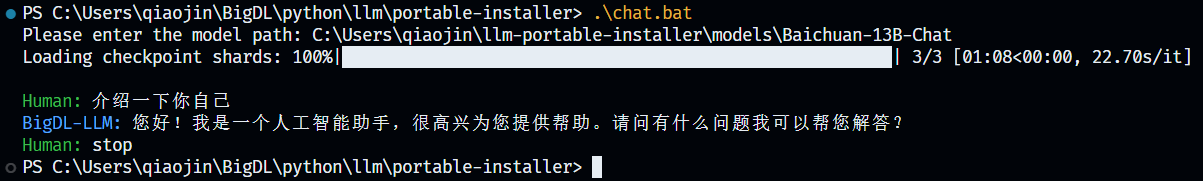

2. Run `chat.bat` in Terminal and input the path of the model (e.g. `path\to\model`, note that there's no slash at the end of the path).

|

||||

|

||||

|

||||

|

||||

3. Press Enter and wait until model finishes loading. Then enjoy chatting with the model!

|

||||

4. If you want to stop chatting, just input `stop` and the model will stop running.

|

||||

|

||||

|

||||

|

|

@ -1,5 +0,0 @@

|

|||

# BigDL-LLM Portable Executable Setup Script For Windows

|

||||

|

||||

# How to use

|

||||

|

||||

Just simply run `setup.bat` and it will download and install all dependency and generate a zip file for user to use.

|

||||

2

python/llm/portable-zip/.gitignore

vendored

Normal file

|

|

@ -0,0 +1,2 @@

|

|||

python-embed

|

||||

bigdl-llm.zip

|

||||

37

python/llm/portable-zip/README.md

Normal file

|

|

@ -0,0 +1,37 @@

|

|||

# BigDL-LLM Portable Zip For Windows: User Guide

|

||||

|

||||

## Introduction

|

||||

|

||||

This portable zip includes everything you need to run an LLM with BigDL-LLM optimizations (except models). Please refer to the [How to use](#how-to-use) section to get started.

|

||||

|

||||

### 13B model running on an Intel 11-Gen Core PC (real-time screen capture)

|

||||

|

||||

<p align="left">

|

||||

<img src=https://llm-assets.readthedocs.io/en/latest/_images/one-click-installer-screen-capture.gif width='80%' />

|

||||

|

||||

</p>

|

||||

|

||||

### Verified Models

|

||||

|

||||

- ChatGLM2-6b

|

||||

- Baichuan-13B-Chat

|

||||

- Baichuan2-7B-Chat

|

||||

- internlm-chat-7b-8k

|

||||

- Llama-2-7b-chat-hf

|

||||

|

||||

## How to use

|

||||

|

||||

1. Download the zip from link [here]().

|

||||

2. (Optional) You could also build the zip on your own. Run `setup.bat` and it will generate the zip file.

|

||||

3. Unzip `bigdl-llm.zip`.

|

||||

4. Download the model to your computer. Please ensure there is a file named `config.json` in the model folder, otherwise the script won't work.

|

||||

|

||||

|

||||

|

||||

5. Go into the unzipped folder and double click `chat.bat`. Input the path of the model (e.g. `path\to\model`, note that there's no slash at the end of the path). Press Enter and wait until model finishes loading. Then enjoy chatting with the model!

|

||||

|

||||

|

||||

|

||||

6. If you want to stop chatting, just input `stop` and the model will stop running.

|

||||

|

||||

|

||||

|

|

@ -5,4 +5,6 @@

|

|||

set PYTHONUNBUFFERED=1

|

||||

|

||||

set /p modelpath="Please enter the model path: "

|

||||

.\python-embed\python.exe .\chat.py --model-path="%modelpath%"

|

||||

.\python-embed\python.exe .\chat.py --model-path="%modelpath%"

|

||||

|

||||

pause

|

||||

|

|

@ -20,4 +20,4 @@ cd ..

|

|||

%python-embed% -m pip install bigdl-llm[all] transformers_stream_generator tiktoken einops colorama

|

||||

|

||||

:: compress the python and scripts

|

||||

powershell -Command "Compress-Archive -Path '.\python-embed', '.\chat.bat', '.\chat.py', '.\README.md' -DestinationPath .\portable-executable.zip"

|

||||

powershell -Command "Compress-Archive -Path '.\python-embed', '.\chat.bat', '.\chat.py', '.\README.md' -DestinationPath .\bigdl-llm.zip"

|

||||

5

python/llm/portable-zip/setup.md

Normal file

|

|

@ -0,0 +1,5 @@

|

|||

# BigDL-LLM Portable Zip Setup Script For Windows

|

||||

|

||||

# How to use

|

||||

|

||||

Simply run `setup.bat`; it downloads and installs all dependencies and generates `bigdl-llm.zip` for users.

|

||||

|

|

@ -44,7 +44,7 @@ from torch import nn

|

|||

|

||||

from bigdl.llm.transformers.models.utils import extend_kv_cache, init_kv_cache, append_kv_cache

|

||||

from bigdl.llm.transformers.models.utils import apply_rotary_pos_emb

|

||||

from bigdl.dllib.utils import log4Error

|

||||

from bigdl.llm.utils.common import log4Error

|

||||

|

||||

KV_CACHE_ALLOC_BLOCK_LENGTH = 256

|

||||

|

||||

|

|

|

|||

|

|

@ -71,7 +71,7 @@ def rotate_every_two(x):

|

|||

|

||||

|

||||

def apply_rotary_pos_emb(q, k, cos, sin, position_ids, model_family):

|

||||

if model_family in ["llama", "baichuan", "internlm", "aquila"]:

|

||||

if model_family in ["llama", "baichuan", "internlm", "aquila", "gpt_neox"]:

|

||||

# The first two dimensions of cos and sin are always 1, so we can `squeeze` them.

|

||||

cos = cos.squeeze(1).squeeze(0) # [seq_len, dim]

|

||||

sin = sin.squeeze(1).squeeze(0) # [seq_len, dim]

|

||||

|

|

@ -86,14 +86,6 @@ def apply_rotary_pos_emb(q, k, cos, sin, position_ids, model_family):

|

|||

q_embed = (q * cos) + (rotate_every_two(q) * sin)

|

||||

k_embed = (k * cos) + (rotate_every_two(k) * sin)

|

||||

return q_embed, k_embed

|

||||

elif model_family == "gpt_neox":

|

||||

gather_indices = position_ids[:, None, :, None] # [bs, 1, seq_len, 1]

|

||||

gather_indices = gather_indices.repeat(1, cos.shape[1], 1, cos.shape[3])

|

||||

cos = torch.gather(cos.repeat(gather_indices.shape[0], 1, 1, 1), 2, gather_indices)

|

||||

sin = torch.gather(sin.repeat(gather_indices.shape[0], 1, 1, 1), 2, gather_indices)

|

||||

q_embed = (q * cos) + (rotate_half(q) * sin)

|

||||

k_embed = (k * cos) + (rotate_half(k) * sin)

|

||||

return q_embed, k_embed

|

||||

else:

|

||||

invalidInputError(False,

|

||||

f"{model_family} is not supported.")
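The hunks above reference two rotation helpers, `rotate_half` and `rotate_every_two`, without showing their bodies. As a reading aid, here is a minimal pure-Python sketch of the conventional definitions. It is assumed to match the helpers in this file rather than copied from it, and it works on a flat list standing in for one head vector instead of a torch tensor. `apply_rotary` is a hypothetical name for the shared `x * cos + rotate(x) * sin` step.

```python
import math


def rotate_half(x):
    """Split x into halves [a, b] and return [-b, a]."""
    half = len(x) // 2
    return [-v for v in x[half:]] + x[:half]


def rotate_every_two(x):
    """Map each adjacent pair (x0, x1) to (-x1, x0)."""
    out = []
    for i in range(0, len(x), 2):
        out.extend([-x[i + 1], x[i]])
    return out


def apply_rotary(x, cos, sin, rotate):
    """Element-wise x * cos + rotate(x) * sin for a single vector."""
    return [xi * c + ri * s for xi, c, ri, s in zip(x, cos, rotate(x), sin)]


def norm(x):
    """Euclidean length, used to check that the rotation preserves it."""
    return math.sqrt(sum(v * v for v in x))


if __name__ == "__main__":
    q = [1.0, 2.0, 3.0, 4.0]
    angles = [0.3, 1.1]  # one arbitrary angle per rotated pair

    # rotate_half pairs element i with element i + dim/2, so the tables repeat.
    cos_half = [math.cos(a) for a in angles] * 2
    sin_half = [math.sin(a) for a in angles] * 2
    # rotate_every_two pairs adjacent elements, so each angle is duplicated in place.
    cos_pair = [math.cos(a) for a in angles for _ in range(2)]
    sin_pair = [math.sin(a) for a in angles for _ in range(2)]

    print(apply_rotary(q, cos_half, sin_half, rotate_half))
    print(apply_rotary(q, cos_pair, sin_pair, rotate_every_two))
```

The two helpers differ only in which elements are treated as a rotated pair, so the `cos`/`sin` tables must follow the same layout as the helper they are paired with.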

|

||||

|

|

|

|||
