diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md
index 04a9fd9b..0a409ddc 100644
--- a/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md
+++ b/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md
@@ -45,16 +45,16 @@ source /opt/intel/oneapi/setvars.sh

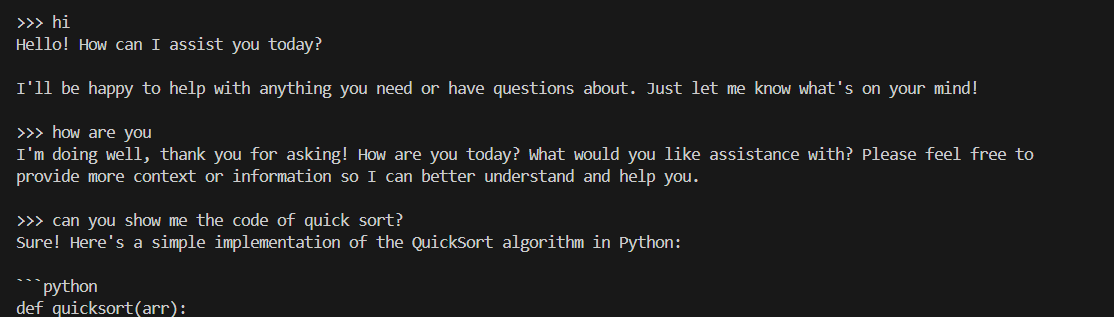

The console will display messages similar to the following:
-
+

### 4 Pull Model
-Keep the Ollama service on and open a new terminal and pull a model, e.g. `dolphin-phi:latest`:
+Keep the Ollama service running, open another terminal, and run `./ollama pull` followed by a model name to pull that model automatically, e.g. `dolphin-phi:latest`:
-
+
@@ -77,7 +77,7 @@ curl http://localhost:11434/api/generate -d '

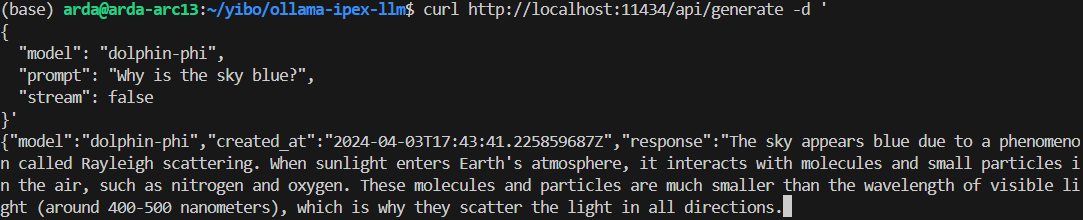

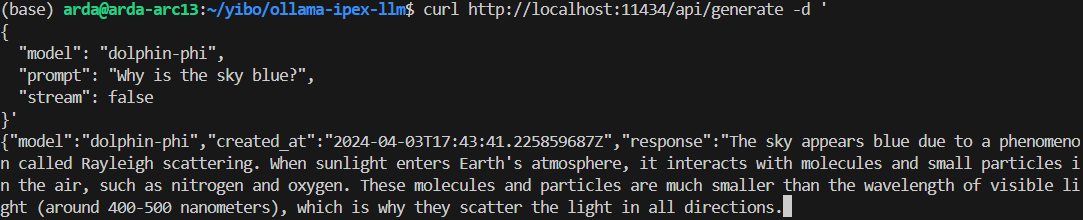

An example output from the `dolphin-phi` model looks like the following:
-
+
@@ -99,6 +99,6 @@ source /opt/intel/oneapi/setvars.sh

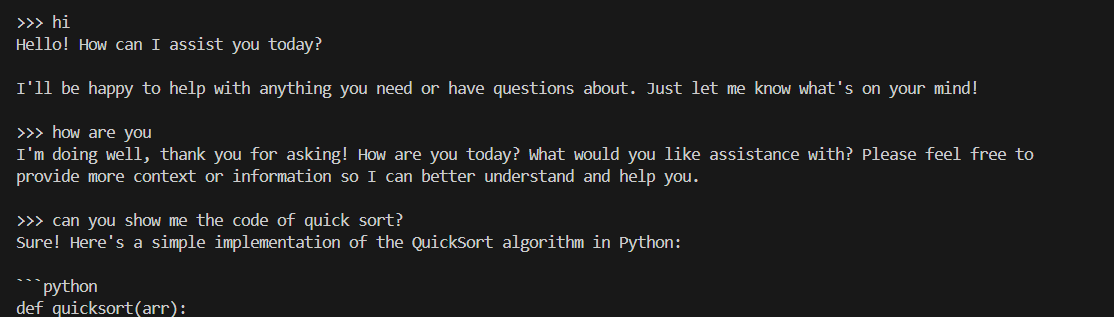

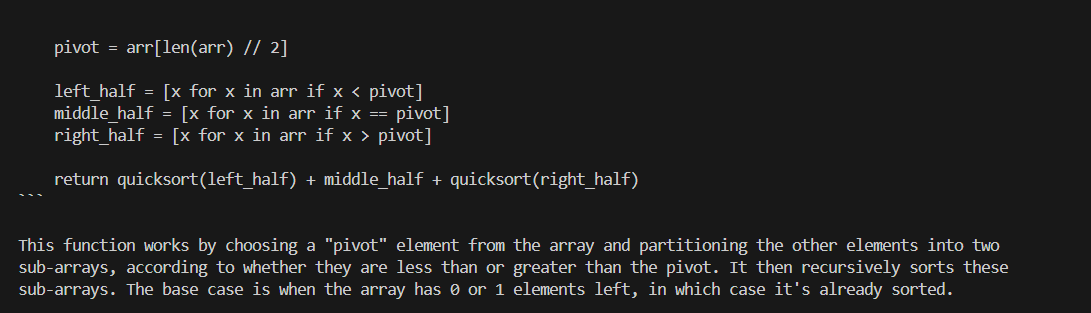

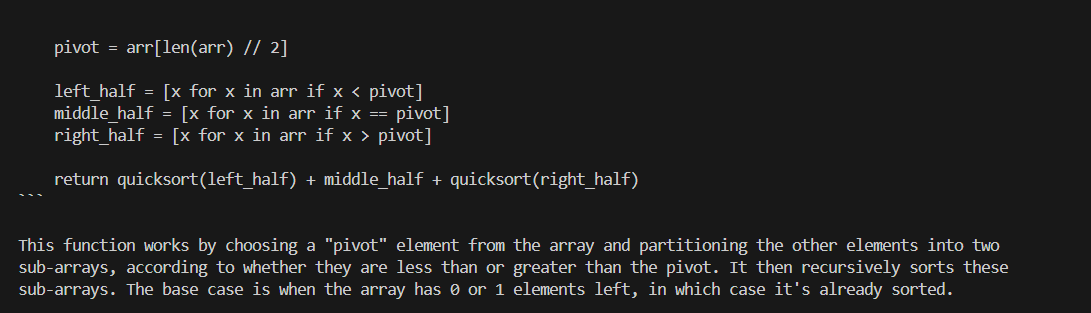

An example of interacting with a model through `ollama run` looks like the following:
-
+
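The workflow this patch documents (pull a model with `./ollama pull`, then query the running service over `/api/generate`) can be sketched as two small shell helpers. This is a minimal sketch, not part of the quickstart itself: it assumes the Ollama service is listening on `localhost:11434` and that the `ollama` binary sits in the current directory, as in the steps above. The helper names `ollama_generate_payload` and `ollama_ask` are hypothetical, and the example prompt is illustrative only.

```shell
# Hypothetical helper (not part of Ollama): build the JSON body for
# POST /api/generate. "stream": false asks the service for a single
# response object instead of a stream of partial ones.
# Note: the model and prompt are inserted verbatim, so a prompt that
# contains double quotes or backslashes would produce invalid JSON.
ollama_generate_payload() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Hypothetical helper (not part of Ollama): pull the model, then send
# one non-streaming prompt to the local service.
# Assumes the service is on localhost:11434 and ./ollama exists here.
ollama_ask() {
  model="$1"
  prompt="$2"
  ./ollama pull "$model" || return 1
  curl -s http://localhost:11434/api/generate \
    -d "$(ollama_generate_payload "$model" "$prompt")"
}
```

For example, `ollama_ask dolphin-phi:latest "Why is the sky blue?"` pulls the model and prints the service's JSON reply. Setting `"stream": false` trades incremental output for a single response object, which is easier to inspect from a script.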