+

+

+

++ +## Run PyTorch Examples + +We provide several PyTorch examples that you could apply IPEX-LLM INT4 optimizations on models on Intel GPUs + +For example, if your model is Llama-2-7b-chat-hf and mounted on /llm/models, you can navigate to /examples/llama2 directory, excute the following command to run example: + ```bash + cd /examples/

+

+

+

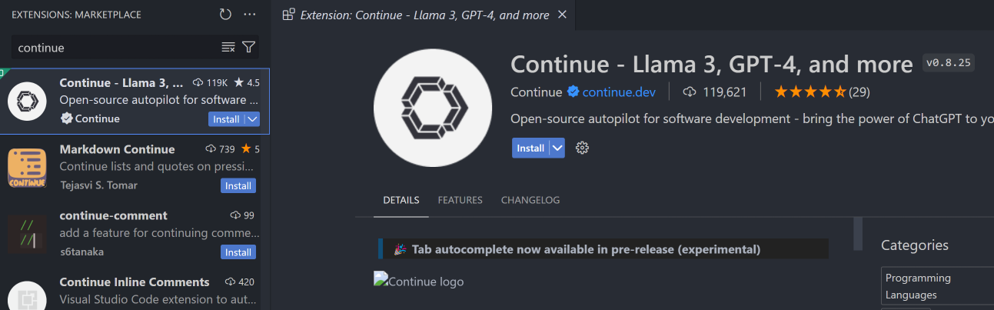

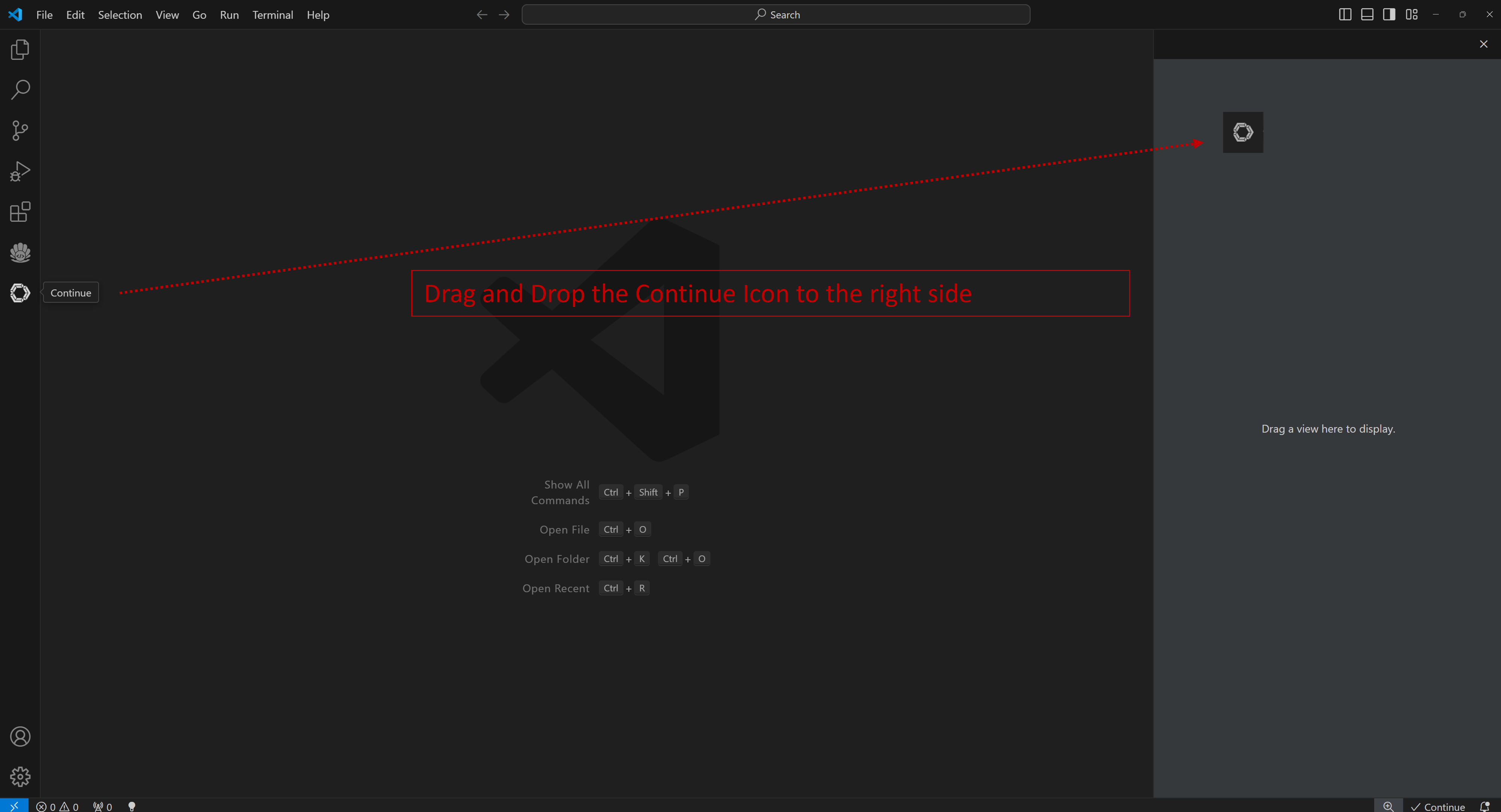

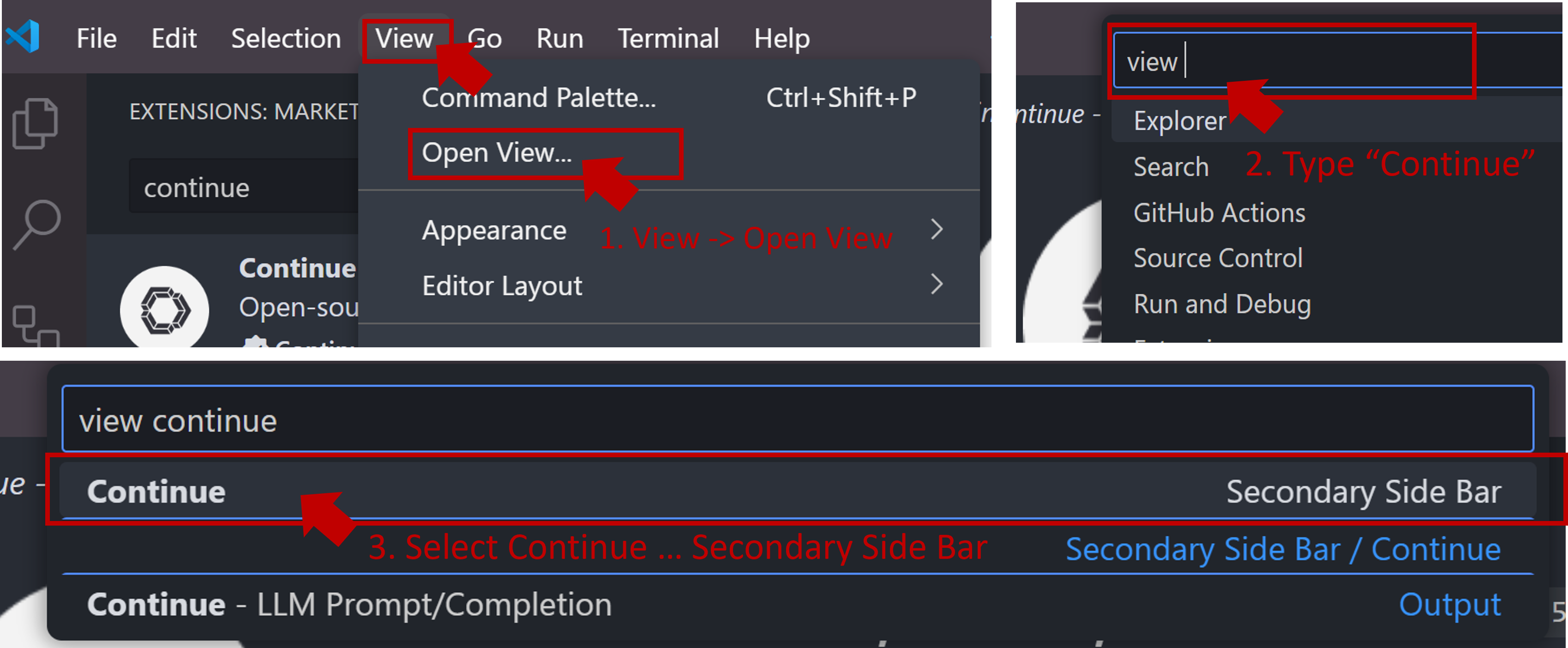

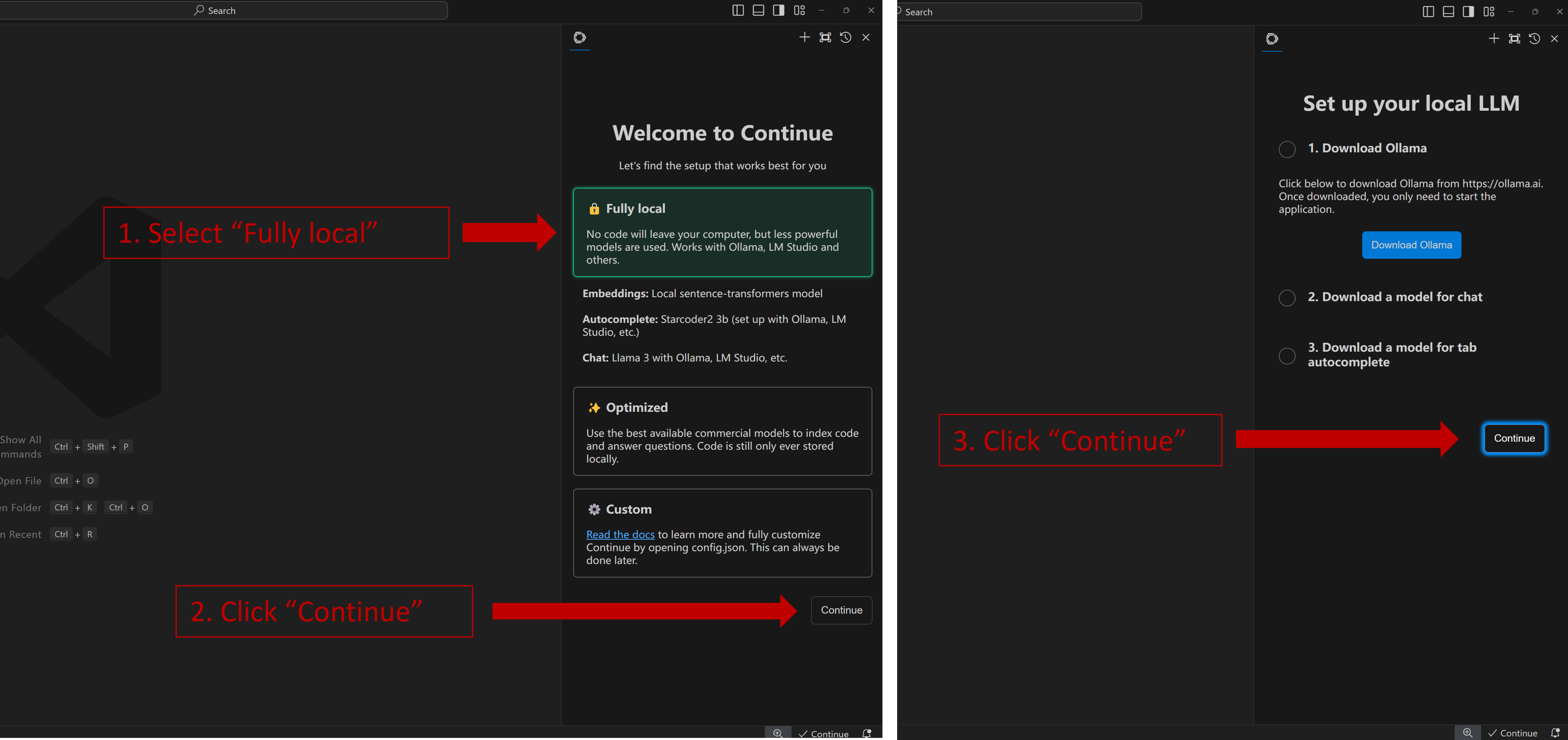

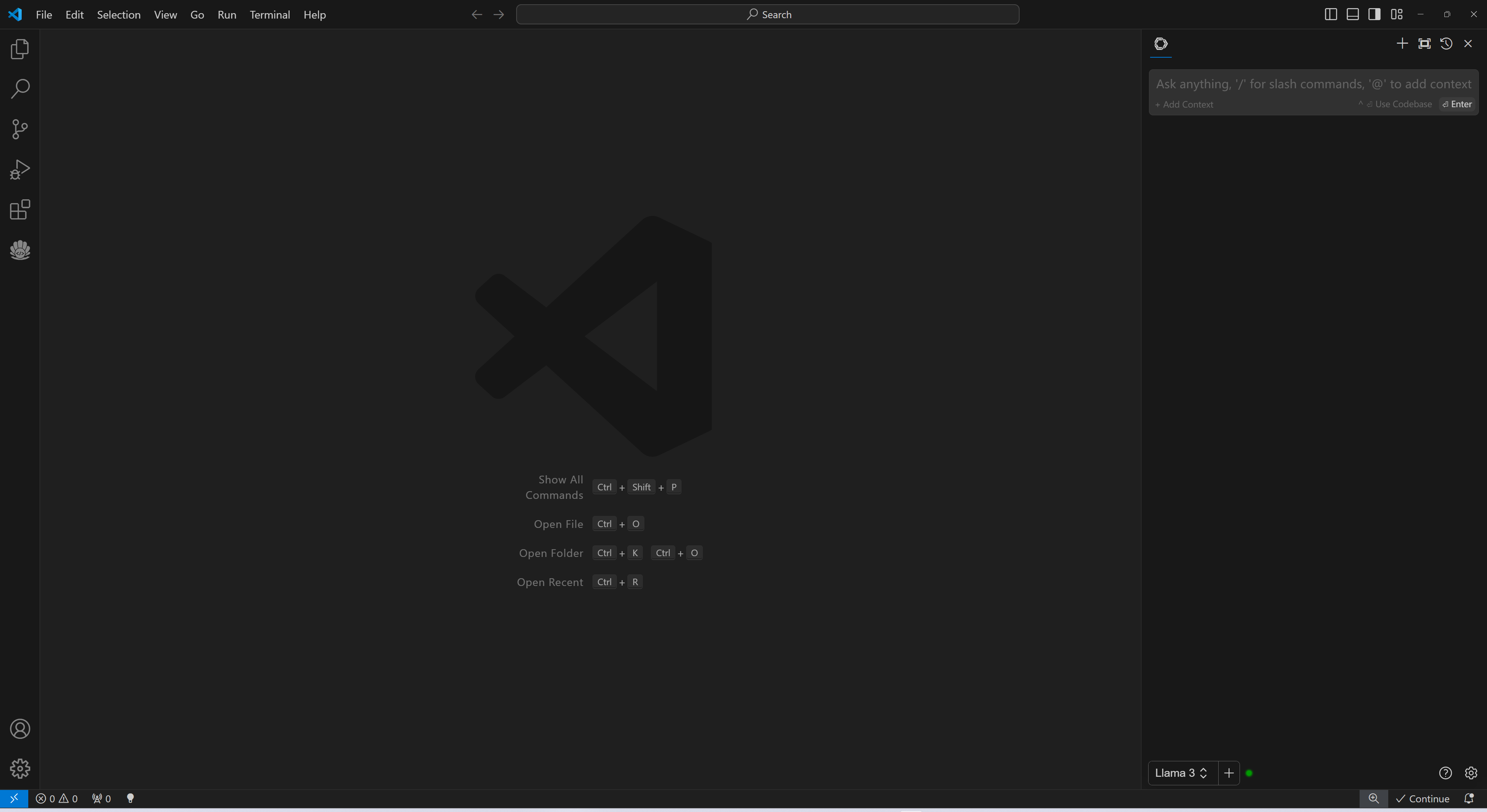

+#### Install WSL Extension for Windows

+

+For Windows, you will need to install wsl extension to to the WSL environment. Open the Extensions view in VSCode (you can use the shortcut `Ctrl+Shift+X`), then search for and install the `WSL` extension.

+

+Press F1 to bring up the Command Palette and type in `WSL: Connect to WSL Using Distro...` and select it and then select a specific WSL distro `Ubuntu`

+

+

+

+

+

+

+

+#### Install WSL Extension for Windows

+

+For Windows, you will need to install wsl extension to to the WSL environment. Open the Extensions view in VSCode (you can use the shortcut `Ctrl+Shift+X`), then search for and install the `WSL` extension.

+

+Press F1 to bring up the Command Palette and type in `WSL: Connect to WSL Using Distro...` and select it and then select a specific WSL distro `Ubuntu`

+

+

+

+  +

+

+

+## Launch Container

+

+Open the Terminal in VSCode (you can use the shortcut `` Ctrl+Shift+` ``), then pull ipex-llm-xpu Docker Image:

+

+```bash

+docker pull intelanalytics/ipex-llm-xpu:latest

+```

+

+Start ipex-llm-xpu Docker Container:

+

+```eval_rst

+.. tabs::

+ .. tab:: Linux

+

+ .. code-block:: bash

+

+ export DOCKER_IMAGE=intelanalytics/ipex-llm-xpu:latest

+ export CONTAINER_NAME=my_container

+ export MODEL_PATH=/llm/models[change to your model path]

+

+ docker run -itd \

+ --net=host \

+ --device=/dev/dri \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ -v $MODEL_PATH:/llm/models \

+ $DOCKER_IMAGE

+

+ .. tab:: Windows WSL

+

+ .. code-block:: bash

+

+ #/bin/bash

+ export DOCKER_IMAGE=intelanalytics/ipex-llm-xpu:latest

+ export CONTAINER_NAME=my_container

+ export MODEL_PATH=/llm/models[change to your model path]

+

+ sudo docker run -itd \

+ --net=host \

+ --privileged \

+ --device /dev/dri \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ -v $MODEL_PATH:/llm/llm-models \

+ -v /usr/lib/wsl:/usr/lib/wsl \

+ $DOCKER_IMAGE

+```

+

+

+## Run/Develop Pytorch Examples

+

+Press F1 to bring up the Command Palette and type in `Dev Containers: Attach to Running Container...` and select it and then select `my_container`

+

+Now you are in a running Docker Container, Open folder `/ipex-llm/python/llm/example/GPU/HF-Transformers-AutoModels/Model/`.

+

+

+

+

+

+

+## Launch Container

+

+Open the Terminal in VSCode (you can use the shortcut `` Ctrl+Shift+` ``), then pull ipex-llm-xpu Docker Image:

+

+```bash

+docker pull intelanalytics/ipex-llm-xpu:latest

+```

+

+Start ipex-llm-xpu Docker Container:

+

+```eval_rst

+.. tabs::

+ .. tab:: Linux

+

+ .. code-block:: bash

+

+ export DOCKER_IMAGE=intelanalytics/ipex-llm-xpu:latest

+ export CONTAINER_NAME=my_container

+ export MODEL_PATH=/llm/models[change to your model path]

+

+ docker run -itd \

+ --net=host \

+ --device=/dev/dri \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ -v $MODEL_PATH:/llm/models \

+ $DOCKER_IMAGE

+

+ .. tab:: Windows WSL

+

+ .. code-block:: bash

+

+ #/bin/bash

+ export DOCKER_IMAGE=intelanalytics/ipex-llm-xpu:latest

+ export CONTAINER_NAME=my_container

+ export MODEL_PATH=/llm/models[change to your model path]

+

+ sudo docker run -itd \

+ --net=host \

+ --privileged \

+ --device /dev/dri \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ -v $MODEL_PATH:/llm/llm-models \

+ -v /usr/lib/wsl:/usr/lib/wsl \

+ $DOCKER_IMAGE

+```

+

+

+## Run/Develop Pytorch Examples

+

+Press F1 to bring up the Command Palette and type in `Dev Containers: Attach to Running Container...` and select it and then select `my_container`

+

+Now you are in a running Docker Container, Open folder `/ipex-llm/python/llm/example/GPU/HF-Transformers-AutoModels/Model/`.

+

+

+  +

+

+In this folder, we provide several PyTorch examples that you could apply IPEX-LLM INT4 optimizations on models on Intel GPUs.

+

+For example, if your model is Llama-2-7b-chat-hf and mounted on /llm/models, you can navigate to llama2 directory, excute the following command to run example:

+ ```bash

+ cd

+

+

+In this folder, we provide several PyTorch examples that you could apply IPEX-LLM INT4 optimizations on models on Intel GPUs.

+

+For example, if your model is Llama-2-7b-chat-hf and mounted on /llm/models, you can navigate to llama2 directory, excute the following command to run example:

+ ```bash

+ cd  +

+

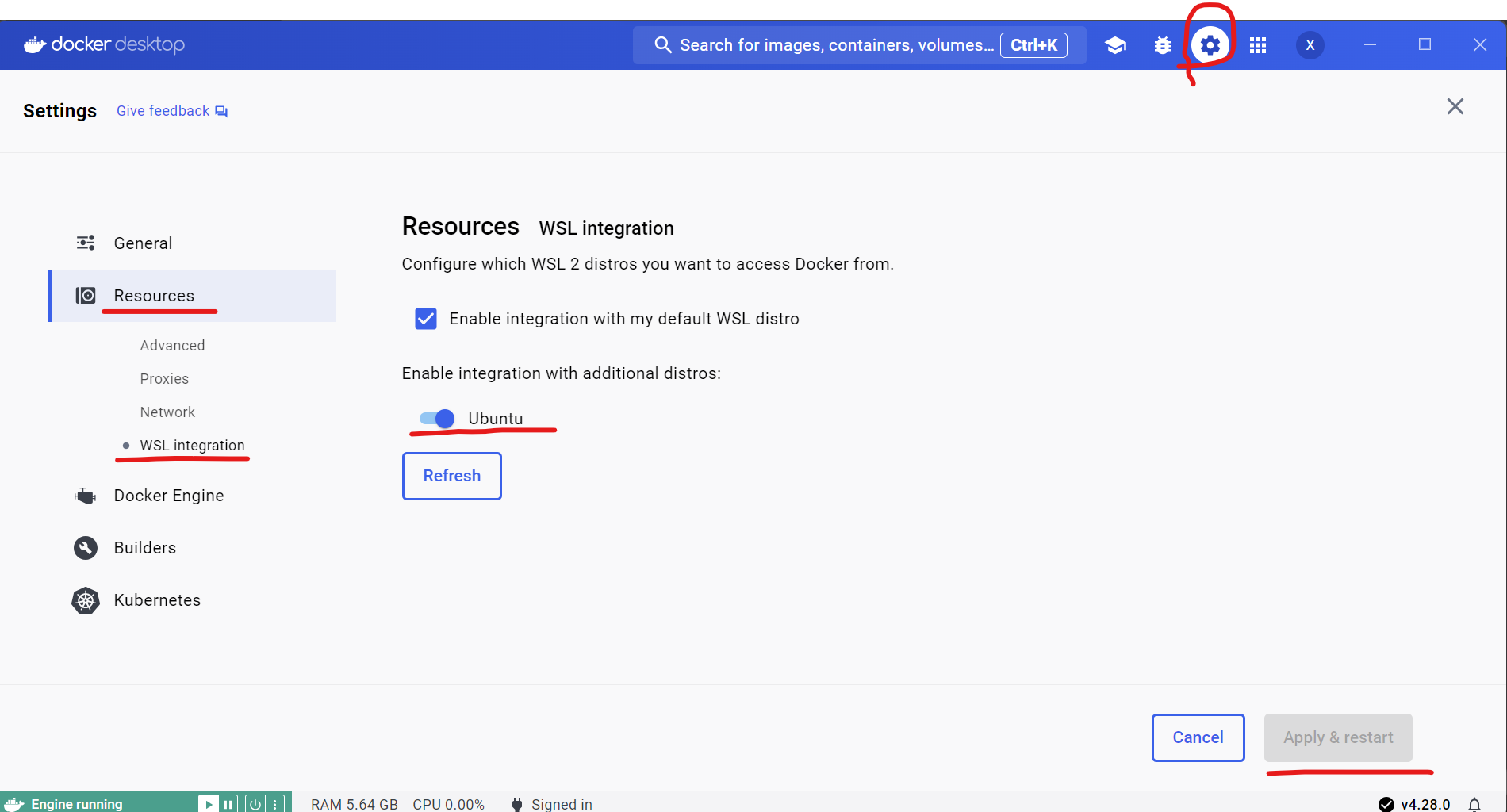

+```eval_rst

+.. tip::

+

+ If you encounter **Docker Engine stopped** when opening Docker Desktop, you can reopen it in administrator mode.

+```

+

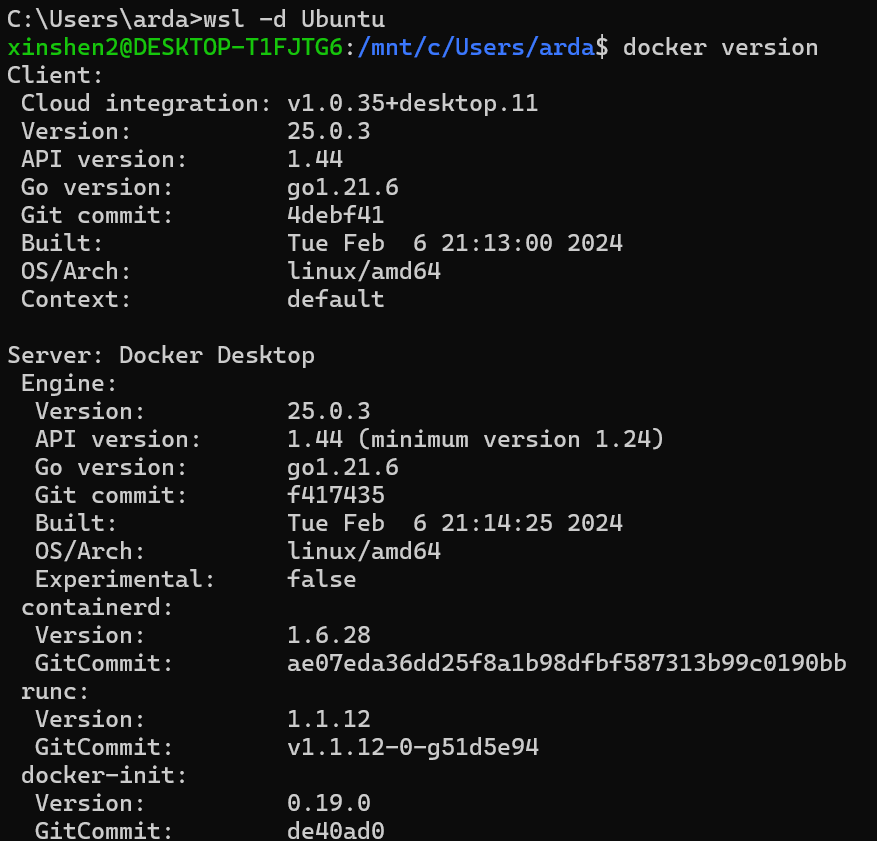

+ #### Verify Docker is enabled in WSL2

+

+ Execute the following commands in PowerShell or Command Prompt to verify that Docker is enabled in WSL2:

+ ```bash

+ wsl -d Ubuntu # Run Ubuntu WSL distribution

+ docker version # Check if Docker is enabled in WSL

+ ```

+

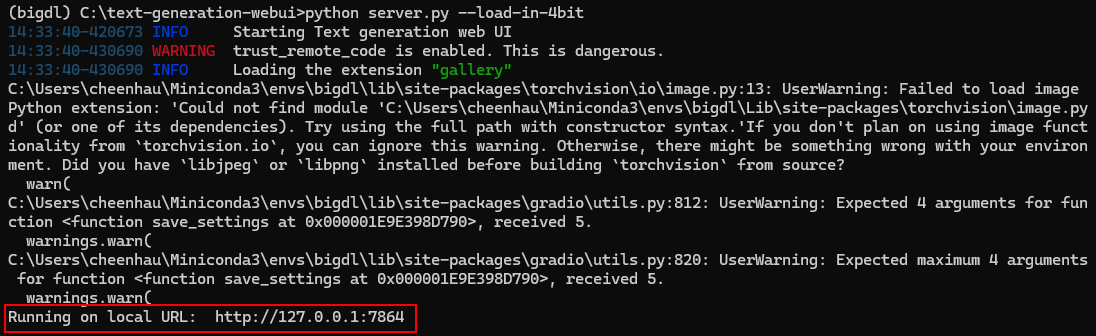

+You can see the output similar to the following:

+

+

+

+

+

+```eval_rst

+.. tip::

+

+ If you encounter **Docker Engine stopped** when opening Docker Desktop, you can reopen it in administrator mode.

+```

+

+ #### Verify Docker is enabled in WSL2

+

+ Execute the following commands in PowerShell or Command Prompt to verify that Docker is enabled in WSL2:

+ ```bash

+ wsl -d Ubuntu # Run Ubuntu WSL distribution

+ docker version # Check if Docker is enabled in WSL

+ ```

+

+You can see the output similar to the following:

+

+

+  +

+

+```eval_rst

+.. tip::

+

+ During the use of Docker in WSL, Docker Desktop needs to be kept open all the time.

+```

+

+

+## IPEX-LLM Docker Containers

+

+We have several docker images available for running LLMs on Intel GPUs. The following table lists the available images and their uses:

+

+| Image Name | Description | Use Case |

+|------------|-------------|----------|

+| intelanalytics/ipex-llm-cpu:latest | CPU Inference |For development and running LLMs using llama.cpp, Ollama and Python|

+| intelanalytics/ipex-llm-xpu:latest | GPU Inference |For development and running LLMs using llama.cpp, Ollama and Python|

+| intelanalytics/ipex-llm-serving-cpu:latest | CPU Serving|For serving multiple users/requests through REST APIs using vLLM/FastChat|

+| intelanalytics/ipex-llm-serving-xpu:latest | GPU Serving|For serving multiple users/requests through REST APIs using vLLM/FastChat|

+| intelanalytics/ipex-llm-finetune-qlora-cpu-standalone:latest | CPU Finetuning via Docker|For fine-tuning LLMs using QLora/Lora, etc. |

+|intelanalytics/ipex-llm-finetune-qlora-cpu-k8s:latest|CPU Finetuning via Kubernetes|For fine-tuning LLMs using QLora/Lora, etc. |

+| intelanalytics/ipex-llm-finetune-qlora-xpu:latest| GPU Finetuning|For fine-tuning LLMs using QLora/Lora, etc.|

+

+We have also provided several quickstarts for various usage scenarios:

+- [Run and develop LLM applications in PyTorch](./docker_pytorch_inference_gpu.html)

+

+... to be added soon.

+

+## Troubleshooting

+

+

+If your machine has both an integrated GPU (iGPU) and a dedicated GPU (dGPU) like ARC, you may encounter the following issue:

+

+```bash

+Abort was called at 62 line in file:

+./shared/source/os_interface/os_interface.h

+LIBXSMM_VERSION: main_stable-1.17-3651 (25693763)

+LIBXSMM_TARGET: adl [Intel(R) Core(TM) i7-14700K]

+Registry and code: 13 MB

+Command: python chat.py --model-path /llm/llm-models/chatglm2-6b/

+Uptime: 29.349235 s

+Aborted

+```

+To resolve this problem, you can disable the iGPU in Device Manager on Windows. For details, refer to [this guide](https://www.elevenforum.com/t/enable-or-disable-integrated-graphics-igpu-in-windows-11.18616/)

diff --git a/docs/mddocs/DockerGuides/fastchat_docker_quickstart.md b/docs/mddocs/DockerGuides/fastchat_docker_quickstart.md

new file mode 100644

index 00000000..786316fd

--- /dev/null

+++ b/docs/mddocs/DockerGuides/fastchat_docker_quickstart.md

@@ -0,0 +1,117 @@

+# FastChat Serving with IPEX-LLM on Intel GPUs via docker

+

+This guide demonstrates how to run `FastChat` serving with `IPEX-LLM` on Intel GPUs via Docker.

+

+## Install docker

+

+Follow the instructions in this [guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/DockerGuides/docker_windows_gpu.html#linux) to install Docker on Linux.

+

+## Pull the latest image

+

+```bash

+# This image will be updated every day

+docker pull intelanalytics/ipex-llm-serving-xpu:latest

+```

+

+## Start Docker Container

+

+ To map the `xpu` into the container, you need to specify `--device=/dev/dri` when booting the container. Change the `/path/to/models` to mount the models.

+

+```

+#/bin/bash

+export DOCKER_IMAGE=intelanalytics/ipex-llm-serving-xpu:latest

+export CONTAINER_NAME=ipex-llm-serving-xpu-container

+sudo docker run -itd \

+ --net=host \

+ --device=/dev/dri \

+ -v /path/to/models:/llm/models \

+ -e no_proxy=localhost,127.0.0.1 \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ $DOCKER_IMAGE

+```

+

+After the container is booted, you could get into the container through `docker exec`.

+

+```bash

+docker exec -it ipex-llm-serving-xpu-container /bin/bash

+```

+

+

+To verify the device is successfully mapped into the container, run `sycl-ls` to check the result. In a machine with Arc A770, the sampled output is:

+

+```bash

+root@arda-arc12:/# sycl-ls

+[opencl:acc:0] Intel(R) FPGA Emulation Platform for OpenCL(TM), Intel(R) FPGA Emulation Device 1.2 [2023.16.7.0.21_160000]

+[opencl:cpu:1] Intel(R) OpenCL, 13th Gen Intel(R) Core(TM) i9-13900K 3.0 [2023.16.7.0.21_160000]

+[opencl:gpu:2] Intel(R) OpenCL Graphics, Intel(R) Arc(TM) A770 Graphics 3.0 [23.17.26241.33]

+[ext_oneapi_level_zero:gpu:0] Intel(R) Level-Zero, Intel(R) Arc(TM) A770 Graphics 1.3 [1.3.26241]

+```

+

+

+## Running FastChat serving with IPEX-LLM on Intel GPU in Docker

+

+For convenience, we have provided a script named `/llm/start-fastchat-service.sh` for you to start the service.

+

+However, the script only provide instructions for the most common scenarios. If this script doesn't meet your needs, you can always find the complete guidance for FastChat at [Serving using IPEX-LLM and FastChat](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/fastchat_quickstart.html#start-the-service).

+

+Before starting the service, you can refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#runtime-configurations) to setup our recommended runtime configurations.

+

+Now we can start the FastChat service, you can use our provided script `/llm/start-fastchat-service.sh` like the following way:

+

+```bash

+# Only the MODEL_PATH needs to be set, other parameters have default values

+export MODEL_PATH=YOUR_SELECTED_MODEL_PATH

+export LOW_BIT_FORMAT=sym_int4

+export CONTROLLER_HOST=localhost

+export CONTROLLER_PORT=21001

+export WORKER_HOST=localhost

+export WORKER_PORT=21002

+export API_HOST=localhost

+export API_PORT=8000

+

+# Use the default model_worker

+bash /llm/start-fastchat-service.sh -w model_worker

+```

+

+If everything goes smoothly, the result should be similar to the following figure:

+

+

+

+

+

+```eval_rst

+.. tip::

+

+ During the use of Docker in WSL, Docker Desktop needs to be kept open all the time.

+```

+

+

+## IPEX-LLM Docker Containers

+

+We have several docker images available for running LLMs on Intel GPUs. The following table lists the available images and their uses:

+

+| Image Name | Description | Use Case |

+|------------|-------------|----------|

+| intelanalytics/ipex-llm-cpu:latest | CPU Inference |For development and running LLMs using llama.cpp, Ollama and Python|

+| intelanalytics/ipex-llm-xpu:latest | GPU Inference |For development and running LLMs using llama.cpp, Ollama and Python|

+| intelanalytics/ipex-llm-serving-cpu:latest | CPU Serving|For serving multiple users/requests through REST APIs using vLLM/FastChat|

+| intelanalytics/ipex-llm-serving-xpu:latest | GPU Serving|For serving multiple users/requests through REST APIs using vLLM/FastChat|

+| intelanalytics/ipex-llm-finetune-qlora-cpu-standalone:latest | CPU Finetuning via Docker|For fine-tuning LLMs using QLora/Lora, etc. |

+|intelanalytics/ipex-llm-finetune-qlora-cpu-k8s:latest|CPU Finetuning via Kubernetes|For fine-tuning LLMs using QLora/Lora, etc. |

+| intelanalytics/ipex-llm-finetune-qlora-xpu:latest| GPU Finetuning|For fine-tuning LLMs using QLora/Lora, etc.|

+

+We have also provided several quickstarts for various usage scenarios:

+- [Run and develop LLM applications in PyTorch](./docker_pytorch_inference_gpu.html)

+

+... to be added soon.

+

+## Troubleshooting

+

+

+If your machine has both an integrated GPU (iGPU) and a dedicated GPU (dGPU) like ARC, you may encounter the following issue:

+

+```bash

+Abort was called at 62 line in file:

+./shared/source/os_interface/os_interface.h

+LIBXSMM_VERSION: main_stable-1.17-3651 (25693763)

+LIBXSMM_TARGET: adl [Intel(R) Core(TM) i7-14700K]

+Registry and code: 13 MB

+Command: python chat.py --model-path /llm/llm-models/chatglm2-6b/

+Uptime: 29.349235 s

+Aborted

+```

+To resolve this problem, you can disable the iGPU in Device Manager on Windows. For details, refer to [this guide](https://www.elevenforum.com/t/enable-or-disable-integrated-graphics-igpu-in-windows-11.18616/)

diff --git a/docs/mddocs/DockerGuides/fastchat_docker_quickstart.md b/docs/mddocs/DockerGuides/fastchat_docker_quickstart.md

new file mode 100644

index 00000000..786316fd

--- /dev/null

+++ b/docs/mddocs/DockerGuides/fastchat_docker_quickstart.md

@@ -0,0 +1,117 @@

+# FastChat Serving with IPEX-LLM on Intel GPUs via docker

+

+This guide demonstrates how to run `FastChat` serving with `IPEX-LLM` on Intel GPUs via Docker.

+

+## Install docker

+

+Follow the instructions in this [guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/DockerGuides/docker_windows_gpu.html#linux) to install Docker on Linux.

+

+## Pull the latest image

+

+```bash

+# This image will be updated every day

+docker pull intelanalytics/ipex-llm-serving-xpu:latest

+```

+

+## Start Docker Container

+

+ To map the `xpu` into the container, you need to specify `--device=/dev/dri` when booting the container. Change the `/path/to/models` to mount the models.

+

+```

+#/bin/bash

+export DOCKER_IMAGE=intelanalytics/ipex-llm-serving-xpu:latest

+export CONTAINER_NAME=ipex-llm-serving-xpu-container

+sudo docker run -itd \

+ --net=host \

+ --device=/dev/dri \

+ -v /path/to/models:/llm/models \

+ -e no_proxy=localhost,127.0.0.1 \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ $DOCKER_IMAGE

+```

+

+After the container is booted, you could get into the container through `docker exec`.

+

+```bash

+docker exec -it ipex-llm-serving-xpu-container /bin/bash

+```

+

+

+To verify the device is successfully mapped into the container, run `sycl-ls` to check the result. In a machine with Arc A770, the sampled output is:

+

+```bash

+root@arda-arc12:/# sycl-ls

+[opencl:acc:0] Intel(R) FPGA Emulation Platform for OpenCL(TM), Intel(R) FPGA Emulation Device 1.2 [2023.16.7.0.21_160000]

+[opencl:cpu:1] Intel(R) OpenCL, 13th Gen Intel(R) Core(TM) i9-13900K 3.0 [2023.16.7.0.21_160000]

+[opencl:gpu:2] Intel(R) OpenCL Graphics, Intel(R) Arc(TM) A770 Graphics 3.0 [23.17.26241.33]

+[ext_oneapi_level_zero:gpu:0] Intel(R) Level-Zero, Intel(R) Arc(TM) A770 Graphics 1.3 [1.3.26241]

+```

+

+

+## Running FastChat serving with IPEX-LLM on Intel GPU in Docker

+

+For convenience, we have provided a script named `/llm/start-fastchat-service.sh` for you to start the service.

+

+However, the script only provide instructions for the most common scenarios. If this script doesn't meet your needs, you can always find the complete guidance for FastChat at [Serving using IPEX-LLM and FastChat](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/fastchat_quickstart.html#start-the-service).

+

+Before starting the service, you can refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#runtime-configurations) to setup our recommended runtime configurations.

+

+Now we can start the FastChat service, you can use our provided script `/llm/start-fastchat-service.sh` like the following way:

+

+```bash

+# Only the MODEL_PATH needs to be set, other parameters have default values

+export MODEL_PATH=YOUR_SELECTED_MODEL_PATH

+export LOW_BIT_FORMAT=sym_int4

+export CONTROLLER_HOST=localhost

+export CONTROLLER_PORT=21001

+export WORKER_HOST=localhost

+export WORKER_PORT=21002

+export API_HOST=localhost

+export API_PORT=8000

+

+# Use the default model_worker

+bash /llm/start-fastchat-service.sh -w model_worker

+```

+

+If everything goes smoothly, the result should be similar to the following figure:

+

+

+  +

+

+By default, we are using the `ipex_llm_worker` as the backend engine. You can also use `vLLM` as the backend engine. Try the following examples:

+

+```bash

+# Only the MODEL_PATH needs to be set, other parameters have default values

+export MODEL_PATH=YOUR_SELECTED_MODEL_PATH

+export LOW_BIT_FORMAT=sym_int4

+export CONTROLLER_HOST=localhost

+export CONTROLLER_PORT=21001

+export WORKER_HOST=localhost

+export WORKER_PORT=21002

+export API_HOST=localhost

+export API_PORT=8000

+

+# Use the default model_worker

+bash /llm/start-fastchat-service.sh -w vllm_worker

+```

+

+The `vllm_worker` may start slowly than normal `ipex_llm_worker`. The booted service should be similar to the following figure:

+

+

+

+

+

+By default, we are using the `ipex_llm_worker` as the backend engine. You can also use `vLLM` as the backend engine. Try the following examples:

+

+```bash

+# Only the MODEL_PATH needs to be set, other parameters have default values

+export MODEL_PATH=YOUR_SELECTED_MODEL_PATH

+export LOW_BIT_FORMAT=sym_int4

+export CONTROLLER_HOST=localhost

+export CONTROLLER_PORT=21001

+export WORKER_HOST=localhost

+export WORKER_PORT=21002

+export API_HOST=localhost

+export API_PORT=8000

+

+# Use the default model_worker

+bash /llm/start-fastchat-service.sh -w vllm_worker

+```

+

+The `vllm_worker` may start slowly than normal `ipex_llm_worker`. The booted service should be similar to the following figure:

+

+

+  +

+

+

+```eval_rst

+.. note::

+ To verify/use the service booted by the script, follow the instructions in `this guide

+

+

+

+```eval_rst

+.. note::

+ To verify/use the service booted by the script, follow the instructions in `this guide  +

diff --git a/docs/mddocs/DockerGuides/index.rst b/docs/mddocs/DockerGuides/index.rst

new file mode 100644

index 00000000..29781e52

--- /dev/null

+++ b/docs/mddocs/DockerGuides/index.rst

@@ -0,0 +1,15 @@

+IPEX-LLM Docker Container User Guides

+=====================================

+

+In this section, you will find guides related to using IPEX-LLM with Docker, covering how to:

+

+* `Overview of IPEX-LLM Containers <./docker_windows_gpu.html>`_

+

+* Inference in Python/C++

+ * `GPU Inference in Python with IPEX-LLM <./docker_pytorch_inference_gpu.html>`_

+ * `VSCode LLM Development with IPEX-LLM on Intel GPU <./docker_pytorch_inference_gpu.html>`_

+ * `llama.cpp/Ollama/Open-WebUI with IPEX-LLM on Intel GPU <./docker_cpp_xpu_quickstart.html>`_

+* Serving

+ * `FastChat with IPEX-LLM on Intel GPU <./fastchat_docker_quickstart.html>`_

+ * `vLLM with IPEX-LLM on Intel GPU <./vllm_docker_quickstart.html>`_

+ * `vLLM with IPEX-LLM on Intel CPU <./vllm_cpu_docker_quickstart.html>`_

diff --git a/docs/mddocs/DockerGuides/vllm_cpu_docker_quickstart.md b/docs/mddocs/DockerGuides/vllm_cpu_docker_quickstart.md

new file mode 100644

index 00000000..36b39ed5

--- /dev/null

+++ b/docs/mddocs/DockerGuides/vllm_cpu_docker_quickstart.md

@@ -0,0 +1,118 @@

+# vLLM Serving with IPEX-LLM on Intel CPU via Docker

+

+This guide demonstrates how to run `vLLM` serving with `ipex-llm` on Intel CPU via Docker.

+

+## Install docker

+

+Follow the instructions in this [guide](https://www.docker.com/get-started/) to install Docker on Linux.

+

+## Pull the latest image

+

+*Note: For running vLLM serving on Intel CPUs, you can currently use either the `intelanalytics/ipex-llm-serving-cpu:latest` or `intelanalytics/ipex-llm-serving-vllm-cpu:latest` Docker image.*

+

+```bash

+# This image will be updated every day

+docker pull intelanalytics/ipex-llm-serving-cpu:latest

+```

+

+## Start Docker Container

+

+To fully use your Intel CPU to run vLLM inference and serving, you should

+```

+#/bin/bash

+export DOCKER_IMAGE=intelanalytics/ipex-llm-serving-cpu:latest

+export CONTAINER_NAME=ipex-llm-serving-cpu-container

+sudo docker run -itd \

+ --net=host \

+ --cpuset-cpus="0-47" \

+ --cpuset-mems="0" \

+ -v /path/to/models:/llm/models \

+ -e no_proxy=localhost,127.0.0.1 \

+ --memory="64G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ $DOCKER_IMAGE

+```

+

+After the container is booted, you could get into the container through `docker exec`.

+

+```bash

+docker exec -it ipex-llm-serving-cpu-container /bin/bash

+```

+

+## Running vLLM serving with IPEX-LLM on Intel CPU in Docker

+

+We have included multiple vLLM-related files in `/llm/`:

+1. `vllm_offline_inference.py`: Used for vLLM offline inference example

+2. `benchmark_vllm_throughput.py`: Used for benchmarking throughput

+3. `payload-1024.lua`: Used for testing request per second using 1k-128 request

+4. `start-vllm-service.sh`: Used for template for starting vLLM service

+

+Before performing benchmark or starting the service, you can refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_cpu.html#environment-setup) to setup our recommended runtime configurations.

+

+### Service

+

+A script named `/llm/start-vllm-service.sh` have been included in the image for starting the service conveniently.

+

+Modify the `model` and `served_model_name` in the script so that it fits your requirement. The `served_model_name` indicates the model name used in the API.

+

+Then start the service using `bash /llm/start-vllm-service.sh`, the following message should be print if the service started successfully.

+

+If the service have booted successfully, you should see the output similar to the following figure:

+

+

+

+

diff --git a/docs/mddocs/DockerGuides/index.rst b/docs/mddocs/DockerGuides/index.rst

new file mode 100644

index 00000000..29781e52

--- /dev/null

+++ b/docs/mddocs/DockerGuides/index.rst

@@ -0,0 +1,15 @@

+IPEX-LLM Docker Container User Guides

+=====================================

+

+In this section, you will find guides related to using IPEX-LLM with Docker, covering how to:

+

+* `Overview of IPEX-LLM Containers <./docker_windows_gpu.html>`_

+

+* Inference in Python/C++

+ * `GPU Inference in Python with IPEX-LLM <./docker_pytorch_inference_gpu.html>`_

+ * `VSCode LLM Development with IPEX-LLM on Intel GPU <./docker_pytorch_inference_gpu.html>`_

+ * `llama.cpp/Ollama/Open-WebUI with IPEX-LLM on Intel GPU <./docker_cpp_xpu_quickstart.html>`_

+* Serving

+ * `FastChat with IPEX-LLM on Intel GPU <./fastchat_docker_quickstart.html>`_

+ * `vLLM with IPEX-LLM on Intel GPU <./vllm_docker_quickstart.html>`_

+ * `vLLM with IPEX-LLM on Intel CPU <./vllm_cpu_docker_quickstart.html>`_

diff --git a/docs/mddocs/DockerGuides/vllm_cpu_docker_quickstart.md b/docs/mddocs/DockerGuides/vllm_cpu_docker_quickstart.md

new file mode 100644

index 00000000..36b39ed5

--- /dev/null

+++ b/docs/mddocs/DockerGuides/vllm_cpu_docker_quickstart.md

@@ -0,0 +1,118 @@

+# vLLM Serving with IPEX-LLM on Intel CPU via Docker

+

+This guide demonstrates how to run `vLLM` serving with `ipex-llm` on Intel CPU via Docker.

+

+## Install docker

+

+Follow the instructions in this [guide](https://www.docker.com/get-started/) to install Docker on Linux.

+

+## Pull the latest image

+

+*Note: For running vLLM serving on Intel CPUs, you can currently use either the `intelanalytics/ipex-llm-serving-cpu:latest` or `intelanalytics/ipex-llm-serving-vllm-cpu:latest` Docker image.*

+

+```bash

+# This image will be updated every day

+docker pull intelanalytics/ipex-llm-serving-cpu:latest

+```

+

+## Start Docker Container

+

+To fully use your Intel CPU to run vLLM inference and serving, you should

+```

+#/bin/bash

+export DOCKER_IMAGE=intelanalytics/ipex-llm-serving-cpu:latest

+export CONTAINER_NAME=ipex-llm-serving-cpu-container

+sudo docker run -itd \

+ --net=host \

+ --cpuset-cpus="0-47" \

+ --cpuset-mems="0" \

+ -v /path/to/models:/llm/models \

+ -e no_proxy=localhost,127.0.0.1 \

+ --memory="64G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ $DOCKER_IMAGE

+```

+

+After the container is booted, you could get into the container through `docker exec`.

+

+```bash

+docker exec -it ipex-llm-serving-cpu-container /bin/bash

+```

+

+## Running vLLM serving with IPEX-LLM on Intel CPU in Docker

+

+We have included multiple vLLM-related files in `/llm/`:

+1. `vllm_offline_inference.py`: Used for vLLM offline inference example

+2. `benchmark_vllm_throughput.py`: Used for benchmarking throughput

+3. `payload-1024.lua`: Used for testing request per second using 1k-128 request

+4. `start-vllm-service.sh`: Used for template for starting vLLM service

+

+Before performing benchmark or starting the service, you can refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_cpu.html#environment-setup) to setup our recommended runtime configurations.

+

+### Service

+

+A script named `/llm/start-vllm-service.sh` have been included in the image for starting the service conveniently.

+

+Modify the `model` and `served_model_name` in the script so that it fits your requirement. The `served_model_name` indicates the model name used in the API.

+

+Then start the service using `bash /llm/start-vllm-service.sh`, the following message should be print if the service started successfully.

+

+If the service have booted successfully, you should see the output similar to the following figure:

+

+

+  +

+

+

+#### Verify

+After the service has been booted successfully, you can send a test request using `curl`. Here, `YOUR_MODEL` should be set equal to `served_model_name` in your booting script, e.g. `Qwen1.5`.

+

+```bash

+curl http://localhost:8000/v1/completions \

+-H "Content-Type: application/json" \

+-d '{

+ "model": "YOUR_MODEL",

+ "prompt": "San Francisco is a",

+ "max_tokens": 128,

+ "temperature": 0

+}' | jq '.choices[0].text'

+```

+

+Below shows an example output using `Qwen1.5-7B-Chat` with low-bit format `sym_int4`:

+

+

+

+

+

+

+#### Verify

+After the service has been booted successfully, you can send a test request using `curl`. Here, `YOUR_MODEL` should be set equal to `served_model_name` in your booting script, e.g. `Qwen1.5`.

+

+```bash

+curl http://localhost:8000/v1/completions \

+-H "Content-Type: application/json" \

+-d '{

+ "model": "YOUR_MODEL",

+ "prompt": "San Francisco is a",

+ "max_tokens": 128,

+ "temperature": 0

+}' | jq '.choices[0].text'

+```

+

+Below shows an example output using `Qwen1.5-7B-Chat` with low-bit format `sym_int4`:

+

+

+  +

+

+#### Tuning

+

+You can tune the service using these four arguments:

+- `--max-model-len`

+- `--max-num-batched-token`

+- `--max-num-seq`

+

+You can refer to this [doc](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#service) for a detailed explaination on these parameters.

+

+### Benchmark

+

+#### Online benchmark throurgh api_server

+

+We can benchmark the api_server to get an estimation about TPS (transactions per second). To do so, you need to start the service first according to the instructions mentioned above.

+

+Then in the container, do the following:

+1. modify the `/llm/payload-1024.lua` so that the "model" attribute is correct. By default, we use a prompt that is roughly 1024 token long, you can change it if needed.

+2. Start the benchmark using `wrk` using the script below:

+

+```bash

+cd /llm

+# warmup

+wrk -t4 -c4 -d3m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+# You can change -t and -c to control the concurrency.

+# By default, we use 8 connections to benchmark the service.

+wrk -t8 -c8 -d15m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+```

+

+#### Offline benchmark through benchmark_vllm_throughput.py

+

+Please refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#performing-benchmark) on how to use `benchmark_vllm_throughput.py` for benchmarking.

diff --git a/docs/mddocs/DockerGuides/vllm_docker_quickstart.md b/docs/mddocs/DockerGuides/vllm_docker_quickstart.md

new file mode 100644

index 00000000..eb7fff3e

--- /dev/null

+++ b/docs/mddocs/DockerGuides/vllm_docker_quickstart.md

@@ -0,0 +1,146 @@

+# vLLM Serving with IPEX-LLM on Intel GPUs via Docker

+

+This guide demonstrates how to run `vLLM` serving with `IPEX-LLM` on Intel GPUs via Docker.

+

+## Install docker

+

+Follow the instructions in this [guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/DockerGuides/docker_windows_gpu.html#linux) to install Docker on Linux.

+

+## Pull the latest image

+

+*Note: For running vLLM serving on Intel GPUs, you can currently use either the `intelanalytics/ipex-llm-serving-xpu:latest` or `intelanalytics/ipex-llm-serving-vllm-xpu:latest` Docker image.*

+```bash

+# This image will be updated every day

+docker pull intelanalytics/ipex-llm-serving-xpu:latest

+```

+

+## Start Docker Container

+

+ To map the `xpu` into the container, you need to specify `--device=/dev/dri` when booting the container. Change the `/path/to/models` to mount the models.

+

+```

+#/bin/bash

+export DOCKER_IMAGE=intelanalytics/ipex-llm-serving-xpu:latest

+export CONTAINER_NAME=ipex-llm-serving-xpu-container

+sudo docker run -itd \

+ --net=host \

+ --device=/dev/dri \

+ -v /path/to/models:/llm/models \

+ -e no_proxy=localhost,127.0.0.1 \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ $DOCKER_IMAGE

+```

+

+After the container is booted, you could get into the container through `docker exec`.

+

+```bash

+docker exec -it ipex-llm-serving-xpu-container /bin/bash

+```

+

+

+To verify the device is successfully mapped into the container, run `sycl-ls` to check the result. In a machine with Arc A770, the sampled output is:

+

+```bash

+root@arda-arc12:/# sycl-ls

+[opencl:acc:0] Intel(R) FPGA Emulation Platform for OpenCL(TM), Intel(R) FPGA Emulation Device 1.2 [2023.16.7.0.21_160000]

+[opencl:cpu:1] Intel(R) OpenCL, 13th Gen Intel(R) Core(TM) i9-13900K 3.0 [2023.16.7.0.21_160000]

+[opencl:gpu:2] Intel(R) OpenCL Graphics, Intel(R) Arc(TM) A770 Graphics 3.0 [23.17.26241.33]

+[ext_oneapi_level_zero:gpu:0] Intel(R) Level-Zero, Intel(R) Arc(TM) A770 Graphics 1.3 [1.3.26241]

+```

+

+## Running vLLM serving with IPEX-LLM on Intel GPU in Docker

+

+We have included multiple vLLM-related files in `/llm/`:

+1. `vllm_offline_inference.py`: Used for vLLM offline inference example

+2. `benchmark_vllm_throughput.py`: Used for benchmarking throughput

+3. `payload-1024.lua`: Used for testing request per second using 1k-128 request

+4. `start-vllm-service.sh`: Used for template for starting vLLM service

+

+Before performing benchmark or starting the service, you can refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#runtime-configurations) to setup our recommended runtime configurations.

+

+

+### Service

+

+#### Single card serving

+

+A script named `/llm/start-vllm-service.sh` have been included in the image for starting the service conveniently.

+

+Modify the `model` and `served_model_name` in the script so that it fits your requirement. The `served_model_name` indicates the model name used in the API.

+

+Then start the service using `bash /llm/start-vllm-service.sh`, the following message should be print if the service started successfully.

+

+If the service have booted successfully, you should see the output similar to the following figure:

+

+

+

+

+

+#### Tuning

+

+You can tune the service using these four arguments:

+- `--max-model-len`

+- `--max-num-batched-token`

+- `--max-num-seq`

+

+You can refer to this [doc](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#service) for a detailed explaination on these parameters.

+

+### Benchmark

+

+#### Online benchmark throurgh api_server

+

+We can benchmark the api_server to get an estimation about TPS (transactions per second). To do so, you need to start the service first according to the instructions mentioned above.

+

+Then in the container, do the following:

+1. modify the `/llm/payload-1024.lua` so that the "model" attribute is correct. By default, we use a prompt that is roughly 1024 token long, you can change it if needed.

+2. Start the benchmark using `wrk` using the script below:

+

+```bash

+cd /llm

+# warmup

+wrk -t4 -c4 -d3m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+# You can change -t and -c to control the concurrency.

+# By default, we use 8 connections to benchmark the service.

+wrk -t8 -c8 -d15m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+```

+

+#### Offline benchmark through benchmark_vllm_throughput.py

+

+Please refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#performing-benchmark) on how to use `benchmark_vllm_throughput.py` for benchmarking.

diff --git a/docs/mddocs/DockerGuides/vllm_docker_quickstart.md b/docs/mddocs/DockerGuides/vllm_docker_quickstart.md

new file mode 100644

index 00000000..eb7fff3e

--- /dev/null

+++ b/docs/mddocs/DockerGuides/vllm_docker_quickstart.md

@@ -0,0 +1,146 @@

+# vLLM Serving with IPEX-LLM on Intel GPUs via Docker

+

+This guide demonstrates how to run `vLLM` serving with `IPEX-LLM` on Intel GPUs via Docker.

+

+## Install docker

+

+Follow the instructions in this [guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/DockerGuides/docker_windows_gpu.html#linux) to install Docker on Linux.

+

+## Pull the latest image

+

+*Note: For running vLLM serving on Intel GPUs, you can currently use either the `intelanalytics/ipex-llm-serving-xpu:latest` or `intelanalytics/ipex-llm-serving-vllm-xpu:latest` Docker image.*

+```bash

+# This image will be updated every day

+docker pull intelanalytics/ipex-llm-serving-xpu:latest

+```

+

+## Start Docker Container

+

+ To map the `xpu` into the container, you need to specify `--device=/dev/dri` when booting the container. Change the `/path/to/models` to mount the models.

+

+```

+#/bin/bash

+export DOCKER_IMAGE=intelanalytics/ipex-llm-serving-xpu:latest

+export CONTAINER_NAME=ipex-llm-serving-xpu-container

+sudo docker run -itd \

+ --net=host \

+ --device=/dev/dri \

+ -v /path/to/models:/llm/models \

+ -e no_proxy=localhost,127.0.0.1 \

+ --memory="32G" \

+ --name=$CONTAINER_NAME \

+ --shm-size="16g" \

+ $DOCKER_IMAGE

+```

+

+After the container is booted, you could get into the container through `docker exec`.

+

+```bash

+docker exec -it ipex-llm-serving-xpu-container /bin/bash

+```

+

+

+To verify the device is successfully mapped into the container, run `sycl-ls` to check the result. In a machine with Arc A770, the sampled output is:

+

+```bash

+root@arda-arc12:/# sycl-ls

+[opencl:acc:0] Intel(R) FPGA Emulation Platform for OpenCL(TM), Intel(R) FPGA Emulation Device 1.2 [2023.16.7.0.21_160000]

+[opencl:cpu:1] Intel(R) OpenCL, 13th Gen Intel(R) Core(TM) i9-13900K 3.0 [2023.16.7.0.21_160000]

+[opencl:gpu:2] Intel(R) OpenCL Graphics, Intel(R) Arc(TM) A770 Graphics 3.0 [23.17.26241.33]

+[ext_oneapi_level_zero:gpu:0] Intel(R) Level-Zero, Intel(R) Arc(TM) A770 Graphics 1.3 [1.3.26241]

+```

+

+## Running vLLM serving with IPEX-LLM on Intel GPU in Docker

+

+We have included multiple vLLM-related files in `/llm/`:

+1. `vllm_offline_inference.py`: Used for vLLM offline inference example

+2. `benchmark_vllm_throughput.py`: Used for benchmarking throughput

+3. `payload-1024.lua`: Used for testing request per second using 1k-128 request

+4. `start-vllm-service.sh`: Used for template for starting vLLM service

+

+Before performing benchmark or starting the service, you can refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#runtime-configurations) to setup our recommended runtime configurations.

+

+

+### Service

+

+#### Single card serving

+

+A script named `/llm/start-vllm-service.sh` have been included in the image for starting the service conveniently.

+

+Modify the `model` and `served_model_name` in the script so that it fits your requirement. The `served_model_name` indicates the model name used in the API.

+

+Then start the service using `bash /llm/start-vllm-service.sh`, the following message should be print if the service started successfully.

+

+If the service have booted successfully, you should see the output similar to the following figure:

+

+

+  +

+

+

+#### Multi-card serving

+

+vLLM supports to utilize multiple cards through tensor parallel.

+

+You can refer to this [documentation](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#about-tensor-parallel) on how to utilize the `tensor-parallel` feature and start the service.

+

+#### Verify

+After the service has been booted successfully, you can send a test request using `curl`. Here, `YOUR_MODEL` should be set equal to `served_model_name` in your booting script, e.g. `Qwen1.5`.

+

+

+```bash

+curl http://localhost:8000/v1/completions \

+-H "Content-Type: application/json" \

+-d '{

+ "model": "YOUR_MODEL",

+ "prompt": "San Francisco is a",

+ "max_tokens": 128,

+ "temperature": 0

+}' | jq '.choices[0].text'

+```

+

+Below shows an example output using `Qwen1.5-7B-Chat` with low-bit format `sym_int4`:

+

+

+

+

+

+

+#### Multi-card serving

+

+vLLM supports to utilize multiple cards through tensor parallel.

+

+You can refer to this [documentation](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#about-tensor-parallel) on how to utilize the `tensor-parallel` feature and start the service.

+

+#### Verify

+After the service has been booted successfully, you can send a test request using `curl`. Here, `YOUR_MODEL` should be set equal to `served_model_name` in your booting script, e.g. `Qwen1.5`.

+

+

+```bash

+curl http://localhost:8000/v1/completions \

+-H "Content-Type: application/json" \

+-d '{

+ "model": "YOUR_MODEL",

+ "prompt": "San Francisco is a",

+ "max_tokens": 128,

+ "temperature": 0

+}' | jq '.choices[0].text'

+```

+

+Below shows an example output using `Qwen1.5-7B-Chat` with low-bit format `sym_int4`:

+

+

+  +

+

+#### Tuning

+

+You can tune the service using these four arguments:

+- `--gpu-memory-utilization`

+- `--max-model-len`

+- `--max-num-batched-token`

+- `--max-num-seq`

+

+You can refer to this [doc](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#service) for a detailed explaination on these parameters.

+

+### Benchmark

+

+#### Online benchmark throurgh api_server

+

+We can benchmark the api_server to get an estimation about TPS (transactions per second). To do so, you need to start the service first according to the instructions mentioned above.

+

+Then in the container, do the following:

+1. modify the `/llm/payload-1024.lua` so that the "model" attribute is correct. By default, we use a prompt that is roughly 1024 token long, you can change it if needed.

+2. Start the benchmark using `wrk` using the script below:

+

+```bash

+cd /llm

+# warmup due to JIT compliation

+wrk -t4 -c4 -d3m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+# You can change -t and -c to control the concurrency.

+# By default, we use 12 connections to benchmark the service.

+wrk -t12 -c12 -d15m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+```

+

+The following figure shows performing benchmark on `Llama-2-7b-chat-hf` using the above script:

+

+

+

+

+

+#### Tuning

+

+You can tune the service using these four arguments:

+- `--gpu-memory-utilization`

+- `--max-model-len`

+- `--max-num-batched-token`

+- `--max-num-seq`

+

+You can refer to this [doc](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#service) for a detailed explaination on these parameters.

+

+### Benchmark

+

+#### Online benchmark throurgh api_server

+

+We can benchmark the api_server to get an estimation about TPS (transactions per second). To do so, you need to start the service first according to the instructions mentioned above.

+

+Then in the container, do the following:

+1. modify the `/llm/payload-1024.lua` so that the "model" attribute is correct. By default, we use a prompt that is roughly 1024 token long, you can change it if needed.

+2. Start the benchmark using `wrk` using the script below:

+

+```bash

+cd /llm

+# warmup due to JIT compliation

+wrk -t4 -c4 -d3m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+# You can change -t and -c to control the concurrency.

+# By default, we use 12 connections to benchmark the service.

+wrk -t12 -c12 -d15m -s payload-1024.lua http://localhost:8000/v1/completions --timeout 1h

+```

+

+The following figure shows performing benchmark on `Llama-2-7b-chat-hf` using the above script:

+

+

+  +

+

+

+#### Offline benchmark through benchmark_vllm_throughput.py

+

+Please refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#performing-benchmark) on how to use `benchmark_vllm_throughput.py` for benchmarking.

diff --git a/docs/mddocs/Inference/Self_Speculative_Decoding.md b/docs/mddocs/Inference/Self_Speculative_Decoding.md

new file mode 100644

index 00000000..99179194

--- /dev/null

+++ b/docs/mddocs/Inference/Self_Speculative_Decoding.md

@@ -0,0 +1,23 @@

+# Self-Speculative Decoding

+

+### Speculative Decoding in Practice

+In [speculative](https://arxiv.org/abs/2302.01318) [decoding](https://arxiv.org/abs/2211.17192), a small (draft) model quickly generates multiple draft tokens, which are then verified in parallel by the large (target) model. While speculative decoding can effectively speed up the target model, ***in practice it is difficult to maintain or even obtain a proper draft model***, especially when the target model is finetuned with customized data.

+

+### Self-Speculative Decoding

+Built on top of the concept of “[self-speculative decoding](https://arxiv.org/abs/2309.08168)”, IPEX-LLM can now accelerate the original FP16 or BF16 model ***without the need of a separate draft model or model finetuning***; instead, it automatically converts the original model to INT4, and uses the INT4 model as the draft model behind the scene. In practice, this brings ***~30% speedup*** for FP16 and BF16 LLM inference latency on Intel GPU and CPU respectively.

+

+### Using IPEX-LLM Self-Speculative Decoding

+Please refer to IPEX-LLM self-speculative decoding code snippets below, and the detailed [GPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/GPU/Speculative-Decoding) and [CPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/CPU/Speculative-Decoding) examples in the project repo.

+

+```python

+model = AutoModelForCausalLM.from_pretrained(model_path,

+ optimize_model=True,

+ torch_dtype=torch.float16, #use bfloat16 on cpu

+ load_in_low_bit="fp16", #use bf16 on cpu

+ speculative=True, #set speculative to true

+ trust_remote_code=True,

+ use_cache=True)

+output = model.generate(input_ids,

+ max_new_tokens=args.n_predict,

+ do_sample=False)

+```

diff --git a/docs/mddocs/Overview/FAQ/faq.md b/docs/mddocs/Overview/FAQ/faq.md

new file mode 100644

index 00000000..caf8bd51

--- /dev/null

+++ b/docs/mddocs/Overview/FAQ/faq.md

@@ -0,0 +1,79 @@

+# Frequently Asked Questions (FAQ)

+

+## General Info & Concepts

+

+### GGUF format usage with IPEX-LLM?

+

+IPEX-LLM supports running GGUF/AWQ/GPTQ models on both [CPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations) and [GPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/GPU/HF-Transformers-AutoModels/Advanced-Quantizations).

+Please also refer to [here](https://github.com/intel-analytics/ipex-llm?tab=readme-ov-file#latest-update-) for our latest support.

+

+## How to Resolve Errors

+

+### Fail to install `ipex-llm` through `pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/` or `pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/`

+

+You could try to install IPEX-LLM dependencies for Intel XPU from source archives:

+- For Windows system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#install-ipex-llm-from-wheel) for the steps.

+- For Linux system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#id3) for the steps.

+

+### PyTorch is not linked with support for xpu devices

+

+1. Before running on Intel GPUs, please make sure you've prepared environment follwing [installation instruction](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html).

+2. If you are using an older version of `ipex-llm` (specifically, older than 2.5.0b20240104), you need to manually add `import intel_extension_for_pytorch as ipex` at the beginning of your code.

+3. After optimizing the model with IPEX-LLM, you need to move model to GPU through `model = model.to('xpu')`.

+4. If you have mutil GPUs, you could refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/KeyFeatures/multi_gpus_selection.html) for details about GPU selection.

+5. If you do inference using the optimized model on Intel GPUs, you also need to set `to('xpu')` for input tensors.

+

+### Import `intel_extension_for_pytorch` error on Windows GPU

+

+Please refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#error-loading-intel-extension-for-pytorch) for detailed guide. We list the possible missing requirements in environment which could lead to this error.

+

+### XPU device count is zero

+

+It's recommended to reinstall driver:

+- For Windows system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#prerequisites) for the steps.

+- For Linux system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#id1) for the steps.

+

+### Error such as `The size of tensor a (33) must match the size of tensor b (17) at non-singleton dimension 2` duing attention forward function

+

+If you are using IPEX-LLM PyTorch API, please try to set `optimize_llm=False` manually when call `optimize_model` function to work around. As for IPEX-LLM `transformers`-style API, you could try to set `optimize_model=False` manually when call `from_pretrained` function to work around.

+

+### ValueError: Unrecognized configuration class

+

+This error is not quite relevant to IPEX-LLM. It could be that you're using the incorrect AutoClass, or the transformers version is not updated, or transformers does not support using AutoClasses to load this model. You need to refer to the model card in huggingface to confirm these information. Besides, if you load the model from local path, please also make sure you download the complete model files.

+

+### `mixed dtype (CPU): expect input to have scalar type of BFloat16` during inference

+

+You could solve this error by converting the optimized model to `bf16` through `model.to(torch.bfloat16)` before inference.

+

+### Native API failed. Native API returns: -5 (PI_ERROR_OUT_OF_RESOURCES) -5 (PI_ERROR_OUT_OF_RESOURCES)

+

+This error is caused by out of GPU memory. Some possible solutions to decrease GPU memory uage:

+1. If you run several models continuously, please make sure you have released GPU memory of previous model through `del model` timely.

+2. You could try `model = model.float16()` or `model = model.bfloat16()` before moving model to GPU to use less GPU memory.

+3. You could try set `cpu_embedding=True` when call `from_pretrained` of AutoClass or `optimize_model` function.

+

+### Failed to enable AMX

+

+You could use `export BIGDL_LLM_AMX_DISABLED=1` to disable AMX manually and solve this error.

+

+### oneCCL: comm_selector.cpp:57 create_comm_impl: EXCEPTION: ze_data was not initialized

+

+You may encounter this error during finetuning on multi GPUs. Please try `sudo apt install level-zero-dev` to fix it.

+

+### Random and unreadable output of Gemma-7b-it on Arc770 ubuntu 22.04 due to driver and OneAPI missmatching.

+

+If driver and OneAPI missmatching, it will lead to some error when IPEX-LLM uses XMX(short prompts) for speeding up.

+The output of `What's AI?` may like below:

+```

+wiedzy Artificial Intelligence meliti: Artificial Intelligence undenti beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng

+```

+If you meet this error. Please check your driver version and OneAPI version. Commnad is `sudo apt list --installed | egrep "intel-basekit|intel-level-zero-gpu"`.

+Make sure intel-basekit>=2024.0.1-43 and intel-level-zero-gpu>=1.3.27191.42-775~22.04.

+

+### Too many open files

+

+You may encounter this error during finetuning, expecially when run 70B model. Please raise the system open file limit using `ulimit -n 1048576`.

+

+### `RuntimeError: could not create a primitive` on Windows

+

+This error may happen when multiple GPUs exists for Windows Users. To solve this error, you can open Device Manager (search "Device Manager" in your start menu). Then click the "Display adapter" option, and disable all the GPU device you do not want to use. Restart your computer and try again. IPEX-LLM should work fine this time.

\ No newline at end of file

diff --git a/docs/mddocs/Overview/KeyFeatures/cli.md b/docs/mddocs/Overview/KeyFeatures/cli.md

new file mode 100644

index 00000000..ab162594

--- /dev/null

+++ b/docs/mddocs/Overview/KeyFeatures/cli.md

@@ -0,0 +1,40 @@

+# CLI (Command Line Interface) Tool

+

+```eval_rst

+

+.. note::

+

+ Currently ``ipex-llm`` CLI supports *LLaMA* (e.g., vicuna), *GPT-NeoX* (e.g., redpajama), *BLOOM* (e.g., pheonix) and *GPT2* (e.g., starcoder) model architecture; for other models, you may use the ``transformers``-style or LangChain APIs.

+```

+

+## Convert Model

+

+You may convert the downloaded model into native INT4 format using `llm-convert`.

+

+```bash

+# convert PyTorch (fp16 or fp32) model;

+# llama/bloom/gptneox/starcoder model family is currently supported

+llm-convert "/path/to/model/" --model-format pth --model-family "bloom" --outfile "/path/to/output/"

+

+# convert GPTQ-4bit model

+# only llama model family is currently supported

+llm-convert "/path/to/model/" --model-format gptq --model-family "llama" --outfile "/path/to/output/"

+```

+

+## Run Model

+

+You may run the converted model using `llm-cli` or `llm-chat` (built on top of `main.cpp` in [`llama.cpp`](https://github.com/ggerganov/llama.cpp))

+

+```bash

+# help

+# llama/bloom/gptneox/starcoder model family is currently supported

+llm-cli -x gptneox -h

+

+# text completion

+# llama/bloom/gptneox/starcoder model family is currently supported

+llm-cli -t 16 -x gptneox -m "/path/to/output/model.bin" -p 'Once upon a time,'

+

+# chat mode

+# llama/gptneox model family is currently supported

+llm-chat -m "/path/to/output/model.bin" -x llama

+```

\ No newline at end of file

diff --git a/docs/mddocs/Overview/KeyFeatures/finetune.md b/docs/mddocs/Overview/KeyFeatures/finetune.md

new file mode 100644

index 00000000..b895b89f

--- /dev/null

+++ b/docs/mddocs/Overview/KeyFeatures/finetune.md

@@ -0,0 +1,64 @@

+# Finetune (QLoRA)

+

+We also support finetuning LLMs (large language models) using QLoRA with IPEX-LLM 4bit optimizations on Intel GPUs.

+

+```eval_rst

+.. note::

+

+ Currently, only Hugging Face Transformers models are supported running QLoRA finetuning.

+```

+

+To help you better understand the finetuning process, here we use model [Llama-2-7b-hf](https://huggingface.co/meta-llama/Llama-2-7b-hf) as an example.

+

+**Make sure you have prepared environment following instructions [here](../install_gpu.html).**

+

+```eval_rst

+.. note::

+

+ If you are using an older version of ``ipex-llm`` (specifically, older than 2.5.0b20240104), you need to manually add ``import intel_extension_for_pytorch as ipex`` at the beginning of your code.

+```

+

+First, load model using `transformers`-style API and **set it to `to('xpu')`**. We specify `load_in_low_bit="nf4"` here to apply 4-bit NormalFloat optimization. According to the [QLoRA paper](https://arxiv.org/pdf/2305.14314.pdf), using `"nf4"` could yield better model quality than `"int4"`.

+

+```python

+from ipex_llm.transformers import AutoModelForCausalLM

+

+model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-hf",

+ load_in_low_bit="nf4",

+ optimize_model=False,

+ torch_dtype=torch.float16,

+ modules_to_not_convert=["lm_head"],)

+model = model.to('xpu')

+```

+

+Then, we have to apply some preprocessing to the model to prepare it for training.

+```python

+from ipex_llm.transformers.qlora import prepare_model_for_kbit_training

+model.gradient_checkpointing_enable()

+model = prepare_model_for_kbit_training(model)

+```

+

+Next, we can obtain a Peft model from the optimized model and a configuration object containing the parameters as follows:

+```python

+from ipex_llm.transformers.qlora import get_peft_model

+from peft import LoraConfig

+config = LoraConfig(r=8,

+ lora_alpha=32,

+ target_modules=["q_proj", "k_proj", "v_proj"],

+ lora_dropout=0.05,

+ bias="none",

+ task_type="CAUSAL_LM")

+model = get_peft_model(model, config)

+```

+

+```eval_rst

+.. important::

+

+ Instead of ``from peft import prepare_model_for_kbit_training, get_peft_model`` as we did for regular QLoRA using bitandbytes and cuda, we import them from ``ipex_llm.transformers.qlora`` here to get a IPEX-LLM compatible Peft model. And the rest is just the same as regular LoRA finetuning process using ``peft``.

+```

+

+```eval_rst

+.. seealso::

+

+ See the complete examples `here

+

+

+

+#### Offline benchmark through benchmark_vllm_throughput.py

+

+Please refer to this [section](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/vLLM_quickstart.html#performing-benchmark) on how to use `benchmark_vllm_throughput.py` for benchmarking.

diff --git a/docs/mddocs/Inference/Self_Speculative_Decoding.md b/docs/mddocs/Inference/Self_Speculative_Decoding.md

new file mode 100644

index 00000000..99179194

--- /dev/null

+++ b/docs/mddocs/Inference/Self_Speculative_Decoding.md

@@ -0,0 +1,23 @@

+# Self-Speculative Decoding

+

+### Speculative Decoding in Practice

+In [speculative](https://arxiv.org/abs/2302.01318) [decoding](https://arxiv.org/abs/2211.17192), a small (draft) model quickly generates multiple draft tokens, which are then verified in parallel by the large (target) model. While speculative decoding can effectively speed up the target model, ***in practice it is difficult to maintain or even obtain a proper draft model***, especially when the target model is finetuned with customized data.

+

+### Self-Speculative Decoding

+Built on top of the concept of “[self-speculative decoding](https://arxiv.org/abs/2309.08168)”, IPEX-LLM can now accelerate the original FP16 or BF16 model ***without the need of a separate draft model or model finetuning***; instead, it automatically converts the original model to INT4, and uses the INT4 model as the draft model behind the scene. In practice, this brings ***~30% speedup*** for FP16 and BF16 LLM inference latency on Intel GPU and CPU respectively.

+

+### Using IPEX-LLM Self-Speculative Decoding

+Please refer to IPEX-LLM self-speculative decoding code snippets below, and the detailed [GPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/GPU/Speculative-Decoding) and [CPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/CPU/Speculative-Decoding) examples in the project repo.

+

+```python

+model = AutoModelForCausalLM.from_pretrained(model_path,

+ optimize_model=True,

+ torch_dtype=torch.float16, #use bfloat16 on cpu

+ load_in_low_bit="fp16", #use bf16 on cpu

+ speculative=True, #set speculative to true

+ trust_remote_code=True,

+ use_cache=True)

+output = model.generate(input_ids,

+ max_new_tokens=args.n_predict,

+ do_sample=False)

+```

diff --git a/docs/mddocs/Overview/FAQ/faq.md b/docs/mddocs/Overview/FAQ/faq.md

new file mode 100644

index 00000000..caf8bd51

--- /dev/null

+++ b/docs/mddocs/Overview/FAQ/faq.md

@@ -0,0 +1,79 @@

+# Frequently Asked Questions (FAQ)

+

+## General Info & Concepts

+

+### GGUF format usage with IPEX-LLM?

+

+IPEX-LLM supports running GGUF/AWQ/GPTQ models on both [CPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations) and [GPU](https://github.com/intel-analytics/ipex-llm/tree/main/python/llm/example/GPU/HF-Transformers-AutoModels/Advanced-Quantizations).

+Please also refer to [here](https://github.com/intel-analytics/ipex-llm?tab=readme-ov-file#latest-update-) for our latest support.

+

+## How to Resolve Errors

+

+### Fail to install `ipex-llm` through `pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/` or `pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/`

+

+You could try to install IPEX-LLM dependencies for Intel XPU from source archives:

+- For Windows system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#install-ipex-llm-from-wheel) for the steps.

+- For Linux system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#id3) for the steps.

+

+### PyTorch is not linked with support for xpu devices

+

+1. Before running on Intel GPUs, please make sure you've prepared environment follwing [installation instruction](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html).

+2. If you are using an older version of `ipex-llm` (specifically, older than 2.5.0b20240104), you need to manually add `import intel_extension_for_pytorch as ipex` at the beginning of your code.

+3. After optimizing the model with IPEX-LLM, you need to move model to GPU through `model = model.to('xpu')`.

+4. If you have mutil GPUs, you could refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/KeyFeatures/multi_gpus_selection.html) for details about GPU selection.

+5. If you do inference using the optimized model on Intel GPUs, you also need to set `to('xpu')` for input tensors.

+

+### Import `intel_extension_for_pytorch` error on Windows GPU

+

+Please refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#error-loading-intel-extension-for-pytorch) for detailed guide. We list the possible missing requirements in environment which could lead to this error.

+

+### XPU device count is zero

+

+It's recommended to reinstall driver:

+- For Windows system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#prerequisites) for the steps.

+- For Linux system, refer to [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#id1) for the steps.

+

+### Error such as `The size of tensor a (33) must match the size of tensor b (17) at non-singleton dimension 2` duing attention forward function

+

+If you are using IPEX-LLM PyTorch API, please try to set `optimize_llm=False` manually when call `optimize_model` function to work around. As for IPEX-LLM `transformers`-style API, you could try to set `optimize_model=False` manually when call `from_pretrained` function to work around.

+

+### ValueError: Unrecognized configuration class

+

+This error is not quite relevant to IPEX-LLM. It could be that you're using the incorrect AutoClass, or the transformers version is not updated, or transformers does not support using AutoClasses to load this model. You need to refer to the model card in huggingface to confirm these information. Besides, if you load the model from local path, please also make sure you download the complete model files.

+

+### `mixed dtype (CPU): expect input to have scalar type of BFloat16` during inference

+

+You could solve this error by converting the optimized model to `bf16` through `model.to(torch.bfloat16)` before inference.

+

+### Native API failed. Native API returns: -5 (PI_ERROR_OUT_OF_RESOURCES) -5 (PI_ERROR_OUT_OF_RESOURCES)

+

+This error is caused by out of GPU memory. Some possible solutions to decrease GPU memory uage:

+1. If you run several models continuously, please make sure you have released GPU memory of previous model through `del model` timely.

+2. You could try `model = model.float16()` or `model = model.bfloat16()` before moving model to GPU to use less GPU memory.

+3. You could try set `cpu_embedding=True` when call `from_pretrained` of AutoClass or `optimize_model` function.

+

+### Failed to enable AMX

+

+You could use `export BIGDL_LLM_AMX_DISABLED=1` to disable AMX manually and solve this error.

+

+### oneCCL: comm_selector.cpp:57 create_comm_impl: EXCEPTION: ze_data was not initialized

+

+You may encounter this error during finetuning on multi GPUs. Please try `sudo apt install level-zero-dev` to fix it.

+

+### Random and unreadable output of Gemma-7b-it on Arc770 ubuntu 22.04 due to driver and OneAPI missmatching.

+

+If driver and OneAPI missmatching, it will lead to some error when IPEX-LLM uses XMX(short prompts) for speeding up.

+The output of `What's AI?` may like below:

+```

+wiedzy Artificial Intelligence meliti: Artificial Intelligence undenti beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng beng

+```

+If you meet this error. Please check your driver version and OneAPI version. Commnad is `sudo apt list --installed | egrep "intel-basekit|intel-level-zero-gpu"`.

+Make sure intel-basekit>=2024.0.1-43 and intel-level-zero-gpu>=1.3.27191.42-775~22.04.

+

+### Too many open files

+

+You may encounter this error during finetuning, expecially when run 70B model. Please raise the system open file limit using `ulimit -n 1048576`.

+

+### `RuntimeError: could not create a primitive` on Windows

+

+This error may happen when multiple GPUs exists for Windows Users. To solve this error, you can open Device Manager (search "Device Manager" in your start menu). Then click the "Display adapter" option, and disable all the GPU device you do not want to use. Restart your computer and try again. IPEX-LLM should work fine this time.

\ No newline at end of file

diff --git a/docs/mddocs/Overview/KeyFeatures/cli.md b/docs/mddocs/Overview/KeyFeatures/cli.md

new file mode 100644

index 00000000..ab162594

--- /dev/null

+++ b/docs/mddocs/Overview/KeyFeatures/cli.md

@@ -0,0 +1,40 @@

+# CLI (Command Line Interface) Tool

+

+```eval_rst

+

+.. note::

+

+ Currently ``ipex-llm`` CLI supports *LLaMA* (e.g., vicuna), *GPT-NeoX* (e.g., redpajama), *BLOOM* (e.g., pheonix) and *GPT2* (e.g., starcoder) model architecture; for other models, you may use the ``transformers``-style or LangChain APIs.

+```

+

+## Convert Model

+

+You may convert the downloaded model into native INT4 format using `llm-convert`.

+

+```bash

+# convert PyTorch (fp16 or fp32) model;

+# llama/bloom/gptneox/starcoder model family is currently supported

+llm-convert "/path/to/model/" --model-format pth --model-family "bloom" --outfile "/path/to/output/"

+

+# convert GPTQ-4bit model

+# only llama model family is currently supported

+llm-convert "/path/to/model/" --model-format gptq --model-family "llama" --outfile "/path/to/output/"

+```

+

+## Run Model

+

+You may run the converted model using `llm-cli` or `llm-chat` (built on top of `main.cpp` in [`llama.cpp`](https://github.com/ggerganov/llama.cpp))

+

+```bash

+# help

+# llama/bloom/gptneox/starcoder model family is currently supported

+llm-cli -x gptneox -h

+

+# text completion

+# llama/bloom/gptneox/starcoder model family is currently supported

+llm-cli -t 16 -x gptneox -m "/path/to/output/model.bin" -p 'Once upon a time,'

+

+# chat mode

+# llama/gptneox model family is currently supported

+llm-chat -m "/path/to/output/model.bin" -x llama

+```

\ No newline at end of file

diff --git a/docs/mddocs/Overview/KeyFeatures/finetune.md b/docs/mddocs/Overview/KeyFeatures/finetune.md

new file mode 100644

index 00000000..b895b89f

--- /dev/null

+++ b/docs/mddocs/Overview/KeyFeatures/finetune.md

@@ -0,0 +1,64 @@

+# Finetune (QLoRA)

+

+We also support finetuning LLMs (large language models) using QLoRA with IPEX-LLM 4bit optimizations on Intel GPUs.

+

+```eval_rst

+.. note::

+

+ Currently, only Hugging Face Transformers models are supported running QLoRA finetuning.

+```

+

+To help you better understand the finetuning process, here we use model [Llama-2-7b-hf](https://huggingface.co/meta-llama/Llama-2-7b-hf) as an example.

+

+**Make sure you have prepared environment following instructions [here](../install_gpu.html).**

+

+```eval_rst

+.. note::

+

+ If you are using an older version of ``ipex-llm`` (specifically, older than 2.5.0b20240104), you need to manually add ``import intel_extension_for_pytorch as ipex`` at the beginning of your code.

+```

+

+First, load model using `transformers`-style API and **set it to `to('xpu')`**. We specify `load_in_low_bit="nf4"` here to apply 4-bit NormalFloat optimization. According to the [QLoRA paper](https://arxiv.org/pdf/2305.14314.pdf), using `"nf4"` could yield better model quality than `"int4"`.

+

+```python

+from ipex_llm.transformers import AutoModelForCausalLM

+

+model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-hf",

+ load_in_low_bit="nf4",

+ optimize_model=False,

+ torch_dtype=torch.float16,

+ modules_to_not_convert=["lm_head"],)

+model = model.to('xpu')

+```

+

+Then, we have to apply some preprocessing to the model to prepare it for training.

+```python

+from ipex_llm.transformers.qlora import prepare_model_for_kbit_training

+model.gradient_checkpointing_enable()

+model = prepare_model_for_kbit_training(model)

+```

+

+Next, we can obtain a Peft model from the optimized model and a configuration object containing the parameters as follows:

+```python

+from ipex_llm.transformers.qlora import get_peft_model

+from peft import LoraConfig

+config = LoraConfig(r=8,

+ lora_alpha=32,

+ target_modules=["q_proj", "k_proj", "v_proj"],

+ lora_dropout=0.05,

+ bias="none",

+ task_type="CAUSAL_LM")

+model = get_peft_model(model, config)

+```

+

+```eval_rst

+.. important::

+

+ Instead of ``from peft import prepare_model_for_kbit_training, get_peft_model`` as we did for regular QLoRA using bitandbytes and cuda, we import them from ``ipex_llm.transformers.qlora`` here to get a IPEX-LLM compatible Peft model. And the rest is just the same as regular LoRA finetuning process using ``peft``.

+```

+

+```eval_rst

+.. seealso::

+

+ See the complete examples `here

+

diff --git a/docs/mddocs/Quickstart/axolotl_quickstart.md b/docs/mddocs/Quickstart/axolotl_quickstart.md

new file mode 100644

index 00000000..4a2cbb3a

--- /dev/null

+++ b/docs/mddocs/Quickstart/axolotl_quickstart.md

@@ -0,0 +1,314 @@

+# Finetune LLM with Axolotl on Intel GPU

+

+[Axolotl](https://github.com/OpenAccess-AI-Collective/axolotl) is a popular tool designed to streamline the fine-tuning of various AI models, offering support for multiple configurations and architectures. You can now use [`ipex-llm`](https://github.com/intel-analytics/ipex-llm) as an accelerated backend for `Axolotl` running on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

+

+See the demo of finetuning LLaMA2-7B on Intel Arc GPU below.

+

+

+

+## Quickstart

+

+### 0. Prerequisites

+

+IPEX-LLM's support for [Axolotl v0.4.0](https://github.com/OpenAccess-AI-Collective/axolotl/tree/v0.4.0) is only available for Linux system. We recommend Ubuntu 20.04 or later (Ubuntu 22.04 is preferred).

+

+Visit the [Install IPEX-LLM on Linux with Intel GPU](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html), follow [Install Intel GPU Driver](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#install-intel-gpu-driver) and [Install oneAPI](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/install_linux_gpu.html#install-oneapi) to install GPU driver and Intel® oneAPI Base Toolkit 2024.0.

+

+### 1. Install IPEX-LLM for Axolotl

+

+Create a new conda env, and install `ipex-llm[xpu]`.

+

+```cmd

+conda create -n axolotl python=3.11

+conda activate axolotl

+# install ipex-llm

+pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

+```

+

+Install [axolotl v0.4.0](https://github.com/OpenAccess-AI-Collective/axolotl/tree/v0.4.0) from git.

+

+```cmd

+# install axolotl v0.4.0

+git clone https://github.com/OpenAccess-AI-Collective/axolotl/tree/v0.4.0

+cd axolotl

+# replace requirements.txt

+remove requirements.txt

+wget -O requirements.txt https://raw.githubusercontent.com/intel-analytics/ipex-llm/main/python/llm/example/GPU/LLM-Finetuning/axolotl/requirements-xpu.txt

+pip install -e .

+pip install transformers==4.36.0

+# to avoid https://github.com/OpenAccess-AI-Collective/axolotl/issues/1544

+pip install datasets==2.15.0

+# prepare axolotl entrypoints

+wget https://raw.githubusercontent.com/intel-analytics/ipex-llm/main/python/llm/example/GPU/LLM-Finetuning/axolotl/finetune.py

+wget https://raw.githubusercontent.com/intel-analytics/ipex-llm/main/python/llm/example/GPU/LLM-Finetuning/axolotl/train.py

+```