LLM: adjust portable zip content (#9054)

* LLM: adjust portable zip content
* LLM: adjust portable zip README


parent df8df751c4
commit 65373d2a8b

9 changed files with 48 additions and 42 deletions

python/llm/portable-executable/.gitignore (vendored, 2 changes)

```diff
@@ -1,2 +0,0 @@
-python-embed
-portable-executable.zip
```

|

|

@ -1,33 +0,0 @@

|

||||||

# BigDL-LLM Portable Executable For Windows: User Guide

|

|

||||||

|

|

||||||

This portable executable includes everything you need to run LLM (except models). Please refer to How to use section to get started.

|

|

||||||

|

|

||||||

## 13B model running on an Intel 11-Gen Core PC (real-time screen capture)

|

|

||||||

|

|

||||||

<p align="left">

|

|

||||||

<img src=https://llm-assets.readthedocs.io/en/latest/_images/one-click-installer-screen-capture.gif width='80%' />

|

|

||||||

|

|

||||||

</p>

|

|

||||||

|

|

||||||

## Verified Models

|

|

||||||

|

|

||||||

- ChatGLM2-6b

|

|

||||||

- Baichuan-13B-Chat

|

|

||||||

- Baichuan2-7B-Chat

|

|

||||||

- internlm-chat-7b-8k

|

|

||||||

- Llama-2-7b-chat-hf

|

|

||||||

|

|

||||||

## How to use

|

|

||||||

|

|

||||||

1. Download the model to your computer. Please ensure there is a file named `config.json` in the model folder, otherwise the script won't work.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

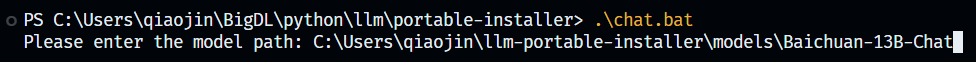

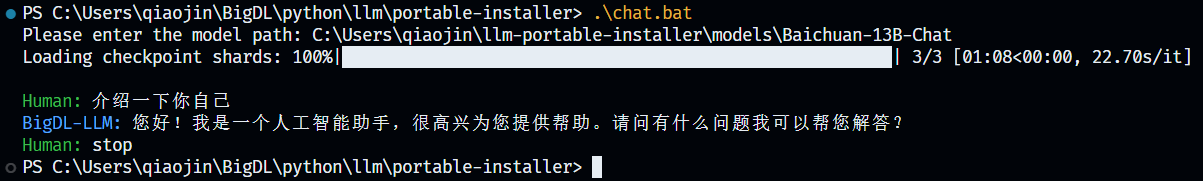

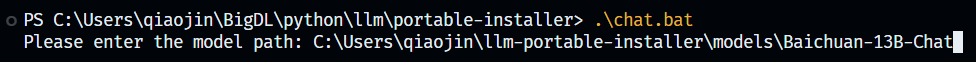

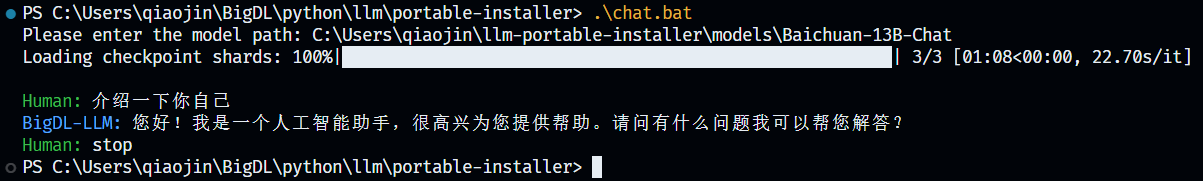

2. Run `chat.bat` in Terminal and input the path of the model (e.g. `path\to\model`, note that there's no slash at the end of the path).

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

3. Press Enter and wait until model finishes loading. Then enjoy chatting with the model!

|

|

||||||

4. If you want to stop chatting, just input `stop` and the model will stop running.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

```diff
@@ -1,5 +0,0 @@
-# BigDL-LLM Portable Executable Setup Script For Windows
-
-# How to use
-
-Just simply run `setup.bat` and it will download and install all dependency and generate a zip file for user to use.
```

python/llm/portable-zip/.gitignore (normal file, 2 changes)

```diff
@@ -0,0 +1,2 @@
+python-embed
+bigdl-llm.zip
```

python/llm/portable-zip/README.md (normal file, 37 changes)

```diff
@@ -0,0 +1,37 @@
+# BigDL-LLM Portable Zip For Windows: User Guide
+
+## Introduction
+
+This portable zip includes everything you need to run an LLM with BigDL-LLM optimizations (except models) . Please refer to [How to use](#how-to-use) section to get started.
+
+### 13B model running on an Intel 11-Gen Core PC (real-time screen capture)
+
+<p align="left">
+<img src=https://llm-assets.readthedocs.io/en/latest/_images/one-click-installer-screen-capture.gif width='80%' />
+
+</p>
+
+### Verified Models
+
+- ChatGLM2-6b
+- Baichuan-13B-Chat
+- Baichuan2-7B-Chat
+- internlm-chat-7b-8k
+- Llama-2-7b-chat-hf
+
+## How to use
+
+1. Download the zip from link [here]().
+2. (Optional) You could also build the zip on your own. Run `setup.bat` and it will generate the zip file.
+3. Unzip `bigdl-llm.zip`.
+4. Download the model to your computer. Please ensure there is a file named `config.json` in the model folder, otherwise the script won't work.
+
+
+
+5. Go into the unzipped folder and double click `chat.bat`. Input the path of the model (e.g. `path\to\model`, note that there's no slash at the end of the path). Press Enter and wait until model finishes loading. Then enjoy chatting with the model!
+
+
+
+6. If you want to stop chatting, just input `stop` and the model will stop running.
+
+
```
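Steps 4 and 5 of the new README place two requirements on the model path: the folder must contain `config.json`, and the path is entered without a trailing slash. A minimal pre-flight check enforcing both is sketched below. It is an illustration only: `normalize_model_path` is a hypothetical helper, not something this commit adds to `chat.py`, and it chooses to strip a trailing slash rather than reject it.

```python
from pathlib import Path


def normalize_model_path(raw: str) -> Path:
    """Hypothetical pre-flight check for the model folder the README describes."""
    # Remove surrounding whitespace and quotes, then any trailing / or \
    cleaned = raw.strip().strip('"').rstrip("/\\")
    model_dir = Path(cleaned)
    # The README requires a config.json inside the model folder
    if not (model_dir / "config.json").is_file():
        raise FileNotFoundError(f"no config.json found in {model_dir}")
    return model_dir
```

Failing early with a clear message is the design choice here; the README only warns that "the script won't work" otherwise, without saying how it fails.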

```diff
@@ -5,4 +5,6 @@
 set PYTHONUNBUFFERED=1
 
 set /p modelpath="Please enter the model path: "
 .\python-embed\python.exe .\chat.py --model-path="%modelpath%"
+
+pause
```
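The launcher hunk above (presumably `chat.bat`, which README step 5 tells users to double-click) prompts for a model path, runs `chat.py` with the embedded interpreter, and now ends with `pause`, which keeps the console window open after `chat.py` exits. The same flow is sketched in Python below for readers less used to batch syntax. It is an approximation, not part of the commit; `build_chat_command` and `launch` are hypothetical names.

```python
import subprocess


def build_chat_command(model_path: str,
                       python_exe: str = r".\python-embed\python.exe",
                       script: str = r".\chat.py") -> list[str]:
    """Return the argv the batch launcher runs for a given model path (hypothetical helper)."""
    # The batch file passes --model-path="%modelpath%"; the quotes only group
    # the argument, so the resulting argv element carries no quote characters
    return [python_exe, script, f"--model-path={model_path}"]


def launch() -> int:
    # Equivalent of: set /p modelpath="Please enter the model path: "
    model_path = input("Please enter the model path: ")
    # Equivalent of: .\python-embed\python.exe .\chat.py --model-path="%modelpath%"
    result = subprocess.run(build_chat_command(model_path))
    # Equivalent of the added `pause` (which accepts any key; input() waits for Enter)
    input("Press Enter to continue . . .")
    return result.returncode
```

Calling `launch()` from the unzipped folder would reproduce the interactive session; like the batch file, the relative paths assume that folder is the working directory.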

```diff
@@ -20,4 +20,4 @@ cd ..
 %python-embed% -m pip install bigdl-llm[all] transformers_stream_generator tiktoken einops colorama
 
 :: compress the python and scripts
-powershell -Command "Compress-Archive -Path '.\python-embed', '.\chat.bat', '.\chat.py', '.\README.md' -DestinationPath .\portable-executable.zip"
+powershell -Command "Compress-Archive -Path '.\python-embed', '.\chat.bat', '.\chat.py', '.\README.md' -DestinationPath .\bigdl-llm.zip"
```
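The hunk above (presumably from `setup.bat`, which the new setup notes say generates `bigdl-llm.zip`) only renames the archive that `Compress-Archive` writes. For readers who want to see what ends up in that archive, here is a rough Python equivalent using the standard `zipfile` module. It is a sketch, not the project's build step: `build_portable_zip` and `PORTABLE_ITEMS` are hypothetical names, and the item list is copied from the `-Path` argument in the hunk.

```python
import zipfile
from pathlib import Path

# Items passed to Compress-Archive -Path in the setup script hunk
PORTABLE_ITEMS = ["python-embed", "chat.bat", "chat.py", "README.md"]


def build_portable_zip(root: Path, dest_name: str = "bigdl-llm.zip") -> Path:
    """Archive PORTABLE_ITEMS under `root` into `root / dest_name` (hypothetical helper)."""
    dest = root / dest_name
    # Mode "w" creates the archive, overwriting any existing file of the same name
    with zipfile.ZipFile(dest, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name in PORTABLE_ITEMS:
            path = root / name
            if path.is_dir():
                # Keep the folder itself as the top-level entry (python-embed/...),
                # as Compress-Archive does when given a folder path
                for file in sorted(path.rglob("*")):
                    if file.is_file():
                        zf.write(file, file.relative_to(root).as_posix())
            else:
                # Loose files go to the archive root
                zf.write(path, name)
    return dest
```

Calling `build_portable_zip(Path("."))` from the setup folder would write `bigdl-llm.zip` next to its inputs, matching the renamed `-DestinationPath`.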

python/llm/portable-zip/setup.md (normal file, 5 changes)

```diff
@@ -0,0 +1,5 @@
+# BigDL-LLM Portable Zip Setup Script For Windows
+
+# How to use
+
+Just simply run `setup.bat` and it will download and install all dependency and generate `bigdl-llm.zip` for user to use.
```
