diff --git a/.github/actions/llm/setup-llm-env/action.yml b/.github/actions/llm/setup-llm-env/action.yml

index 1d530972..4b25ea0c 100644

--- a/.github/actions/llm/setup-llm-env/action.yml

+++ b/.github/actions/llm/setup-llm-env/action.yml

@@ -19,7 +19,7 @@ runs:

sed -i 's/"bigdl-core-xe==" + CORE_XE_VERSION + "/"bigdl-core-xe/g' python/llm/setup.py

sed -i 's/"bigdl-core-xe-esimd==" + CORE_XE_VERSION + "/"bigdl-core-xe-esimd/g' python/llm/setup.py

sed -i 's/"bigdl-core-xe-21==" + CORE_XE_VERSION/"bigdl-core-xe-21"/g' python/llm/setup.py

- sed -i 's/"bigdl-core-xe-esimd-21==" + CORE_XE_VERSION + "/"bigdl-core-xe-esimd-21/g' python/llm/setup.py

+ sed -i 's/"bigdl-core-xe-esimd-21==" + CORE_XE_VERSION/"bigdl-core-xe-esimd-21"/g' python/llm/setup.py

pip install requests

if [[ ${{ runner.os }} == 'Linux' ]]; then

diff --git a/.github/workflows/llm-c-evaluation.yml b/.github/workflows/llm-c-evaluation.yml

index b77e622a..9ca18276 100644

--- a/.github/workflows/llm-c-evaluation.yml

+++ b/.github/workflows/llm-c-evaluation.yml

@@ -95,7 +95,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

model_name: ${{ fromJson(needs.set-matrix.outputs.model_name) }}

precision: ${{ fromJson(needs.set-matrix.outputs.precision) }}

device: [xpu]

@@ -193,10 +193,10 @@ jobs:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Install dependencies

shell: bash

run: |

@@ -230,10 +230,10 @@ jobs:

runs-on: ["self-hosted", "llm", "accuracy1", "accuracy-nightly"]

steps:

- uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Install dependencies

shell: bash

run: |

diff --git a/.github/workflows/llm-harness-evaluation.yml b/.github/workflows/llm-harness-evaluation.yml

index 11255e03..54417019 100644

--- a/.github/workflows/llm-harness-evaluation.yml

+++ b/.github/workflows/llm-harness-evaluation.yml

@@ -105,7 +105,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

model_name: ${{ fromJson(needs.set-matrix.outputs.model_name) }}

task: ${{ fromJson(needs.set-matrix.outputs.task) }}

precision: ${{ fromJson(needs.set-matrix.outputs.precision) }}

@@ -189,7 +189,7 @@ jobs:

fi

python run_llb.py \

- --model bigdl-llm \

+ --model ipex-llm \

--pretrained ${MODEL_PATH} \

--precision ${{ matrix.precision }} \

--device ${{ matrix.device }} \

@@ -216,10 +216,10 @@ jobs:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Install dependencies

shell: bash

run: |

@@ -243,10 +243,10 @@ jobs:

runs-on: ["self-hosted", "llm", "accuracy1", "accuracy-nightly"]

steps:

- uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Install dependencies

shell: bash

run: |

diff --git a/.github/workflows/llm-nightly-test.yml b/.github/workflows/llm-nightly-test.yml

index 457c7a61..10bfb746 100644

--- a/.github/workflows/llm-nightly-test.yml

+++ b/.github/workflows/llm-nightly-test.yml

@@ -34,10 +34,10 @@ jobs:

include:

- os: windows

instruction: AVX-VNNI-UT

- python-version: "3.9"

+ python-version: "3.11"

- os: ubuntu-20.04-lts

instruction: avx512

- python-version: "3.9"

+ python-version: "3.11"

runs-on: [self-hosted, llm, "${{matrix.instruction}}", "${{matrix.os}}"]

env:

ANALYTICS_ZOO_ROOT: ${{ github.workspace }}

diff --git a/.github/workflows/llm-ppl-evaluation.yml b/.github/workflows/llm-ppl-evaluation.yml

index e2f0b92c..7ad621f9 100644

--- a/.github/workflows/llm-ppl-evaluation.yml

+++ b/.github/workflows/llm-ppl-evaluation.yml

@@ -104,7 +104,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

model_name: ${{ fromJson(needs.set-matrix.outputs.model_name) }}

precision: ${{ fromJson(needs.set-matrix.outputs.precision) }}

seq_len: ${{ fromJson(needs.set-matrix.outputs.seq_len) }}

@@ -201,10 +201,10 @@ jobs:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Install dependencies

shell: bash

run: |

@@ -227,10 +227,10 @@ jobs:

runs-on: ["self-hosted", "llm", "accuracy1", "accuracy-nightly"]

steps:

- uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Install dependencies

shell: bash

run: |

diff --git a/.github/workflows/llm-whisper-evaluation.yml b/.github/workflows/llm-whisper-evaluation.yml

index 0a918c56..e60eadbf 100644

--- a/.github/workflows/llm-whisper-evaluation.yml

+++ b/.github/workflows/llm-whisper-evaluation.yml

@@ -81,7 +81,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

model_name: ${{ fromJson(needs.set-matrix.outputs.model_name) }}

task: ${{ fromJson(needs.set-matrix.outputs.task) }}

precision: ${{ fromJson(needs.set-matrix.outputs.precision) }}

@@ -158,10 +158,10 @@ jobs:

runs-on: ["self-hosted", "llm", "perf"]

steps:

- uses: actions/checkout@f43a0e5ff2bd294095638e18286ca9a3d1956744 # actions/checkout@v3

- - name: Set up Python 3.9

+ - name: Set up Python 3.11

uses: actions/setup-python@v4

with:

- python-version: 3.9

+ python-version: 3.11

- name: Set output path

shell: bash

diff --git a/.github/workflows/llm_example_tests.yml b/.github/workflows/llm_example_tests.yml

index 8338e48a..a19606a8 100644

--- a/.github/workflows/llm_example_tests.yml

+++ b/.github/workflows/llm_example_tests.yml

@@ -39,7 +39,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

instruction: ["AVX512"]

runs-on: [ self-hosted, llm,"${{matrix.instruction}}", ubuntu-20.04-lts ]

env:

diff --git a/.github/workflows/llm_performance_tests.yml b/.github/workflows/llm_performance_tests.yml

index b5495491..48cc7dc7 100644

--- a/.github/workflows/llm_performance_tests.yml

+++ b/.github/workflows/llm_performance_tests.yml

@@ -33,7 +33,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

runs-on: [self-hosted, llm, perf]

env:

OMP_NUM_THREADS: 16

@@ -163,7 +163,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

runs-on: [self-hosted, llm, spr-perf]

env:

OMP_NUM_THREADS: 16

@@ -238,10 +238,10 @@ jobs:

include:

- os: windows

platform: dp

- python-version: "3.9"

+ python-version: "3.11"

# - os: windows

# platform: lp

- # python-version: "3.9"

+ # python-version: "3.11"

runs-on: [self-hosted, "${{ matrix.os }}", llm, perf-core, "${{ matrix.platform }}"]

env:

ANALYTICS_ZOO_ROOT: ${{ github.workspace }}

@@ -309,7 +309,7 @@ jobs:

matrix:

include:

- os: windows

- python-version: "3.9"

+ python-version: "3.11"

runs-on: [self-hosted, "${{ matrix.os }}", llm, perf-igpu]

env:

ANALYTICS_ZOO_ROOT: ${{ github.workspace }}

@@ -380,7 +380,7 @@ jobs:

- name: Create env for html generation

shell: cmd

run: |

- call conda create -n html-gen python=3.9 -y

+ call conda create -n html-gen python=3.11 -y

call conda activate html-gen

pip install pandas==1.5.3

diff --git a/.github/workflows/llm_tests_for_stable_version_on_arc.yml b/.github/workflows/llm_tests_for_stable_version_on_arc.yml

index 8522ad2d..1b8c48d9 100644

--- a/.github/workflows/llm_tests_for_stable_version_on_arc.yml

+++ b/.github/workflows/llm_tests_for_stable_version_on_arc.yml

@@ -30,7 +30,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

runs-on: [self-hosted, llm, perf]

env:

OMP_NUM_THREADS: 16

@@ -154,7 +154,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

runs-on: [self-hosted, llm, perf]

env:

OMP_NUM_THREADS: 16

diff --git a/.github/workflows/llm_tests_for_stable_version_on_spr.yml b/.github/workflows/llm_tests_for_stable_version_on_spr.yml

index cedc1624..d852499c 100644

--- a/.github/workflows/llm_tests_for_stable_version_on_spr.yml

+++ b/.github/workflows/llm_tests_for_stable_version_on_spr.yml

@@ -29,7 +29,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

runs-on: [self-hosted, llm, spr01-perf]

env:

OMP_NUM_THREADS: 16

@@ -87,7 +87,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.9"]

+ python-version: ["3.11"]

runs-on: [self-hosted, llm, spr01-perf]

env:

OMP_NUM_THREADS: 16

diff --git a/.github/workflows/llm_unit_tests.yml b/.github/workflows/llm_unit_tests.yml

index f1b762d9..cf4a7312 100644

--- a/.github/workflows/llm_unit_tests.yml

+++ b/.github/workflows/llm_unit_tests.yml

@@ -51,7 +51,7 @@ jobs:

if [ ${{ github.event_name }} == 'schedule' ]; then

python_version='["3.9", "3.10", "3.11"]'

else

- python_version='["3.9"]'

+ python_version='["3.11"]'

fi

list=$(echo ${python_version} | jq -c)

echo "python-version=${list}" >> "$GITHUB_OUTPUT"

@@ -224,6 +224,7 @@ jobs:

run: |

pip install llama-index-readers-file llama-index-vector-stores-postgres llama-index-embeddings-huggingface

pip install transformers==4.31.0

+ pip install "pydantic>=2.0.0"

bash python/llm/test/run-llm-llamaindex-tests.sh

llm-unit-test-on-arc:

needs: [setup-python-version, llm-cpp-build]

@@ -398,4 +399,5 @@ jobs:

pip install --pre --upgrade ipex-llm[xpu_2.0] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

source /home/arda/intel/oneapi/setvars.sh

fi

+ pip install "pydantic>=2.0.0"

bash python/llm/test/run-llm-llamaindex-tests-gpu.sh

\ No newline at end of file

diff --git a/README.md b/README.md

index 73d3c5ae..35ccdea6 100644

--- a/README.md

+++ b/README.md

@@ -7,7 +7,7 @@

**`IPEX-LLM`** is a PyTorch library for running **LLM** on Intel CPU and GPU *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)* with very low latency[^1].

> [!NOTE]

> - *It is built on top of **Intel Extension for PyTorch** (**`IPEX`**), as well as the excellent work of **`llama.cpp`**, **`bitsandbytes`**, **`vLLM`**, **`qlora`**, **`AutoGPTQ`**, **`AutoAWQ`**, etc.*

-> - *It provides seamless integration with [llama.cpp](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/llama_cpp_quickstart.html), [Text-Generation-WebUI](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/webui_quickstart.html), [HuggingFace transformers](python/llm/example/GPU/HF-Transformers-AutoModels), [HuggingFace PEFT](python/llm/example/GPU/LLM-Finetuning), [LangChain](python/llm/example/GPU/LangChain), [LlamaIndex](python/llm/example/GPU/LlamaIndex), [DeepSpeed-AutoTP](python/llm/example/GPU/Deepspeed-AutoTP), [vLLM](python/llm/example/GPU/vLLM-Serving), [FastChat](python/llm/src/ipex_llm/serving/fastchat), [HuggingFace TRL](python/llm/example/GPU/LLM-Finetuning/DPO), [AutoGen](python/llm/example/CPU/Applications/autogen), [ModeScope](python/llm/example/GPU/ModelScope-Models), etc.*

+> - *It provides seamless integration with [llama.cpp](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/llama_cpp_quickstart.html), [ollama](https://ipex-llm.readthedocs.io/en/main/doc/LLM/Quickstart/ollama_quickstart.html), [Text-Generation-WebUI](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/webui_quickstart.html), [HuggingFace transformers](python/llm/example/GPU/HF-Transformers-AutoModels), [HuggingFace PEFT](python/llm/example/GPU/LLM-Finetuning), [LangChain](python/llm/example/GPU/LangChain), [LlamaIndex](python/llm/example/GPU/LlamaIndex), [DeepSpeed-AutoTP](python/llm/example/GPU/Deepspeed-AutoTP), [vLLM](python/llm/example/GPU/vLLM-Serving), [FastChat](python/llm/src/ipex_llm/serving/fastchat), [HuggingFace TRL](python/llm/example/GPU/LLM-Finetuning/DPO), [AutoGen](python/llm/example/CPU/Applications/autogen), [ModeScope](python/llm/example/GPU/ModelScope-Models), etc.*

> - ***50+ models** have been optimized/verified on `ipex-llm` (including LLaMA2, Mistral, Mixtral, Gemma, LLaVA, Whisper, ChatGLM, Baichuan, Qwen, RWKV, and more); see the complete list [here](#verified-models).*

## `ipex-llm` Demo

@@ -48,9 +48,10 @@ See the demo of running [*Text-Generation-WebUI*](https://ipex-llm.readthedocs.i

## Latest Update 🔥

+- [2024/04] `ipex-llm` now provides C++ interface, which can be used as an accelerated backend for running [llama.cpp](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/llama_cpp_quickstart.html) and [ollama](https://ipex-llm.readthedocs.io/en/main/doc/LLM/Quickstart/ollama_quickstart.html) on Intel GPU.

- [2024/03] `bigdl-llm` has now become `ipex-llm` (see the migration guide [here](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/bigdl_llm_migration.html)); you may find the original `BigDL` project [here](https://github.com/intel-analytics/bigdl-2.x).

- [2024/02] `ipex-llm` now supports directly loading model from [ModelScope](python/llm/example/GPU/ModelScope-Models) ([魔搭](python/llm/example/CPU/ModelScope-Models)).

-- [2024/02] `ipex-llm` added inital **INT2** support (based on llama.cpp [IQ2](python/llm/example/GPU/HF-Transformers-AutoModels/Advanced-Quantizations/GGUF-IQ2) mechanism), which makes it possible to run large-size LLM (e.g., Mixtral-8x7B) on Intel GPU with 16GB VRAM.

+- [2024/02] `ipex-llm` added initial **INT2** support (based on llama.cpp [IQ2](python/llm/example/GPU/HF-Transformers-AutoModels/Advanced-Quantizations/GGUF-IQ2) mechanism), which makes it possible to run large-size LLM (e.g., Mixtral-8x7B) on Intel GPU with 16GB VRAM.

- [2024/02] Users can now use `ipex-llm` through [Text-Generation-WebUI](https://github.com/intel-analytics/text-generation-webui) GUI.

- [2024/02] `ipex-llm` now supports *[Self-Speculative Decoding](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Inference/Self_Speculative_Decoding.html)*, which in practice brings **~30% speedup** for FP16 and BF16 inference latency on Intel [GPU](python/llm/example/GPU/Speculative-Decoding) and [CPU](python/llm/example/CPU/Speculative-Decoding) respectively.

- [2024/02] `ipex-llm` now supports a comprehensive list of LLM **finetuning** on Intel GPU (including [LoRA](python/llm/example/GPU/LLM-Finetuning/LoRA), [QLoRA](python/llm/example/GPU/LLM-Finetuning/QLoRA), [DPO](python/llm/example/GPU/LLM-Finetuning/DPO), [QA-LoRA](python/llm/example/GPU/LLM-Finetuning/QA-LoRA) and [ReLoRA](python/llm/example/GPU/LLM-Finetuning/ReLora)).

@@ -81,7 +82,8 @@ See the demo of running [*Text-Generation-WebUI*](https://ipex-llm.readthedocs.i

- *For more details, please refer to the [installation guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install.html)*

### Run `ipex-llm`

-- [llama.cpp](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/llama_cpp_quickstart.html): running **ipex-llm for llama.cpp** (*using C++ interface of `ipex-llm` as an accelerated backend for `llama.cpp` on Intel GPU*)

+- [llama.cpp](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/llama_cpp_quickstart.html): running **llama.cpp** (*using C++ interface of `ipex-llm` as an accelerated backend for `llama.cpp`*) on Intel GPU

+- [ollama](https://ipex-llm.readthedocs.io/en/main/doc/LLM/Quickstart/ollama_quickstart.html): running **ollama** (*using C++ interface of `ipex-llm` as an accelerated backend for `ollama`*) on Intel GPU

- [vLLM](python/llm/example/GPU/vLLM-Serving): running `ipex-llm` in `vLLM` on both Intel [GPU](python/llm/example/GPU/vLLM-Serving) and [CPU](python/llm/example/CPU/vLLM-Serving)

- [FastChat](python/llm/src/ipex_llm/serving/fastchat): running `ipex-llm` in `FastChat` serving on on both Intel GPU and CPU

- [LangChain-Chatchat RAG](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/chatchat_quickstart.html): running `ipex-llm` in `LangChain-Chatchat` (*Knowledge Base QA using **RAG** pipeline*)

diff --git a/docker/llm/finetune/lora/cpu/docker/Dockerfile b/docker/llm/finetune/lora/cpu/docker/Dockerfile

index 4b6f51b9..8c564b03 100644

--- a/docker/llm/finetune/lora/cpu/docker/Dockerfile

+++ b/docker/llm/finetune/lora/cpu/docker/Dockerfile

@@ -21,7 +21,7 @@ RUN echo "deb [signed-by=/usr/share/keyrings/intel-oneapi-archive-keyring.gpg] h

RUN mkdir /ipex_llm/data && mkdir /ipex_llm/model && \

# install pytorch 2.0.1

apt-get update && \

- apt-get install -y python3-pip python3.9-dev python3-wheel git software-properties-common && \

+ apt-get install -y python3-pip python3.11-dev python3-wheel git software-properties-common && \

pip3 install --upgrade pip && \

export PIP_DEFAULT_TIMEOUT=100 && \

pip install --upgrade torch==2.1.0 --index-url https://download.pytorch.org/whl/cpu && \

@@ -37,9 +37,9 @@ RUN mkdir /ipex_llm/data && mkdir /ipex_llm/model && \

pip install -r /ipex_llm/requirements.txt && \

# install python

add-apt-repository ppa:deadsnakes/ppa -y && \

- apt-get install -y python3.9 && \

+ apt-get install -y python3.11 && \

rm /usr/bin/python3 && \

- ln -s /usr/bin/python3.9 /usr/bin/python3 && \

+ ln -s /usr/bin/python3.11 /usr/bin/python3 && \

ln -s /usr/bin/python3 /usr/bin/python && \

pip install --no-cache requests argparse cryptography==3.3.2 urllib3 && \

pip install --upgrade requests && \

diff --git a/docker/llm/finetune/qlora/cpu/docker/Dockerfile b/docker/llm/finetune/qlora/cpu/docker/Dockerfile

index 2aaaa08e..4f68d486 100644

--- a/docker/llm/finetune/qlora/cpu/docker/Dockerfile

+++ b/docker/llm/finetune/qlora/cpu/docker/Dockerfile

@@ -21,7 +21,7 @@ RUN echo "deb [signed-by=/usr/share/keyrings/intel-oneapi-archive-keyring.gpg] h

RUN mkdir -p /ipex_llm/data && mkdir -p /ipex_llm/model && \

# install pytorch 2.1.0

apt-get update && \

- apt-get install -y --no-install-recommends python3-pip python3.9-dev python3-wheel python3.9-distutils git software-properties-common && \

+ apt-get install -y --no-install-recommends python3-pip python3.11-dev python3-wheel python3.11-distutils git software-properties-common && \

apt-get clean && \

rm -rf /var/lib/apt/lists/* && \

pip3 install --upgrade pip && \

diff --git a/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s b/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s

index 71a8a5e1..f14e0b08 100644

--- a/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s

+++ b/docker/llm/finetune/qlora/cpu/docker/Dockerfile.k8s

@@ -22,7 +22,7 @@ RUN echo "deb [signed-by=/usr/share/keyrings/intel-oneapi-archive-keyring.gpg] h

RUN mkdir -p /ipex_llm/data && mkdir -p /ipex_llm/model && \

apt-get update && \

apt install -y --no-install-recommends openssh-server openssh-client libcap2-bin gnupg2 ca-certificates \

- python3-pip python3.9-dev python3-wheel python3.9-distutils git software-properties-common && \

+ python3-pip python3.11-dev python3-wheel python3.11-distutils git software-properties-common && \

apt-get clean && \

rm -rf /var/lib/apt/lists/* && \

mkdir -p /var/run/sshd && \

diff --git a/docker/llm/finetune/qlora/xpu/docker/Dockerfile b/docker/llm/finetune/qlora/xpu/docker/Dockerfile

index ea5fe693..478ed5bc 100644

--- a/docker/llm/finetune/qlora/xpu/docker/Dockerfile

+++ b/docker/llm/finetune/qlora/xpu/docker/Dockerfile

@@ -18,15 +18,15 @@ RUN curl -fsSL https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-P

apt-get install -y curl wget git gnupg gpg-agent software-properties-common libunwind8-dev vim less && \

# install Intel GPU driver

apt-get install -y intel-opencl-icd intel-level-zero-gpu=1.3.26241.33-647~22.04 level-zero level-zero-dev --allow-downgrades && \

- # install python 3.9

+ # install python 3.11

ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone && \

env DEBIAN_FRONTEND=noninteractive apt-get update && \

add-apt-repository ppa:deadsnakes/ppa -y && \

- apt-get install -y python3.9 && \

+ apt-get install -y python3.11 && \

rm /usr/bin/python3 && \

- ln -s /usr/bin/python3.9 /usr/bin/python3 && \

+ ln -s /usr/bin/python3.11 /usr/bin/python3 && \

ln -s /usr/bin/python3 /usr/bin/python && \

- apt-get install -y python3-pip python3.9-dev python3-wheel python3.9-distutils && \

+ apt-get install -y python3-pip python3.11-dev python3-wheel python3.11-distutils && \

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && \

# install XPU ipex-llm

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/ && \

diff --git a/docker/llm/inference/cpu/docker/Dockerfile b/docker/llm/inference/cpu/docker/Dockerfile

index f8a302f7..319ffcdf 100644

--- a/docker/llm/inference/cpu/docker/Dockerfile

+++ b/docker/llm/inference/cpu/docker/Dockerfile

@@ -9,22 +9,30 @@ ENV PYTHONUNBUFFERED=1

COPY ./start-notebook.sh /llm/start-notebook.sh

-# Install PYTHON 3.9

+# Update the software sources

RUN env DEBIAN_FRONTEND=noninteractive apt-get update && \

+# Install essential packages

apt install software-properties-common libunwind8-dev vim less -y && \

+# Install git, curl, and wget

+ apt-get install -y git curl wget && \

+# Install Python 3.11

+ # Add Python 3.11 PPA repository

add-apt-repository ppa:deadsnakes/ppa -y && \

- apt-get install -y python3.9 git curl wget && \

+ # Install Python 3.11

+ apt-get install -y python3.11 && \

+ # Remove the original /usr/bin/python3 symbolic link

rm /usr/bin/python3 && \

- ln -s /usr/bin/python3.9 /usr/bin/python3 && \

+ # Create a symbolic link pointing to Python 3.11 at /usr/bin/python3

+ ln -s /usr/bin/python3.11 /usr/bin/python3 && \

+ # Create a symbolic link pointing to /usr/bin/python3 at /usr/bin/python

ln -s /usr/bin/python3 /usr/bin/python && \

- apt-get install -y python3-pip python3.9-dev python3-wheel python3.9-distutils && \

+ # Install Python 3.11 development and utility packages

+ apt-get install -y python3-pip python3.11-dev python3-wheel python3.11-distutils && \

+# Download and install pip, install FastChat from source requires PEP 660 support

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && \

-# Install FastChat from source requires PEP 660 support

python3 get-pip.py && \

rm get-pip.py && \

pip install --upgrade requests argparse urllib3 && \

- pip3 install --no-cache-dir --upgrade torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu && \

- pip install --pre --upgrade ipex-llm[all] && \

# Download ipex-llm-tutorial

cd /llm && \

pip install --upgrade jupyterlab && \

diff --git a/docker/llm/inference/xpu/docker/Dockerfile b/docker/llm/inference/xpu/docker/Dockerfile

index 74dec616..b3269627 100644

--- a/docker/llm/inference/xpu/docker/Dockerfile

+++ b/docker/llm/inference/xpu/docker/Dockerfile

@@ -20,16 +20,16 @@ RUN curl -fsSL https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-P

wget -qO - https://repositories.intel.com/graphics/intel-graphics.key | gpg --dearmor --output /usr/share/keyrings/intel-graphics.gpg && \

echo 'deb [arch=amd64,i386 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/graphics/ubuntu jammy arc' | tee /etc/apt/sources.list.d/intel.gpu.jammy.list && \

rm /etc/apt/sources.list.d/intel-graphics.list && \

- # Install PYTHON 3.9 and IPEX-LLM[xpu]

+ # Install PYTHON 3.11 and IPEX-LLM[xpu]

ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone && \

env DEBIAN_FRONTEND=noninteractive apt-get update && \

apt install software-properties-common libunwind8-dev vim less -y && \

add-apt-repository ppa:deadsnakes/ppa -y && \

- apt-get install -y python3.9 git curl wget && \

+ apt-get install -y python3.11 git curl wget && \

rm /usr/bin/python3 && \

- ln -s /usr/bin/python3.9 /usr/bin/python3 && \

+ ln -s /usr/bin/python3.11 /usr/bin/python3 && \

ln -s /usr/bin/python3 /usr/bin/python && \

- apt-get install -y python3-pip python3.9-dev python3-wheel python3.9-distutils && \

+ apt-get install -y python3-pip python3.11-dev python3-wheel python3.11-distutils && \

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py && \

# Install FastChat from source requires PEP 660 support

python3 get-pip.py && \

diff --git a/docker/llm/serving/cpu/docker/Dockerfile b/docker/llm/serving/cpu/docker/Dockerfile

index 06ddb7f5..e3ddb7ca 100644

--- a/docker/llm/serving/cpu/docker/Dockerfile

+++ b/docker/llm/serving/cpu/docker/Dockerfile

@@ -16,7 +16,7 @@ RUN cd /llm && \

# Fix Trivy CVE Issues

pip install Jinja2==3.1.3 transformers==4.36.2 gradio==4.19.2 cryptography==42.0.4 && \

# Fix Qwen model adpater in fastchat

- patch /usr/local/lib/python3.9/dist-packages/fastchat/model/model_adapter.py < /llm/model_adapter.py.patch && \

+ patch /usr/local/lib/python3.11/dist-packages/fastchat/model/model_adapter.py < /llm/model_adapter.py.patch && \

chmod +x /opt/entrypoint.sh && \

chmod +x /sbin/tini && \

cp /sbin/tini /usr/bin/tini

diff --git a/docs/readthedocs/source/_templates/sidebar_quicklinks.html b/docs/readthedocs/source/_templates/sidebar_quicklinks.html

index 3ce6d153..ef1ea3eb 100644

--- a/docs/readthedocs/source/_templates/sidebar_quicklinks.html

+++ b/docs/readthedocs/source/_templates/sidebar_quicklinks.html

@@ -29,13 +29,16 @@

Install IPEX-LLM in Docker on Windows with Intel GPU

- Run Langchain-Chatchat (RAG Application) on Intel GPU

+ Run Local RAG using Langchain-Chatchat on Intel GPU

Run Text Generation WebUI on Intel GPU

- Run Code Copilot (Continue) in VSCode with Intel GPU

+ Run Coding Copilot (Continue) in VSCode with Intel GPU

+

+

+ Run Open WebUI with IPEX-LLM on Intel GPU

Run Performance Benchmarking with IPEX-LLM

diff --git a/docs/readthedocs/source/_toc.yml b/docs/readthedocs/source/_toc.yml

index cf522a82..88a01688 100644

--- a/docs/readthedocs/source/_toc.yml

+++ b/docs/readthedocs/source/_toc.yml

@@ -25,6 +25,7 @@ subtrees:

- file: doc/LLM/Quickstart/docker_windows_gpu

- file: doc/LLM/Quickstart/chatchat_quickstart

- file: doc/LLM/Quickstart/webui_quickstart

+ - file: doc/LLM/Quickstart/open_webui_with_ollama_quickstart

- file: doc/LLM/Quickstart/continue_quickstart

- file: doc/LLM/Quickstart/benchmark_quickstart

- file: doc/LLM/Quickstart/llama_cpp_quickstart

diff --git a/docs/readthedocs/source/doc/LLM/Overview/install_cpu.md b/docs/readthedocs/source/doc/LLM/Overview/install_cpu.md

index bb2b952c..53342b77 100644

--- a/docs/readthedocs/source/doc/LLM/Overview/install_cpu.md

+++ b/docs/readthedocs/source/doc/LLM/Overview/install_cpu.md

@@ -17,7 +17,7 @@ Please refer to [Environment Setup](#environment-setup) for more information.

.. important::

- ``ipex-llm`` is tested with Python 3.9, 3.10 and 3.11; Python 3.9 is recommended for best practices.

+ ``ipex-llm`` is tested with Python 3.9, 3.10 and 3.11; Python 3.11 is recommended for best practices.

```

## Recommended Requirements

@@ -39,10 +39,10 @@ Here list the recommended hardware and OS for smooth IPEX-LLM optimization exper

For optimal performance with LLM models using IPEX-LLM optimizations on Intel CPUs, here are some best practices for setting up environment:

-First we recommend using [Conda](https://docs.conda.io/en/latest/miniconda.html) to create a python 3.9 enviroment:

+First we recommend using [Conda](https://docs.conda.io/en/latest/miniconda.html) to create a python 3.11 enviroment:

```bash

-conda create -n llm python=3.9

+conda create -n llm python=3.11

conda activate llm

pip install --pre --upgrade ipex-llm[all] # install the latest ipex-llm nightly build with 'all' option

diff --git a/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md b/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

index 22f49e1f..b58e6f1e 100644

--- a/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

@@ -22,10 +22,10 @@ To apply Intel GPU acceleration, there're several prerequisite steps for tools i

* Step 4: Install Intel® oneAPI Base Toolkit 2024.0:

- First, Create a Python 3.9 enviroment and activate it. In Anaconda Prompt:

+ First, Create a Python 3.11 enviroment and activate it. In Anaconda Prompt:

```cmd

- conda create -n llm python=3.9 libuv

+ conda create -n llm python=3.11 libuv

conda activate llm

```

@@ -33,7 +33,7 @@ To apply Intel GPU acceleration, there're several prerequisite steps for tools i

```eval_rst

.. important::

- ``ipex-llm`` is tested with Python 3.9, 3.10 and 3.11. Python 3.9 is recommended for best practices.

+ ``ipex-llm`` is tested with Python 3.9, 3.10 and 3.11. Python 3.11 is recommended for best practices.

```

Then, use `pip` to install the Intel oneAPI Base Toolkit 2024.0:

@@ -93,17 +93,17 @@ If you encounter network issues when installing IPEX, you can also install IPEX-

Download the wheels on Windows system:

```

-wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torch-2.1.0a0%2Bcxx11.abi-cp39-cp39-win_amd64.whl

-wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torchvision-0.16.0a0%2Bcxx11.abi-cp39-cp39-win_amd64.whl

-wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/intel_extension_for_pytorch-2.1.10%2Bxpu-cp39-cp39-win_amd64.whl

+wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torch-2.1.0a0%2Bcxx11.abi-cp311-cp311-win_amd64.whl

+wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torchvision-0.16.0a0%2Bcxx11.abi-cp311-cp311-win_amd64.whl

+wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/intel_extension_for_pytorch-2.1.10%2Bxpu-cp311-cp311-win_amd64.whl

```

You may install dependencies directly from the wheel archives and then install `ipex-llm` using following commands:

```

-pip install torch-2.1.0a0+cxx11.abi-cp39-cp39-win_amd64.whl

-pip install torchvision-0.16.0a0+cxx11.abi-cp39-cp39-win_amd64.whl

-pip install intel_extension_for_pytorch-2.1.10+xpu-cp39-cp39-win_amd64.whl

+pip install torch-2.1.0a0+cxx11.abi-cp311-cp311-win_amd64.whl

+pip install torchvision-0.16.0a0+cxx11.abi-cp311-cp311-win_amd64.whl

+pip install intel_extension_for_pytorch-2.1.10+xpu-cp311-cp311-win_amd64.whl

pip install --pre --upgrade ipex-llm[xpu]

```

@@ -111,7 +111,7 @@ pip install --pre --upgrade ipex-llm[xpu]

```eval_rst

.. note::

- All the wheel packages mentioned here are for Python 3.9. If you would like to use Python 3.10 or 3.11, you should modify the wheel names for ``torch``, ``torchvision``, and ``intel_extension_for_pytorch`` by replacing ``cp39`` with ``cp310`` or ``cp311``, respectively.

+ All the wheel packages mentioned here are for Python 3.11. If you would like to use Python 3.9 or 3.10, you should modify the wheel names for ``torch``, ``torchvision``, and ``intel_extension_for_pytorch`` by replacing ``cp11`` with ``cp39`` or ``cp310``, respectively.

```

### Runtime Configuration

@@ -164,7 +164,7 @@ If you met error when importing `intel_extension_for_pytorch`, please ensure tha

* Ensure that `libuv` is installed in your conda environment. This can be done during the creation of the environment with the command:

```cmd

- conda create -n llm python=3.9 libuv

+ conda create -n llm python=3.11 libuv

```

If you missed `libuv`, you can add it to your existing environment through

```cmd

@@ -399,12 +399,12 @@ IPEX-LLM GPU support on Linux has been verified on:

### Install IPEX-LLM

#### Install IPEX-LLM From PyPI

-We recommend using [miniconda](https://docs.conda.io/en/latest/miniconda.html) to create a python 3.9 enviroment:

+We recommend using [miniconda](https://docs.conda.io/en/latest/miniconda.html) to create a python 3.11 enviroment:

```eval_rst

.. important::

- ``ipex-llm`` is tested with Python 3.9, 3.10 and 3.11. Python 3.9 is recommended for best practices.

+ ``ipex-llm`` is tested with Python 3.9, 3.10 and 3.11. Python 3.11 is recommended for best practices.

```

```eval_rst

@@ -422,7 +422,7 @@ We recommend using [miniconda](https://docs.conda.io/en/latest/miniconda.html) t

.. code-block:: bash

- conda create -n llm python=3.9

+ conda create -n llm python=3.11

conda activate llm

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

@@ -439,7 +439,7 @@ We recommend using [miniconda](https://docs.conda.io/en/latest/miniconda.html) t

.. code-block:: bash

- conda create -n llm python=3.9

+ conda create -n llm python=3.11

conda activate llm

pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

@@ -461,7 +461,7 @@ We recommend using [miniconda](https://docs.conda.io/en/latest/miniconda.html) t

.. code-block:: bash

- conda create -n llm python=3.9

+ conda create -n llm python=3.11

conda activate llm

pip install --pre --upgrade ipex-llm[xpu_2.0] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

@@ -470,7 +470,7 @@ We recommend using [miniconda](https://docs.conda.io/en/latest/miniconda.html) t

.. code-block:: bash

- conda create -n llm python=3.9

+ conda create -n llm python=3.11

conda activate llm

pip install --pre --upgrade ipex-llm[xpu_2.0] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

@@ -488,18 +488,18 @@ If you encounter network issues when installing IPEX, you can also install IPEX-

.. code-block:: bash

# get the wheels on Linux system for IPEX 2.1.10+xpu

- wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torch-2.1.0a0%2Bcxx11.abi-cp39-cp39-linux_x86_64.whl

- wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torchvision-0.16.0a0%2Bcxx11.abi-cp39-cp39-linux_x86_64.whl

- wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/intel_extension_for_pytorch-2.1.10%2Bxpu-cp39-cp39-linux_x86_64.whl

+ wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torch-2.1.0a0%2Bcxx11.abi-cp311-cp311-linux_x86_64.whl

+ wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torchvision-0.16.0a0%2Bcxx11.abi-cp311-cp311-linux_x86_64.whl

+ wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/intel_extension_for_pytorch-2.1.10%2Bxpu-cp311-cp311-linux_x86_64.whl

Then you may install directly from the wheel archives using following commands:

.. code-block:: bash

# install the packages from the wheels

- pip install torch-2.1.0a0+cxx11.abi-cp39-cp39-linux_x86_64.whl

- pip install torchvision-0.16.0a0+cxx11.abi-cp39-cp39-linux_x86_64.whl

- pip install intel_extension_for_pytorch-2.1.10+xpu-cp39-cp39-linux_x86_64.whl

+ pip install torch-2.1.0a0+cxx11.abi-cp311-cp311-linux_x86_64.whl

+ pip install torchvision-0.16.0a0+cxx11.abi-cp311-cp311-linux_x86_64.whl

+ pip install intel_extension_for_pytorch-2.1.10+xpu-cp311-cp311-linux_x86_64.whl

# install ipex-llm for Intel GPU

pip install --pre --upgrade ipex-llm[xpu]

@@ -509,18 +509,18 @@ If you encounter network issues when installing IPEX, you can also install IPEX-

.. code-block:: bash

# get the wheels on Linux system for IPEX 2.0.110+xpu

- wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torch-2.0.1a0%2Bcxx11.abi-cp39-cp39-linux_x86_64.whl

- wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torchvision-0.15.2a0%2Bcxx11.abi-cp39-cp39-linux_x86_64.whl

- wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/intel_extension_for_pytorch-2.0.110%2Bxpu-cp39-cp39-linux_x86_64.whl

+ wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torch-2.0.1a0%2Bcxx11.abi-cp311-cp311-linux_x86_64.whl

+ wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/torchvision-0.15.2a0%2Bcxx11.abi-cp311-cp311-linux_x86_64.whl

+ wget https://intel-extension-for-pytorch.s3.amazonaws.com/ipex_stable/xpu/intel_extension_for_pytorch-2.0.110%2Bxpu-cp311-cp311-linux_x86_64.whl

Then you may install directly from the wheel archives using following commands:

.. code-block:: bash

# install the packages from the wheels

- pip install torch-2.0.1a0+cxx11.abi-cp39-cp39-linux_x86_64.whl

- pip install torchvision-0.15.2a0+cxx11.abi-cp39-cp39-linux_x86_64.whl

- pip install intel_extension_for_pytorch-2.0.110+xpu-cp39-cp39-linux_x86_64.whl

+ pip install torch-2.0.1a0+cxx11.abi-cp311-cp311-linux_x86_64.whl

+ pip install torchvision-0.15.2a0+cxx11.abi-cp311-cp311-linux_x86_64.whl

+ pip install intel_extension_for_pytorch-2.0.110+xpu-cp311-cp311-linux_x86_64.whl

# install ipex-llm for Intel GPU

pip install --pre --upgrade ipex-llm[xpu_2.0]

@@ -530,7 +530,7 @@ If you encounter network issues when installing IPEX, you can also install IPEX-

```eval_rst

.. note::

- All the wheel packages mentioned here are for Python 3.9. If you would like to use Python 3.10 or 3.11, you should modify the wheel names for ``torch``, ``torchvision``, and ``intel_extension_for_pytorch`` by replacing ``cp39`` with ``cp310`` or ``cp311``, respectively.

+ All the wheel packages mentioned here are for Python 3.11. If you would like to use Python 3.9 or 3.10, you should modify the wheel names for ``torch``, ``torchvision``, and ``intel_extension_for_pytorch`` by replacing ``cp11`` with ``cp39`` or ``cp310``, respectively.

```

### Runtime Configuration

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

index 9939b860..ad5ca185 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/chatchat_quickstart.md

@@ -6,8 +6,8 @@

- | English |

- 简体中文 |

+ English |

+ 简体中文 |

|

@@ -33,7 +33,7 @@ See the Langchain-Chatchat architecture below ([source](https://github.com/chatc

Follow the guide that corresponds to your specific system and GPU type from the links provided below:

- For systems with Intel Core Ultra integrated GPU: [Windows Guide](https://github.com/intel-analytics/Langchain-Chatchat/blob/ipex-llm/INSTALL_win_mtl.md#)

-- For systems with Intel Arc A-Series GPU: [Windows Guide](https://github.com/intel-analytics/Langchain-Chatchat/blob/ipex-llm/INSTALL_windows_arc.md#) | [Linux Guide](https://github.com/intel-analytics/Langchain-Chatchat/blob/ipex-llm/INSTALL_linux_arc.md#)

+- For systems with Intel Arc A-Series GPU: [Windows Guide](https://github.com/intel-analytics/Langchain-Chatchat/blob/ipex-llm/INSTALL_win_arc.md#) | [Linux Guide](https://github.com/intel-analytics/Langchain-Chatchat/blob/ipex-llm/INSTALL_linux_arc.md#)

- For systems with Intel Data Center Max Series GPU: [Linux Guide](https://github.com/intel-analytics/Langchain-Chatchat/blob/ipex-llm/INSTALL_linux_max.md#)

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/continue_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/continue_quickstart.md

index 0f75491c..d5176180 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/continue_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/continue_quickstart.md

@@ -1,8 +1,9 @@

-# Run Code Copilot on Windows with Intel GPU

+# Run Coding Copilot on Windows with Intel GPU

-[**Continue**](https://marketplace.visualstudio.com/items?itemName=Continue.continue) is a coding copilot extension in [Microsoft Visual Studio Code](https://code.visualstudio.com/); by porting it to [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily leverage local llms running on Intel GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max) for code explanation, code generation/completion; see the demos of using Continue with [Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) running on Intel A770 GPU below.

+[**Continue**](https://marketplace.visualstudio.com/items?itemName=Continue.continue) is a coding copilot extension in [Microsoft Visual Studio Code](https://code.visualstudio.com/); by porting it to [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily leverage local LLMs running on Intel GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max) for code explanation, code generation/completion, etc.

+See the demos of using Continue with [Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) running on Intel A770 GPU below.

@@ -27,7 +28,7 @@ This guide walks you through setting up and running **Continue** within _Visual

Visit [Run Text Generation WebUI Quickstart Guide](webui_quickstart.html), and follow the steps 1) [Install IPEX-LLM](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/webui_quickstart.html#install-ipex-llm), 2) [Install WebUI](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/webui_quickstart.html#install-the-webui) and 3) [Start the Server](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/webui_quickstart.html#start-the-webui-server) to install and start the Text Generation WebUI API Service. **Please pay attention to below items during installation:**

-- The Text Generation WebUI API service requires Python version 3.10 or higher. But [IPEX-LLM installation instructions](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Quickstart/webui_quickstart.html#install-ipex-llm) used ``python=3.9`` as default for creating the conda environment. We recommend changing it to ``3.11``, using below command:

+- The Text Generation WebUI API service requires Python version 3.10 or higher. We recommend use Python 3.11 as below:

```bash

conda create -n llm python=3.11 libuv

```

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/index.rst b/docs/readthedocs/source/doc/LLM/Quickstart/index.rst

index 1ed1aa2b..ea9df495 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/index.rst

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/index.rst

@@ -13,9 +13,10 @@ This section includes efficient guide to show you how to:

* `Install IPEX-LLM on Windows with Intel GPU <./install_windows_gpu.html>`_

* `Install IPEX-LLM in Docker on Windows with Intel GPU <./docker_windows_gpu.html>`_

* `Run Performance Benchmarking with IPEX-LLM <./benchmark_quickstart.html>`_

-* `Run Langchain-Chatchat (RAG Application) on Intel GPU <./chatchat_quickstart.html>`_

+* `Run Local RAG using Langchain-Chatchat on Intel GPU <./chatchat_quickstart.html>`_

* `Run Text Generation WebUI on Intel GPU <./webui_quickstart.html>`_

-* `Run Code Copilot (Continue) in VSCode with Intel GPU <./continue_quickstart.html>`_

+* `Run Open WebUI on Intel GPU <./open_webui_with_ollama_quickstart.html>`_

+* `Run Coding Copilot (Continue) in VSCode with Intel GPU <./continue_quickstart.html>`_

* `Run llama.cpp with IPEX-LLM on Intel GPU <./llama_cpp_quickstart.html>`_

* `Run Ollama with IPEX-LLM on Intel GPU <./ollama_quickstart.html>`_

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

index 157e03f4..efcf95b1 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

@@ -144,7 +144,7 @@ You can use `conda --version` to verify you conda installation.

After installation, create a new python environment `llm`:

```cmd

-conda create -n llm python=3.9

+conda create -n llm python=3.11

```

Activate the newly created environment `llm`:

```cmd

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index 14439e70..6a0c2e78 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -57,7 +57,7 @@ Visit [Miniconda installation page](https://docs.anaconda.com/free/miniconda/),

Open the **Anaconda Prompt**. Then create a new python environment `llm` and activate it:

```cmd

-conda create -n llm python=3.9 libuv

+conda create -n llm python=3.11 libuv

conda activate llm

```

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md

index 87a0c40c..4736b6dc 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/llama_cpp_quickstart.md

@@ -1,6 +1,6 @@

# Run llama.cpp with IPEX-LLM on Intel GPU

-[ggerganov/llama.cpp](https://github.com/ggerganov/llama.cpp) prvoides fast LLM inference in in pure C++ across a variety of hardware; you can now use the C++ interface of `ipex-llm` as an accelerated backend for `llama.cpp` running on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

+[ggerganov/llama.cpp](https://github.com/ggerganov/llama.cpp) prvoides fast LLM inference in in pure C++ across a variety of hardware; you can now use the C++ interface of [`ipex-llm`](https://github.com/intel-analytics/ipex-llm) as an accelerated backend for `llama.cpp` running on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

See the demo of running LLaMA2-7B on Intel Arc GPU below.

@@ -26,7 +26,7 @@ Visit the [Install IPEX-LLM on Windows with Intel GPU Guide](https://ipex-llm.re

To use `llama.cpp` with IPEX-LLM, first ensure that `ipex-llm[cpp]` is installed.

```cmd

-conda create -n llm-cpp python=3.9

+conda create -n llm-cpp python=3.11

conda activate llm-cpp

pip install --pre --upgrade ipex-llm[cpp]

```

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md

index 04a9fd9b..998dd2d8 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/ollama_quickstart.md

@@ -1,10 +1,16 @@

# Run Ollama on Linux with Intel GPU

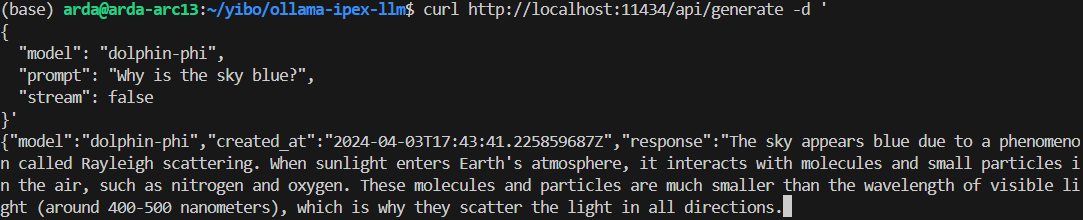

-The [ollama/ollama](https://github.com/ollama/ollama) is popular framework designed to build and run language models on a local machine. Now you can run Ollama with [`ipex-llm`](https://github.com/intel-analytics/ipex-llm) on Intel GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max); see the demo of running LLaMA2-7B on an Intel A770 GPU below.

+[ollama/ollama](https://github.com/ollama/ollama) is popular framework designed to build and run language models on a local machine; you can now use the C++ interface of [`ipex-llm`](https://github.com/intel-analytics/ipex-llm) as an accelerated backend for `ollama` running on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

+

+```eval_rst

+.. note::

+ Only Linux is currently supported.

+```

+

+See the demo of running LLaMA2-7B on Intel Arc GPU below.

-

## Quickstart

### 1 Install IPEX-LLM with Ollama Binaries

@@ -45,16 +51,16 @@ source /opt/intel/oneapi/setvars.sh

The console will display messages similar to the following:

-

+

### 4 Pull Model

-Keep the Ollama service on and open a new terminal and pull a model, e.g. `dolphin-phi:latest`:

+Keep the Ollama service on and open another terminal and run `./ollama pull ` to automatically pull a model. e.g. `dolphin-phi:latest`:

-

+

### 4 Pull Model

-Keep the Ollama service on and open a new terminal and pull a model, e.g. `dolphin-phi:latest`:

+Keep the Ollama service on and open another terminal and run `./ollama pull ` to automatically pull a model. e.g. `dolphin-phi:latest`:

-

+

@@ -77,7 +83,7 @@ curl http://localhost:11434/api/generate -d '

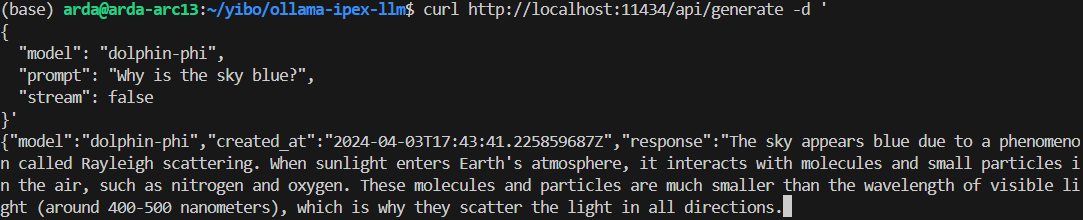

An example output of using model `doplphin-phi` looks like the following:

-

+

@@ -77,7 +83,7 @@ curl http://localhost:11434/api/generate -d '

An example output of using model `doplphin-phi` looks like the following:

-

+

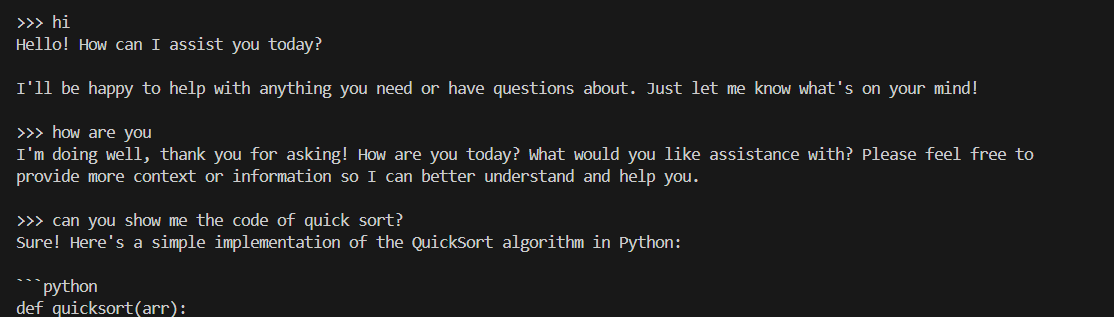

@@ -99,6 +105,6 @@ source /opt/intel/oneapi/setvars.sh

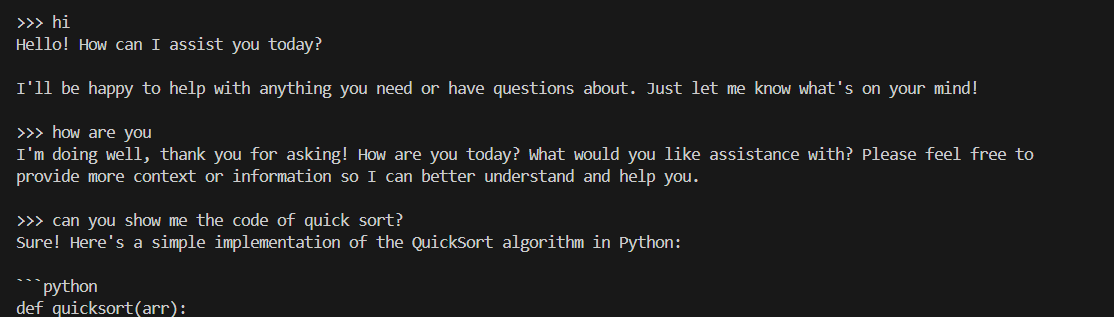

An example process of interacting with model with `ollama run` looks like the following:

-

+

@@ -99,6 +105,6 @@ source /opt/intel/oneapi/setvars.sh

An example process of interacting with model with `ollama run` looks like the following:

-

+

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

new file mode 100644

index 00000000..d6de90df

--- /dev/null

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

@@ -0,0 +1,151 @@

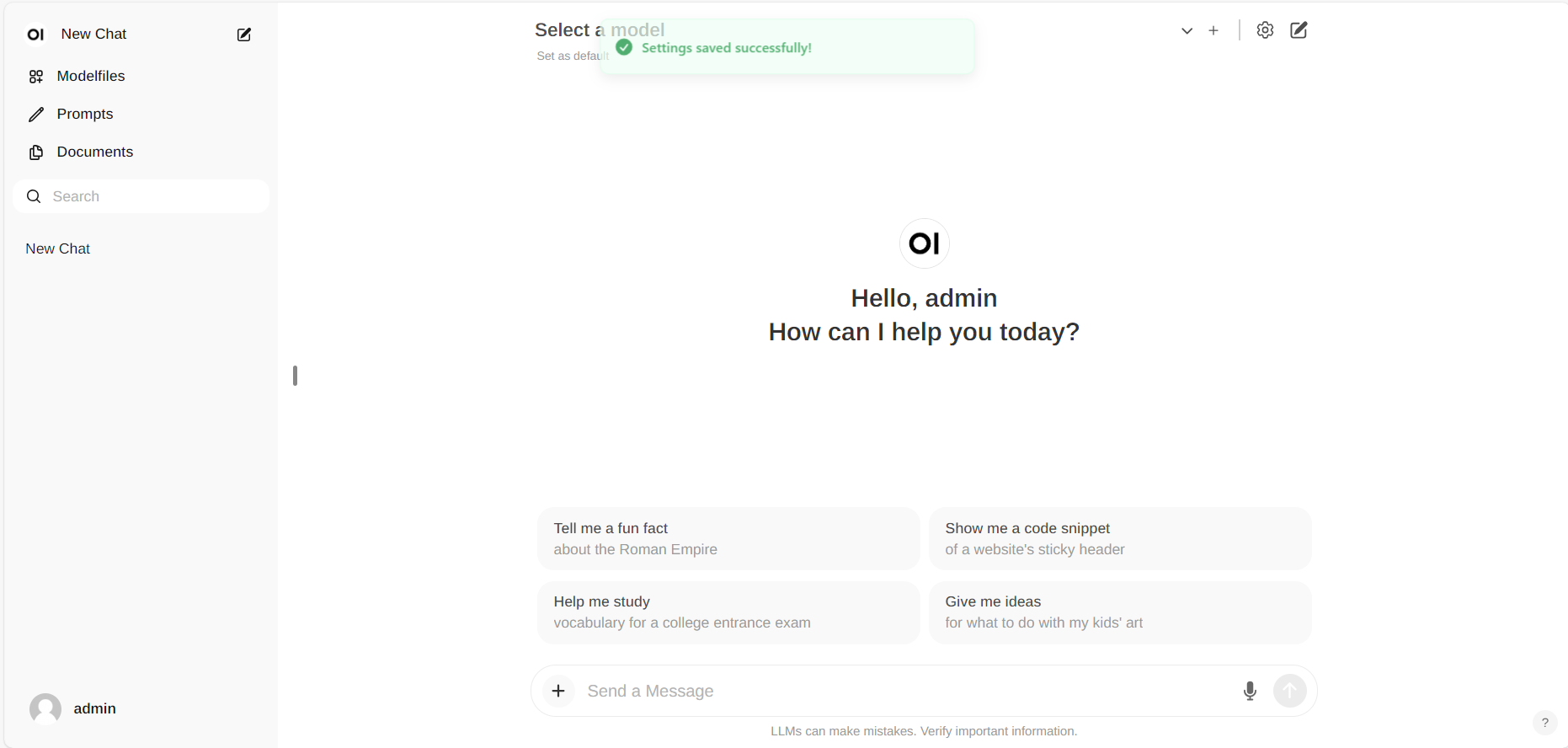

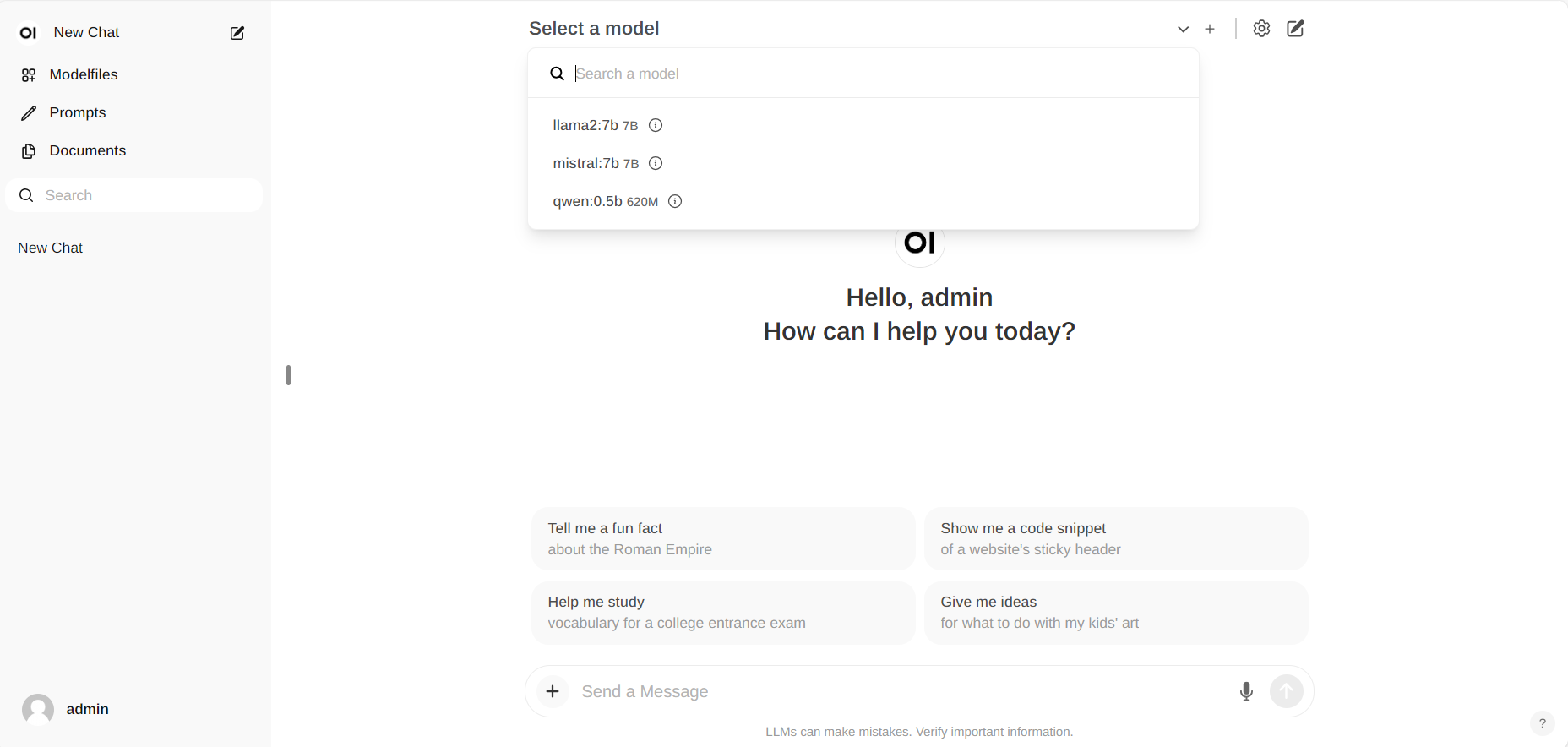

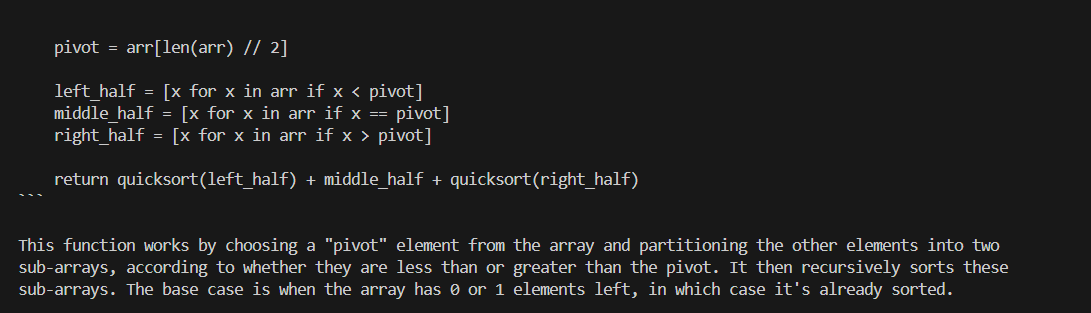

+# Run Open WebUI on Linux with Intel GPU

+

+[Open WebUI](https://github.com/open-webui/open-webui) is a user friendly GUI for running LLM locally; by porting it to [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily run LLM in [Open WebUI](https://github.com/open-webui/open-webui) on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

+

+See the demo of running Mistral:7B on Intel Arc A770 below.

+

+

+

+## Quickstart

+

+This quickstart guide walks you through setting up and using [Open WebUI](https://github.com/open-webui/open-webui) with Ollama (using the C++ interface of [`ipex-llm`](https://github.com/intel-analytics/ipex-llm) as an accelerated backend).

+

+

+### 1 Run Ollama on Linux with Intel GPU

+

+Follow the instructions on the [Run Ollama on Linux with Intel GPU](ollama_quickstart.html) to install and run "Ollam Serve". Please ensure that the Ollama server continues to run while you're using the Open WebUI.

+

+### 2 Install and Run Open-Webui

+

+

+#### Installation

+

+```eval_rst

+.. note::

+

+ Package version requirements for running Open WebUI: Node.js (>= 20.10) or Bun (>= 1.0.21), Python (>= 3.11)

+```

+

+1. Run below commands to install Node.js & npm. Once the installation is complete, verify the installation by running ```node -v``` and ```npm -v``` to check the versions of Node.js and npm, respectively.

+ ```sh

+ sudo apt update

+ sudo apt install nodejs

+ sudo apt install npm

+ ```

+

+2. Use `git` to clone the [open-webui repo](https://github.com/open-webui/open-webui.git), or download the open-webui source code zip from [this link](https://github.com/open-webui/open-webui/archive/refs/heads/main.zip) and unzip it to a directory, e.g. `~/open-webui`.

+

+3. Run below commands to install Open WebUI.

+ ```sh

+ cd ~/open-webui/

+ cp -RPp .env.example .env # Copy required .env file

+

+ # Build frontend

+ npm i

+ npm run build

+

+ # Install Dependencies

+ cd ./backend

+ pip install -r requirements.txt -U

+ ```

+

+#### Start the service

+

+Run below commands to start the service:

+

+```sh

+export no_proxy=localhost,127.0.0.1

+bash start.sh

+```

+

+

+```eval_rst

+.. note::

+

+ If you have difficulty accessing the huggingface repositories, you may use a mirror, e.g. add `export HF_ENDPOINT=https://hf-mirror.com` before running `bash start.sh`.

+```

+

+#### Access the WebUI

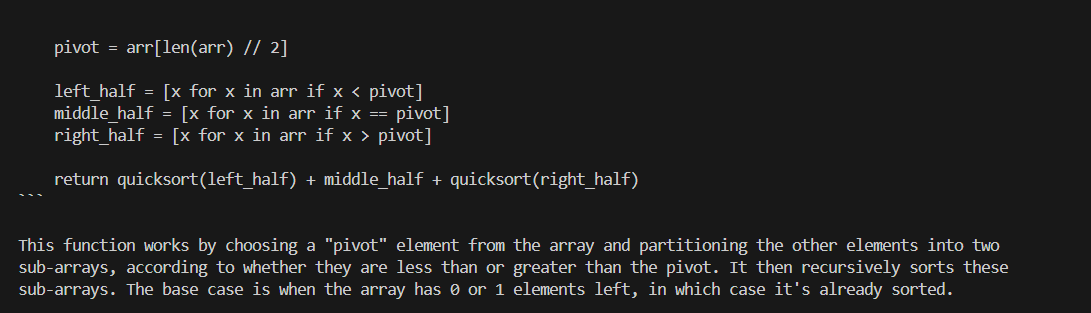

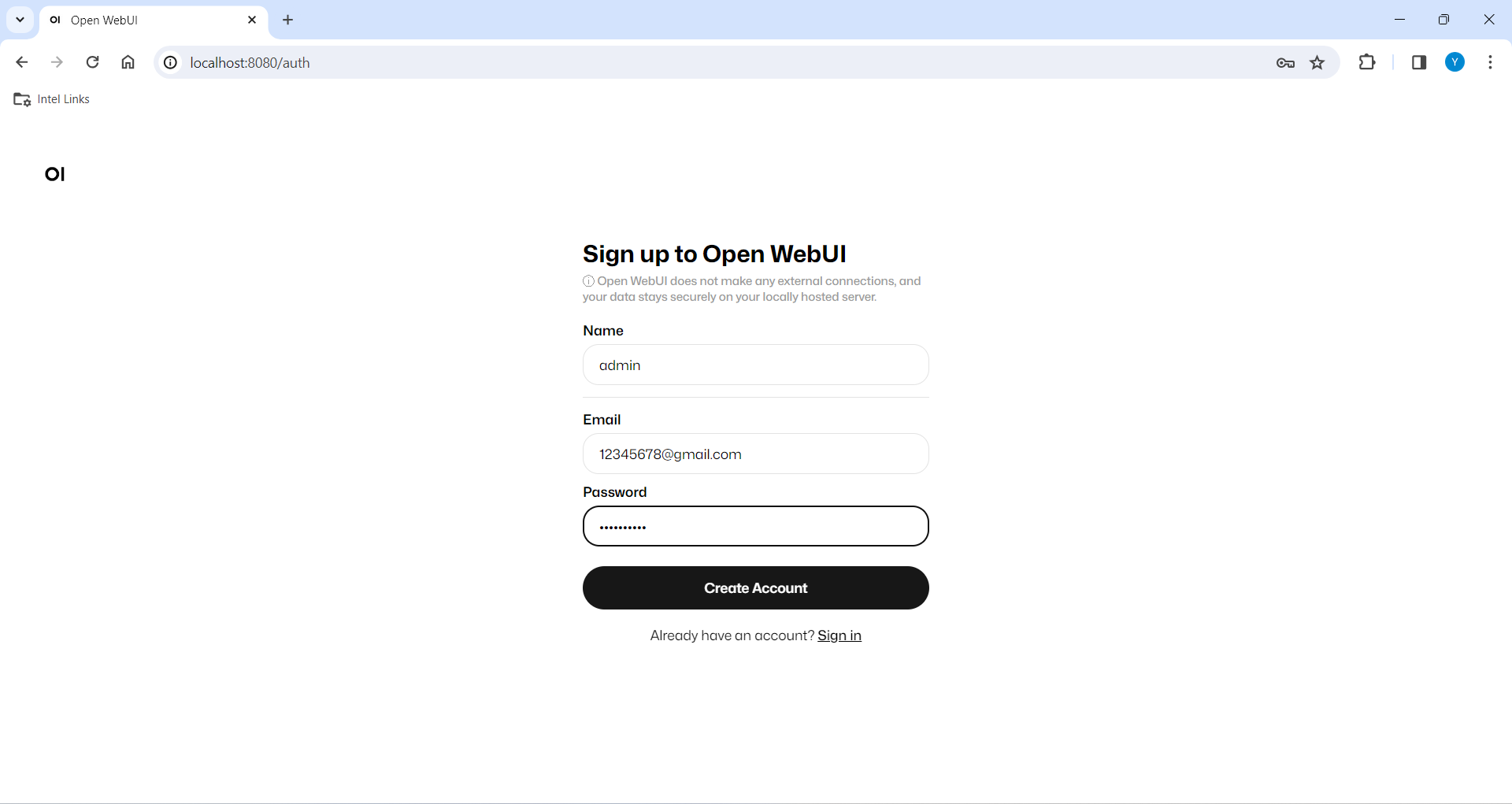

+Upon successful launch, URLs to access the WebUI will be displayed in the terminal. Open the provided local URL in your browser to interact with the WebUI, e.g. http://localhost:8080/.

+

+

+

+### 3. Using Open-Webui

+

+```eval_rst

+.. note::

+

+ For detailed information about how to use Open WebUI, visit the README of `open-webui official repository `_.

+

+```

+

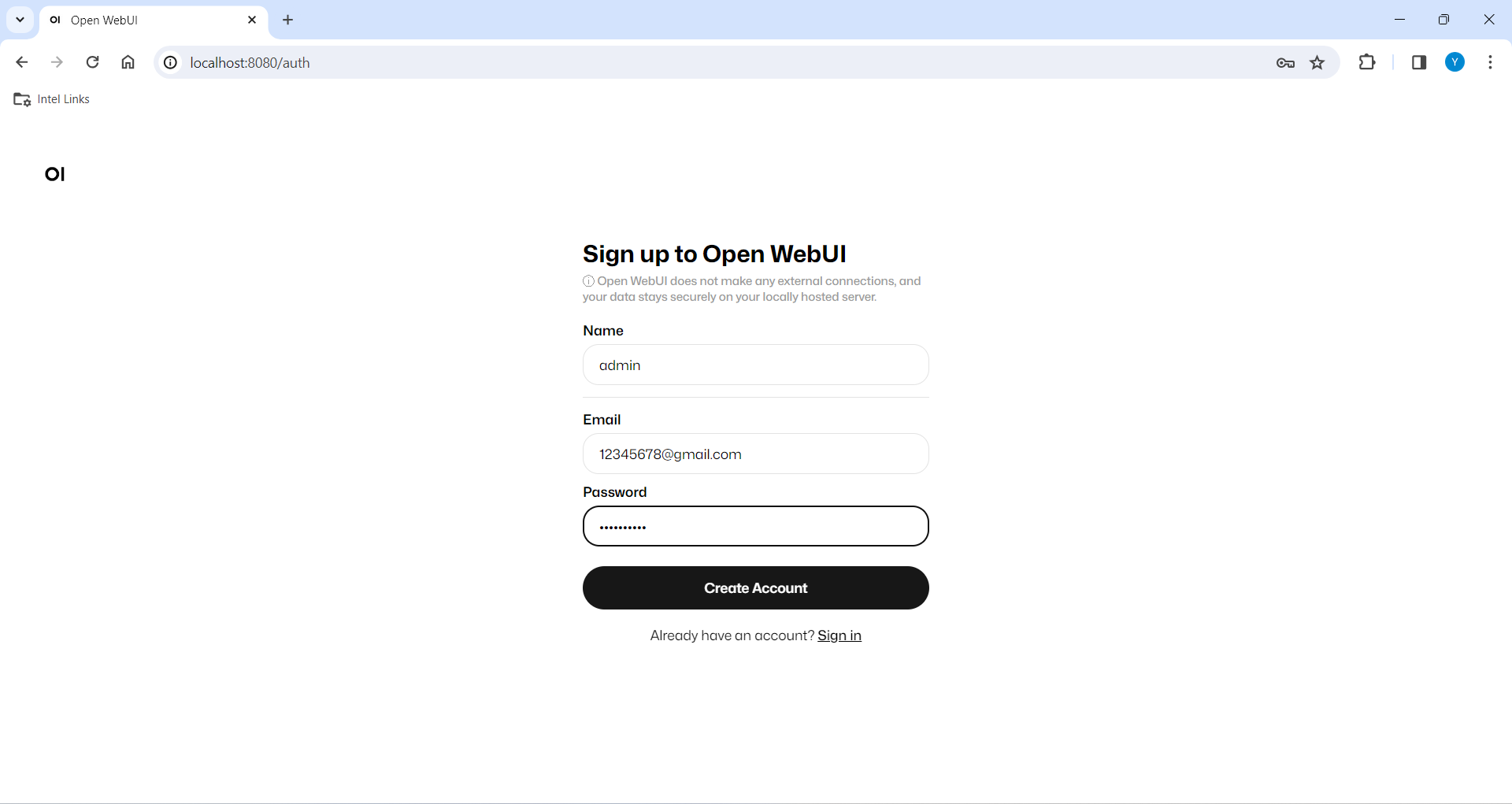

+#### Log-in

+

+If this is your first time using it, you need to register. After registering, log in with the registered account to access the interface.

+

+

+

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

new file mode 100644

index 00000000..d6de90df

--- /dev/null

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

@@ -0,0 +1,151 @@

+# Run Open WebUI on Linux with Intel GPU

+

+[Open WebUI](https://github.com/open-webui/open-webui) is a user friendly GUI for running LLM locally; by porting it to [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can now easily run LLM in [Open WebUI](https://github.com/open-webui/open-webui) on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

+

+See the demo of running Mistral:7B on Intel Arc A770 below.

+

+

+

+## Quickstart

+

+This quickstart guide walks you through setting up and using [Open WebUI](https://github.com/open-webui/open-webui) with Ollama (using the C++ interface of [`ipex-llm`](https://github.com/intel-analytics/ipex-llm) as an accelerated backend).

+

+

+### 1 Run Ollama on Linux with Intel GPU

+

+Follow the instructions on the [Run Ollama on Linux with Intel GPU](ollama_quickstart.html) to install and run "Ollam Serve". Please ensure that the Ollama server continues to run while you're using the Open WebUI.

+

+### 2 Install and Run Open-Webui

+

+

+#### Installation

+

+```eval_rst

+.. note::

+

+ Package version requirements for running Open WebUI: Node.js (>= 20.10) or Bun (>= 1.0.21), Python (>= 3.11)

+```

+

+1. Run below commands to install Node.js & npm. Once the installation is complete, verify the installation by running ```node -v``` and ```npm -v``` to check the versions of Node.js and npm, respectively.

+ ```sh

+ sudo apt update

+ sudo apt install nodejs

+ sudo apt install npm

+ ```

+

+2. Use `git` to clone the [open-webui repo](https://github.com/open-webui/open-webui.git), or download the open-webui source code zip from [this link](https://github.com/open-webui/open-webui/archive/refs/heads/main.zip) and unzip it to a directory, e.g. `~/open-webui`.

+

+3. Run below commands to install Open WebUI.

+ ```sh

+ cd ~/open-webui/

+ cp -RPp .env.example .env # Copy required .env file

+

+ # Build frontend

+ npm i

+ npm run build

+

+ # Install Dependencies

+ cd ./backend

+ pip install -r requirements.txt -U

+ ```

+

+#### Start the service

+

+Run below commands to start the service:

+

+```sh

+export no_proxy=localhost,127.0.0.1

+bash start.sh

+```

+

+

+```eval_rst

+.. note::

+

+ If you have difficulty accessing the huggingface repositories, you may use a mirror, e.g. add `export HF_ENDPOINT=https://hf-mirror.com` before running `bash start.sh`.

+```

+

+#### Access the WebUI

+Upon successful launch, URLs to access the WebUI will be displayed in the terminal. Open the provided local URL in your browser to interact with the WebUI, e.g. http://localhost:8080/.

+

+

+

+### 3. Using Open-Webui

+

+```eval_rst

+.. note::

+

+ For detailed information about how to use Open WebUI, visit the README of `open-webui official repository `_.

+

+```

+

+#### Log-in

+

+If this is your first time using it, you need to register. After registering, log in with the registered account to access the interface.

+

+

+  +

+

+

+

+

+

+

+

+

+  +

+

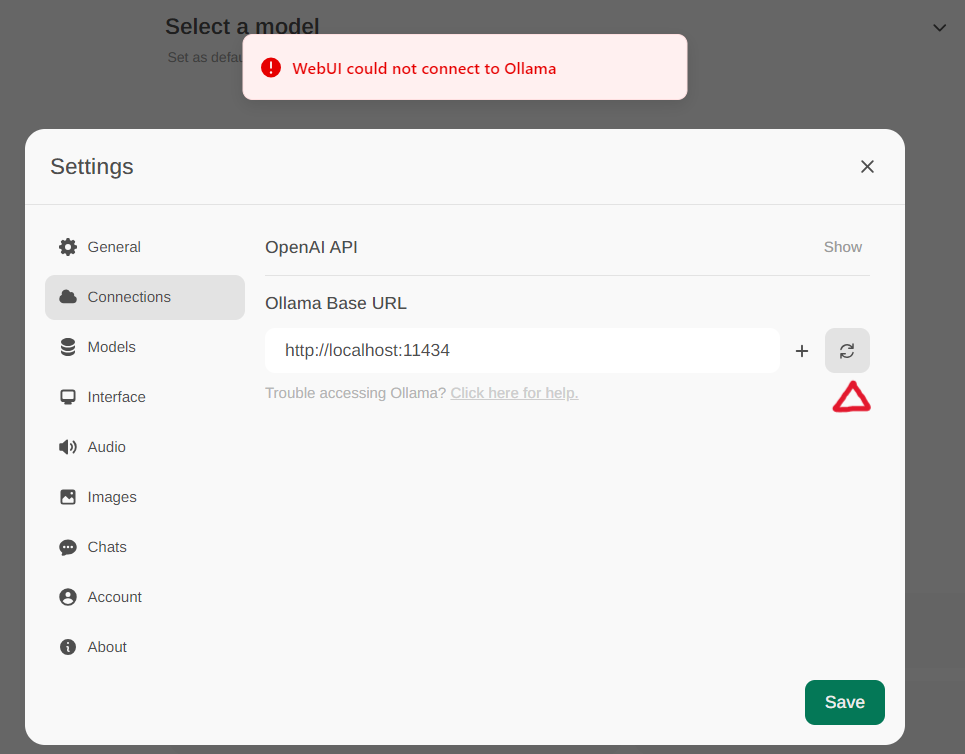

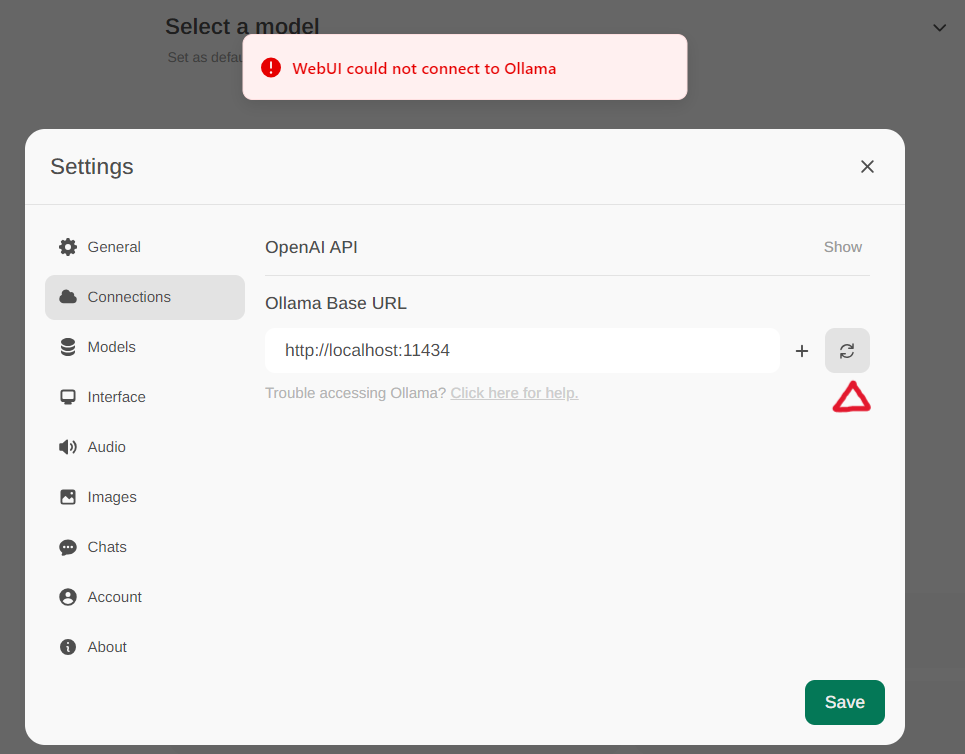

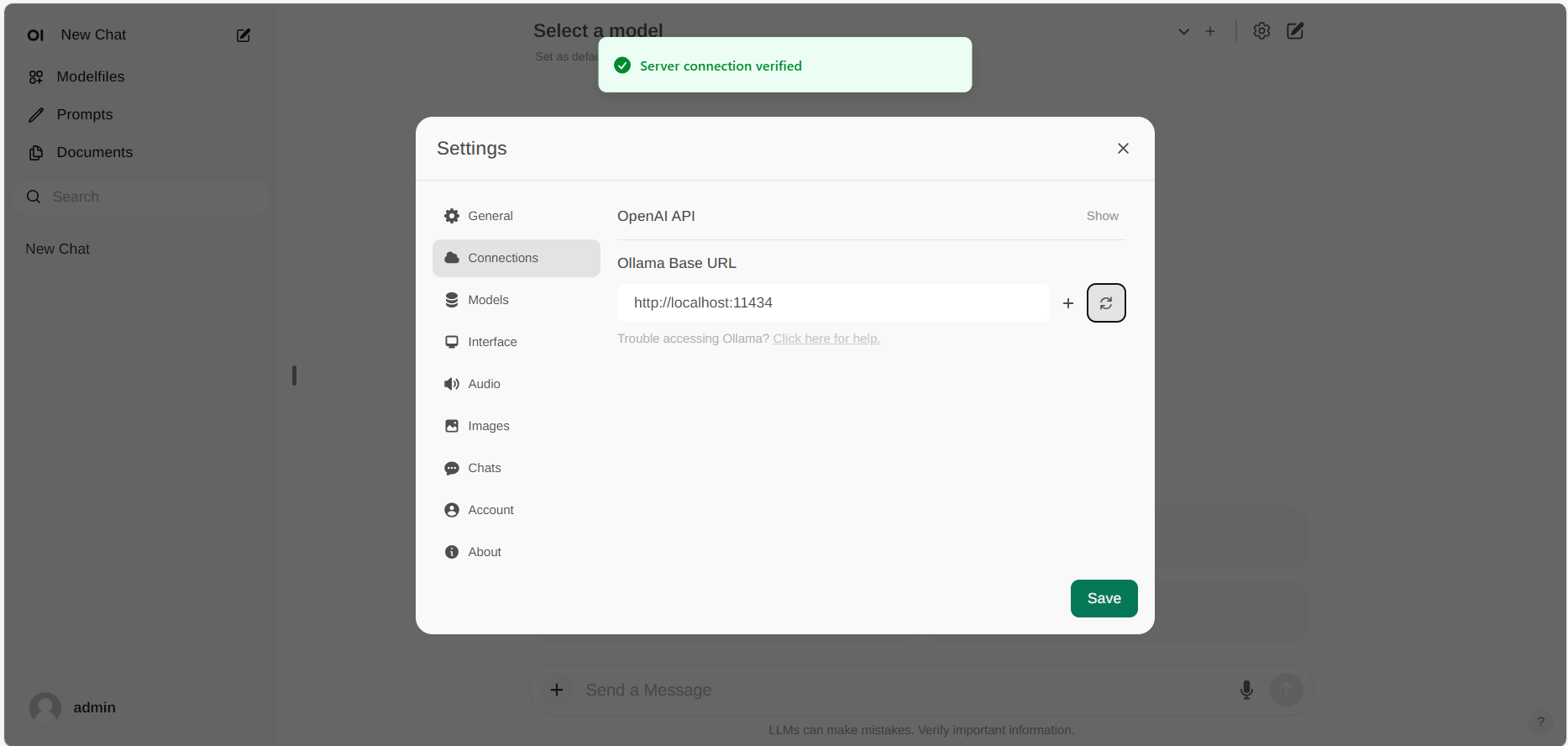

+#### Configure `Ollama` service URL

+

+Access the Ollama settings through **Settings -> Connections** in the menu. By default, the **Ollama Base URL** is preset to https://localhost:11434, as illustrated in the snapshot below. To verify the status of the Ollama service connection, click the **Refresh button** located next to the textbox. If the WebUI is unable to establish a connection with the Ollama server, you will see an error message stating, `WebUI could not connect to Ollama`.

+

+

+

+

+

+

+#### Configure `Ollama` service URL

+

+Access the Ollama settings through **Settings -> Connections** in the menu. By default, the **Ollama Base URL** is preset to https://localhost:11434, as illustrated in the snapshot below. To verify the status of the Ollama service connection, click the **Refresh button** located next to the textbox. If the WebUI is unable to establish a connection with the Ollama server, you will see an error message stating, `WebUI could not connect to Ollama`.

+

+

+

+  +

+

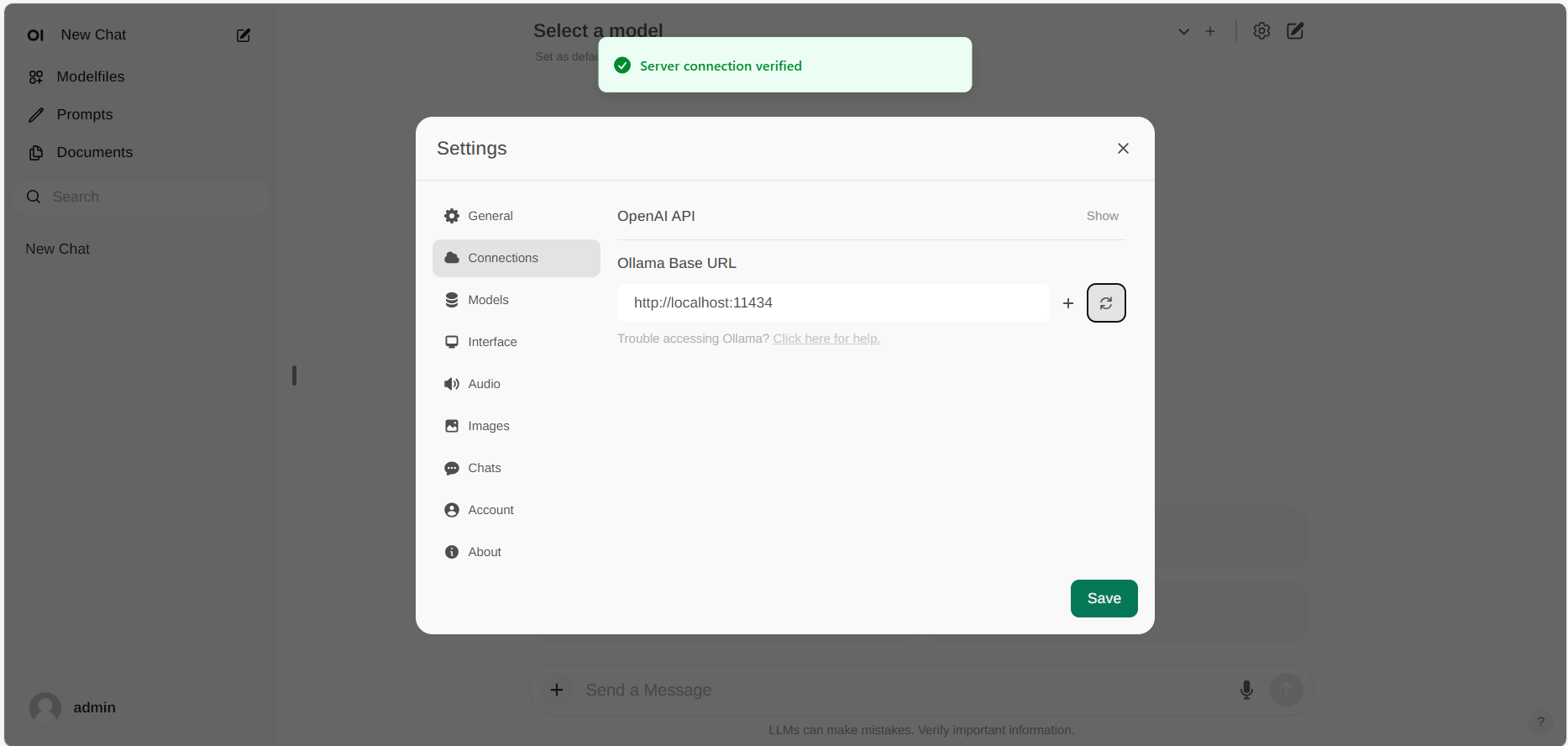

+If the connection is successful, you will see a message stating `Service Connection Verified`, as illustrated below.

+

+

+

+

+

+If the connection is successful, you will see a message stating `Service Connection Verified`, as illustrated below.

+

+

+  +

+

+```eval_rst

+.. note::

+

+ If you want to use an Ollama server hosted at a different URL, simply update the **Ollama Base URL** to the new URL and press the **Refresh** button to re-confirm the connection to Ollama.

+```

+

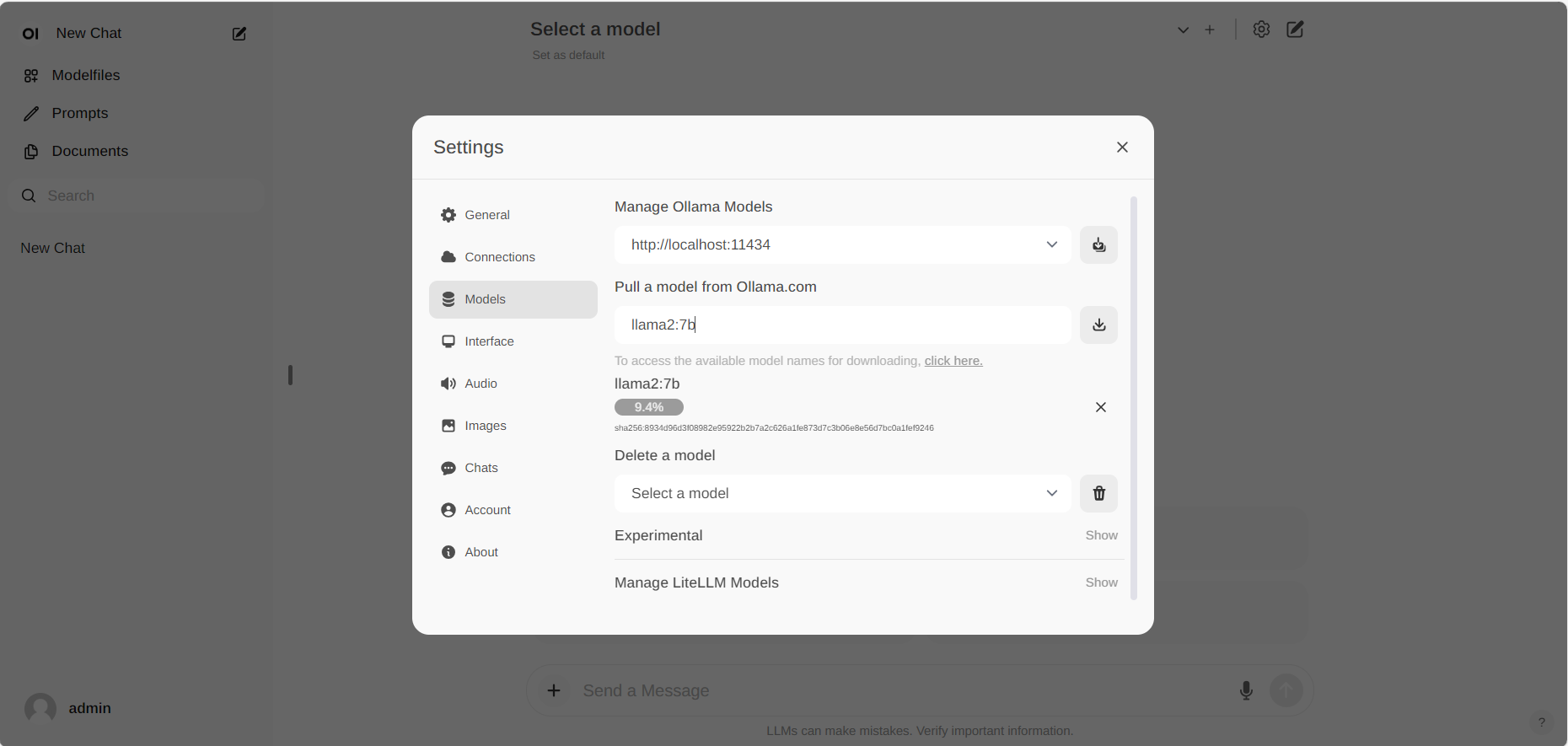

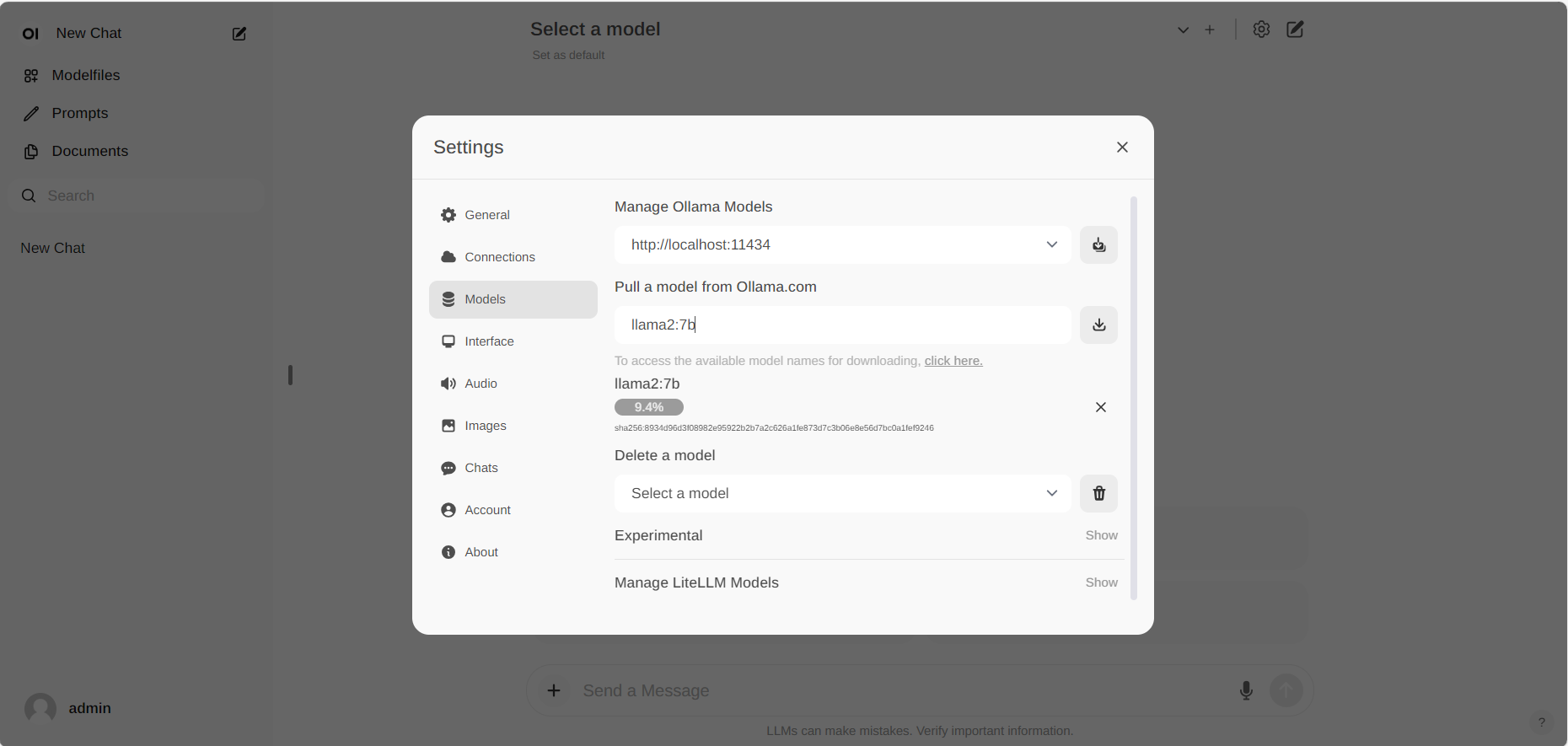

+#### Pull Model

+

+Go to **Settings -> Models** in the menu, choose a model under **Pull a model from Ollama.com** using the drop-down menu, and then hit the **Download** button on the right. Ollama will automatically download the selected model for you.

+

+

+

+

+

+```eval_rst

+.. note::

+

+ If you want to use an Ollama server hosted at a different URL, simply update the **Ollama Base URL** to the new URL and press the **Refresh** button to re-confirm the connection to Ollama.

+```

+

+#### Pull Model

+

+Go to **Settings -> Models** in the menu, choose a model under **Pull a model from Ollama.com** using the drop-down menu, and then hit the **Download** button on the right. Ollama will automatically download the selected model for you.

+

+

+  +

+

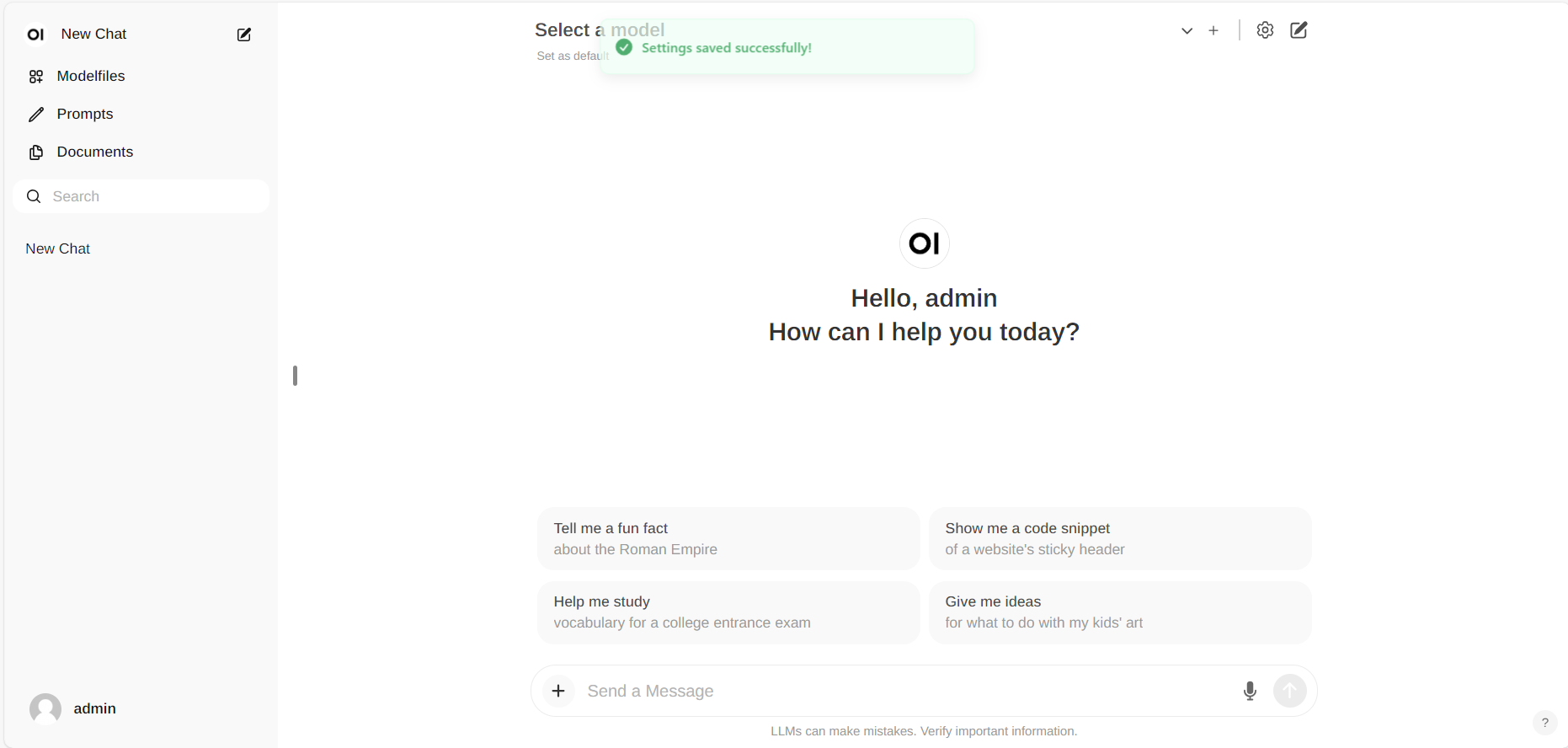

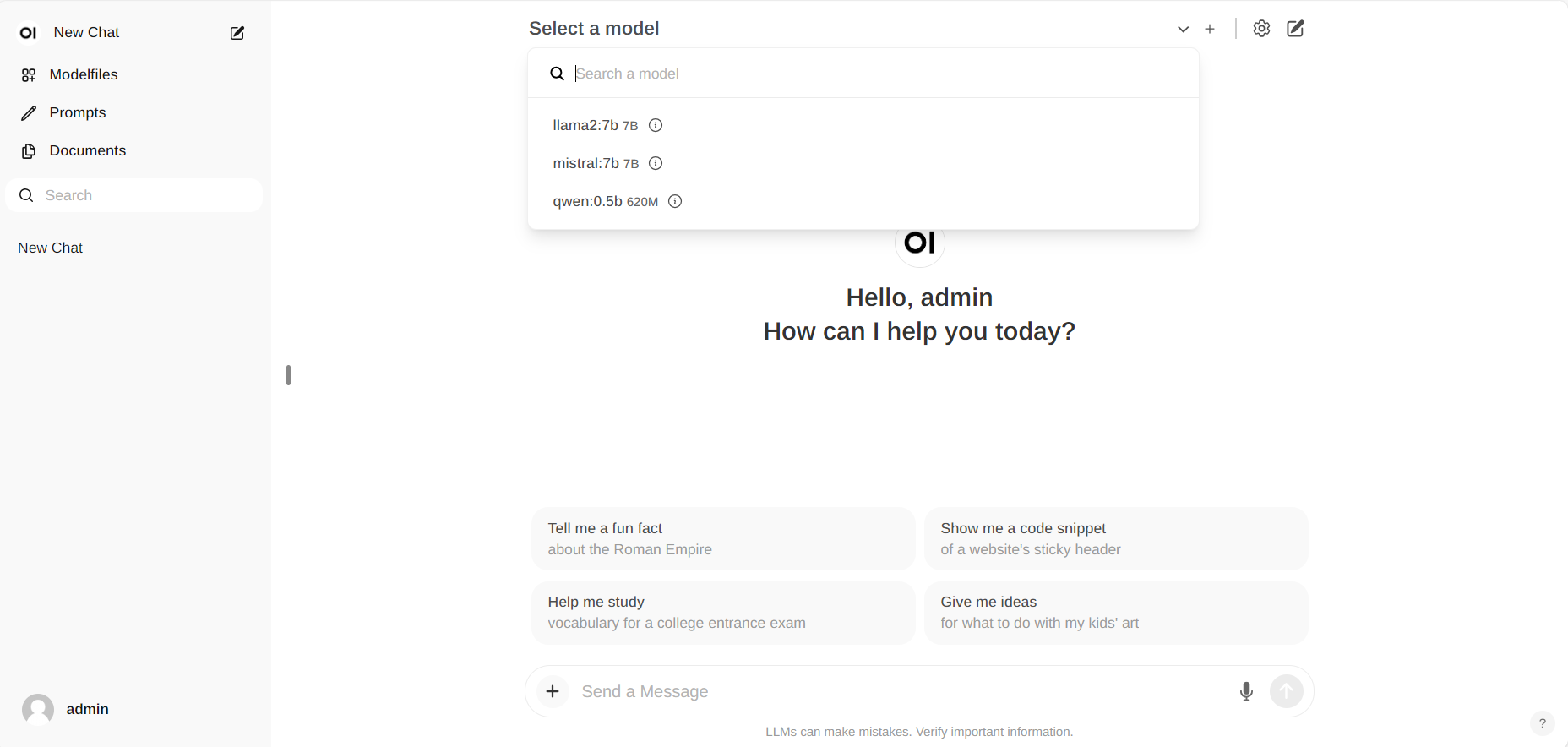

+#### Chat with the Model

+

+Start new conversations with **New chat** in the left-side menu.

+

+On the right-side, choose a downloaded model from the **Select a model** drop-down menu at the top, input your questions into the **Send a Message** textbox at the bottom, and click the button on the right to get responses.

+

+

+

+

+

+#### Chat with the Model

+

+Start new conversations with **New chat** in the left-side menu.

+

+On the right-side, choose a downloaded model from the **Select a model** drop-down menu at the top, input your questions into the **Send a Message** textbox at the bottom, and click the button on the right to get responses.

+

+

+  +

+

+

+

+

+

+

+

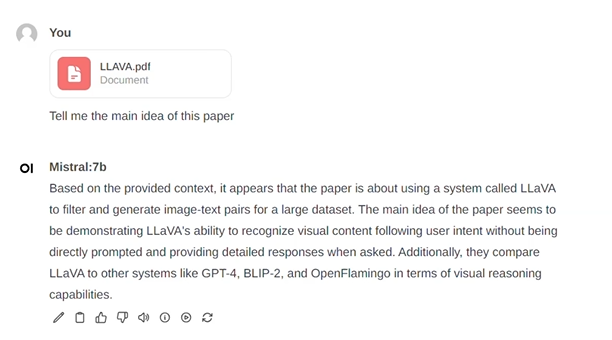

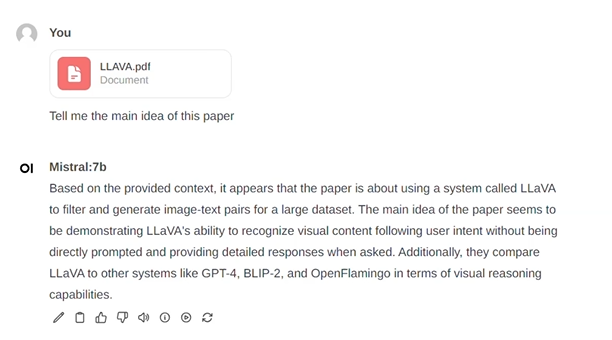

+Additionally, you can drag and drop a document into the textbox, allowing the LLM to access its contents. The LLM will then generate answers based on the document provided.

+

+

+  +

+

+#### Exit Open-Webui

+

+To shut down the open-webui server, use **Ctrl+C** in the terminal where the open-webui server is runing, then close your browser tab.

+

+

+### 4. Troubleshooting

+

+##### Error `No module named 'torch._C`

+

+When you encounter the error ``ModuleNotFoundError: No module named 'torch._C'`` after executing ```bash start.sh```, you can resolve it by reinstalling PyTorch. First, use ```pip uninstall torch``` to remove the existing PyTorch installation, and then reinstall it along with its dependencies by running ```pip install torch torchvision torchaudio```.

diff --git a/docs/readthedocs/source/index.rst b/docs/readthedocs/source/index.rst

index 086ffe75..efe4728f 100644

--- a/docs/readthedocs/source/index.rst

+++ b/docs/readthedocs/source/index.rst

@@ -33,7 +33,7 @@

It is built on top of Intel Extension for PyTorch (

+

+

+#### Exit Open-Webui

+

+To shut down the open-webui server, use **Ctrl+C** in the terminal where the open-webui server is runing, then close your browser tab.

+

+

+### 4. Troubleshooting

+

+##### Error `No module named 'torch._C`

+

+When you encounter the error ``ModuleNotFoundError: No module named 'torch._C'`` after executing ```bash start.sh```, you can resolve it by reinstalling PyTorch. First, use ```pip uninstall torch``` to remove the existing PyTorch installation, and then reinstall it along with its dependencies by running ```pip install torch torchvision torchaudio```.

diff --git a/docs/readthedocs/source/index.rst b/docs/readthedocs/source/index.rst

index 086ffe75..efe4728f 100644

--- a/docs/readthedocs/source/index.rst

+++ b/docs/readthedocs/source/index.rst

@@ -33,7 +33,7 @@

It is built on top of Intel Extension for PyTorch (IPEX), as well as the excellent work of llama.cpp, bitsandbytes, vLLM, qlora, AutoGPTQ, AutoAWQ, etc.

- It provides seamless integration with llama.cpp, Text-Generation-WebUI, HuggingFace transformers, HuggingFace PEFT, LangChain, LlamaIndex, DeepSpeed-AutoTP, vLLM, FastChat, HuggingFace TRL, AutoGen, ModeScope, etc.

+ It provides seamless integration with llama.cpp, ollama, Text-Generation-WebUI, HuggingFace transformers, HuggingFace PEFT, LangChain, LlamaIndex, DeepSpeed-AutoTP, vLLM, FastChat, HuggingFace TRL, AutoGen, ModeScope, etc.

50+ models have been optimized/verified on ipex-llm (including LLaMA2, Mistral, Mixtral, Gemma, LLaVA, Whisper, ChatGLM, Baichuan, Qwen, RWKV, and more); see the complete list here.

@@ -44,6 +44,8 @@

************************************************

Latest update 🔥

************************************************

+

+* [2024/04] ``ipex-llm`` now provides C++ interface, which can be used as an accelerated backend for running `llama.cpp `_ and `ollama `_ on Intel GPU.

* [2024/03] ``bigdl-llm`` has now become ``ipex-llm`` (see the migration guide `here `_); you may find the original ``BigDL`` project `here `_.

* [2024/02] ``ipex-llm`` now supports directly loading model from `ModelScope `_ (`魔搭 `_).

* [2024/02] ``ipex-llm`` added inital **INT2** support (based on llama.cpp `IQ2 `_ mechanism), which makes it possible to run large-size LLM (e.g., Mixtral-8x7B) on Intel GPU with 16GB VRAM.

@@ -106,6 +108,10 @@ See the **optimized performance** of ``chatglm2-6b`` and ``llama-2-13b-chat`` mo

``ipex-llm`` Quickstart

************************************************

+============================================

+Install ``ipex-llm``

+============================================

+

* `Windows GPU `_: installing ``ipex-llm`` on Windows with Intel GPU

* `Linux GPU `_: installing ``ipex-llm`` on Linux with Intel GPU

* `Docker `_: using ``ipex-llm`` dockers on Intel CPU and GPU

@@ -118,7 +124,8 @@ See the **optimized performance** of ``chatglm2-6b`` and ``llama-2-13b-chat`` mo

Run ``ipex-llm``

============================================

-* `llama.cpp `_: running **ipex-llm for llama.cpp** (*using C++ interface of* ``ipex-llm`` *as an accelerated backend for* ``llama.cpp`` *on Intel GPU*)

+* `llama.cpp `_: running **llama.cpp** (*using C++ interface of* ``ipex-llm`` *as an accelerated backend for* ``llama.cpp``) on Intel GPU

+* `ollama `_: running **ollama** (*using C++ interface of* ``ipex-llm`` *as an accelerated backend for* ``ollama``) on Intel GPU

* `vLLM `_: running ``ipex-llm`` in ``vLLM`` on both Intel `GPU `_ and `CPU `_

* `FastChat `_: running ``ipex-llm`` in ``FastChat`` serving on on both Intel GPU and CPU

* `LangChain-Chatchat RAG `_: running ``ipex-llm`` in ``LangChain-Chatchat`` (*Knowledge Base QA using* **RAG** *pipeline*)

diff --git a/python/llm/dev/benchmark/harness/README.md b/python/llm/dev/benchmark/harness/README.md

index 4dfcf09a..50ec4b86 100644

--- a/python/llm/dev/benchmark/harness/README.md

+++ b/python/llm/dev/benchmark/harness/README.md

@@ -30,6 +30,6 @@ Taking example above, the script will fork 3 processes, each for one xpu, to exe

## Results

We follow [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) to record our metrics, `acc_norm` for `hellaswag` and `arc_challenge`, `mc2` for `truthful_qa` and `acc` for `mmlu`. For `mmlu`, there are 57 subtasks which means users may need to average them manually to get final result.

## Summarize the results

-"""python

+```python

python make_table.py

-"""

\ No newline at end of file

+```

diff --git a/python/llm/dev/benchmark/harness/harness_to_leaderboard.py b/python/llm/dev/benchmark/harness/harness_to_leaderboard.py

index 82cdc341..5dd04b9a 100644

--- a/python/llm/dev/benchmark/harness/harness_to_leaderboard.py

+++ b/python/llm/dev/benchmark/harness/harness_to_leaderboard.py

@@ -48,7 +48,7 @@ task_to_metric = dict(

drop='f1'

)

-def parse_precision(precision, model="bigdl-llm"):

+def parse_precision(precision, model="ipex-llm"):

result = match(r"([a-zA-Z_]+)(\d+)([a-zA-Z_\d]*)", precision)

datatype = result.group(1)

bit = int(result.group(2))

@@ -62,6 +62,6 @@ def parse_precision(precision, model="bigdl-llm"):

else:

if model == "hf-causal":

return f"bnb_type={precision}"

- if model == "bigdl-llm":

+ if model == "ipex-llm":

return f"load_in_low_bit={precision}"

raise RuntimeError(f"invald precision {precision}")

diff --git a/python/llm/dev/benchmark/harness/bigdl_llm.py b/python/llm/dev/benchmark/harness/ipexllm.py

similarity index 98%

rename from python/llm/dev/benchmark/harness/bigdl_llm.py

rename to python/llm/dev/benchmark/harness/ipexllm.py

index 8626fc1a..0049f1e4 100644

--- a/python/llm/dev/benchmark/harness/bigdl_llm.py

+++ b/python/llm/dev/benchmark/harness/ipexllm.py

@@ -35,7 +35,7 @@ def force_decrease_order(Reorderer):

utils.Reorderer = force_decrease_order(utils.Reorderer)

-class BigDLLM(AutoCausalLM):

+class IPEXLLM(AutoCausalLM):

AUTO_MODEL_CLASS = AutoModelForCausalLM

AutoCausalLM_ARGS = inspect.getfullargspec(AutoCausalLM.__init__).args

def __init__(self, *args, **kwargs):

diff --git a/python/llm/dev/benchmark/harness/run_llb.py b/python/llm/dev/benchmark/harness/run_llb.py

index 3e8bd03a..a3ab55b0 100644

--- a/python/llm/dev/benchmark/harness/run_llb.py

+++ b/python/llm/dev/benchmark/harness/run_llb.py

@@ -20,8 +20,8 @@ import os

from harness_to_leaderboard import *

from lm_eval import tasks, evaluator, utils, models

-from bigdl_llm import BigDLLM

-models.MODEL_REGISTRY['bigdl-llm'] = BigDLLM # patch bigdl-llm to harness

+from ipexllm import IPEXLLM

+models.MODEL_REGISTRY['ipex-llm'] = IPEXLLM # patch ipex-llm to harness

logging.getLogger("openai").setLevel(logging.WARNING)

diff --git a/python/llm/dev/benchmark/harness/run_multi_llb.py b/python/llm/dev/benchmark/harness/run_multi_llb.py

index 77596b6d..7f4b2df3 100644

--- a/python/llm/dev/benchmark/harness/run_multi_llb.py

+++ b/python/llm/dev/benchmark/harness/run_multi_llb.py

@@ -22,8 +22,9 @@ from lm_eval import tasks, evaluator, utils, models

from multiprocessing import Queue, Process

import multiprocessing as mp

from contextlib import redirect_stdout, redirect_stderr

-from bigdl_llm import BigDLLM

-models.MODEL_REGISTRY['bigdl-llm'] = BigDLLM # patch bigdl-llm to harness

+

+from ipexllm import IPEXLLM

+models.MODEL_REGISTRY['ipex-llm'] = IPEXLLM # patch ipex-llm to harness

logging.getLogger("openai").setLevel(logging.WARNING)

diff --git a/python/llm/dev/benchmark/perplexity/README.md b/python/llm/dev/benchmark/perplexity/README.md

index bdee4593..bcf42ff4 100644

--- a/python/llm/dev/benchmark/perplexity/README.md

+++ b/python/llm/dev/benchmark/perplexity/README.md

@@ -20,6 +20,6 @@ python run.py --model_path meta-llama/Llama-2-7b-chat-hf --precisions float16 sy

- If you want to test perplexity on pre-downloaded datasets, please specify the `` in the `dataset_path` argument in your command.

## Summarize the results

-"""python

+```python

python make_table.py

-"""

\ No newline at end of file

+```

diff --git a/python/llm/example/CPU/Applications/autogen/README.md b/python/llm/example/CPU/Applications/autogen/README.md

index ceb9fd7a..de045510 100644

--- a/python/llm/example/CPU/Applications/autogen/README.md

+++ b/python/llm/example/CPU/Applications/autogen/README.md

@@ -11,7 +11,7 @@ mkdir autogen

cd autogen

# create respective conda environment

-conda create -n autogen python=3.9

+conda create -n autogen python=3.11

conda activate autogen

# install fastchat-adapted ipex-llm

diff --git a/python/llm/example/CPU/Applications/hf-agent/README.md b/python/llm/example/CPU/Applications/hf-agent/README.md

index edbae072..455f10ed 100644

--- a/python/llm/example/CPU/Applications/hf-agent/README.md

+++ b/python/llm/example/CPU/Applications/hf-agent/README.md

@@ -10,7 +10,7 @@ To run this example with IPEX-LLM, we have some recommended requirements for you

### 1. Install

We suggest using conda to manage environment:

```bash

-conda create -n llm python=3.9

+conda create -n llm python=3.11

conda activate llm

pip install ipex-llm[all] # install ipex-llm with 'all' option

diff --git a/python/llm/example/CPU/Applications/streaming-llm/README.md b/python/llm/example/CPU/Applications/streaming-llm/README.md

index a008b1d2..571f51a3 100644

--- a/python/llm/example/CPU/Applications/streaming-llm/README.md

+++ b/python/llm/example/CPU/Applications/streaming-llm/README.md

@@ -10,7 +10,7 @@ model = AutoModelForCausalLM.from_pretrained(model_name_or_path, load_in_4bit=Tr

## Prepare Environment

We suggest using conda to manage environment:

```bash

-conda create -n llm python=3.9

+conda create -n llm python=3.11

conda activate llm

pip install --pre --upgrade ipex-llm[all]

diff --git a/python/llm/example/CPU/Deepspeed-AutoTP/README.md b/python/llm/example/CPU/Deepspeed-AutoTP/README.md

index ed738567..45256563 100644

--- a/python/llm/example/CPU/Deepspeed-AutoTP/README.md

+++ b/python/llm/example/CPU/Deepspeed-AutoTP/README.md

@@ -2,7 +2,7 @@

#### 1. Install Dependencies

-Install necessary packages (here Python 3.9 is our test environment):

+Install necessary packages (here Python 3.11 is our test environment):

```bash

bash install.sh

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/AWQ/README.md b/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/AWQ/README.md

index cecbe84a..b3078cbd 100644

--- a/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/AWQ/README.md

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/AWQ/README.md

@@ -34,7 +34,7 @@ In the example [generate.py](./generate.py), we show a basic use case for a AWQ

We suggest using conda to manage environment:

```bash

-conda create -n llm python=3.9

+conda create -n llm python=3.11

conda activate llm

pip install autoawq==0.1.8 --no-deps

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GGUF/README.md b/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GGUF/README.md

index 33c28850..4741e604 100644

--- a/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GGUF/README.md

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GGUF/README.md

@@ -25,7 +25,7 @@ We suggest using conda to manage the Python environment. For more information ab

After installing conda, create a Python environment for IPEX-LLM:

```bash

-conda create -n llm python=3.9 # recommend to use Python 3.9

+conda create -n llm python=3.11 # recommend to use Python 3.11

conda activate llm

pip install --pre --upgrade ipex-llm[all] # install the latest ipex-llm nightly build with 'all' option

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GPTQ/README.md b/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GPTQ/README.md

index d91f997e..139fa014 100644

--- a/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GPTQ/README.md

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Advanced-Quantizations/GPTQ/README.md

@@ -9,7 +9,7 @@ In the example [generate.py](./generate.py), we show a basic use case for a Llam

### 1. Install

We suggest using conda to manage environment:

```bash

-conda create -n llm python=3.9

+conda create -n llm python=3.11

conda activate llm

pip install ipex-llm[all] # install ipex-llm with 'all' option

diff --git a/python/llm/example/CPU/HF-Transformers-AutoModels/Model/aquila/README.md b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/aquila/README.md

index 63468b19..8b3cfbf3 100644

--- a/python/llm/example/CPU/HF-Transformers-AutoModels/Model/aquila/README.md

+++ b/python/llm/example/CPU/HF-Transformers-AutoModels/Model/aquila/README.md

@@ -16,7 +16,7 @@ We suggest using conda to manage the Python environment. For more information ab

After installing conda, create a Python environment for IPEX-LLM:

```bash

-conda create -n llm python=3.9 # recommend to use Python 3.9

+conda create -n llm python=3.11 # recommend to use Python 3.11

conda activate llm

pip install --pre --upgrade ipex-llm[all] # install the latest ipex-llm nightly build with 'all' option