diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

new file mode 100644

index 00000000..af913fa8

--- /dev/null

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/open_webui_with_ollama_quickstart.md

@@ -0,0 +1,106 @@

+# Run Open-Webui with Ollama on Linux with Intel GPU

+

+[open-webui](https://github.com/open-webui/open-webui) provides a user-friendly GUI for running LLMs locally. By using it with [ollama](https://github.com/ollama/ollama) integrated with [`ipex-llm`](https://github.com/intel-analytics/ipex-llm), users can easily run LLMs in [open-webui](https://github.com/open-webui/open-webui) on Intel **GPU** *(e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)*.

+

+See the demo of running Mistral:7B on Intel Arc A770 below.

+

+

+

+## Quickstart

+This quickstart guide walks you through setting up and using [open-webui](https://github.com/open-webui/open-webui) with Ollama.

+

+

+### 1 Run Ollama on Linux with Intel GPU

+

+To use open-webui on Intel GPU, first make sure that you can run Ollama on Intel GPU by following the instructions in [Run Ollama on Linux with Intel GPU](ollama_quickstart.md). Keep the Ollama service running after you complete those steps.
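
Before moving on, it can help to confirm that the Ollama service is actually reachable. The snippet below is a minimal sketch, assuming Ollama listens on its usual default address `http://localhost:11434` and that `curl` is installed; the `OLLAMA_URL` variable and the `check_ollama` helper are names introduced here for illustration and are not part of ollama or open-webui.

```sh
# Illustrative helper (not part of ollama or open-webui): succeed only if
# an HTTP server answers at the given base URL within 5 seconds.
check_ollama() {
  curl --silent --fail --max-time 5 --output /dev/null "$1"
}

# Assumed default address; change it if Ollama runs on another machine.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

if check_ollama "$OLLAMA_URL"; then
  echo "Ollama service is reachable at $OLLAMA_URL"
else
  echo "Ollama service is NOT reachable at $OLLAMA_URL"
fi
```

If the check fails, revisit the Ollama quickstart before installing open-webui.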

+

+### 2 Install and Run Open-Webui

+

+**Requirements**

+

+- Node.js (>= 20.10) or Bun (>= 1.0.21)

+- Python (>= 3.11)
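
The requirements above can be checked from a terminal before starting the installation. This is a sketch rather than an official check: it assumes `node` and `python3` are the command names on your system and that `sort -V` (version sort, as in GNU coreutils) is available. The helpers `version_ge` and `report` are illustrative names; Bun is not checked here.

```sh
# Illustrative helper: true when version $1 is greater than or equal to $2.
# Relies on `sort -V` (version sort) to order the two version strings.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Illustrative helper: report whether a tool's version meets a minimum.
# $1 = label, $2 = detected version (may be empty), $3 = required minimum.
report() {
  if [ -z "$2" ]; then
    echo "$1: not found"
  elif version_ge "$2" "$3"; then
    echo "$1 $2: OK (>= $3)"
  else
    echo "$1 $2: too old (need >= $3)"
  fi
}

# `node --version` prints e.g. "v20.11.0"; strip the leading "v".
node_version="$(node --version 2>/dev/null | sed 's/^v//')"
# `python3 --version` prints e.g. "Python 3.11.7"; keep the second field.
python_version="$(python3 --version 2>/dev/null | awk '{print $2}')"

report "Node.js" "$node_version" "20.10"
report "Python" "$python_version" "3.11"
```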

+

+**Installation Steps**

+

+```sh

+# Optional: Use Hugging Face mirror for restricted areas

+export HF_ENDPOINT=https://hf-mirror.com

+

+# Bypass any configured proxy for local addresses (e.g., the local Ollama service)

+export no_proxy=localhost,127.0.0.1

+

+git clone https://github.com/open-webui/open-webui.git

+cd open-webui/

+cp -RPp .env.example .env # Copy required .env file

+

+# Build Frontend

+npm i

+npm run build

+

+# Serve Frontend with Backend

+cd ./backend

+pip install -r requirements.txt -U

+bash start.sh

+```

+

+open-webui will be accessible at http://localhost:8080/.

+

+> For detailed information, visit the [open-webui official repository](https://github.com/open-webui/open-webui).

+>

+

+### 3 Using Open-Webui

+

+#### Log-in and Pull Model

+

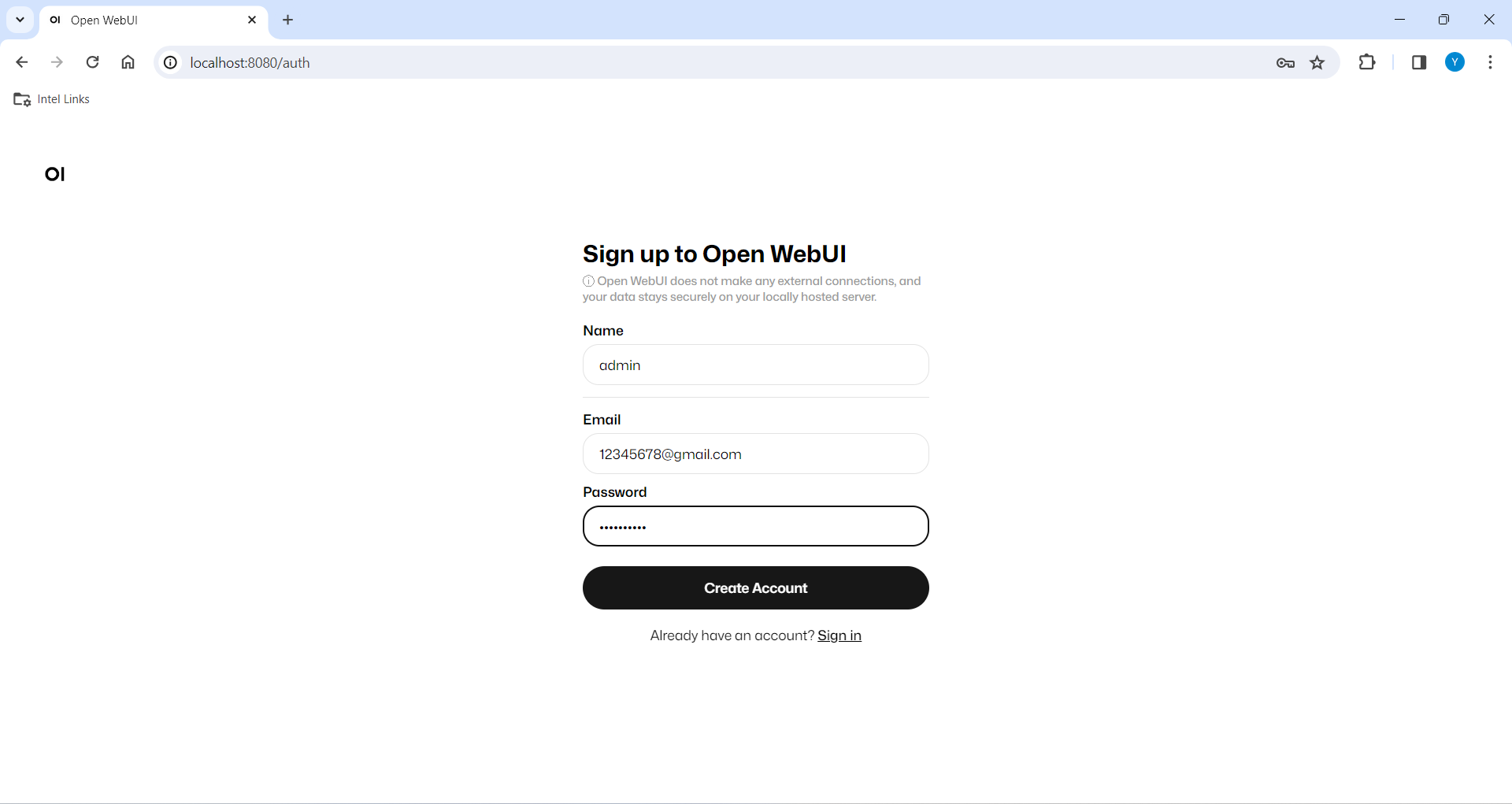

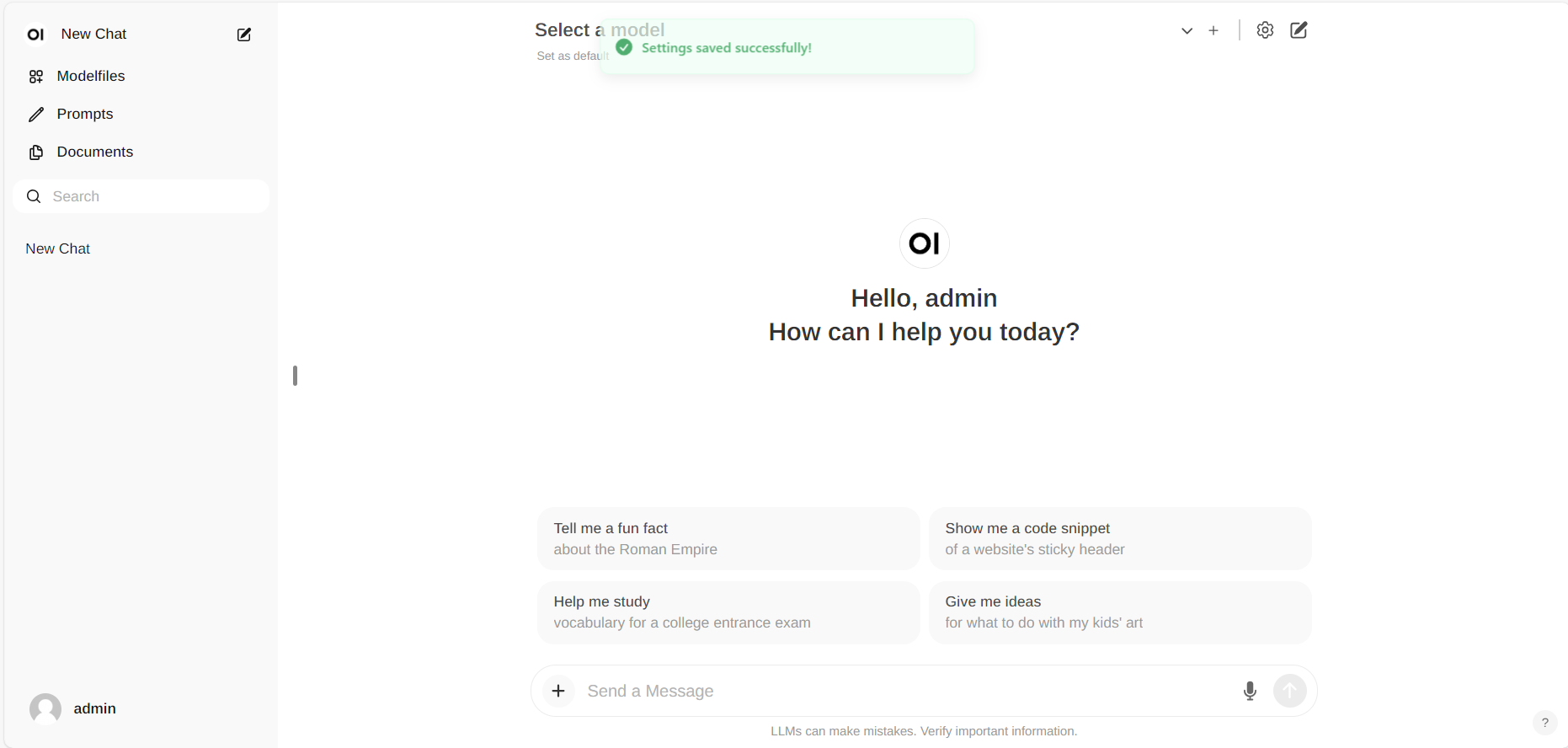

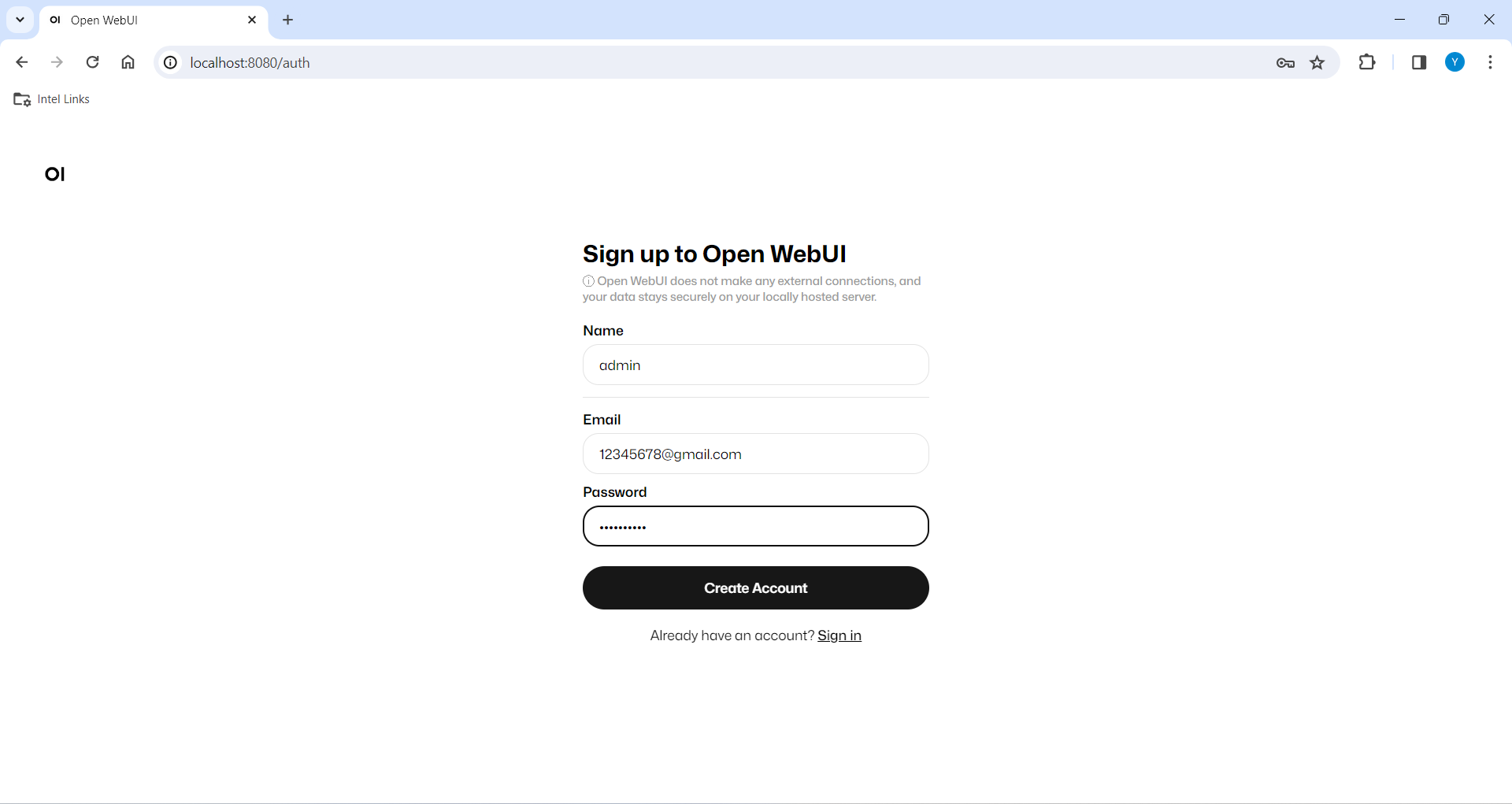

+If this is your first time using it, you need to register. After registering, log in with the registered account to access the interface.

+

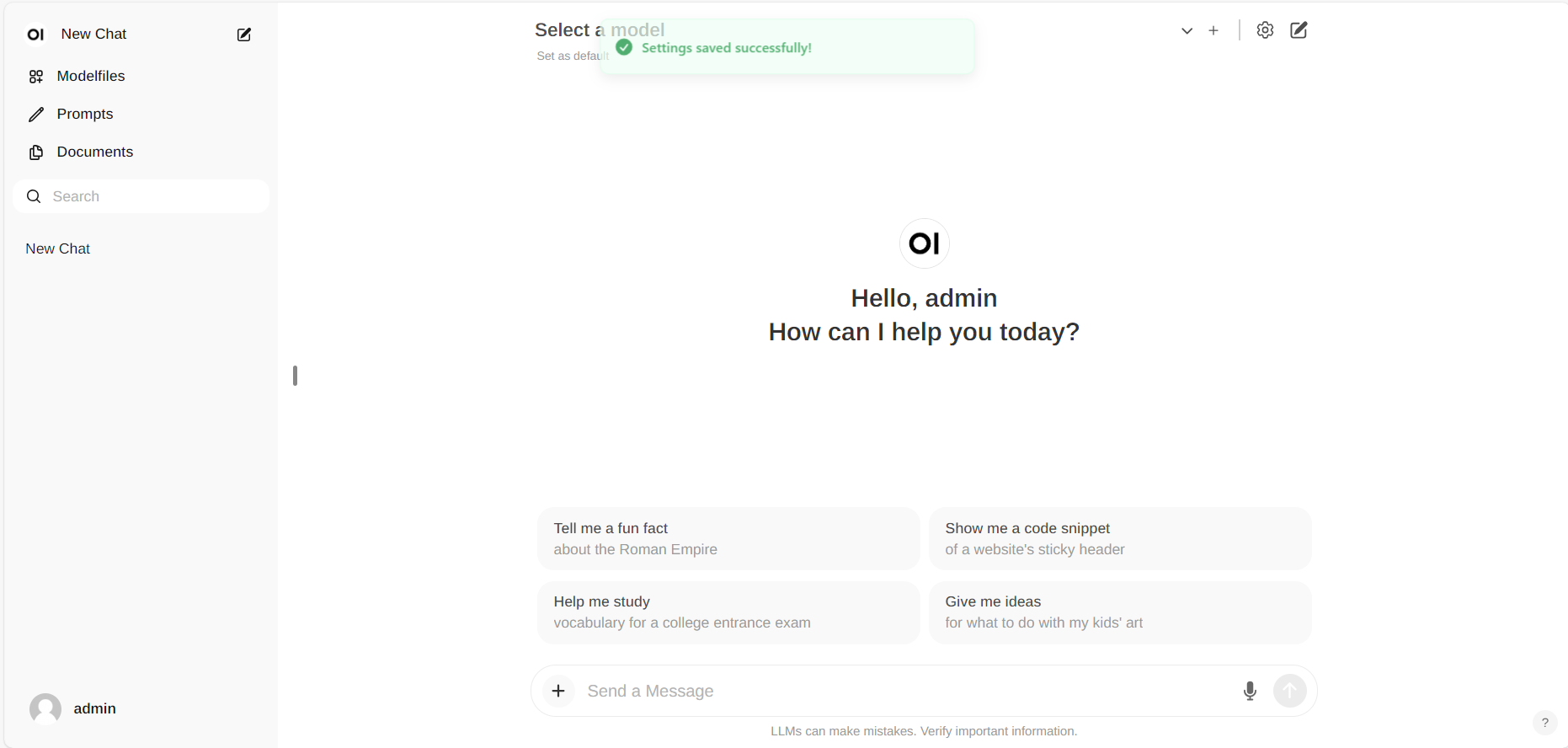


+

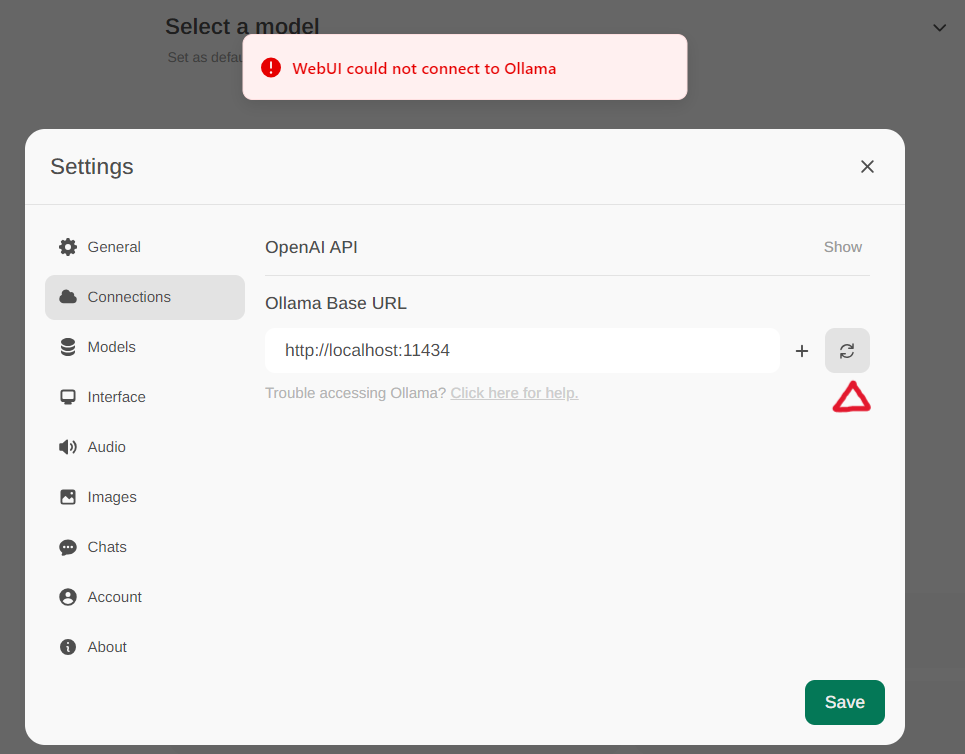

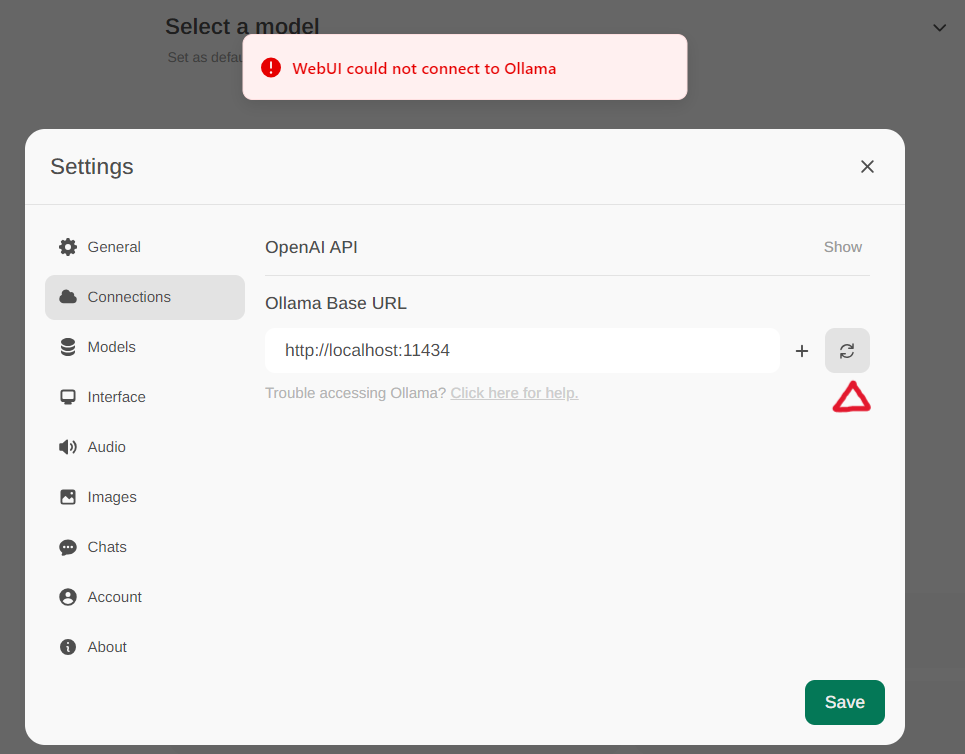

+Check your Ollama service connection in `Settings`. The default Ollama Base URL is `http://localhost:11434`; you can set a different URL if the Ollama service runs on another machine. Click the check button to verify that the connection to the Ollama service works. If it does not, an alert pops up as shown below.

+

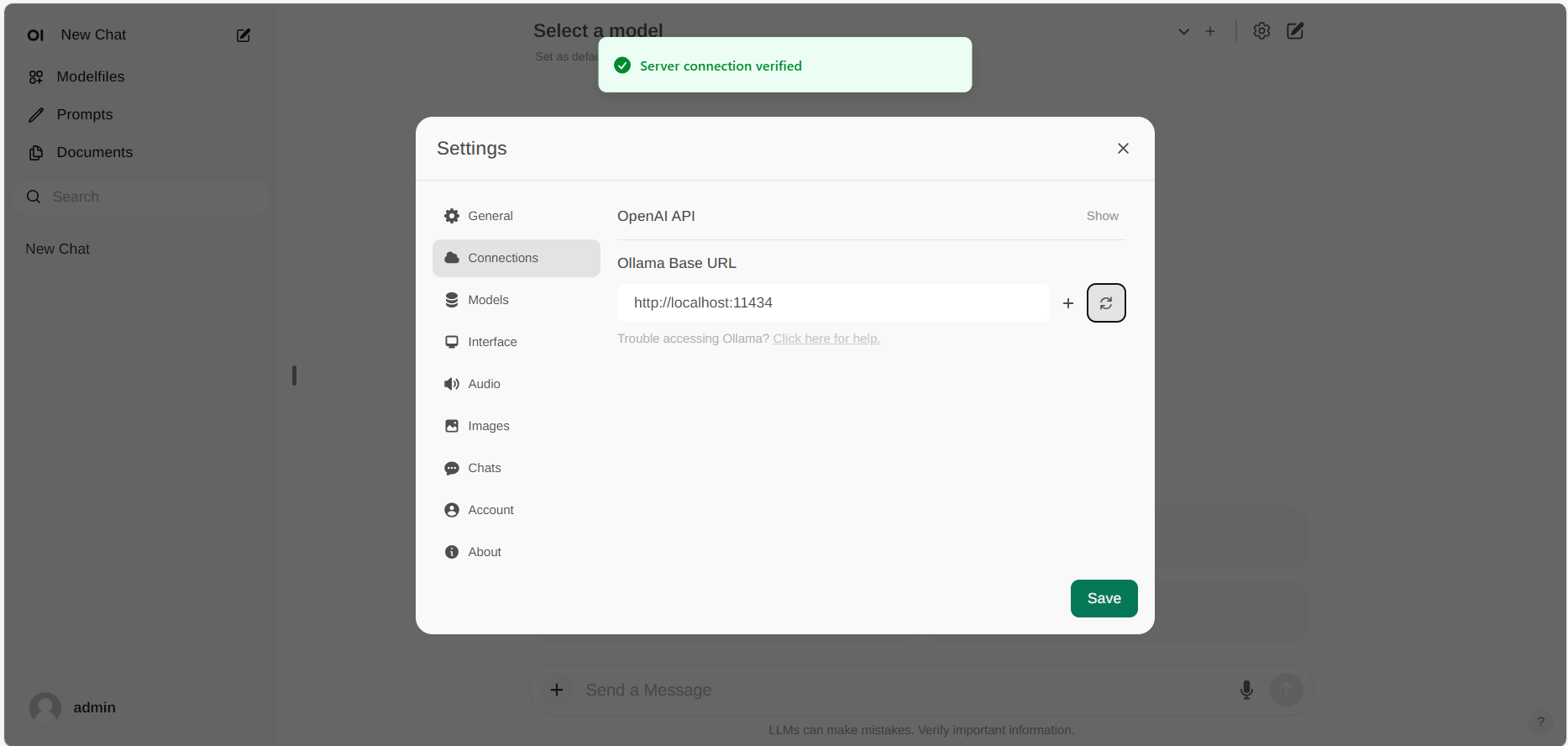

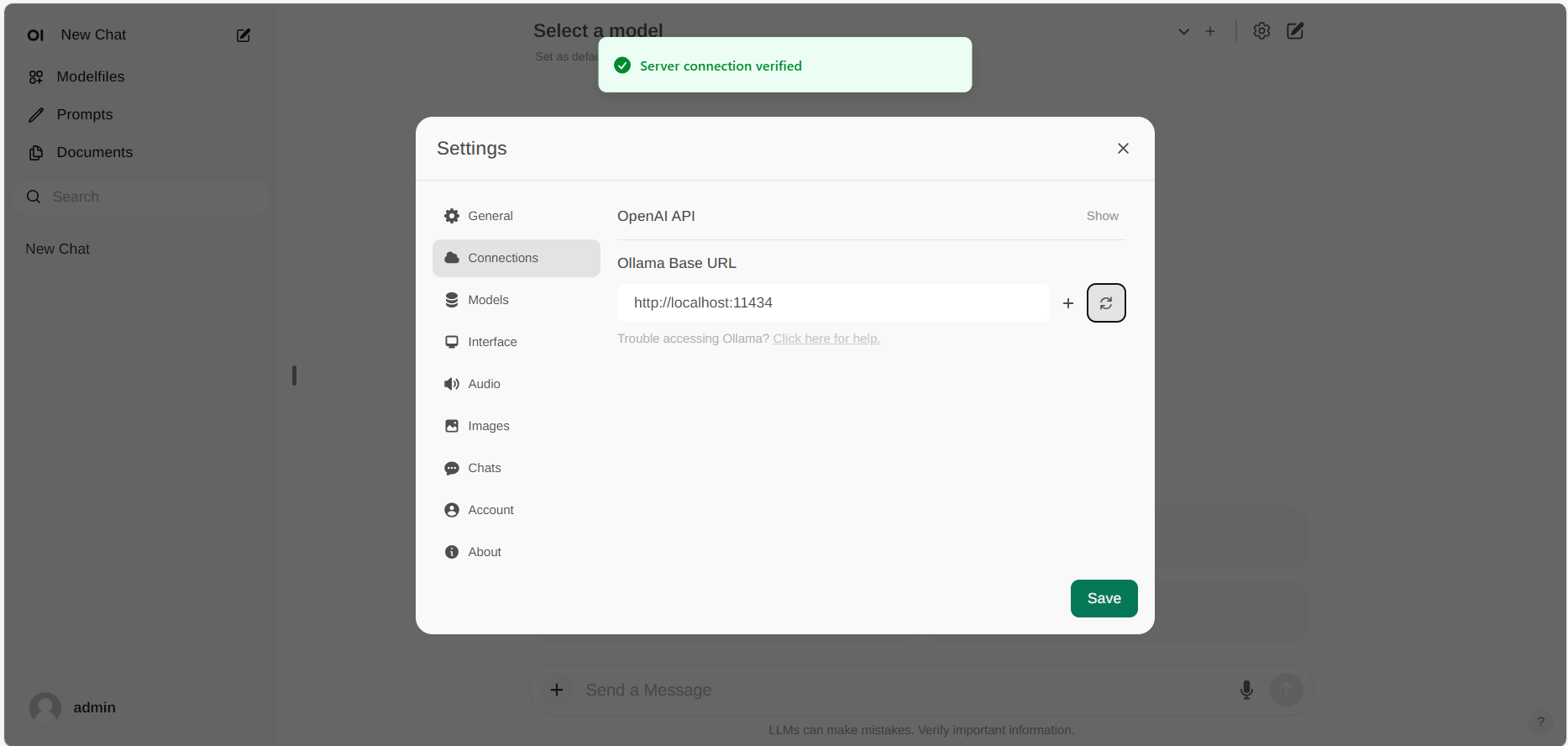

+If everything goes well, you will get a message as shown below.

+

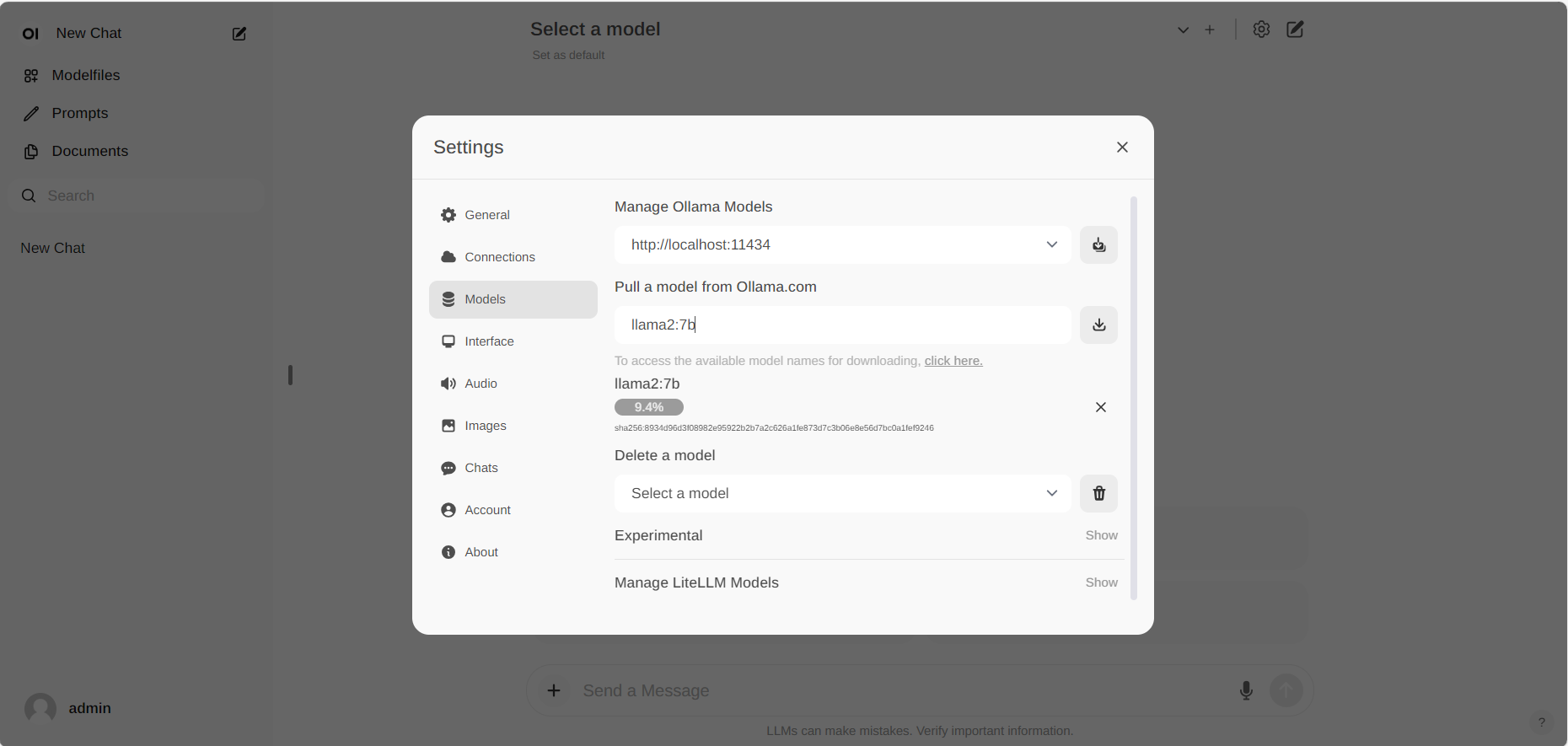

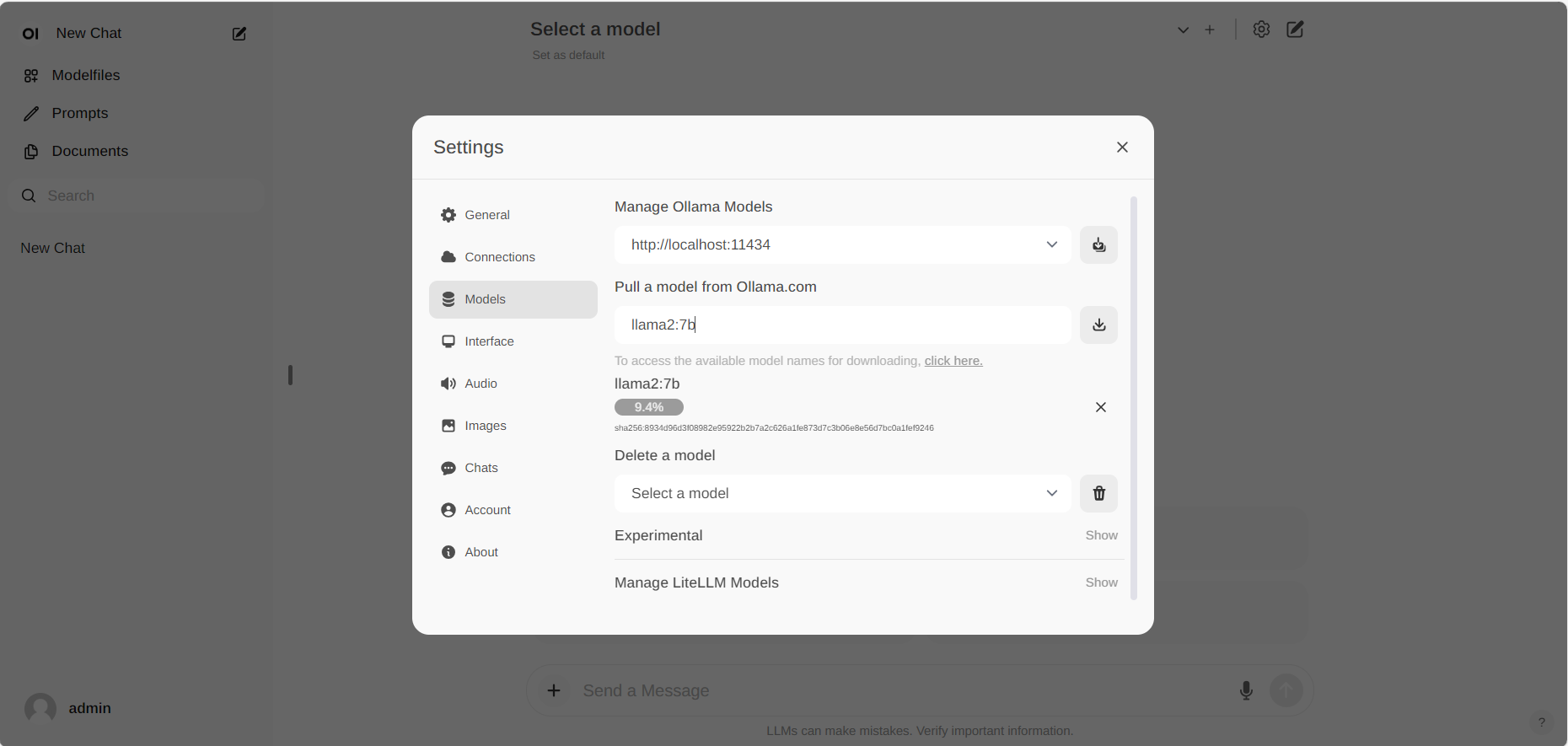

+To pull a model, go to `Settings/Models` and click the download button; Ollama will automatically download the model you select.
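
A pull can also be requested from the command line through Ollama's REST API, which is handy for checking which models the service already has. This is a minimal sketch, assuming the service listens on `http://localhost:11434` and `curl` is installed. `mistral:7b` is only an example model name, and `pull_payload`, `pull_model`, and `list_models` are illustrative helper names. The request body uses the `name` field; newer Ollama releases may prefer `model` instead.

```sh
# Assumed default address; change it if Ollama runs on another machine.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Illustrative helper: build the JSON body for a pull request.
pull_payload() {
  printf '{"name": "%s"}' "$1"
}

# Ask the Ollama service to download a model (POST /api/pull).
pull_model() {
  curl --silent --max-time 600 "$OLLAMA_URL/api/pull" -d "$(pull_payload "$1")"
}

# List the models the service has already downloaded (GET /api/tags).
list_models() {
  curl --silent --fail --max-time 5 "$OLLAMA_URL/api/tags"
}

# Example usage (commented out so that nothing is downloaded by accident):
# pull_model "mistral:7b"
list_models || echo "Could not reach the Ollama service at $OLLAMA_URL"
```

Models pulled this way should appear in the open-webui model selector as well, since both talk to the same Ollama service.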

+

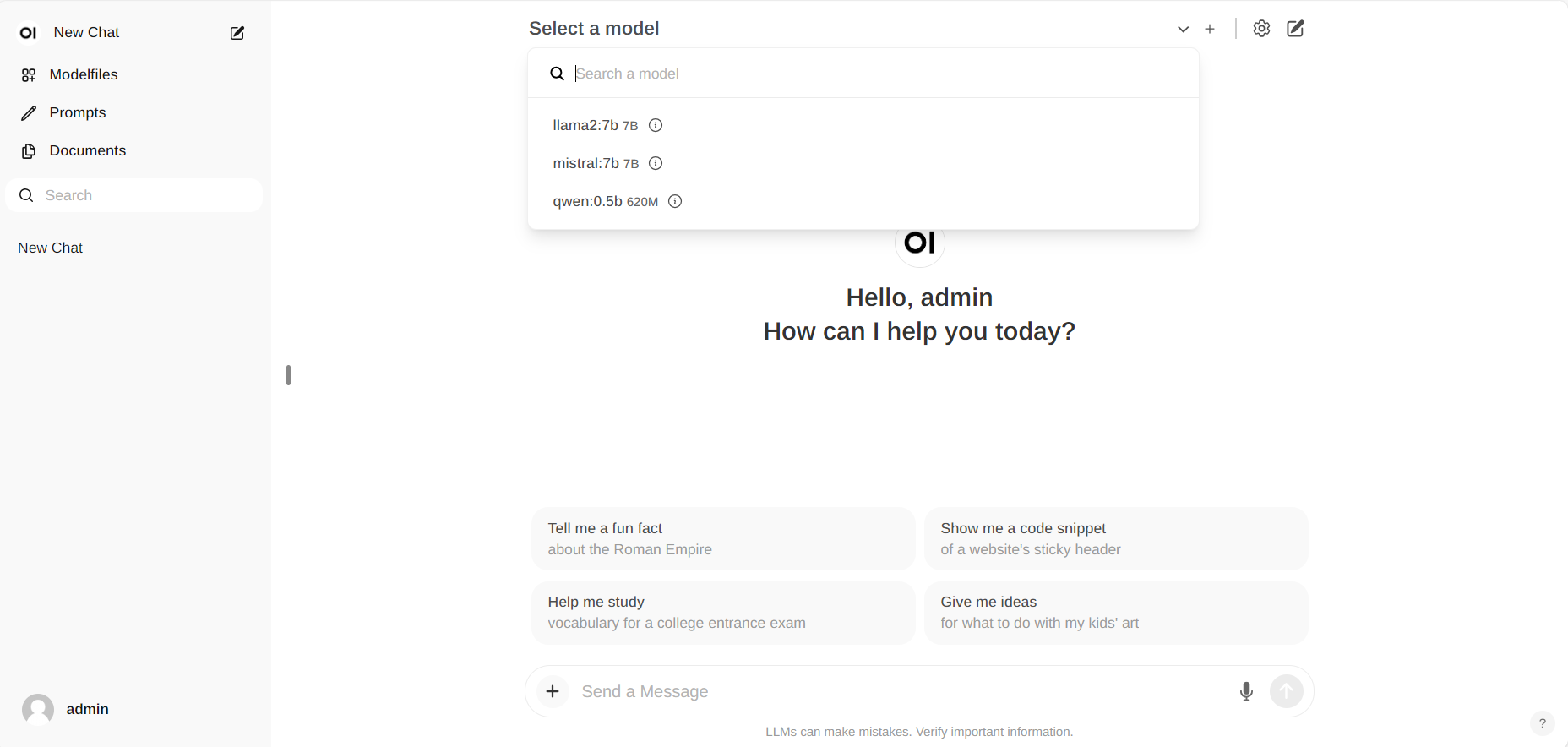

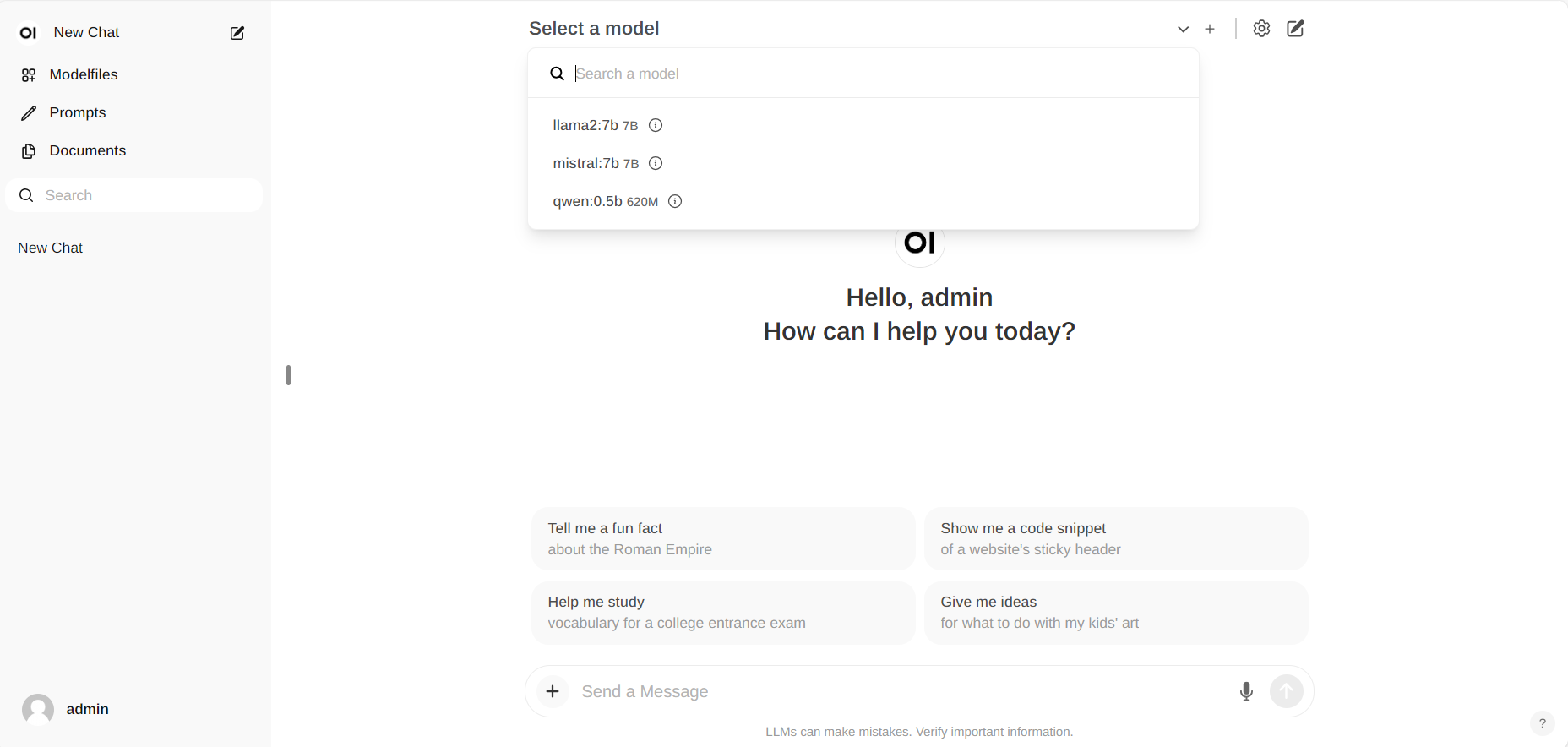

+#### Chat with the Model

+

+Start new conversations with **New chat**. Select a downloaded model here:

+

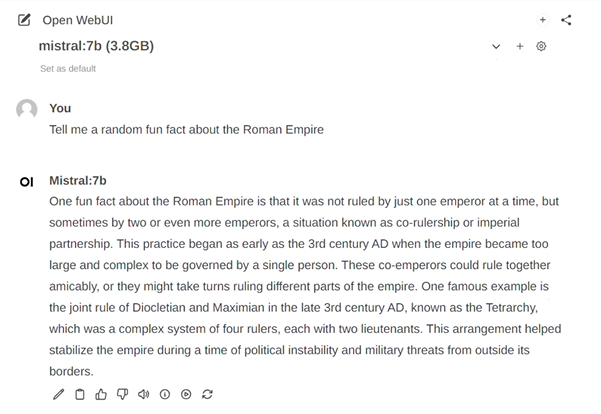

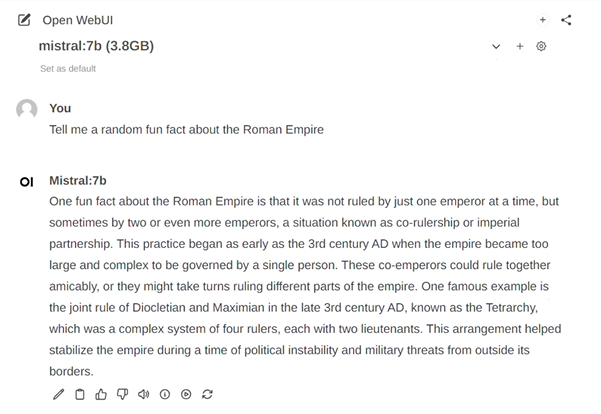

+Enter prompts into the textbox at the bottom and press the send button to receive responses.

+

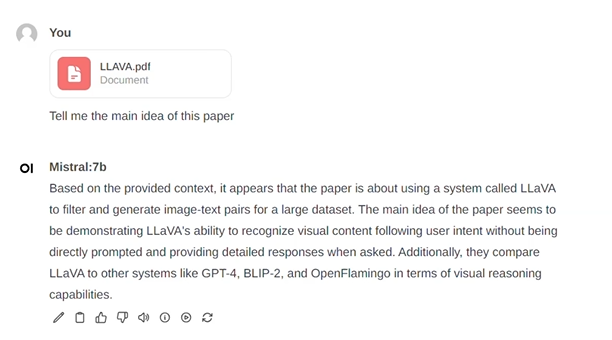

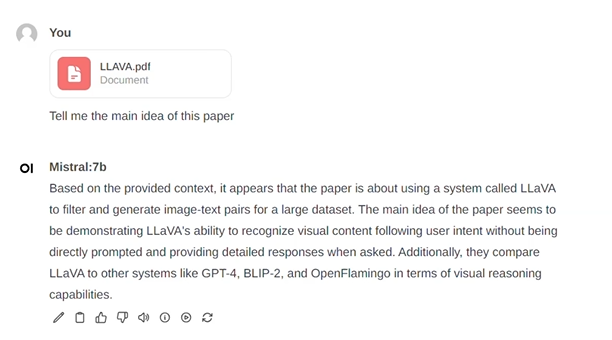

+You can also drop files into the textbox for the LLM to read.

+

+#### Exit Open-Webui

+

+To shut down the open-webui server, press **Ctrl+C** in the terminal where the open-webui server is running, then close your browser tab.
