diff --git a/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md b/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

index 563402b6..bb2ac4f0 100644

--- a/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Overview/install_gpu.md

@@ -135,7 +135,7 @@ Please also set the following environment variable if you would like to run LLMs

set SYCL_CACHE_PERSISTENT=1

set BIGDL_LLM_XMX_DISABLED=1

- .. tab:: Intel Arc™ A-Series

+ .. tab:: Intel Arc™ A-Series Graphics

.. code-block:: cmd

@@ -581,6 +581,12 @@ To use GPU acceleration on Linux, several environment variables are required or

```

+```eval_rst

+.. note::

+

+ The **first time** that **each model** runs on an Intel iGPU, Intel Arc™ A300-Series, or Pro A60, it may take several minutes to compile.

+```

+

### Known issues

#### 1. Potential suboptimal performance with Linux kernel 6.2.0

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

index 6bfa7acd..ebb4f8ae 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_linux_gpu.md

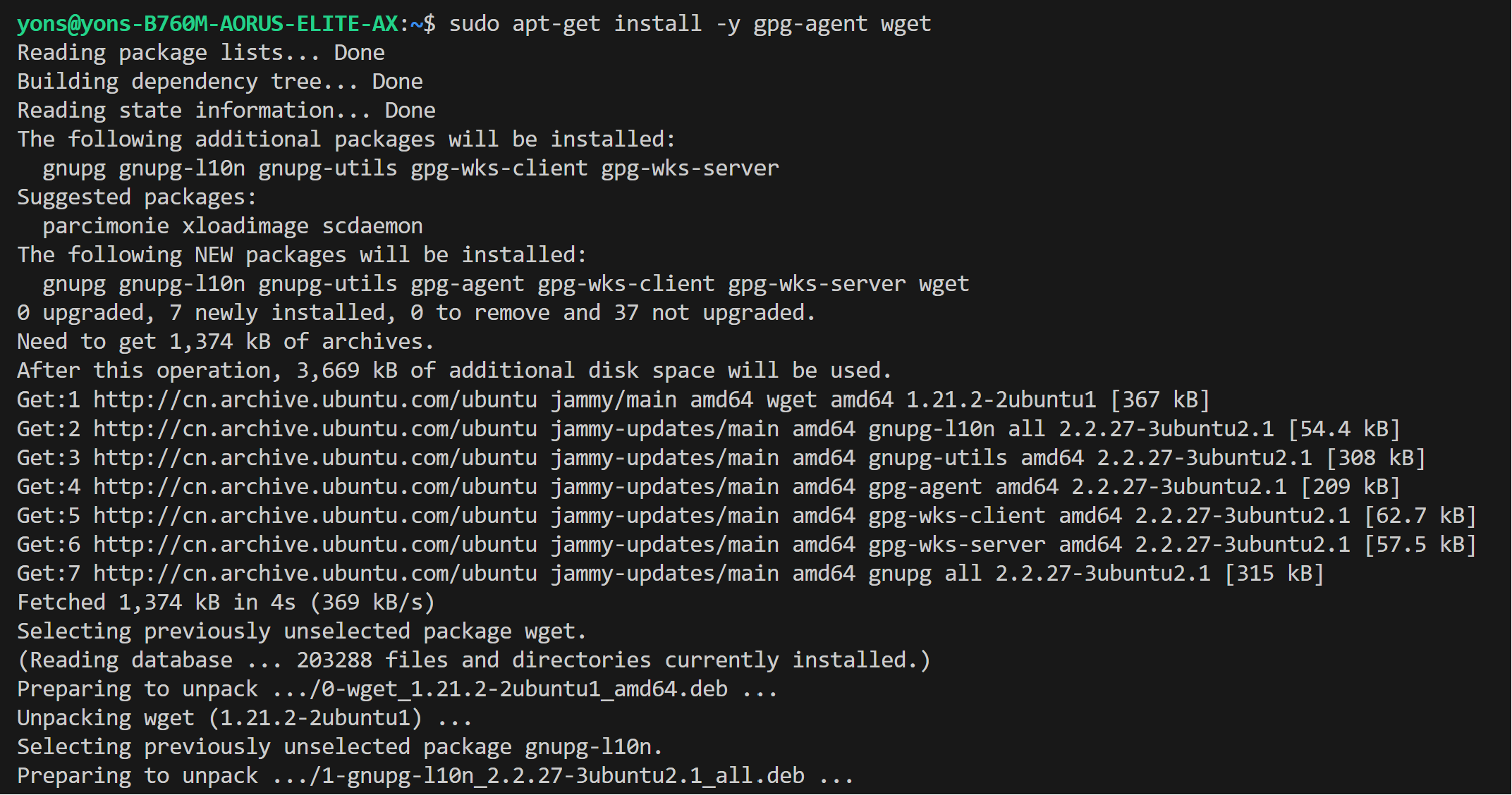

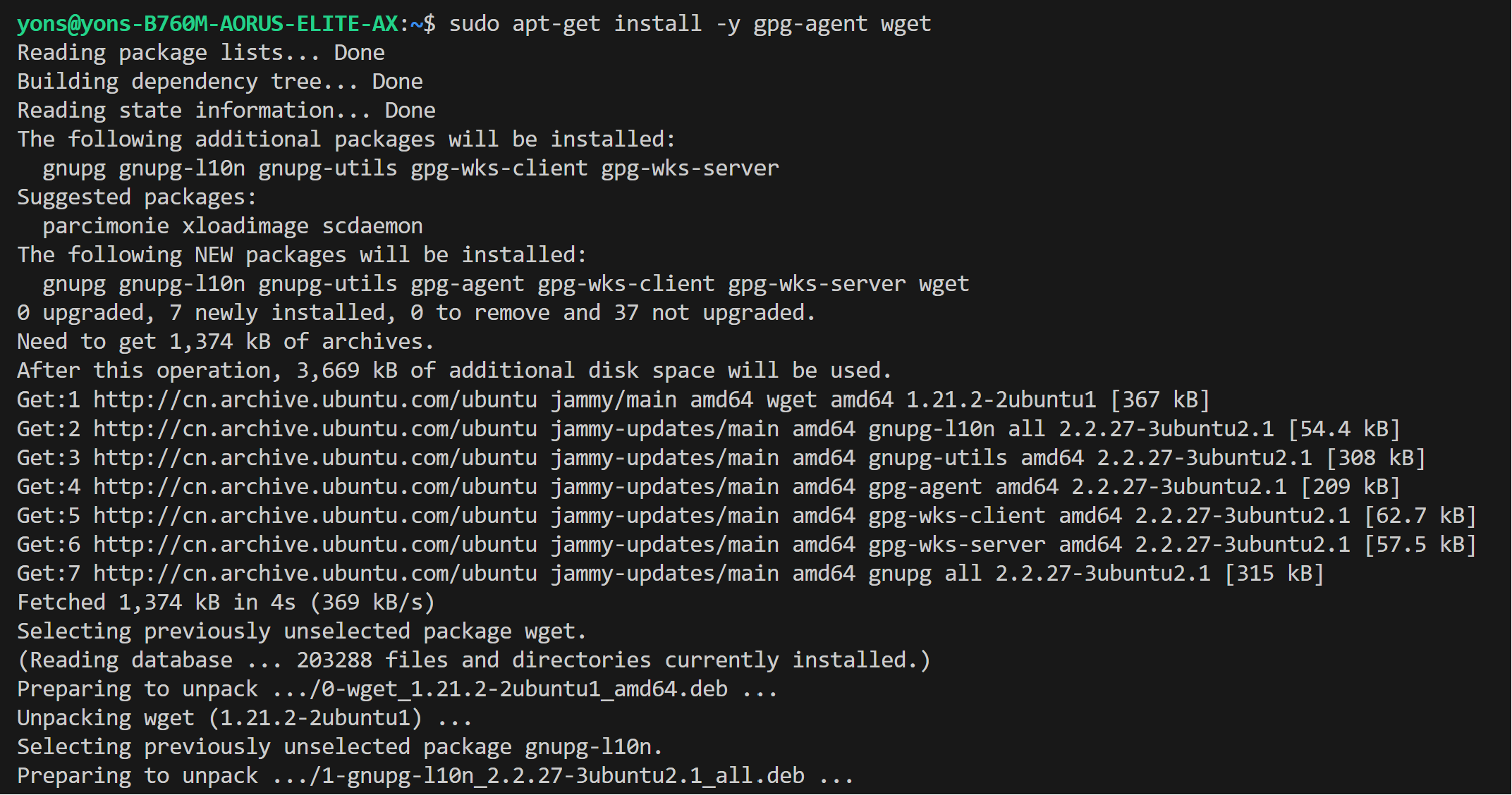

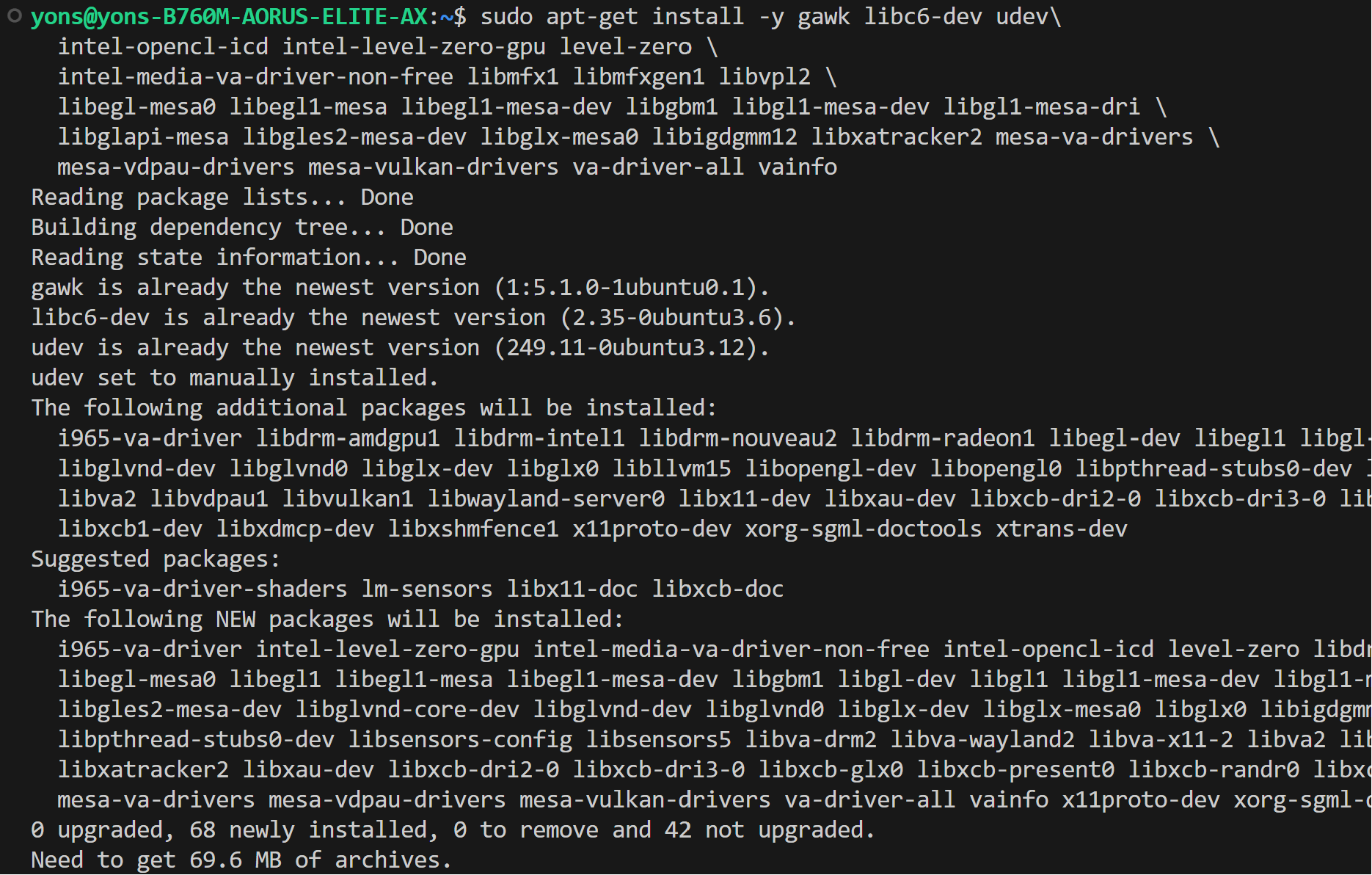

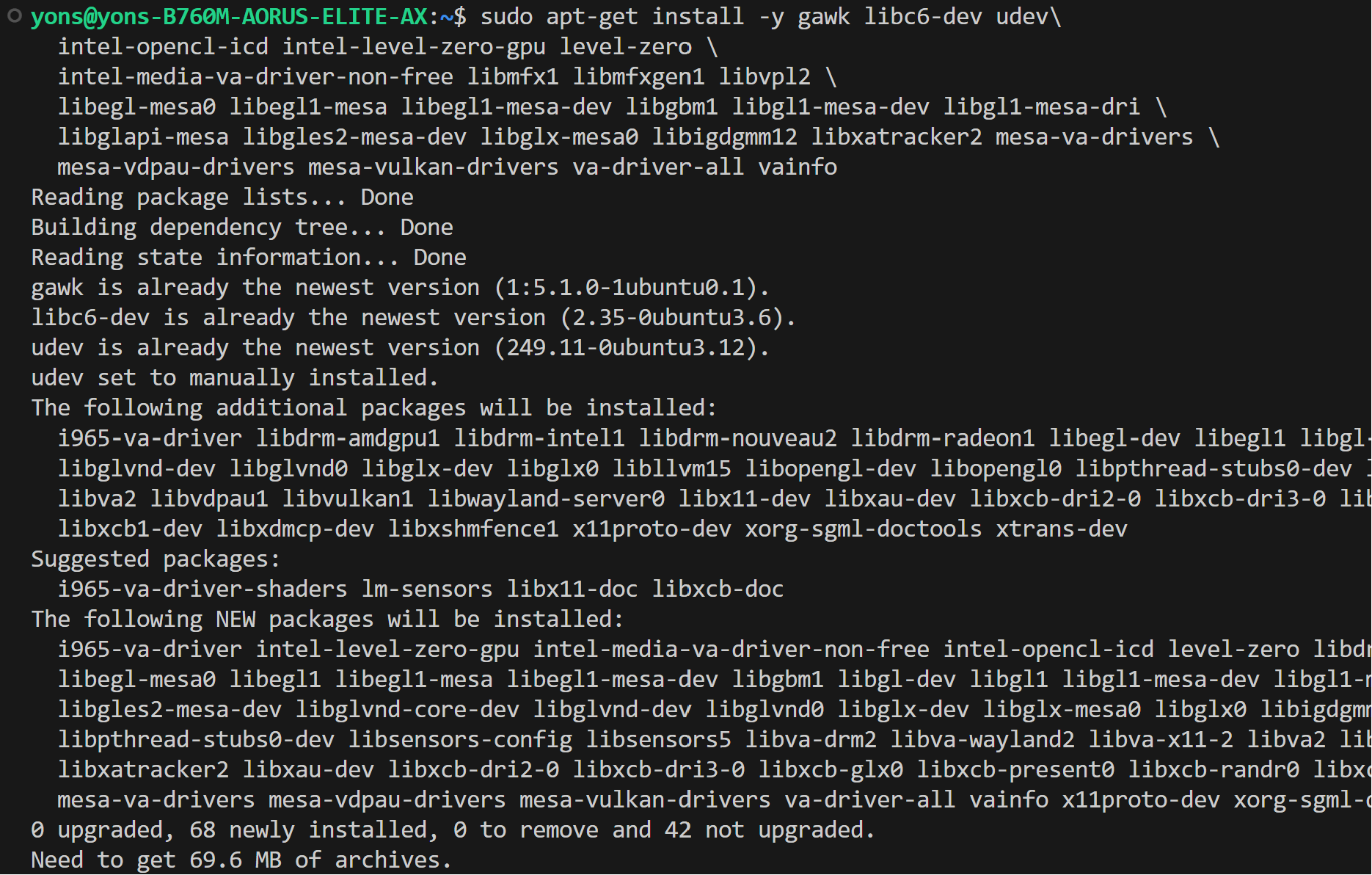

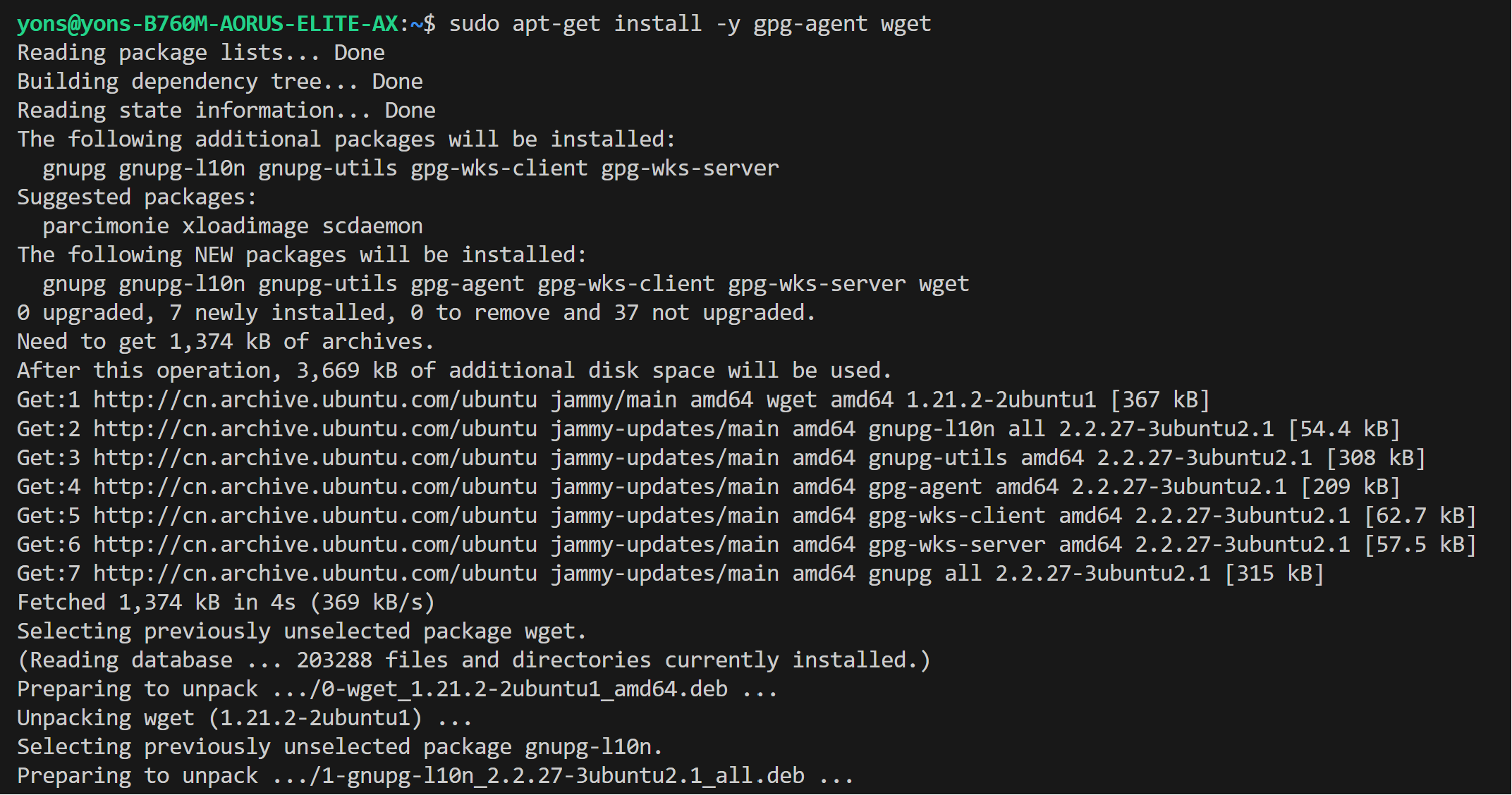

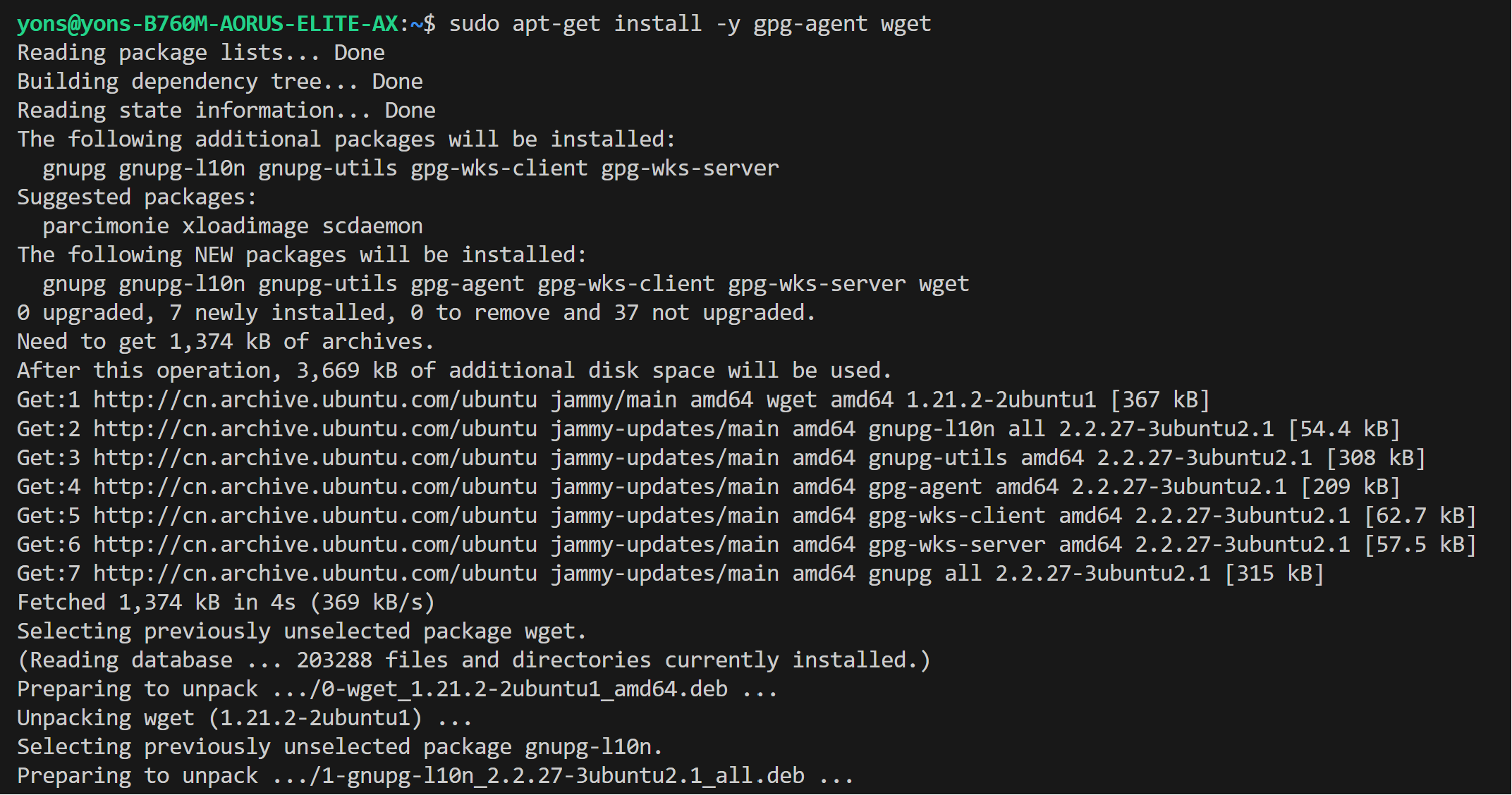

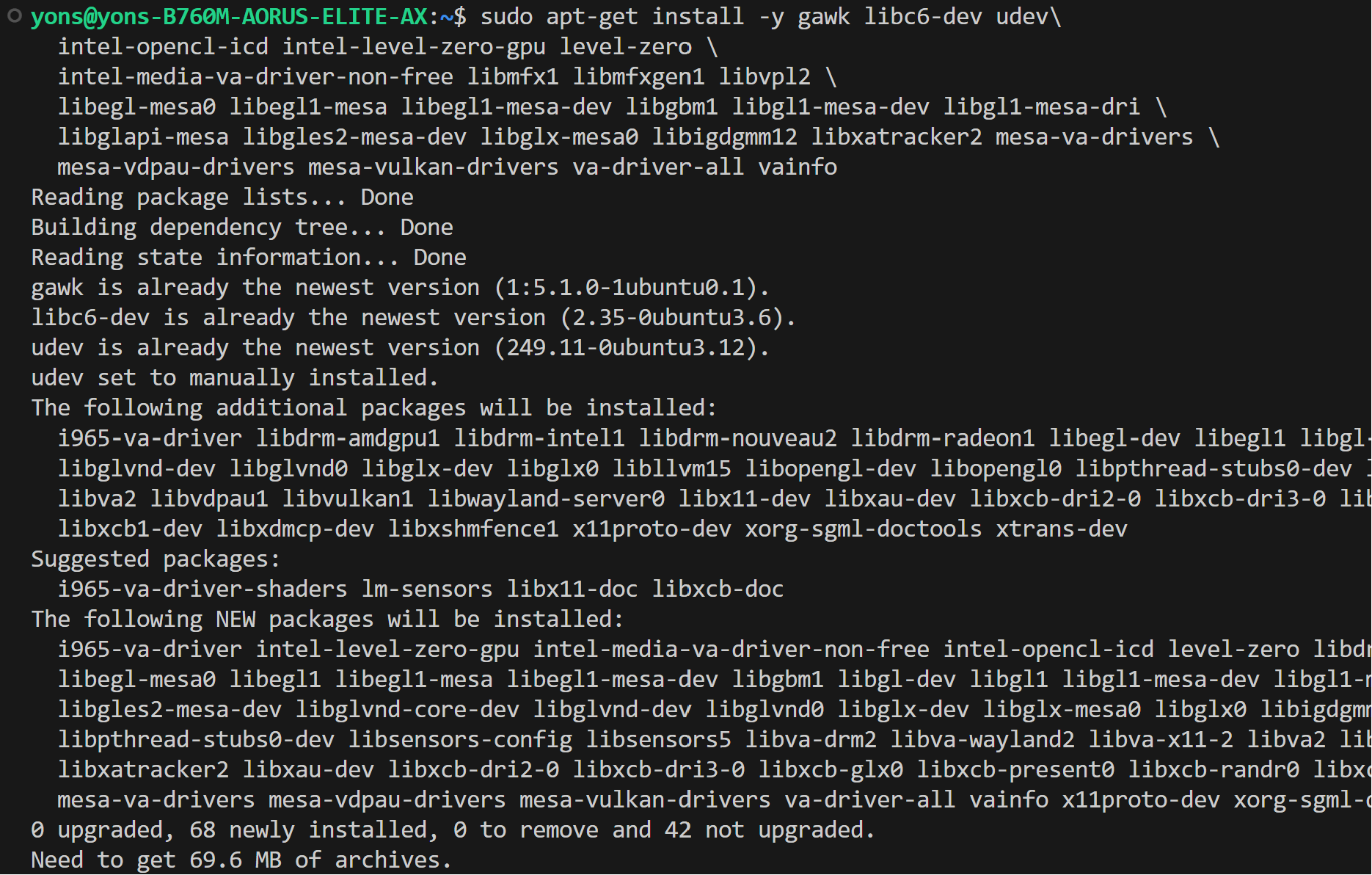

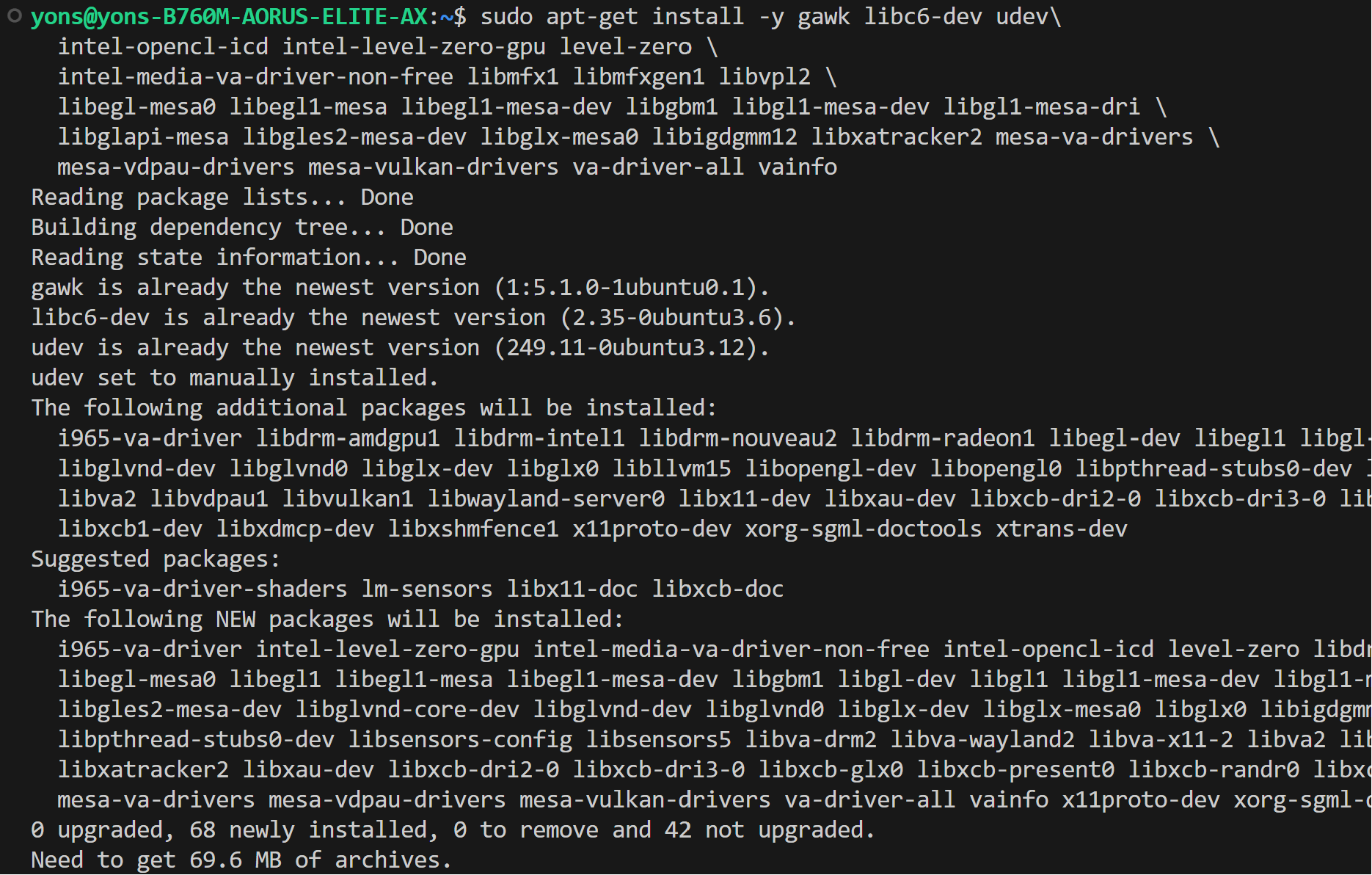

@@ -67,7 +67,7 @@ IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and sup

sudo tee /etc/apt/sources.list.d/intel-gpu-jammy.list

```

- >  +

+  * Install drivers

@@ -89,7 +89,7 @@ IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and sup

sudo reboot

```

- >  +

+  * Configure permissions

@@ -229,6 +229,12 @@ To use GPU acceleration on Linux, several environment variables are required or

```

+ ```eval_rst

+ .. seealso::

+

+ Please refer to `this guide <../Overview/install_gpu.html#id5>`_ for more details regarding runtime configuration.

+ ```

+

## A Quick Example

Now let's play with a real LLM. We'll be using the [phi-1.5](https://huggingface.co/microsoft/phi-1_5) model, a 1.3-billion-parameter LLM, for this demonstration. Follow the steps below to set up and run the model, and observe how it responds to the prompt "What is AI?".

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index 2d930ca3..8b55be0f 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -125,7 +125,7 @@ You can verify if `ipex-llm` is successfully installed following below steps.

```eval_rst

.. seealso::

- For other Intel dGPU Series, please refer to `this guide `_ for more details regarding runtime configuration.

+ For other Intel dGPU Series, please refer to `this guide <../Overview/install_gpu.html#runtime-configuration>`_ for more details regarding runtime configuration.

```

### Step 2: Run Python Code

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/webui_quickstart.md b/docs/readthedocs/source/doc/LLM/Quickstart/webui_quickstart.md

index b59f7714..0e931eee 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/webui_quickstart.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/webui_quickstart.md

@@ -59,11 +59,10 @@ Configure oneAPI variables by running the following command in **Anaconda Prompt

```

```cmd

-call "C:\Program Files (x86)\Intel\oneAPI\setvars.bat"

+set SYCL_CACHE_PERSISTENT=1

```

If you're running on iGPU, set additional environment variables by running the following commands:

```cmd

-set SYCL_CACHE_PERSISTENT=1

set BIGDL_LLM_XMX_DISABLED=1

```
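
The same variables can also be applied from Python when a script is launched as a child process, rather than typed into the prompt each time. The following is only a minimal sketch of that idea: the helper names `build_gpu_env` and `run_with_gpu_env` and the `is_igpu` flag are hypothetical and are not part of `ipex-llm`; only the two variable names and their values come from the commands above.

```python
import os
import subprocess
import sys


def build_gpu_env(is_igpu, base_env=None):
    """Return a copy of the environment with the variables from the commands above.

    `is_igpu` is a hypothetical flag supplied by the caller; this sketch does
    not try to detect the GPU type.
    """
    env = dict(os.environ if base_env is None else base_env)
    # Set in all cases, matching the first `set` command.
    env["SYCL_CACHE_PERSISTENT"] = "1"
    if is_igpu:
        # Additional variable that the text asks for on iGPU only.
        env["BIGDL_LLM_XMX_DISABLED"] = "1"
    return env


def run_with_gpu_env(script_args, is_igpu):
    """Run `python <script_args...>` with the adjusted environment and return its exit code."""
    completed = subprocess.run(
        [sys.executable, *script_args],
        env=build_gpu_env(is_igpu),
        check=False,
    )
    return completed.returncode
```

For example, `run_with_gpu_env(["demo.py"], is_igpu=True)` would start a hypothetical `demo.py` with both variables set, while leaving the environment of the calling process unchanged.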
