

- In this guide, we demonstrate how to use Chronos TSDataset and Chronos Forecaster to process time series data and predict the number of taxi passengers.
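Forecasters consume (input, target) windows rather than a raw series. The hypothetical `roll` function below is a minimal sketch of that windowing step, assuming a single target column. It slices a series into `lookback`-long inputs and `horizon`-long targets. It is plain Python for illustration, not the TSDataset API, and the passenger counts are made up.

```python
from typing import List, Sequence, Tuple


def roll(series: Sequence[float], lookback: int, horizon: int) -> Tuple[List[List[float]], List[List[float]]]:
    """Slice a univariate series into (input, target) window pairs.

    Each input holds `lookback` consecutive values; its target holds the
    `horizon` values that immediately follow.
    """
    if lookback < 1 or horizon < 1:
        raise ValueError("lookback and horizon must be positive")
    xs, ys = [], []
    # The last valid start index still leaves room for lookback + horizon values.
    for start in range(len(series) - lookback - horizon + 1):
        xs.append(list(series[start:start + lookback]))
        ys.append(list(series[start + lookback:start + lookback + horizon]))
    return xs, ys


# Hypothetical hourly passenger counts, for illustration only.
passengers = [10, 12, 15, 14, 18, 21, 19]
x, y = roll(passengers, lookback=3, horizon=1)
print(x[0], y[0])  # [10, 12, 15] [14]
print(len(x))      # 4
```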


- In this guide, we demonstrate how to use Chronos AutoTSEstimator and Chronos TSPipeline to auto-tune a time series forecasting task and easily handle the whole model development process.
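AutoTSEstimator searches the hyperparameter space for you. The toy grid search below only illustrates the underlying idea: score each candidate on a validation window and keep the best one. The moving-average forecaster, the candidate lookbacks and all function names are hypothetical. This is not how AutoTSEstimator is implemented.

```python
from statistics import mean
from typing import List, Optional, Sequence, Tuple


def moving_average_forecast(history: Sequence[float], lookback: int) -> float:
    # Hypothetical model: predict the next value as the mean of the last `lookback` values.
    return mean(history[-lookback:])


def validation_mae(series: Sequence[float], lookback: int, n_val: int) -> float:
    # One-step-ahead forecasts for each of the last `n_val` points, scored by mean absolute error.
    errors = []
    for t in range(len(series) - n_val, len(series)):
        prediction = moving_average_forecast(series[:t], lookback)
        errors.append(abs(series[t] - prediction))
    return float(mean(errors))


def grid_search(series: Sequence[float], lookbacks: List[int], n_val: int) -> Tuple[Optional[int], float]:
    # Evaluate every candidate and keep the one with the lowest validation error.
    best_lookback, best_mae = None, float("inf")
    for lookback in lookbacks:
        score = validation_mae(series, lookback, n_val)
        if score < best_mae:
            best_lookback, best_mae = lookback, score
    return best_lookback, best_mae


series = list(range(1, 11))  # a steadily increasing toy series
print(grid_search(series, lookbacks=[1, 2, 3], n_val=3))  # (1, 1.0)
```

Real auto-tuning replaces the exhaustive loop with a smarter search strategy and a far larger space, but the score-and-keep-best structure is the same.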


- In this guide, we demonstrate how to use Chronos Anomaly Detector to detect anomalies in real-time traffic data from the Twin Cities Metro area in Minnesota.
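The guide relies on Chronos's own detector. The sketch below is a stand-in that only shows the general idea. It flags points whose z-score (distance from the mean, in standard deviations) exceeds a threshold. The function name, the threshold and the speed readings are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return the indices of points whose |z-score| exceeds `threshold`."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation of the whole window
    if sigma == 0:
        return []  # a constant series has no outliers under this rule
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical traffic speed readings with one sudden drop at index 5.
speeds = [60, 61, 59, 60, 62, 5, 61, 60]
print(zscore_anomalies(speeds, threshold=2.0))  # [5]
```

With the default threshold of 3.0, this drop would be missed because the outlier itself inflates the standard deviation. Robust statistics such as the median absolute deviation are a common remedy.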


- In this notebook, we demonstrate a reference use case where we use the network traffic KPI(s) in the past to predict traffic KPI(s) in the future. We demonstrate how to use AutoTSEstimator to adjust the parameters of a customized model.


- In this notebook, we demonstrate a reference use case where we use the network traffic KPI(s) in the past to predict traffic KPI(s) in the future. We demonstrate how to use AutoTS in Project Chronos to do time series forecasting in an automated and distributed way.


- In this notebook, we demonstrate a reference use case where we use the network traffic KPI(s) in the past to predict traffic KPI(s) in the future. We demonstrate how to do univariate forecasting (predicting only one series) and multivariate forecasting (predicting more than one series at the same time) using Project Chronos.


- In this notebook, we demonstrate a reference use case where we use the network traffic KPI(s) in the past to predict traffic KPI(s) in the future. We demonstrate how to do multivariate multistep forecasting using Project Chronos.


- In this notebook, we demonstrate a reference use case where we use historical stock price data to predict the future price. The dataset we use is the daily stock price of S&P500 stocks during 2013-2018 (data source). We demonstrate how to do univariate forecasting, using MMM's daily prices over the first 80% of the days to predict its daily prices over the remaining 20%.

  Reference: https://github.com/jwkanggist/tf-keras-stock-pred
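Because the data is a time series, the 80/20 split must follow time order rather than shuffling. Otherwise the model could train on days that come after the ones it is evaluated on. Here is a minimal sketch; the helper name and the toy prices are hypothetical.

```python
from typing import List, Sequence, Tuple


def chronological_split(values: Sequence[float], train_ratio: float = 0.8) -> Tuple[List[float], List[float]]:
    # Split without shuffling: every test point lies after every training point.
    if not 0 < train_ratio < 1:
        raise ValueError("train_ratio must be in (0, 1)")
    cut = int(len(values) * train_ratio)
    return list(values[:cut]), list(values[cut:])


prices = [100.0, 101.5, 99.8, 102.3, 103.1, 104.0, 103.6, 105.2, 106.0, 105.5]  # hypothetical daily closes
train, test = chronological_split(prices)
print(len(train), len(test))  # 8 2
```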


- In this notebook, we demonstrate a reference use case where we use historical stock price data to predict the future price using ProphetForecaster and AutoProphet. The dataset we use is the daily stock price of S&P500 stocks during 2013-2018 (data source).

  Reference: https://facebook.github.io/prophet, https://github.com/jwkanggist/tf-keras-stock-pred


- We demonstrate how to perform anomaly detection with Chronos's built-in DBScanDetector, AEDetector and ThresholdDetector.
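DBScanDetector is named after DBSCAN, which treats points that do not belong to any dense cluster as noise. The plain-Python sketch below shows that idea on one-dimensional values. It is not Chronos's implementation, and `eps`, `min_samples` and the sample data are hypothetical choices.

```python
from typing import List, Sequence


def dbscan_noise_1d(values: Sequence[float], eps: float, min_samples: int) -> List[int]:
    """Return the indices that DBSCAN would label as noise for 1-D data."""
    n = len(values)
    # Neighborhood of each point, including the point itself (as scikit-learn counts it).
    neighbors = [[j for j in range(n) if abs(values[i] - values[j]) <= eps] for i in range(n)]
    # Core points have at least `min_samples` neighbors within `eps`.
    core = {i for i in range(n) if len(neighbors[i]) >= min_samples}
    # A non-core point within `eps` of a core point is a border point; anything else is noise.
    return [i for i in range(n) if i not in core and not any(j in core for j in neighbors[i])]


readings = [1.0, 1.1, 0.9, 1.05, 5.0, 1.2]
print(dbscan_noise_1d(readings, eps=0.3, min_samples=3))  # [4]
```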


- We demonstrate how to use Chronos's built-in models (e.g. MTNet) for time series forecasting, and then perform anomaly detection on the predicted values with ThresholdDetector.
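One common way to combine forecasting with a threshold is to flag time steps where the observed value strays too far from the forecast. The sketch below shows that rule in plain Python. The function name, the threshold and the numbers are hypothetical, and the code does not reproduce ThresholdDetector's API.

```python
from typing import List, Sequence


def residual_anomalies(actual: Sequence[float], predicted: Sequence[float], threshold: float) -> List[int]:
    """Flag indices where |actual - predicted| exceeds `threshold`."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    return [i for i, (a, p) in enumerate(zip(actual, predicted)) if abs(a - p) > threshold]


observed = [10.0, 11.0, 30.0, 12.0]
forecast = [10.5, 11.2, 12.0, 11.8]  # hypothetical forecast values
print(residual_anomalies(observed, forecast, threshold=5.0))  # [2]
```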


- Chronos can help third-party time series libraries improve performance (both training and inference) and accuracy. This use case shows how Chronos can easily speed up the training of the DeepAR model in pytorch-forecasting.


- Chronos can help third-party time series libraries improve performance (both training and inference) and accuracy. This use case shows how Chronos can easily speed up the training of the TFT model in pytorch-forecasting.


- In this notebook, we demonstrate how to use multi-objective hyperparameter optimization with the built-in latency metric in Project Chronos to do time series forecasting and achieve a good tradeoff between performance and latency.
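With two objectives, there is usually no single best trial. Instead, one keeps the Pareto front: the trials that no other trial beats on both error and latency at once. The sketch below assumes each finished trial is summarized as a hypothetical (name, validation error, latency in ms) tuple. It illustrates the concept and is not the Chronos tuning API.

```python
from typing import List, Tuple

Trial = Tuple[str, float, float]  # (name, validation error, latency in ms)


def dominates(a: Trial, b: Trial) -> bool:
    # `a` dominates `b` if it is no worse on both objectives and strictly better on at least one.
    return a[1] <= b[1] and a[2] <= b[2] and (a[1] < b[1] or a[2] < b[2])


def pareto_front(trials: List[Trial]) -> List[Trial]:
    # Keep every trial that no other trial dominates.
    return [t for t in trials if not any(dominates(o, t) for o in trials)]


trials = [("a", 0.10, 50.0), ("b", 0.12, 20.0), ("c", 0.15, 25.0), ("d", 0.09, 80.0)]
print([name for name, _, _ in pareto_front(trials)])  # ['a', 'b', 'd']
```

Choosing one trial from the front then depends on the latency budget. Here, `b` suits tight budgets and `d` favors accuracy.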


- This example collection demonstrates how Chronos auto models (i.e. autolstm and autoprophet) perform automatic time series forecasting on the nyc_taxi dataset. The auto models search for the best hyperparameters automatically.


- Users can easily train their forecasters in a distributed fashion to handle extra-large datasets and speed up the process (training and data processing) by utilizing a cluster or pseudo-distributed mode on a single node. The functionality is powered by Project Orca.


- This example demonstrates how to use ONNX to speed up inference (prediction/evaluation) for forecasters and AutoTSEstimator. In this example, ONNX speeds up inference by about 4x.
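A claim such as "about 4x" is a ratio of measured latencies. The helper below is a generic sketch for measuring such a ratio on your own hardware. You pass it two zero-argument callables, e.g. wrappers around a forecaster's original and ONNX-accelerated prediction calls. The helper names and the sleep-based stand-ins are hypothetical.

```python
import time
from typing import Callable


def best_time(fn: Callable[[], object], repeats: int) -> float:
    # Take the fastest of several runs to reduce noise from other processes.
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best


def measure_speedup(baseline: Callable[[], object], optimized: Callable[[], object], repeats: int = 5) -> float:
    """Return how many times faster `optimized` is than `baseline`."""
    return best_time(baseline, repeats) / best_time(optimized, repeats)


# Stand-ins for the two inference paths; replace them with real prediction calls.
def slow() -> None:
    time.sleep(0.04)


def fast() -> None:
    time.sleep(0.01)


print(f"speedup: {measure_speedup(slow, fast, repeats=3):.1f}x")
```

Taking the minimum rather than the mean is a deliberate choice: it approximates the cost of the call itself, excluding interference. Note that the first call of a real inference engine often includes one-time setup, so a warm-up run before timing is advisable.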


- Users can easily quantize their forecasters to low precision and speed up inference (both throughput and latency) on a single node. The functionality is powered by Project Nano.
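Low-precision quantization maps floating-point values onto a small integer range plus a scale factor. The sketch below shows symmetric per-tensor INT8 quantization of a weight list in plain Python. It is a conceptual illustration only and does not claim which scheme Project Nano applies. Its names and weights are hypothetical.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    # Symmetric per-tensor scheme: map [-max|w|, max|w|] onto the integer range [-127, 127].
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    # Recover approximate floats; rounding error per value is at most scale / 2.
    return [v * scale for v in q]


weights = [0.3, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [38, -127, 32, 0]
```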


- This example shows how to generate synthetic data with a distribution similar to that of the training data, using the fast and easy DPGANSimulator API provided by Chronos.


- This example demonstrates how to use BigDL Chronos TCMFForecaster to run distributed training and inference for a high-dimensional time series forecasting task.


- This example demonstrates how to use TCNForecaster with the built-in loss function LinexLoss to penalize underestimation.
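The LINEX (linear-exponential) loss is asymmetric. For an error `e` and parameters `a != 0`, `b > 0`, it is `b * (exp(a * e) - a * e - 1)`, which grows roughly exponentially on one side of zero and roughly linearly on the other. The sketch below assumes `e = y_true - y_pred` and `a > 0`, so under-forecasts cost more. Chronos's LinexLoss may parameterize or sign the error differently, so treat this as an illustration rather than its definition.

```python
import math
from typing import Sequence


def linex_loss(y_true: Sequence[float], y_pred: Sequence[float], a: float = 1.0, b: float = 1.0) -> float:
    """Mean LINEX loss with error e = y_true - y_pred.

    With a > 0, under-forecasts (e > 0) are penalized roughly exponentially,
    over-forecasts (e < 0) roughly linearly.
    """
    if a == 0 or b <= 0:
        raise ValueError("LINEX needs a != 0 and b > 0")
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        e = t - p
        total += b * (math.exp(a * e) - a * e - 1.0)
    return total / len(y_true)


under = linex_loss([10.0], [8.0])   # forecast 2 units too low
over = linex_loss([10.0], [12.0])   # forecast 2 units too high
print(round(under, 3), round(over, 3))  # 4.389 1.135
```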


- In this example, we show how to train the model on GPU and then accelerate it using onnxruntime on CPU.


- In this example, we show how to serve a Chronos forecaster and make predictions through TorchServe.


[1]

- Performance varies by use, configuration and other factors. bigdl-llm may not optimize to the same degree for non-Intel products. Learn more at www.Intel.com/PerformanceIndex.

+ Performance varies by use, configuration and other factors. ipex-llm may not optimize to the same degree for non-Intel products. Learn more at www.Intel.com/PerformanceIndex.

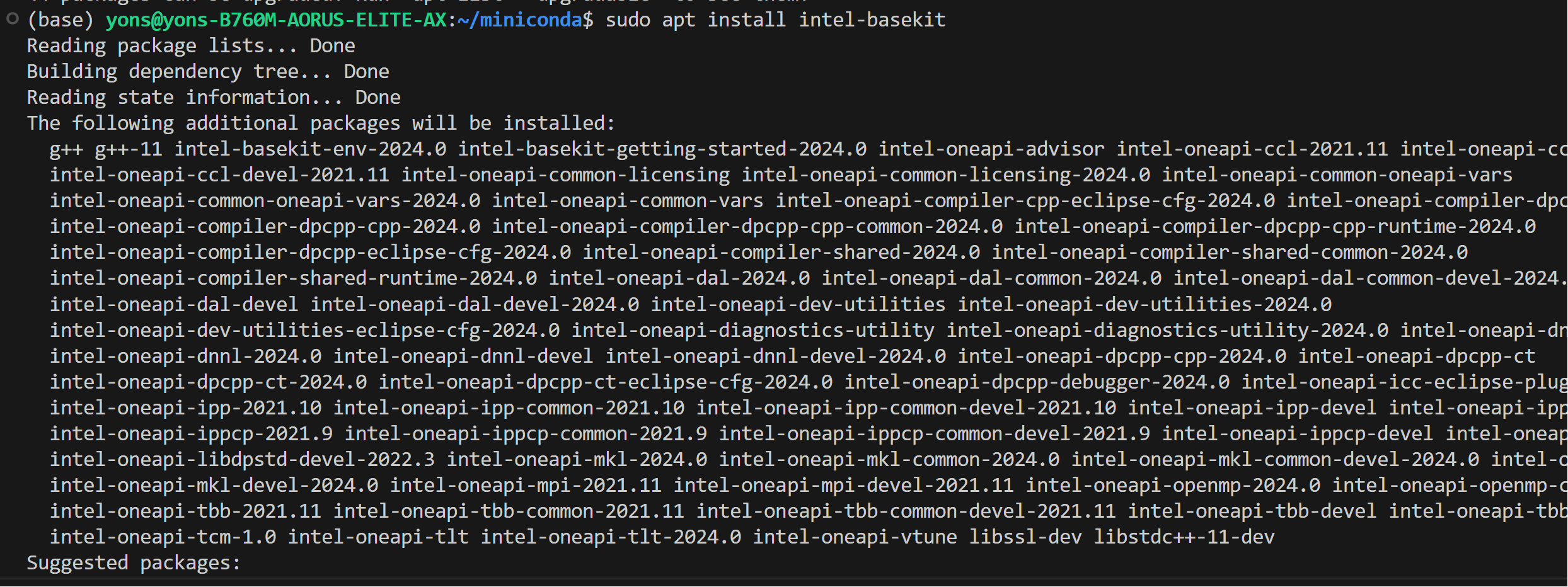

-## Install `bigdl-llm`

+## Install `ipex-llm`

-* With the `llm` environment active, use `pip` to install `bigdl-llm` for GPU:

+* With the `llm` environment active, use `pip` to install `ipex-llm` for GPU:

```

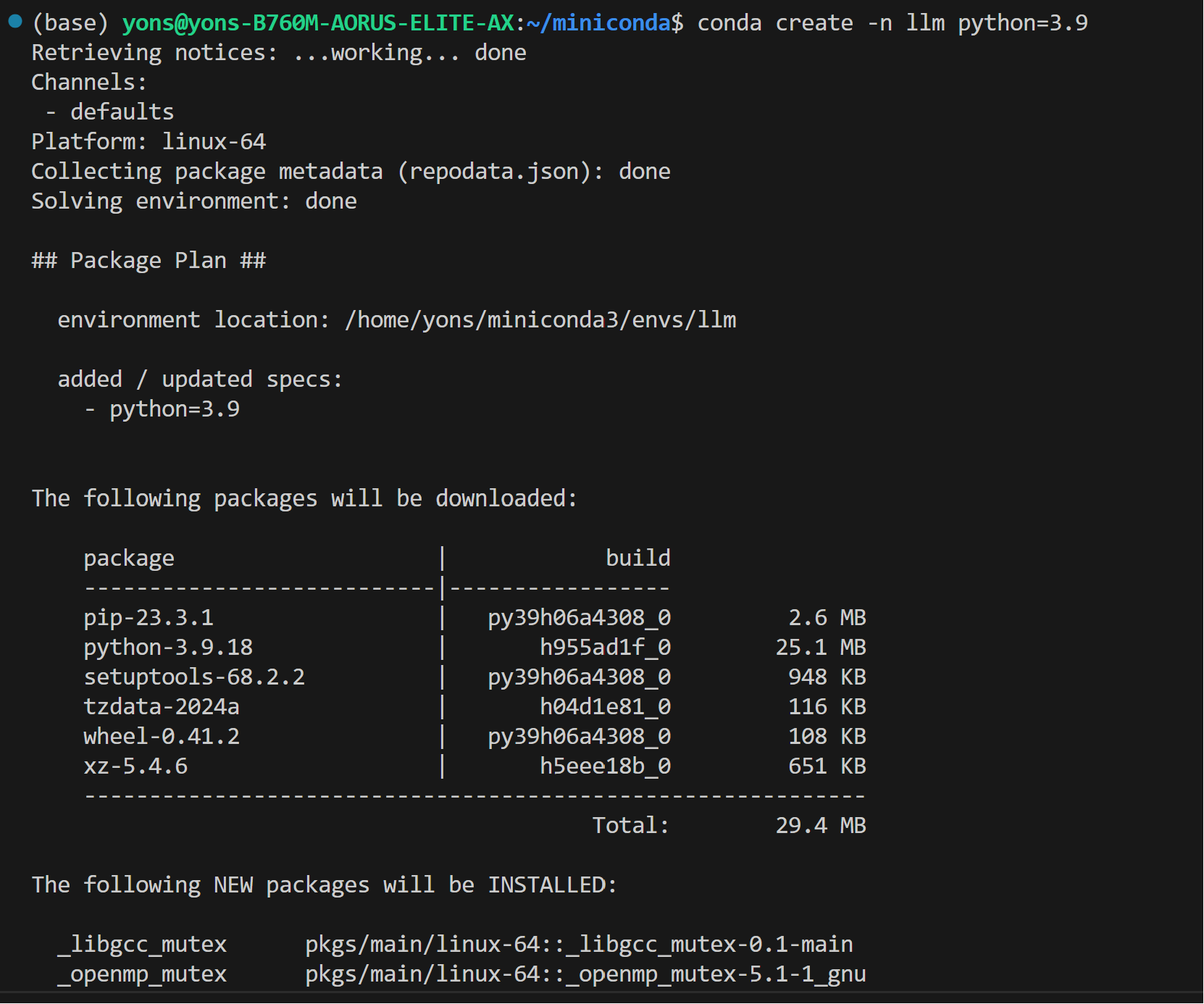

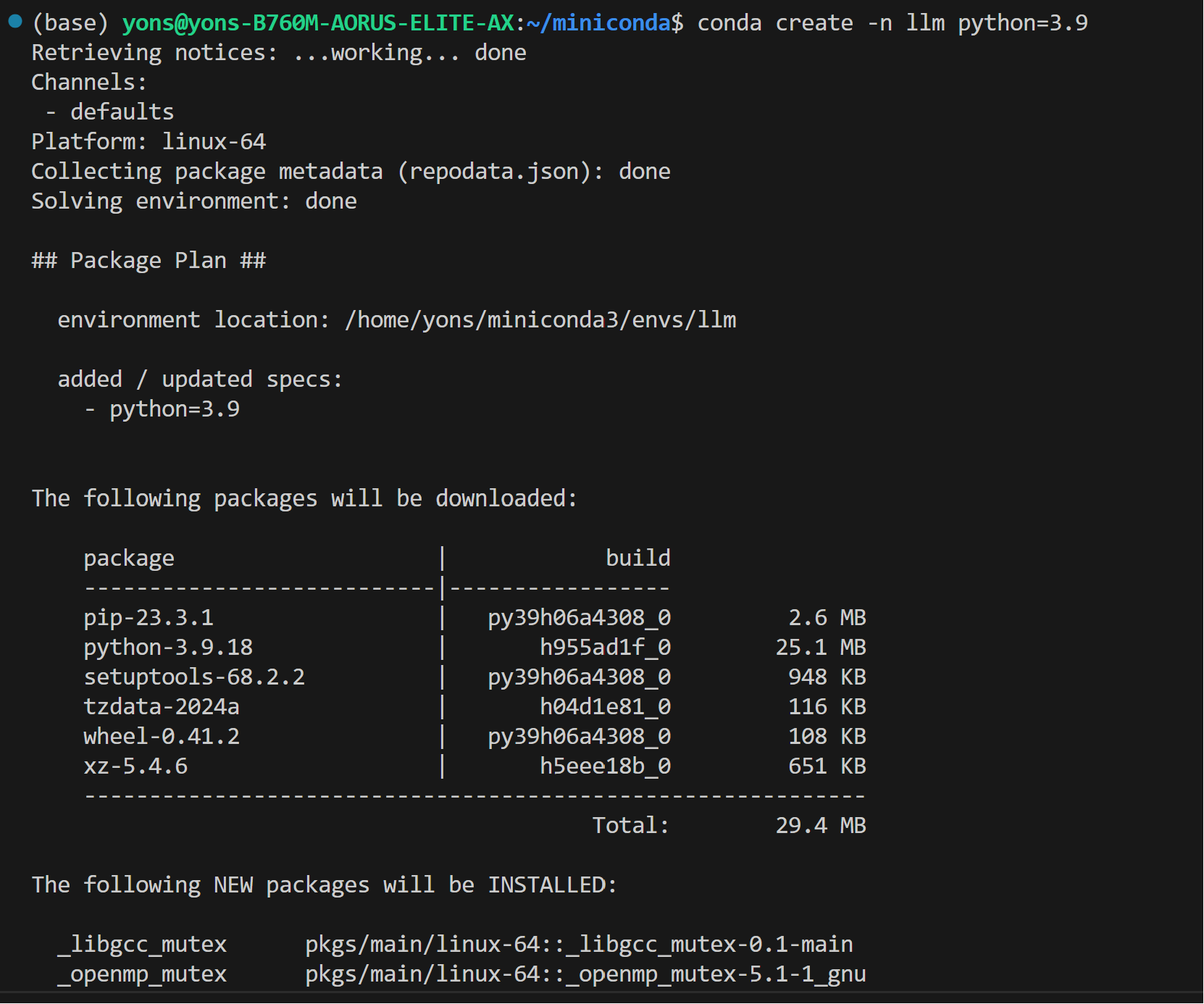

conda create -n llm python=3.9

conda activate llm

- pip install --pre --upgrade bigdl-llm[xpu] --extra-index-url https://developer.intel.com/ipex-whl-stable-xpu

+ pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://developer.intel.com/ipex-whl-stable-xpu

```


@@ -106,7 +106,7 @@ Install the Miniconda as follows if you don't have conda installed on your machi

-* You can verify if bigdl-llm is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

+* You can verify if ipex-llm is successfully installed by simply importing a few classes from the library. For example, execute the following import command in the terminal:

```bash

source /opt/intel/oneapi/setvars.sh

@@ -115,7 +115,7 @@ Install the Miniconda as follows if you don't have conda installed on your machi

> from ipex_llm.transformers import AutoModel, AutoModelForCausalLM

```


- >
+ >
## Runtime Configurations

@@ -157,7 +157,7 @@ Now let's play with a real LLM. We'll be using the [phi-1.5](https://huggingface

conda activate llm

```

* Step 2: If you're running on iGPU, set some environment variables by running below commands:

- > For more details about runtime configurations, refer to [this guide](https://bigdl.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#runtime-configuration):

+ > For more details about runtime configurations, refer to [this guide](https://ipex-llm.readthedocs.io/en/latest/doc/LLM/Overview/install_gpu.html#runtime-configuration):

```bash

# Skip this step for PIP-installed oneAPI since the environment has already been configured in LD_LIBRARY_PATH.

source /opt/intel/oneapi/setvars.sh

@@ -175,7 +175,7 @@ Now let's play with a real LLM. We'll be using the [phi-1.5](https://huggingface

generation_config = GenerationConfig(use_cache = True)

tokenizer = AutoTokenizer.from_pretrained("tiiuae/falcon-7b", trust_remote_code=True)

- # load Model using bigdl-llm and load it to GPU

+ # load Model using ipex-llm and load it to GPU

model = AutoModelForCausalLM.from_pretrained(

"tiiuae/falcon-7b", load_in_4bit=True, cpu_embedding=True, trust_remote_code=True)

model = model.to('xpu')

diff --git a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

index 370422d1..7997d740 100644

--- a/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

+++ b/docs/readthedocs/source/doc/LLM/Quickstart/install_windows_gpu.md

@@ -1,6 +1,6 @@

-# Install BigDL-LLM on Windows with Intel GPU

+# Install IPEX-LLM on Windows with Intel GPU

-This guide demonstrates how to install BigDL-LLM on Windows with Intel GPUs.

+This guide demonstrates how to install IPEX-LLM on Windows with Intel GPUs.

It applies to Intel Core Ultra and Core 12 - 14 gen integrated GPUs (iGPUs), as well as Intel Arc Series GPU.

@@ -66,9 +66,9 @@ Activate the newly created environment `llm`:

conda activate llm

```

-## Install `bigdl-llm`

+## Install `ipex-llm`

-With the `llm` environment active, use `pip` to install `bigdl-llm` for GPU:

+With the `llm` environment active, use `pip` to install `ipex-llm` for GPU:

Choose either US or CN website for `extra-index-url`:

```eval_rst

@@ -77,23 +77,23 @@ Choose either US or CN website for `extra-index-url`:

.. code-block:: cmd

- pip install --pre --upgrade bigdl-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

+ pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

.. tab:: CN

.. code-block:: cmd

- pip install --pre --upgrade bigdl-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

+ pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

```

```eval_rst

.. note::

- If you encounter network issues while installing IPEX, refer to `this guide


- bigdl-llm is a library for running LLM (large language model) on Intel XPU (from Laptop to GPU to Cloud) using INT4 with very low latency [1] (for any PyTorch model).

+ ipex-llm is a library for running LLM (large language model) on Intel XPU (from Laptop to GPU to Cloud) using INT4 with very low latency [1] (for any PyTorch model).

